Introduction and requests the library is a simple HTTP request handling third-party libraries

get () is the most common way to obtain web page, its basic use is as follows

After using HTML page requests to obtain library and convert it to a string, it is necessary to further parse the HTML page format, where we used is beautifulsoup4 library for parsing and processing XML and HTML

The following code is crawling Baidu information and simple information output interface Baidu

import requests from bs4 import BeautifulSoup r=requests.get('http://www.baidu.com') r.encoding=None result=r.text bs=BeautifulSoup(result,'html.parser') print(bs.title) print(bs.title.text)

Import Requests from BS4 Import the BeautifulSoup # used to solve the distortion phenomena, the write code information is preferably crawling belt (or garbled output UnicodeEncodeError: 'gbk'codec CAN Not encode Character) Import IO Import SYS sys.stdout = IO .TextIOWrapper (sys.stdout.buffer, encoding = ' GB18030 ' )

# is used to prevent anti-crawling, you can look at headers = { " the User-- Agent " : " the Mozilla / 5.0 (the Windows; the U-; the Windows NT 5.1; en-US CN; rv: 1.9.1.6) " , " the Accept " : "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8", "Accept-Language" : "en-us", "Connection" : "keep-alive", "Accept-Charset" : "GB2312,utf-8;q=0.7,*;q=0.7" }

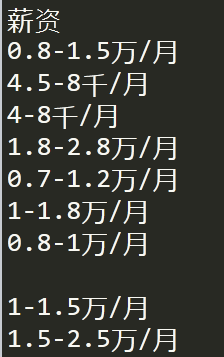

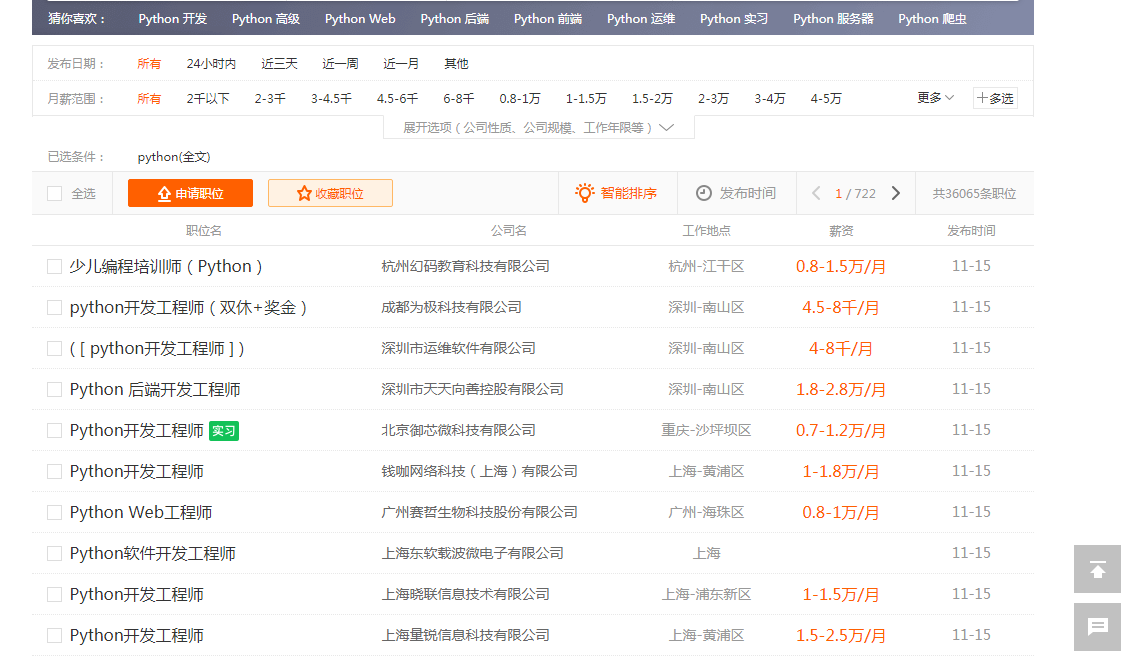

#获取51job网站的基本信息 r=requests.get('https://search.51job.com/list/000000,000000,0000,00,9,99,python,2,1.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99°reefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare=') r.encoding=r.apparent_encoding result=r.text bs=BeautifulSoup(result,'html.parser') print(bs.prettify()) u1=bs.find_all('u1',attrs={'class':'item_con_list'}) # This part of the code is the goal of our crawling, python on 51job website about professional salary Print (len (U1)) li = bs.find_all ( ' span ' , attrs = { ' class ' : ' T4 ' } ) for L in li: Print (l.text)

The code above is crawling salaries and related occupations python on 51job website