- Install Docker, pull an image, and create the containers

yum -y install docker

systemctl start docker

docker pull centos:6

docker run --privileged -dti -p 65000:6500 --name gptest1 centos:6 bash

docker run --privileged -dti --name gptest2 centos:6 bash

docker run --privileged -dti --name gptest3 centos:6 bash

docker run --privileged -dti --name gptest4 centos:6 bash

The --privileged flag gives the containers root-level privileges.
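The four nearly identical docker run commands can also be generated in a loop. The following is a minimal sketch, not part of the original procedure: the RUN and IMAGE variables and the create_containers function are names made up for this illustration, and by default the script only prints the commands instead of executing them.

```shell
# Dry run by default: print the docker commands instead of executing them.
# Set RUN=1 to execute them (assumes Docker is installed and running).
IMAGE="centos:6"

create_containers() {
    i=1
    while [ "$i" -le 4 ]; do
        name="gptest$i"
        # Only the first container (the future master) publishes port 6500.
        if [ "$i" -eq 1 ]; then
            publish="-p 65000:6500 "
        else
            publish=""
        fi
        cmd="docker run --privileged -dti ${publish}--name $name $IMAGE bash"
        if [ "${RUN:-0}" = "1" ]; then
            $cmd
        else
            echo "$cmd"
        fi
        i=$((i + 1))
    done
}

create_containers
```

Printing first makes it easy to compare the generated commands with the ones listed above before anything is created.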

2. Install and start SSH and the other dependencies, and configure time synchronization

SSH is not started by default inside the Docker containers. To make it easy for the nodes to connect to each other, start SSH inside every container and create the associated authentication keys.

yum install -y net-tools which openssh-clients openssh-server less zip unzip iproute.x86_64 vim ntp ed

yum -y update

ssh-keygen -t rsa -f /etc/ssh/ssh_host_rsa_key

ssh-keygen -t dsa -f /etc/ssh/ssh_host_dsa_key

/usr/sbin/sshd

ntpdate ntp1.aliyun.com

- Configure the hostname mapping in /etc/hosts on every node

172.17.0.2 dw-greenplum-1 mdw

172.17.0.3 dw-greenplum-2 sdw1

172.17.0.4 dw-greenplum-3 sdw2

172.17.0.5 dw-greenplum-4 sdw3
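Because every node must resolve every other node, a mistyped or repeated address in the mapping is easy to miss. The following is a small sketch for catching one class of mistake, an IP address mapped more than once. The find_duplicate_ips function and the sample file are made up for this illustration; on a real node you would pass /etc/hosts.

```shell
# Print every IP address that appears on more than one non-comment line.
find_duplicate_ips() {
    awk 'NF && $1 !~ /^#/ { count[$1]++ }
         END { for (ip in count) if (count[ip] > 1) print ip }' "$1"
}

# Hypothetical sample with one address used twice.
sample=$(mktemp)
cat > "$sample" <<'EOF'
172.17.0.2 mdw
172.17.0.3 sdw1
172.17.0.4 sdw2
172.17.0.4 sdw3
EOF

find_duplicate_ips "$sample"
rm -f "$sample"
```

For the sample above the function prints 172.17.0.4. An empty result means no address is repeated.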

Also modify the /etc/sysconfig/network file on every node so that the hostname stays consistent.

Modify the name in /etc/hostname:

[root@mdw /]# cat /etc/hostname

mdw

[root@mdw /]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=mdw

- On each node, modify the limits on the number of open files

cat /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 131072

* hard nproc 131072

[root@mdw /]# cat /etc/security/limits.d/90-nproc.conf

* soft nproc 131072

root soft nproc unlimited
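Limits set in limits.conf only apply to new login sessions, so it can help to confirm what a shell actually got. The following is a minimal sketch under that assumption; check_limit is a hypothetical helper written for this illustration, and only the open-files limit is read live here.

```shell
# check_limit LABEL CURRENT REQUIRED
# Prints whether CURRENT satisfies REQUIRED; returns 1 when it is too low.
check_limit() {
    if [ "$2" = "unlimited" ]; then
        echo "$1: ok (unlimited)"
    elif [ "$2" -ge "$3" ]; then
        echo "$1: ok ($2)"
    else
        echo "$1: too low ($2, need $3)"
        return 1
    fi
}

# Open-files limit of the current shell; "|| true" keeps the script going
# even when the limit is below the target.
check_limit nofile "$(ulimit -n)" 65536 || true
```

In bash the process limit can be checked the same way with `check_limit nproc "$(ulimit -u)" 131072`.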

- Modify the kernel parameters

sysctl -w kernel.sem="500 50 64000 150"

cat /proc/sys/kernel/sem
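The four numbers in kernel.sem are positional, in the order SEMMSL, SEMMNS, SEMOPM, SEMMNI, which is easy to get wrong. The following sketch labels each field. The describe_sem function is a name made up for this illustration, and the input is the value string used in the sysctl command above.

```shell
# describe_sem "SEMMSL SEMMNS SEMOPM SEMMNI"
describe_sem() {
    # Unquoted on purpose: split the string on spaces or tabs into $1..$4.
    set -- $1
    echo "SEMMSL=$1"   # maximum semaphores per semaphore set
    echo "SEMMNS=$2"   # maximum semaphores system-wide
    echo "SEMOPM=$3"   # maximum operations per semop call
    echo "SEMMNI=$4"   # maximum number of semaphore sets
}

describe_sem "500 50 64000 150"
```

On a running node the live values can be labeled with `describe_sem "$(cat /proc/sys/kernel/sem)"`. With the string above, the second field (SEMMNS) comes out as 50, which is worth keeping in mind when reading the semaphore error later in this post.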

- Shut down the firewall and SELinux on all nodes

service iptables stop

chkconfig iptables off

setenforce 0

- Download the Greenplum installation package

You need to register an account to download it. Alternatively, copy an existing binary package from a machine that already has one.

https://network.pivotal.io/products/pivotal-gpdb

7. Create the greenplum user and group on all nodes

groupadd -g 530 gpadmin

useradd -g 530 -u 530 -m -d /home/gpadmin -s /bin/bash gpadmin

chown -R gpadmin:gpadmin /home/gpadmin

echo 123456 | passwd gpadmin --stdin

- Install Greenplum on the master

su - gpadmin

unzip greenplum-db-4.3.14.1-rhel5-x86_64.zip

sh ./greenplum-db-4.3.14.1-rhel5-x86_64.bin

During installation, change the default installation directory by entering /home/gpadmin/greenplum-db.

To make cluster installation easier, Greenplum provides batch-operation commands. You create configuration files that list the hosts and then run the batch commands against them.

Create the files:

[gpadmin@mdw ~]$ cat conf/hostlist

mdw

sdw1

sdw2

sdw3

[gpadmin@mdw ~]$ cat conf/seg_hosts

sdw1

sdw2

- Set the environment variables and set up passwordless SSH between all nodes

source greenplum-db/greenplum_path.sh

[gpadmin@mdw ~]$ gpssh-exkeys -f /home/gpadmin/conf/hostlist // exchange the keys

[STEP 1 of 5] create local ID and authorize on local host

... /home/gpadmin/.ssh/id_rsa file exists ... key generation skipped

[STEP 2 of 5] keyscan all hosts and update known_hosts file

[STEP 3 of 5] authorize current user on remote hosts

... send to sdw1

... send to sdw2

[STEP 4 of 5] determine common authentication file content

[STEP 5 of 5] copy authentication files to all remote hosts

... finished key exchange with sdw1

... finished key exchange with sdw2

... finished key exchange with sdw3

[INFO] completed successfully

Be sure to run gpssh-exkeys as the gpadmin user, because the keys for password-free SSH login are generated in /home/gpadmin/.ssh.

[gpadmin@mdw ~]$ gpssh -f /home/gpadmin/conf/hostlist

Note: command history unsupported on this machine ...

=> pwd

[mdw] /home/gpadmin

[sdw2] /home/gpadmin

[sdw1] /home/gpadmin

[sdw3] /home/gpadmin
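Besides the interactive gpssh session, password-free login can be checked host by host with plain ssh. The following is a minimal sketch, assuming a host file with one hostname per line like conf/hostlist. The check_ssh function and the SSH_CMD variable are names made up for this illustration; SSH_CMD exists only so the loop can be tried without a network.

```shell
# Command used to reach a host. BatchMode=yes makes ssh fail instead of
# prompting for a password, which is exactly what we want to detect.
SSH_CMD=${SSH_CMD:-"ssh -n -o BatchMode=yes -o ConnectTimeout=5"}

# check_ssh HOSTFILE
# Prints one line per host; returns 1 if any host could not be reached.
check_ssh() {
    failed=0
    while read -r host; do
        [ -z "$host" ] && continue
        if $SSH_CMD "$host" true </dev/null 2>/dev/null; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done < "$1"
    return "$failed"
}
```

As gpadmin on the master it would be called as `check_ssh /home/gpadmin/conf/hostlist`. Any host reported as FAILED would still ask for a password or could not be reached.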

- Distribute the installation package to every node

tar -cf greenplum.tar greenplum-db/

Copy the file to each machine:

gpscp -f /home/gpadmin/conf/hostlist greenplum.tar =:/home/gpadmin/

Extract the archive on all nodes in batch:

tar -xf greenplum.tar

This completes the installation on all nodes.

- Create the data directories for initialization

[gpadmin@mdw conf]$ gpssh -f hostlist

=> mkdir gpdata

[mdw]

[sdw2]

[sdw1]

[sdw3]

=> cd gpdata

[mdw]

[sdw2]

[sdw1]

[sdw3]

=> mkdir gpdatap1 gpdatap2 gpdatam1 gpdatam2 gpmaster

[mdw]

[sdw2]

[sdw1]

[sdw3]

=> exit
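The same directory layout can be created non-interactively, which also makes the step safe to repeat. The following is a small sketch; make_gpdata_dirs is a hypothetical helper written for this illustration, and the demonstration runs in a throwaway directory rather than in a real home directory.

```shell
# make_gpdata_dirs BASE
# Creates the primary, mirror, and master directories under BASE.
# mkdir -p makes the function safe to run more than once.
make_gpdata_dirs() {
    base=$1
    for d in gpdatap1 gpdatap2 gpdatam1 gpdatam2 gpmaster; do
        mkdir -p "$base/$d"
    done
}

# Demonstration in a temporary directory; on a node you would pass "$HOME/gpdata".
demo=$(mktemp -d)
make_gpdata_dirs "$demo/gpdata"
ls "$demo/gpdata"
rm -rf "$demo"
```

To apply it on every host at once, the equivalent mkdir -p command could be passed to gpssh with the -e option, in the same way as the gpssh example in the list of common commands at the end of this post.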

Configure the environment variables on each node:

cat .bash_profile

source /home/gpadmin/greenplum-db/greenplum_path.sh

export MASTER_DATA_DIRECTORY=/home/gpadmin/gpdata/gpmaster/gpseg-1

export PGPORT=6500

export PGDATABASE=postgres

source .bash_profile

Configure the initialization file

[gpadmin@mdw conf]$ cat gpinitsystem_config

ARRAY_NAME="Greenplum"

MACHINE_LIST_FILE=/home/gpadmin/conf/seg_hosts

Segment name prefix

SEG_PREFIX=gpseg

Starting port number of the primary segments

PORT_BASE=33000

Data directories of the primary segments

declare -a DATA_DIRECTORY=(/home/gpadmin/gpdata/gpdatap1 /home/gpadmin/gpdata/gpdatap2)

Hostname of the machine where the master runs

MASTER_HOSTNAME=mdw

Data directory of the master

MASTER_DIRECTORY=/home/gpadmin/gpdata/gpmaster

Master port

MASTER_PORT=6500

Trusted shell used to connect to the other hosts

TRUSTED_SHELL=ssh

CHECK_POINT_SEGMENTS=256

Starting port number of the mirror segments

MIRROR_PORT_BASE=43000

Starting port number for primary segment replication

REPLICATION_PORT_BASE=34000

Starting port number for mirror segment replication

MIRROR_REPLICATION_PORT_BASE=44000

Data directories of the mirror segments

declare -a MIRROR_DATA_DIRECTORY=(/home/gpadmin/gpdata/gpdatam1 /home/gpadmin/gpdata/gpdatam2)
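Each of the four base ports is a starting point: the first segment on a host gets the base port and each additional segment on that host gets the next one. The following sketch prints the resulting port plan for one host using the values from the file above. SEGMENTS_PER_HOST and port_plan are names made up for this illustration, and the value 2 is an assumption taken from the two entries in DATA_DIRECTORY.

```shell
# Values copied from gpinitsystem_config above.
PORT_BASE=33000
MIRROR_PORT_BASE=43000
REPLICATION_PORT_BASE=34000
MIRROR_REPLICATION_PORT_BASE=44000

# Assumption: two primaries (and two mirrors) per host, one per data directory.
SEGMENTS_PER_HOST=2

# Print the ports each primary and mirror on a single host would use.
port_plan() {
    i=0
    while [ "$i" -lt "$SEGMENTS_PER_HOST" ]; do
        echo "primary $i: port $((PORT_BASE + i)), replication $((REPLICATION_PORT_BASE + i))"
        echo "mirror $i: port $((MIRROR_PORT_BASE + i)), replication $((MIRROR_REPLICATION_PORT_BASE + i))"
        i=$((i + 1))
    done
}

port_plan
```

Laying the ranges out like this makes it easy to see that they do not overlap with each other or with the master port 6500.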

Initialize the database

gpinitsystem -c gpinitsystem_config -h hostlist -s sdw3 -S

// If initialization finishes without errors, the cluster is ready. If there is an error, modify the configuration according to the log messages and initialize again.

[gpadmin@mdw conf]$ psql

psql (8.2.15)

Type "help" for help.

postgres=# select version();

version

PostgreSQL 8.2.15 (Greenplum Database 4.3.10.0 build commit: f413ff3b006655f14b6b9aa217495ec94da5c96c) on x86_64-unknown-linux-gnu, compiled by GCC gcc (GCC) 4.4.2 compiled on Oct 21 2016 19:36:26

(1 row)

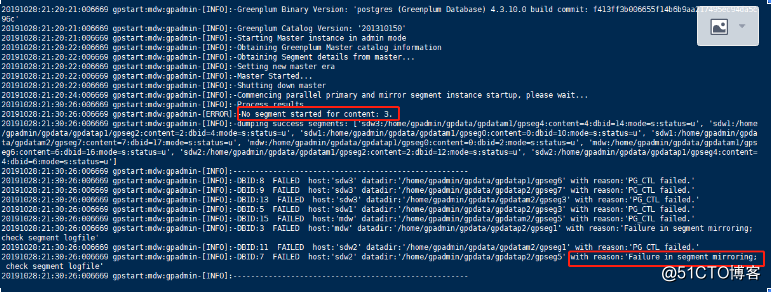

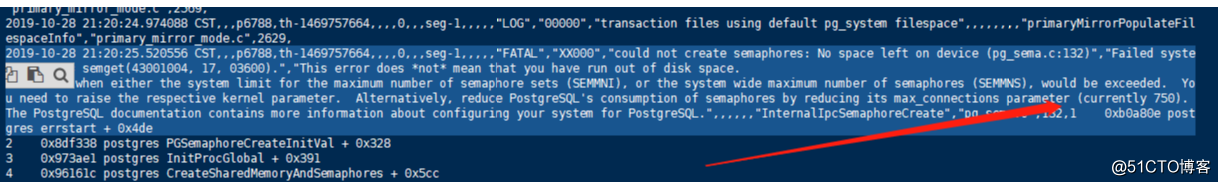

Error logs and screenshots

Some nodes came up and some did not, because the host had insufficient resources.

2019-10-28 21:20:25.520556 CST,,,p6788,th-1469757664,,,,0,,,seg-1,,,,,"FATAL","XX000","could not create semaphores: No space left on device (pg_sema.c:132)","Failed system call was semget(43001004, 17, 03600).","This error does not mean that you have run out of disk space.

It occurs when either the system limit for the maximum number of semaphore sets (SEMMNI), or the system wide maximum number of semaphores (SEMMNS), would be exceeded. You need to raise the respective kernel parameter. Alternatively, reduce PostgreSQL's consumption of semaphores by reducing its max_connections parameter (currently 750).

The PostgreSQL documentation contains more information about configuring your system for PostgreSQL.",,,,,,"InternalIpcSemaphoreCreate","pg_sema.c",132,1 0xb0a80e postgres errstart + 0x4de

Adjust the configuration file

vim /home/gpadmin/gpdata/gpdatam1/gpseg6/postgresql.conf

// this must be changed for all four segments on each machine

max_connections = 200 // original value 750, adjusted to 200

shared_buffers = 500MB // original value 275, adjusted to 500

gpstart -a // start the cluster again

gpstop -u // reload the configuration files
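To see why lowering max_connections helps with the semaphore error, it is useful to estimate how many semaphores one postgres instance asks for. The following sketch uses the rule of thumb from the PostgreSQL documentation of that era: one semaphore set per 16 connections, with 17 semaphores in each set, which matches the 17 in the semget call in the log above. The sem_needs function is a name made up for this illustration, and Greenplum segments may allocate somewhat more than this estimate.

```shell
# sem_needs MAX_CONNECTIONS
# Prints "<semaphore sets> <semaphores>" for one postgres instance.
sem_needs() {
    sets=$(( ($1 + 15) / 16 ))    # ceil(max_connections / 16)
    echo "$sets $((sets * 17))"   # 17 semaphores per set
}

sem_needs 750   # original setting: 47 sets, 799 semaphores
sem_needs 200   # adjusted setting: 13 sets, 221 semaphores
```

Every primary and mirror segment on a host is its own postgres instance, so these numbers are multiplied by the number of instances running on that host and then compared with SEMMNI and SEMMNS in kernel.sem.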

# Common operation commands

gpstate: view the database cluster status, example: gpstate -a

gpstart: start the database cluster, example: gpstart -a

gpstop: stop the database cluster, example: gpstop -a -M fast

gpssh: remotely execute shell commands, example: gpssh -f hosts -e 'date'

Reference deployment blog posts

https://www.cnblogs.com/dap570/archive/2015/03/21/greenplum_4node_install.html

https://my.oschina.net/u/876354/blog/1606419