SOFAJRaft is a production-grade, high-performance Java implementation of the Raft consensus algorithm, with support for MULTI-RAFT-GROUP. Typical use cases include leader election, distributed lock services, highly reliable metadata management and distributed storage systems.

If you are not yet familiar with the Raft algorithm, the article "Why Raft is a distributed consensus algorithm that is easier to understand" explains it in great detail.

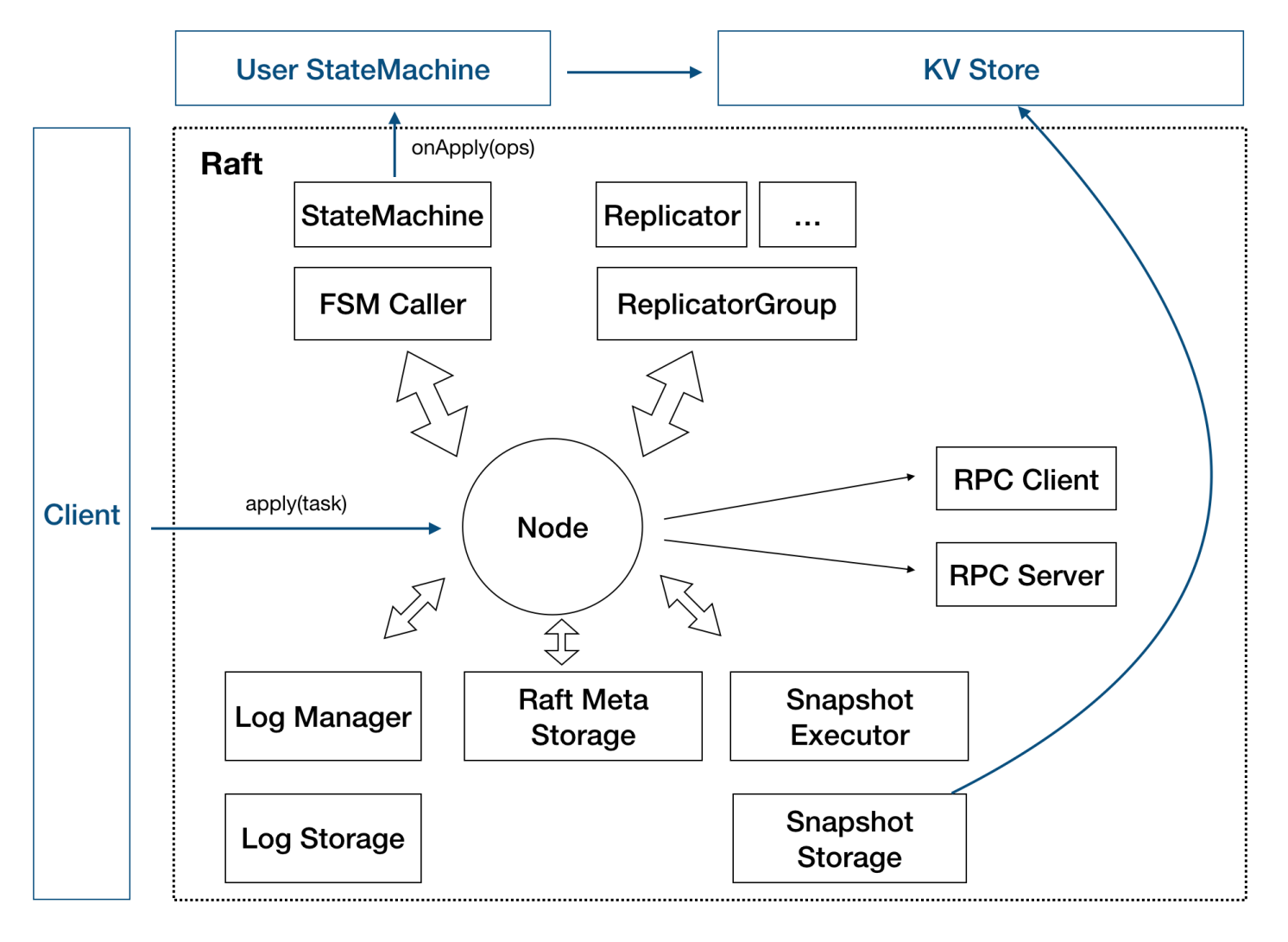

This diagram shows the design of SOFAJRaft. It represents a single SOFAJRaft server Node.

SOFAJRaft Nodes form a distributed cluster, so each Node must send information to the other Nodes. The Replicator is responsible for replicating this information to one other Node, and the Replicators together form a ReplicatorGroup.

A Snapshot records the current value of the data at a point in time. It is persisted to storage and provides a cold-backup function.

Snapshots generated by the Leader serve several purposes:

- When a new Node joins the cluster, it does not have to replay the Leader's entire log to become consistent with the Leader. Instead, it installs a snapshot from the Leader and skips replaying a large number of earlier log entries;

- Sending a snapshot instead of replicating the full log reduces the amount of data transferred over the network;

- Snapshots can replace older log entries, which saves storage space.
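The first and third points can be made concrete with a toy model in plain Java. This is not the SOFAJRaft API, and all class and method names below are hypothetical. The leader folds its log into a snapshot and discards the covered entries. A newly joined node then catches up by installing the snapshot and replaying only the entries written after it.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical toy model, not SOFAJRaft code: a counter log whose entries are deltas.
public class SnapshotCatchUpSketch {

    // A snapshot stores the state-machine value and the last log index it covers.
    static final class Snapshot {
        final long value;
        final long lastIncludedIndex;

        Snapshot(long value, long lastIncludedIndex) {
            this.value = value;
            this.lastIncludedIndex = lastIncludedIndex;
        }
    }

    // The leader's log: entries.get(0) has index firstIndex (log indexes start at 1).
    static final class ToyLog {
        final List<Long> entries = new ArrayList<>();
        long firstIndex = 1;
        Snapshot snapshot = new Snapshot(0, 0); // empty snapshot before any compaction

        void append(long delta) {
            entries.add(delta);
        }

        long lastIndex() {
            return firstIndex + entries.size() - 1;
        }

        // Fold every retained entry into a new snapshot, then drop those entries.
        void snapshotAndCompact() {
            long last = lastIndex();
            long value = snapshot.value;
            for (long delta : entries) {
                value += delta;
            }
            snapshot = new Snapshot(value, last);
            entries.clear();
            firstIndex = last + 1;
        }
    }

    // A new node installs the snapshot, then replays only the entries after it.
    static long catchUp(ToyLog leaderLog) {
        long value = leaderLog.snapshot.value;
        for (long delta : leaderLog.entries) {
            value += delta;
        }
        return value;
    }

    public static void main(String[] args) {
        ToyLog log = new ToyLog();
        for (long d = 1; d <= 5; d++) {
            log.append(d);                  // indexes 1..5
        }
        log.snapshotAndCompact();           // snapshot covers indexes 1..5, value 15
        log.append(6);                      // index 6
        log.append(7);                      // index 7
        System.out.println("retained entries: " + log.entries.size()); // 2
        System.out.println("caught-up value: " + catchUp(log));        // 28
    }
}
```

Only the two entries after the snapshot have to be shipped and replayed. In SOFAJRaft, the framework decides when snapshots are taken and installed. It calls the user's onSnapshotSave and onSnapshotLoad to persist and restore the actual state.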

The StateMachine is the part the user implements. The user puts the concrete business logic there, and SOFAJRaft makes the nodes of the distributed system reach consensus on it.

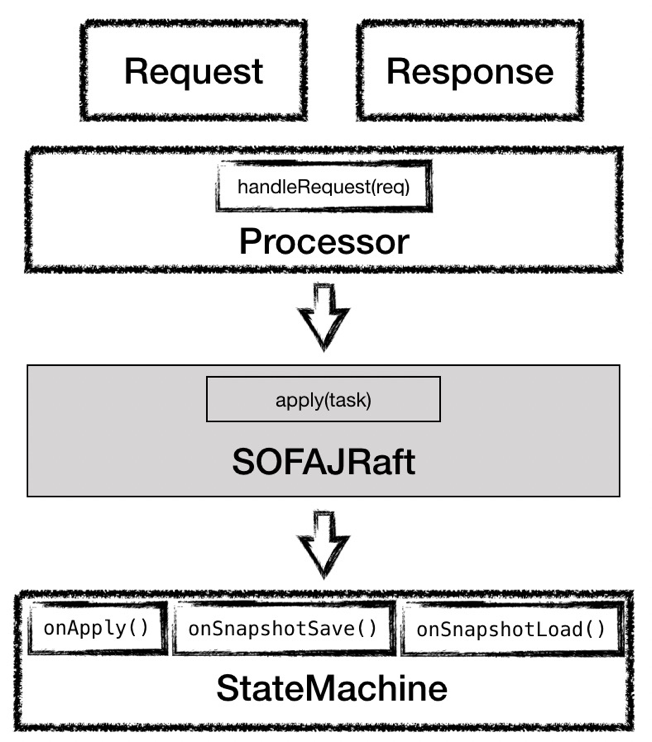

The StateMachine exposes several interfaces for us to implement. The most important one is onApply, which converts the commands carried by the Client's requests into a concrete counter value. The onSnapshotSave and onSnapshotLoad interfaces are responsible for generating and loading snapshots.

The Client also has to be implemented by the user. The user defines the different message types and their processing logic on the client side.

Implementing a distributed Counter

Here is the requirement: a Counter where the Client can specify the increment (step) of each count operation and can also query the current value at any time.

Translated into concrete functionality, it has three main parts:

- A Counter server with a counting function, computed as Cn = Cn-1 + delta;

- A write service: writing a delta triggers a counter operation;

- A read service: reading returns the current value Cn.
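The formula Cn = Cn-1 + delta is what makes the counter a good fit for Raft. If every replica applies the same committed deltas in the same order, every replica computes the same Cn. Below is a minimal plain-Java sketch of that property. The ToyCounter and replay names are hypothetical and do not appear in the example.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical sketch: a counter replica that applies deltas with Cn = Cn-1 + delta.
public class CounterReplicaSketch {

    static final class ToyCounter {
        private final AtomicLong value = new AtomicLong(0);

        // Write service: apply one delta and return the new value Cn.
        long incrementAndGet(long delta) {
            return value.addAndGet(delta);
        }

        // Read service: return the current value Cn.
        long get() {
            return value.get();
        }
    }

    // Apply the same committed sequence of deltas, in order, on one replica.
    static long replay(ToyCounter replica, List<Long> committedDeltas) {
        long last = replica.get();
        for (long delta : committedDeltas) {
            last = replica.incrementAndGet(delta);
        }
        return last;
    }

    public static void main(String[] args) {
        List<Long> committed = List.of(3L, -1L, 10L);
        ToyCounter leader = new ToyCounter();
        ToyCounter follower = new ToyCounter();
        // C1 = 0 + 3 = 3, C2 = 3 - 1 = 2, C3 = 2 + 10 = 12
        System.out.println(replay(leader, committed));   // 12
        System.out.println(replay(follower, committed)); // 12
    }
}
```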

Full code: Counter

In this demo we start three servers as one group, passing each of them the following arguments:

```
/tmp/server1 counter 127.0.0.1:8081 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083
/tmp/server2 counter 127.0.0.1:8082 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083
/tmp/server3 counter 127.0.0.1:8083 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083
```

/tmp/server1, /tmp/server2 and /tmp/server3 are the three directories used to store data, and the raft group name is counter. The third argument is the address of the node itself. The last argument lists the ip:port of every node in the group: 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083.

Then start the client, passing the following arguments:

```
counter 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083
```

This binds the client to the raft group named counter, whose cluster is 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083.

Server

CounterServer

```java
public CounterServer(final String dataPath, final String groupId, final PeerId serverId,
                     final NodeOptions nodeOptions) throws IOException {
    // Initialize the data directory
    FileUtils.forceMkdir(new File(dataPath));
    // Here the Raft RPC and the business RPC share the same RPC server; they can also be separated
    final RpcServer rpcServer = new RpcServer(serverId.getPort());
    RaftRpcServerFactory.addRaftRequestProcessors(rpcServer);
    // Register the business processors
    rpcServer.registerUserProcessor(new GetValueRequestProcessor(this));
    rpcServer.registerUserProcessor(new IncrementAndGetRequestProcessor(this));
    // Initialize the state machine
    this.fsm = new CounterStateMachine();
    // Pass the state machine to the startup options
    nodeOptions.setFsm(this.fsm);
    // Storage paths
    // Log: required
    nodeOptions.setLogUri(dataPath + File.separator + "log");
    // Raft metadata: required
    nodeOptions.setRaftMetaUri(dataPath + File.separator + "raft_meta");
    // Snapshot: optional, but generally recommended
    nodeOptions.setSnapshotUri(dataPath + File.separator + "snapshot");
    // Initialize the raft group service framework
    this.raftGroupService = new RaftGroupService(groupId, serverId, nodeOptions, rpcServer);
    // Start the node
    this.node = this.raftGroupService.start();
}
```

When CounterServer is instantiated, it registers the request processors GetValueRequestProcessor and IncrementAndGetRequestProcessor.

GetValueRequestProcessor provides the read service, which returns the current value Cn;

IncrementAndGetRequestProcessor provides the write service: writing a delta triggers a counter operation.

GetValueRequestProcessor

```java
@Override
public Object handleRequest(final BizContext bizCtx, final GetValueRequest request) throws Exception {
    // Only the leader serves reads; any other node returns a redirect
    if (!this.counterServer.getFsm().isLeader()) {
        return this.counterServer.redirect();
    }
    final ValueResponse response = new ValueResponse();
    response.setSuccess(true);
    response.setValue(this.counterServer.getFsm().getValue());
    return response;
}
```

GetValueRequestProcessor is very simple. If this node is not the leader, it returns a redirect. Otherwise it reads the value directly from the state machine and returns it.
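Both processors begin with the same leader check. A node that is not the leader does not serve the request. Instead it answers with a redirect, which presumably carries the leader's address so the client can retry there. The plain-Java sketch below models only that decision. ToyResponse, its redirect field and ToyNode are hypothetical stand-ins for ValueResponse and counterServer.redirect(), whose exact contents are not shown in this article.

```java
// Hypothetical sketch of "serve if leader, otherwise redirect"; not the SOFAJRaft types.
public class LeaderRedirectSketch {

    static final class ToyResponse {
        boolean success;
        long value;
        String redirect; // leader address, set when this node cannot serve the request

        @Override
        public String toString() {
            return success ? "value=" + value : "redirect to " + redirect;
        }
    }

    static final class ToyNode {
        final boolean isLeader;
        final String leaderAddress;
        final long value;

        ToyNode(boolean isLeader, String leaderAddress, long value) {
            this.isLeader = isLeader;
            this.leaderAddress = leaderAddress;
            this.value = value;
        }

        // Same shape as GetValueRequestProcessor.handleRequest: check leadership first.
        ToyResponse handleGetValue() {
            ToyResponse response = new ToyResponse();
            if (!isLeader) {
                response.success = false;
                response.redirect = leaderAddress;
                return response;
            }
            response.success = true;
            response.value = value;
            return response;
        }
    }

    public static void main(String[] args) {
        ToyNode leader = new ToyNode(true, "127.0.0.1:8081", 42);
        ToyNode follower = new ToyNode(false, "127.0.0.1:8081", 42);
        System.out.println(leader.handleGetValue());   // value=42
        System.out.println(follower.handleGetValue()); // redirect to 127.0.0.1:8081
    }
}
```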

IncrementAndGetRequestProcessor

```java
public void handleRequest(final BizContext bizCtx, final AsyncContext asyncCtx,
                          final IncrementAndGetRequest request) {
    // Check whether the current node is the leader
    if (!this.counterServer.getFsm().isLeader()) {
        asyncCtx.sendResponse(this.counterServer.redirect());
        return;
    }
    // Response object; the state machine fills in the value later
    final ValueResponse response = new ValueResponse();
    // Wrap the request data together with the callback that sends the response
    final IncrementAndAddClosure closure = new IncrementAndAddClosure(counterServer, request, response,
        status -> {
            // If the operation failed, record the error in the response
            if (!status.isOk()) {
                response.setErrorMsg(status.getErrorMsg());
                response.setSuccess(false);
            }
            // Send the response to the client
            asyncCtx.sendResponse(response);
        });
    try {
        final Task task = new Task();
        task.setDone(closure);
        // Serialize the request
        task.setData(ByteBuffer
            .wrap(SerializerManager.getSerializer(SerializerManager.Hessian2).serialize(request)));
        // Apply the task to the raft group through the node
        counterServer.getNode().apply(task);
    } catch (final CodecException e) {
        LOG.error("Fail to encode IncrementAndGetRequest", e);
        // Serialization failed, so respond immediately
        response.setSuccess(false);
        response.setErrorMsg(e.getMessage());
        asyncCtx.sendResponse(response);
    }
}
```

The processor wraps the request and the response in an IncrementAndAddClosure so that the result can be written back to the client asynchronously through a callback. It then creates a Task, serializes the request into the task's data, and calls the node's apply method.
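The key point of this processor is the timing of the response. The response is not sent when handleRequest returns. It is sent later, when the closure attached to the Task runs after the log entry has been committed and applied. The plain-Java sketch below models that flow with a hypothetical ToyRaftNode. Its apply() only queues the task, and its commitAll() applies queued tasks in order and runs their callbacks. It is a simplification for illustration, not how SOFAJRaft actually schedules closures.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;
import java.util.function.Consumer;

// Hypothetical model of Task + Closure: the callback fires only after the entry is applied.
public class ClosureCallbackSketch {

    static final class ToyTask {
        final long delta;
        final Consumer<Long> done; // plays the role of IncrementAndAddClosure

        ToyTask(long delta, Consumer<Long> done) {
            this.delta = delta;
            this.done = done;
        }
    }

    static final class ToyRaftNode {
        private final Queue<ToyTask> pending = new ArrayDeque<>();
        private long value;

        // Like node.apply(task): returns immediately, nothing is applied yet.
        void apply(ToyTask task) {
            pending.add(task);
        }

        // Pretend the entries reached a majority and were committed; apply them in order.
        void commitAll() {
            ToyTask task;
            while ((task = pending.poll()) != null) {
                value += task.delta;
                task.done.accept(value); // like closure.run(Status.OK())
            }
        }
    }

    public static void main(String[] args) {
        ToyRaftNode node = new ToyRaftNode();
        List<String> sentToClient = new ArrayList<>();
        node.apply(new ToyTask(5, v -> sentToClient.add("value=" + v)));
        node.apply(new ToyTask(2, v -> sentToClient.add("value=" + v)));
        System.out.println(sentToClient); // [] (nothing is sent before commit)
        node.commitAll();
        System.out.println(sentToClient); // [value=5, value=7]
    }
}
```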

Back in CounterServer, a CounterStateMachine is created and set on the NodeOptions, together with the storage paths for the log, the raft metadata and the snapshots.

CounterStateMachine extends the abstract class StateMachineAdapter and overrides three methods:

- onApply: handles the concrete business logic;

- onSnapshotSave: saves a snapshot;

- onSnapshotLoad: loads a snapshot.

Saving and loading snapshots is delegated to a helper class, CounterSnapshotFile.
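CounterSnapshotFile itself is not shown in this article. The class below is a rough sketch of what such a helper has to do, under a hypothetical name, ToySnapshotFile. It writes the counter value as text to a file and reads it back. The file name "data" and the text encoding are assumptions, and the real helper may differ. In the example, onSnapshotSave and onSnapshotLoad would use a helper like this on a file inside the snapshot directory that the framework provides.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper modeled loosely on the role of CounterSnapshotFile.
public class ToySnapshotFile {

    private final Path path;

    public ToySnapshotFile(Path path) {
        this.path = path;
    }

    // Save the counter value; returns false instead of throwing so the caller can report failure.
    public boolean save(long value) {
        try {
            Files.write(path, Long.toString(value).getBytes(StandardCharsets.UTF_8));
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    // Load the counter value previously written by save().
    public long load() throws IOException {
        String text = new String(Files.readAllBytes(path), StandardCharsets.UTF_8).trim();
        return Long.parseLong(text);
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("snapshot");
        ToySnapshotFile file = new ToySnapshotFile(dir.resolve("data"));
        System.out.println(file.save(1234)); // true
        System.out.println(file.load());     // 1234
    }
}
```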

CounterStateMachine

```java
public class CounterStateMachine extends StateMachineAdapter {

    ...

    private final AtomicLong value = new AtomicLong(0);

    @Override
    public void onApply(final Iterator iter) {
        // Fetch the data packaged by the processor, one committed task at a time
        while (iter.hasNext()) {
            long delta = 0;
            // Holds the request data and the response callback (only on the proposing node)
            IncrementAndAddClosure closure = null;
            if (iter.done() != null) {
                // This task is applied by this node, get value from closure to avoid additional parsing.
                closure = (IncrementAndAddClosure) iter.done();
                delta = closure.getRequest().getDelta();
            } else {
                // Have to parse IncrementAndGetRequest from this user log.
                final ByteBuffer data = iter.getData();
                try {
                    final IncrementAndGetRequest request = SerializerManager.getSerializer(SerializerManager.Hessian2)
                        .deserialize(data.array(), IncrementAndGetRequest.class.getName());
                    delta = request.getDelta();
                } catch (final CodecException e) {
                    LOG.error("Fail to decode IncrementAndGetRequest", e);
                }
            }
            // Current value
            final long prev = this.value.get();
            // Add delta to the current value
            final long updated = this.value.addAndGet(delta);
            // Fill in the response and call run() to send it back through the callback
            if (closure != null) {
                closure.getResponse().setValue(updated);
                closure.getResponse().setSuccess(true);
                closure.run(Status.OK());
            }
            LOG.info("Added value={} by delta={} at logIndex={}", prev, delta, iter.getIndex());
            iter.next();
        }
    }
}
```

onApply first obtains the data packaged by the processor. If the task was proposed by this node, iter.done() returns the closure passed in by the processor, and the delta is read directly from it. Otherwise the delta is deserialized from the log entry. After the business logic has run, the closure's run method is called, and its callback returns the result to the client.
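The two branches in onApply exist because iter.done() is non-null only on the node that proposed the task, normally the leader. Every other replica sees only the raw bytes stored in the log entry. The sketch below reproduces that branching with hypothetical types. ToyEntry stands in for what the Iterator exposes, and an 8-byte ByteBuffer encoding replaces Hessian2 serialization purely to keep the example dependency-free.

```java
import java.nio.ByteBuffer;
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.LongConsumer;

// Hypothetical model of the onApply loop; not the SOFAJRaft Iterator API.
public class OnApplySketch {

    // A committed log entry: serialized data plus an optional local callback.
    static final class ToyEntry {
        final ByteBuffer data;
        final Long localDelta;   // set only on the proposing node (like closure.getRequest())
        final LongConsumer done; // null on replicas that did not propose this entry

        ToyEntry(long delta, boolean proposedHere, LongConsumer done) {
            this.data = ByteBuffer.allocate(Long.BYTES).putLong(0, delta);
            this.localDelta = proposedHere ? delta : null;
            this.done = proposedHere ? done : null;
        }
    }

    static long onApply(AtomicLong value, List<ToyEntry> entries) {
        for (ToyEntry entry : entries) {
            long delta;
            if (entry.done != null) {
                // Proposed here: take the delta from the closure, no decoding needed.
                delta = entry.localDelta;
            } else {
                // Proposed elsewhere: decode the delta from the log entry bytes.
                delta = entry.data.getLong(0);
            }
            long updated = value.addAndGet(delta);
            if (entry.done != null) {
                entry.done.accept(updated); // send the result back to the waiting client
            }
        }
        return value.get();
    }

    public static void main(String[] args) {
        StringBuilder replies = new StringBuilder();
        List<ToyEntry> onLeader = List.of(
            new ToyEntry(4, true, v -> replies.append(v).append(' ')),
            new ToyEntry(6, true, v -> replies.append(v).append(' ')));
        List<ToyEntry> onFollower = List.of(
            new ToyEntry(4, false, null),
            new ToyEntry(6, false, null));
        System.out.println(onApply(new AtomicLong(), onLeader));   // 10
        System.out.println(onApply(new AtomicLong(), onFollower)); // 10
        System.out.println(replies.toString().trim());             // 4 10
    }
}
```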

Client

CounterClient

```java
public static void main(final String[] args) throws Exception {
    if (args.length != 2) {
        System.out.println("Usage : java com.alipay.sofa.jraft.example.counter.CounterClient {groupId} {conf}");
        System.out
            .println("Example: java com.alipay.sofa.jraft.example.counter.CounterClient counter 127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083");
        System.exit(1);
    }
    final String groupId = args[0];
    final String confStr = args[1];

    final Configuration conf = new Configuration();
    if (!conf.parse(confStr)) {
        throw new IllegalArgumentException("Fail to parse conf:" + confStr);
    }
    // Update the raft group configuration in the route table
    RouteTable.getInstance().updateConfiguration(groupId, conf);

    // Next, initialize the RPC client and refresh the leader in the route table
    final BoltCliClientService cliClientService = new BoltCliClientService();
    cliClientService.init(new CliOptions());
    if (!RouteTable.getInstance().refreshLeader(cliClientService, groupId, 1000).isOk()) {
        throw new IllegalStateException("Refresh leader failed");
    }

    // Once the leader is known, send the requests to it
    final PeerId leader = RouteTable.getInstance().selectLeader(groupId);
    System.out.println("Leader is " + leader);
    final int n = 1000;
    final CountDownLatch latch = new CountDownLatch(n);
    final long start = System.currentTimeMillis();
    for (int i = 0; i < n; i++) {
        incrementAndGet(cliClientService, leader, i, latch);
    }
    latch.await();
    System.out.println(n + " ops, cost : " + (System.currentTimeMillis() - start) + " ms.");
    System.exit(0);
}
```

The client first registers the groupId and the server addresses it is bound to in the route table. It then refreshes the route table to obtain the leader.
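In the example, Configuration.parse and RouteTable do the real work. The plain-Java sketch below illustrates just the input format. It uses a hypothetical parsePeers helper, not the JRaft implementation, which splits the conf string into host:port endpoints and rejects malformed entries, roughly the condition behind the "Fail to parse conf" error. It handles only plain ip:port entries.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical parser for "ip:port,ip:port,..." strings such as the client's {conf} argument.
public class ConfParseSketch {

    static final class Endpoint {
        final String host;
        final int port;

        Endpoint(String host, int port) {
            this.host = host;
            this.port = port;
        }

        @Override
        public String toString() {
            return host + ":" + port;
        }
    }

    // Returns the endpoints, or throws IllegalArgumentException for a malformed entry.
    static List<Endpoint> parsePeers(String conf) {
        List<Endpoint> peers = new ArrayList<>();
        for (String part : conf.split(",")) {
            String entry = part.trim();
            int colon = entry.lastIndexOf(':');
            if (colon <= 0 || colon == entry.length() - 1) {
                throw new IllegalArgumentException("Fail to parse conf:" + conf);
            }
            int port;
            try {
                port = Integer.parseInt(entry.substring(colon + 1));
            } catch (NumberFormatException e) {
                throw new IllegalArgumentException("Fail to parse conf:" + conf, e);
            }
            if (port < 1 || port > 65535) {
                throw new IllegalArgumentException("Fail to parse conf:" + conf);
            }
            peers.add(new Endpoint(entry.substring(0, colon), port));
        }
        return peers;
    }

    public static void main(String[] args) {
        System.out.println(parsePeers("127.0.0.1:8081,127.0.0.1:8082,127.0.0.1:8083"));
        // [127.0.0.1:8081, 127.0.0.1:8082, 127.0.0.1:8083]
        try {
            parsePeers("127.0.0.1");
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage()); // rejected: Fail to parse conf:127.0.0.1
        }
    }
}
```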

```java
private static void incrementAndGet(final BoltCliClientService cliClientService, final PeerId leader,
                                    final long delta, final CountDownLatch latch) throws RemotingException,
                                                                                     InterruptedException {
    final IncrementAndGetRequest request = new IncrementAndGetRequest();
    request.setDelta(delta);
    // Send the request to the leader asynchronously; the callback handles the result
    cliClientService.getRpcClient().invokeWithCallback(leader.getEndpoint().toString(), request,
        new InvokeCallback() {

            @Override
            public void onResponse(Object result) {
                latch.countDown();
                System.out.println("incrementAndGet result:" + result);
            }

            @Override
            public void onException(Throwable e) {
                e.printStackTrace();
                latch.countDown();
            }

            @Override
            public Executor getExecutor() {
                return null;
            }
        }, 5000);
}
```

main then calls incrementAndGet for each request. This method builds an IncrementAndGetRequest with the given delta. It sends the request to the leader asynchronously through cliClientService's RPC client and registers a callback to handle the response.
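The client fires all 1000 requests without waiting and uses the CountDownLatch to block main until every callback, successful or failed, has fired. The sketch below shows the same pattern in plain Java. A hypothetical fakeIncrementAndGet completes asynchronously on a thread pool in place of a real Bolt RPC, and whenComplete plays the role of onResponse/onException.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical stand-in for the client loop: fakeIncrementAndGet replaces invokeWithCallback.
public class LatchClientSketch {

    // Pretend RPC: the "server" adds delta to its counter on another thread.
    static CompletableFuture<Long> fakeIncrementAndGet(AtomicLong server, long delta,
                                                       ExecutorService rpcThreads) {
        return CompletableFuture.supplyAsync(() -> server.addAndGet(delta), rpcThreads);
    }

    // Sends n requests with deltas 0..n-1 without waiting, then blocks until all callbacks fired.
    static long run(int n) throws InterruptedException {
        AtomicLong server = new AtomicLong();
        ExecutorService rpcThreads = Executors.newFixedThreadPool(4);
        CountDownLatch latch = new CountDownLatch(n);
        try {
            for (int i = 0; i < n; i++) {
                fakeIncrementAndGet(server, i, rpcThreads).whenComplete((result, error) -> {
                    if (error != null) {
                        error.printStackTrace(); // like onException
                    }
                    latch.countDown();           // count down on success and on failure
                });
            }
            if (!latch.await(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("Timed out waiting for responses");
            }
        } finally {
            rpcThreads.shutdown();
        }
        return server.get();
    }

    public static void main(String[] args) throws InterruptedException {
        long start = System.currentTimeMillis();
        long total = run(1000);
        System.out.println("final value: " + total); // 499500 (= 0 + 1 + ... + 999)
        System.out.println("1000 ops, cost : " + (System.currentTimeMillis() - start) + " ms.");
    }
}
```

The sketch waits with a bounded latch.await, so a lost callback produces an error instead of hanging forever. The original client calls latch.await() with no timeout.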

The overall flow

To sum up, the complete call flow between the client and the server is as follows.

First, CounterClient binds to the servers, obtains the leader node and sends an IncrementAndGetRequest to it.

On receiving the request, the server dispatches it by type to IncrementAndGetRequestProcessor and calls its handleRequest method.

handleRequest wraps the request data in a Task and applies it to the raft group. Once the entry is committed, the state machine's onApply method processes the business data and then invokes the closure's callback.

The callback sends the ValueResponse, which wraps the result, back to the client.

Finally, the client's onResponse callback is invoked.

That concludes the walkthrough of the counter example.