The K2 algorithm: search-based Bayesian network structure learning

1. Introduction to the algorithm

Search-based Bayesian network structure learning has two core components. First, a scoring function that evaluates the quality of a network structure. Second, a search strategy that finds the best-scoring structure.

2. Scoring function

Learning a network structure amounts to the following problem: given data D, find the network structure Bs with the maximum a posteriori probability, i.e. the Bs that maximizes P(Bs | D).

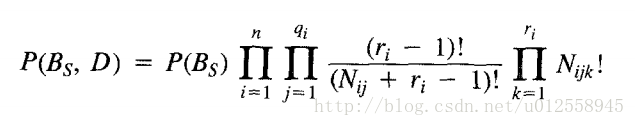

Since P(Bs | D) = P(Bs, D) / P(D) and the denominator P(D) does not depend on Bs, the goal reduces to finding the Bs that maximizes P(Bs, D). After a series of derivations (see the paper linked at the top for the details), we obtain:

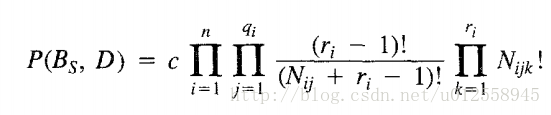

Here P(Bs) is the prior probability of Bs, i.e. the probability we assign to each structure before seeing any data. Below, we assume a uniform prior over structures, so that P(Bs) is the same constant c for every structure.

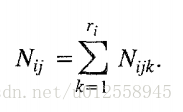

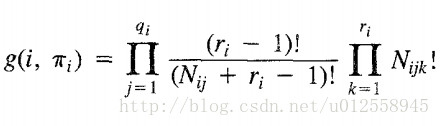

Let Z be a set of n discrete random variables, where each variable Xi has ri possible values (Vi1, Vi2, ..., Viri). Let D be a database of m cases, where each case instantiates every variable in Z. Bs denotes a belief network containing exactly the variables in Z. The set of parents of variable Xi in Bs is written πi, and Wij denotes the j-th instantiation of πi. There are qi distinct instantiations of πi. For example, if Xi has two parent variables, the first with two possible values and the second with three, then qi is at most 2 * 3 = 6. Nijk is the number of cases in D in which Xi takes the value Vik and πi is instantiated as Wij. In addition, Nij is the sum of Nijk over k = 1, ..., ri, i.e. the number of cases in which πi is instantiated as Wij.
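To make the notation concrete, here is a minimal sketch of how qi and the counts Nijk could be obtained from a database. The function name `count_nijk`, the toy cases, and the variable names A, B, C are all hypothetical and chosen only for illustration.

```python
from collections import Counter
from itertools import product


def count_nijk(data, child, parents):
    """Return a Counter mapping (W_ij, V_ik) -> N_ijk for one variable."""
    counts = Counter()
    for case in data:
        w_ij = tuple(case[p] for p in parents)  # parent instantiation W_ij
        counts[(w_ij, case[child])] += 1
    return counts


# Hypothetical toy database D: every case assigns a value to every variable.
data = [
    {"A": 0, "B": 0, "C": 1},
    {"A": 0, "B": 2, "C": 1},
    {"A": 1, "B": 2, "C": 0},
    {"A": 0, "B": 2, "C": 1},
]
values = {"A": [0, 1], "B": [0, 1, 2], "C": [0, 1]}

# C has two parents: A (two values) and B (three values), so q_i is 2 * 3.
parents = ["A", "B"]
q_i = len(list(product(*(values[p] for p in parents))))
counts = count_nijk(data, "C", parents)
```

Parent instantiations that never appear in the data simply have a count of zero, which a `Counter` returns by default.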

The first product runs over the index i of each random variable Xi, where n is the number of random variables.

The second product runs over the index j of every instantiation of the parents of the current variable Xi, where qi is the number of distinct parent instantiations.

The last product runs over the index k of every possible value of the current variable Xi, where ri is the number of possible values.
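For readers who cannot see the formula images, the three nested products can be written out in LaTeX. This is a transcription of the standard result from the K2 paper by Cooper and Herskovits, using the symbols defined above; it is not copied from the images, so treat it as a reference sketch.

```latex
P(B_S, D) = P(B_S) \prod_{i=1}^{n} \prod_{j=1}^{q_i}
    \frac{(r_i - 1)!}{(N_{ij} + r_i - 1)!} \prod_{k=1}^{r_i} N_{ijk}!,
\qquad
N_{ij} = \sum_{k=1}^{r_i} N_{ijk}
```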

Replacing P(Bs) with the constant c gives:

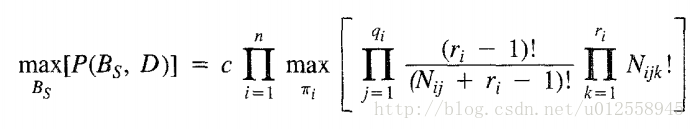

Our goal is to find the Bs that maximizes this posterior probability.

For the best network structure, substituting the counts Nijk obtained from the data under that structure makes the expression above as large as possible. As the expression shows, it is a product of one local factor per variable, so maximizing the local factor of each variable maximizes the whole. We define the local factor of each variable as a new scoring function:

3. Search strategy

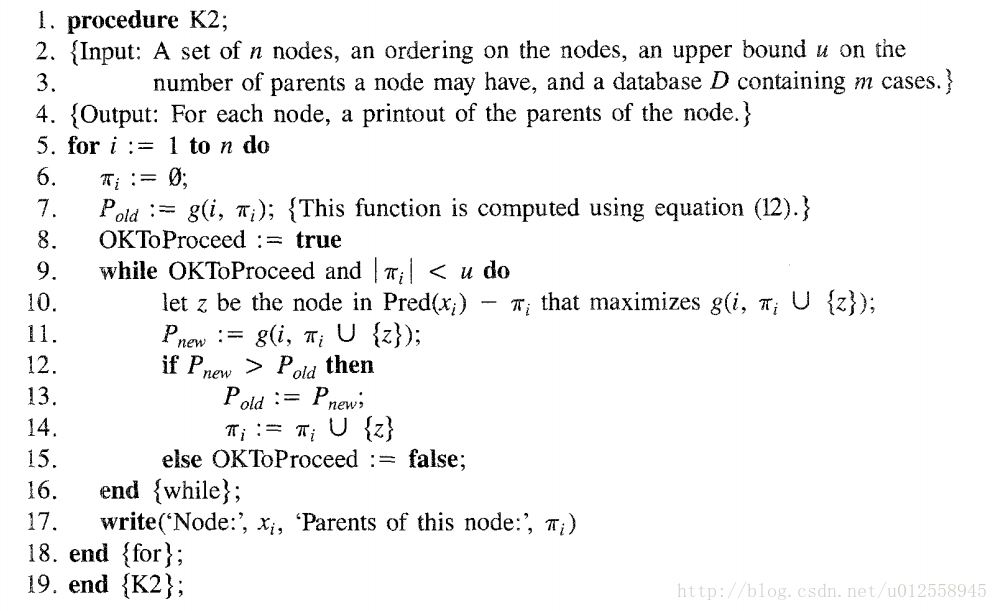

Therefore, we only need to find, for each variable, the set of parent variables that maximizes its scoring function. K2 uses a greedy search to do this. First, we assume an ordering of the random variables: if Xi comes before Xj, there can be no edge from Xj to Xi. We also assume that each variable has at most u parents. At each step, the candidate that increases the scoring function the most is added to the parent set, and the loop stops when the scoring function can no longer be increased. Here Pred(Xi) denotes the variables that precede Xi in the ordering.
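The greedy search described above can be sketched in Python as follows. This is an illustrative sketch, not the original pseudocode: the function names `g` and `k2`, the parameter `max_parents` (playing the role of u), and the ten-case toy database are assumptions made for the example. The score is computed exactly with `Fraction` so that comparisons are not affected by rounding.

```python
from collections import Counter
from fractions import Fraction
from math import factorial


def g(data, values, i, parents):
    """Local score g(i, pi_i), computed exactly from the counts N_ijk."""
    r_i = len(values[i])
    n_ijk = Counter((tuple(c[p] for p in parents), c[i]) for c in data)
    n_ij = Counter(tuple(c[p] for p in parents) for c in data)
    score = Fraction(1)
    # Parent instantiations that never occur contribute a factor of 1,
    # so iterating over the observed ones is enough.
    for w, total in n_ij.items():
        score *= Fraction(factorial(r_i - 1), factorial(total + r_i - 1))
        for v in values[i]:
            score *= factorial(n_ijk[(w, v)])
    return score


def k2(data, values, order, max_parents):
    """Greedy K2 search; returns a dict {variable: list of parents}."""
    structure = {}
    for pos, x in enumerate(order):
        parents = []
        best = g(data, values, x, parents)
        candidates = list(order[:pos])  # Pred(x): variables before x
        while len(parents) < max_parents and candidates:
            # Pick the predecessor whose addition gives the highest score.
            z = max(candidates, key=lambda c: g(data, values, x, parents + [c]))
            new = g(data, values, x, parents + [z])
            if new <= best:
                break  # no candidate improves the score
            best = new
            parents.append(z)
            candidates.remove(z)
        structure[x] = parents
    return structure


# Hypothetical toy database with three binary variables.
rows = [(1, 0, 0), (1, 1, 1), (0, 0, 1), (1, 1, 1), (0, 0, 0),
        (0, 1, 1), (1, 1, 1), (0, 0, 0), (1, 1, 1), (0, 0, 0)]
order = ["x1", "x2", "x3"]
data = [dict(zip(order, row)) for row in rows]
values = {name: [0, 1] for name in order}

structure = k2(data, values, order, max_parents=2)
print(structure)
```

Because parents are only ever drawn from Pred(x), the learned graph is acyclic by construction, which is why the node ordering has to be supplied up front.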

4. Extensions of the algorithm

In practice, we can compute log g(i, πi) rather than g(i, πi) directly, because the logarithm turns the products into sums.
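One possible way to compute the log score is with the log-gamma function, since `lgamma(n + 1)` equals log(n!). The sketch below assumes this approach; the function name `log_g` and the toy data are hypothetical. Working in log space also avoids the very large factorials that appear once the database has many cases.

```python
from collections import Counter
from math import lgamma, log


def log_g(data, values, i, parents):
    """log g(i, pi_i), using lgamma(n + 1) == log(n!)."""
    r_i = len(values[i])
    n_ijk = Counter((tuple(c[p] for p in parents), c[i]) for c in data)
    n_ij = Counter(tuple(c[p] for p in parents) for c in data)
    total = 0.0
    for w, n in n_ij.items():
        # log (r_i - 1)! - log (N_ij + r_i - 1)!
        total += lgamma(r_i) - lgamma(n + r_i)
        # sum over k of log N_ijk!
        total += sum(lgamma(n_ijk[(w, v)] + 1) for v in values[i])
    return total


# Hypothetical toy data for two binary variables.
pairs = [(0, 0), (1, 1), (0, 1), (1, 1), (0, 0),
         (1, 1), (1, 1), (0, 0), (1, 1), (0, 0)]
data = [{"x2": a, "x3": b} for a, b in pairs]
values = {"x2": [0, 1], "x3": [0, 1]}

with_parent = log_g(data, values, "x3", ["x2"])
without_parent = log_g(data, values, "x3", [])
# For this data g(x3, {x2}) works out by hand to (1/6) * (1/30) = 1/180.
expected = log(1 / 180)
```

Since the logarithm is monotonic, comparing log scores selects the same parents as comparing the scores themselves.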

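There is also an algorithm called K2R (K2 Reverse). It starts from a fully connected belief network and repeatedly applies a greedy step that removes edges from the structure. We can learn one structure with K2 and another with K2R, then pick the one with the larger posterior probability. Alternatively, we can run K2 with several different initial node orderings and pick the best of the resulting network structures.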