Here is the complete crawler code. I have tested it by crawling the site.

items.py

    import scrapy


    class DemoItem(scrapy.Item):
        # define the fields for your item here like:
        # topic or title of the page, used as the folder name instead of the default "full" folder
        folder_name = scrapy.Field()
        # img_name = scrapy.Field()  # name of the picture, if one can be extracted; otherwise unused
        img_url = scrapy.Field()  # image link

spider.py

    # -*- coding: utf-8 -*-
    import scrapy
    from demo.items import DemoItem


    class LogosSpider(scrapy.Spider):
        name = 'logos'
        allowed_domains = ['tttt8.net']
        #start_urls = ['http://www.tttt8.net/category/legbaby/']
        #start_urls = ['http://www.tttt8.net/category/ugirls/']
        #start_urls = ['http://www.tttt8.net/category/kelagirls/']
        start_urls = ['http://www.tttt8.net/category/xiurenwang/micatruisg/']
        # page counter used to construct the pagination links
        page = 1

        def parse(self, response):
            # extract the list of all entries on the listing page
            li_list = response.xpath('//*[@id="post_container"]/li')
            for li in li_list:
                # instantiate the item
                item = DemoItem()
                # only the entry title is extracted here; it is used later as the storage folder name
                item['folder_name'] = li.xpath('./div[2]/h2/a/text()').extract_first()
                # extract the link to the second-level (detail) page, which parse2 handles
                next_plink = li.xpath('./div[1]/a/@href').extract_first()
                # pass the item instantiated on the listing page to the detail page
                yield scrapy.Request(url=next_plink, callback=self.parse2, meta={'item': item})
            # listing-page pagination; there are many other approaches online, but I find this structure simpler
            page_list = response.xpath('//div[@class="pagination"]/a/@href').extract()
            # find the link to the last page
            last_page = page_list[-1]
            # extract the maximum page number from a link ending in ".../page/N/"
            # (the original int(last_page[-2]) only handled single-digit page counts)
            max_num = int(last_page.rstrip('/').split('/')[-1])
            # construct the link to the next listing page
            # ("<" rather than "<=" so that page max_num + 1 is never requested)
            if self.page < max_num:
                self.page += 1
                new_page_url = self.start_urls[0] + 'page/' + str(self.page) + '/'
                yield scrapy.Request(url=new_page_url, callback=self.parse)

        def parse2(self, response):
            # receive the item from the listing page; it must also be forwarded with the
            # pagination requests below, otherwise it is lost and an error is raised
            item = response.meta['item']
            p_list = response.xpath('//*[@id="post_content"]/p/img')
            # extract the image links and hand them to the pipeline for download
            for img in p_list:
                img_url = img.xpath('./@src').extract_first()
                # the brackets [] are required: the image download function expects a list
                item['img_url'] = [img_url]
                yield item
            # detail-page pagination: yield the follow-up requests
            next_page_list = response.xpath('//*[@id="content"]/div[1]/div[3]/div[2]/a/@href').extract()
            for next_page in next_page_list:
                # meta must be passed here as well, otherwise the detail-page pagination fails;
                # it took me a long time to find this out
                yield scrapy.Request(url=next_page, callback=self.parse2, meta={'item': item})
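
The page-turning logic in `parse` can be tried out on its own, without Scrapy. The following is only a minimal sketch: the helper names `max_page_number` and `listing_page_urls` are hypothetical, and the example link ending in `page/12/` is an assumed value that follows the `page/N/` scheme the spider constructs.

```python
from typing import List


def max_page_number(last_page_href: str) -> int:
    """Return the page number from a link such as '.../page/12/'."""
    # Strip the trailing slash, then take the last path segment.
    return int(last_page_href.rstrip('/').split('/')[-1])


def listing_page_urls(start_url: str, last_page_href: str) -> List[str]:
    """Build the URLs of listing pages 2..max using the 'page/N/' scheme."""
    max_num = max_page_number(last_page_href)
    return [start_url + 'page/' + str(n) + '/' for n in range(2, max_num + 1)]


# Assumed example values; only the start URL comes from the spider above.
start = 'http://www.tttt8.net/category/xiurenwang/micatruisg/'
urls = listing_page_urls(start, start + 'page/12/')
print(urls[0])
print(len(urls))
```

Splitting on `/` instead of taking a single character keeps the extraction working when a category has ten or more pages.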

settings.py

    # -*- coding: utf-8 -*-


    BOT_NAME = 'demo'
    SPIDER_MODULES = ['demo.spiders']
    NEWSPIDER_MODULE = 'demo.spiders'

    # storage path and header (raw string so the backslash is not treated as an escape)
    IMAGES_STORE = r'D:\pics'
    USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/50.0.2661.102 Safari/537.36'

    DOWNLOAD_DELAY = 0.2
    # do not obey robots.txt
    ROBOTSTXT_OBEY = False

    ITEM_PIPELINES = {
        'demo.pipelines.DemoPipeline': 300,
    }
    # item field that holds the image URLs to download
    IMAGES_URLS_FIELD = 'img_url'

pipelines.py

    import scrapy
    from scrapy.exceptions import DropItem
    from scrapy.pipelines.images import ImagesPipeline


    class DemoPipeline(ImagesPipeline):
        # standard override, no modification needed
        def get_media_requests(self, item, info):
            for img_url in item['img_url']:
                # the file is renamed later in file_path, so pass the item along via meta
                yield scrapy.Request(img_url, meta={'item': item})

        def file_path(self, request, response=None, info=None):
            item = request.meta['item']
            folder_name = item['folder_name']
            # img_name = item['img_name']  # not enabled because the images have no name
            # no image name is extracted, so the last segment of the URL is used as the name
            image_guid = request.url.split('/')[-1]
            img_name = image_guid
            # name = img_name + image_guid
            # name = name + '.jpg'
            # {0} is the folder, {1} is the file
            filename = u'{0}/{1}'.format(folder_name, img_name)
            return filename

        # standard override, no modification needed
        def item_completed(self, results, item, info):
            image_paths = [x['path'] for ok, x in results if ok]
            if not image_paths:
                raise DropItem('Image Downloaded Failed')
            return item
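
To see which relative path `file_path` produces for a given image, the naming rule can be reproduced as a plain function. This is a rough sketch rather than the pipeline itself: `image_path` is a hypothetical helper, the example URL is made up, and dropping the query string via `urlparse` is an extra assumption that the original `split('/')` does not make.

```python
import posixpath
from urllib.parse import urlparse


def image_path(folder_name: str, url: str) -> str:
    """Return '<folder_name>/<last URL path segment>' for an image URL."""
    # Parse the URL first so a query string such as '?v=2' is not part of the file name.
    file_name = posixpath.basename(urlparse(url).path)
    return '{0}/{1}'.format(folder_name, file_name)


# Made-up example values for illustration only.
print(image_path('Some title', 'http://example.com/uploads/2019/001.jpg'))
```

Scrapy joins the returned value with `IMAGES_STORE`, so with the settings above the file would end up under `D:\pics` in a folder named after the title.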

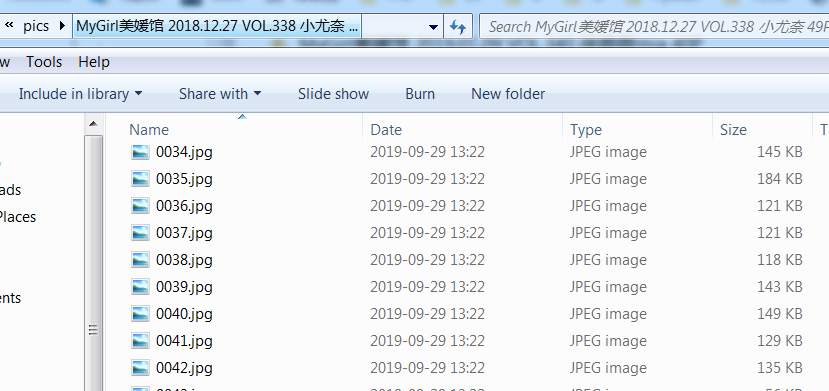

result: