Front FLink article, we have introduced, said Flink has a lot of built-in Connector.

1, "Learning from 0-1 Flink" - Data Source Introduction

2, "Learning from 0-1 Flink" - Data Sink Introduction

Including the Source and Sink, and later I also spoke at how to customize your own Source and Sink.

Well, today to do is Shane? It is to introduce Flink comes ElasticSearch Connector, we will use him to do today Sink, the data Kafka in after Flink processed and then stored to ElasticSearch.

ready

Installation ElasticSearch, here ignored, found himself in my previous article, it is recommended to install ElasticSearch 6.0 above, after all, keep up with the rhythm of the times.

Here we explain the production environment as well as how to use Elasticsearch Sink few notes, and its internal implementation mechanism.

Elasticsearch Sink

Add dependent

<dependency>

<groupId>org.apache.flink</groupId>

<artifactId>flink-connector-elasticsearch6_${scala.binary.version}</artifactId>

<version>${flink.version}</version>

</dependency>

Above depending on the version number of your own under the corresponding change depending on the version used.

All of the following code into the import did not come here if you need to see a more detailed code, see my GitHub repository address:

This module contains all the code in this article to achieve, of course, the more he might do later wrote some abstract, so if you have code change is normal, check all the items code directly.

ElasticSearchSinkUtil Tools

The tools of their own package, getEsAddresses method incoming profile es parsed address, the domain name can be a way, it can be ip + port form.

Flink addSink method is the use of encapsulated ElasticsearchSink own layer, passing the necessary tuning parameters and configuration parameters es, repeat the following articles also some other configuration.

ElasticSearchSinkUtil.java

public class ElasticSearchSinkUtil {

/**

* es sink

*

* @param hosts es hosts

* @param bulkFlushMaxActions bulk flush size

* @param parallelism 并行数

* @param data 数据

* @param func

* @param <T>

*/

public static <T> void addSink(List<HttpHost> hosts, int bulkFlushMaxActions, int parallelism,

SingleOutputStreamOperator<T> data, ElasticsearchSinkFunction<T> func) {

ElasticsearchSink.Builder<T> esSinkBuilder = new ElasticsearchSink.Builder<>(hosts, func);

esSinkBuilder.setBulkFlushMaxActions(bulkFlushMaxActions);

data.addSink(esSinkBuilder.build()).setParallelism(parallelism);

}

/**

* 解析配置文件的 es hosts

*

* @param hosts

* @return

* @throws MalformedURLException

*/

public static List<HttpHost> getEsAddresses(String hosts) throws MalformedURLException {

String[] hostList = hosts.split(",");

List<HttpHost> addresses = new ArrayList<>();

for (String host : hostList) {

if (host.startsWith("http")) {

URL url = new URL(host);

addresses.add(new HttpHost(url.getHost(), url.getPort()));

} else {

String[] parts = host.split(":", 2);

if (parts.length > 1) {

addresses.add(new HttpHost(parts[0], Integer.parseInt(parts[1])));

} else {

throw new MalformedURLException("invalid elasticsearch hosts format");

}

}

}

return addresses;

}

}

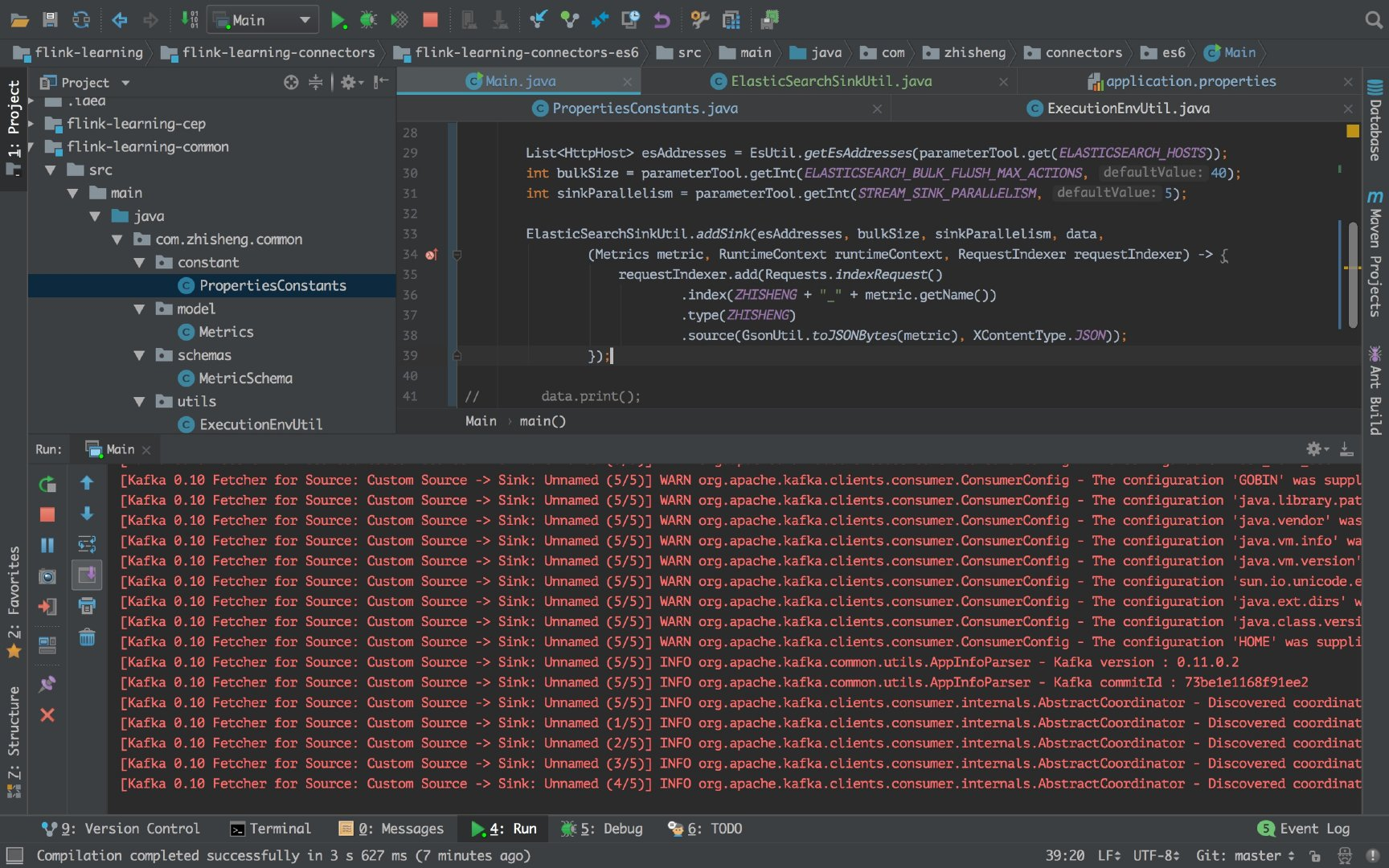

Main startup class

Main.java

class Main {public

public static void main (String [] args) throws Exception {

// Get all parameters

Final ParameterTool parameterTool = ExecutionEnvUtil.createParameterTool (args);

// prepare the environment

StreamExecutionEnvironment env = ExecutionEnvUtil.prepare (parameterTool);

// data read from kafka

DataStreamSource <Metrics> data = KafkaConfigUtil.buildSource (the env);

// read the configuration file from es address

List <HttpHost> esAddresses = ElasticSearchSinkUtil.getEsAddresses (parameterTool.get (ELASTICSEARCH_HOSTS));

// from reading bulk flush size profile representative of a batch number, but this tuning parameter, remind

int bulkSize = parameterTool.getInt (ELASTICSEARCH_BULK_FLUSH_MAX_ACTIONS, 40 );

// read the number of parallel sink from the configuration file, this is performance tuning parameters, cautions, so that it can faster consumption, to prevent the accumulation of data kafka

int sinkParallelism = parameterTool.getInt (STREAM_SINK_PARALLELISM, 5);

// them again es sink layer on the package comes under

ElasticSearchSinkUtil.addSink (esAddresses, bulkSize, sinkParallelism, Data,

(Metrics Metric, the RuntimeContext to RuntimeContext, requestIndexer requestIndexer) -> {

requestIndexer.add (Requests.indexRequest ()

.index (+ Zhisheng "_" + metric.getName ()) // es index name

.type (Zhisheng) // es of the type

.source (GsonUtil.toJSONBytes (Metric), XContentType.JSON));

});

env.execute("flink learning connectors es6");

}

}

Profiles

Cluster-Mode configurations support fill out, pay attention, separated!

kafka.brokers=localhost:9092 kafka.group.id=zhisheng-metrics-group-test kafka.zookeeper.connect=localhost:2181 metrics.topic=zhisheng-metrics stream.parallelism=5 stream.checkpoint.interval=1000 stream.checkpoint.enable=false elasticsearch.hosts=localhost:9200 elasticsearch.bulk.flush.max.actions=40 stream.sink.parallelism=5

operation result

Main class main method of execution, our program is only flink print log, print log no deposit (because we do not have to fight logs):

So it looks like our sink did not know whether it is useful, if data read out from kafka after the deposit to es.

You can view the log es es terminal server or a local play you can see the effects.

es log is as follows:

The figure is es log my local Mac computer terminal, you can see our indexed.

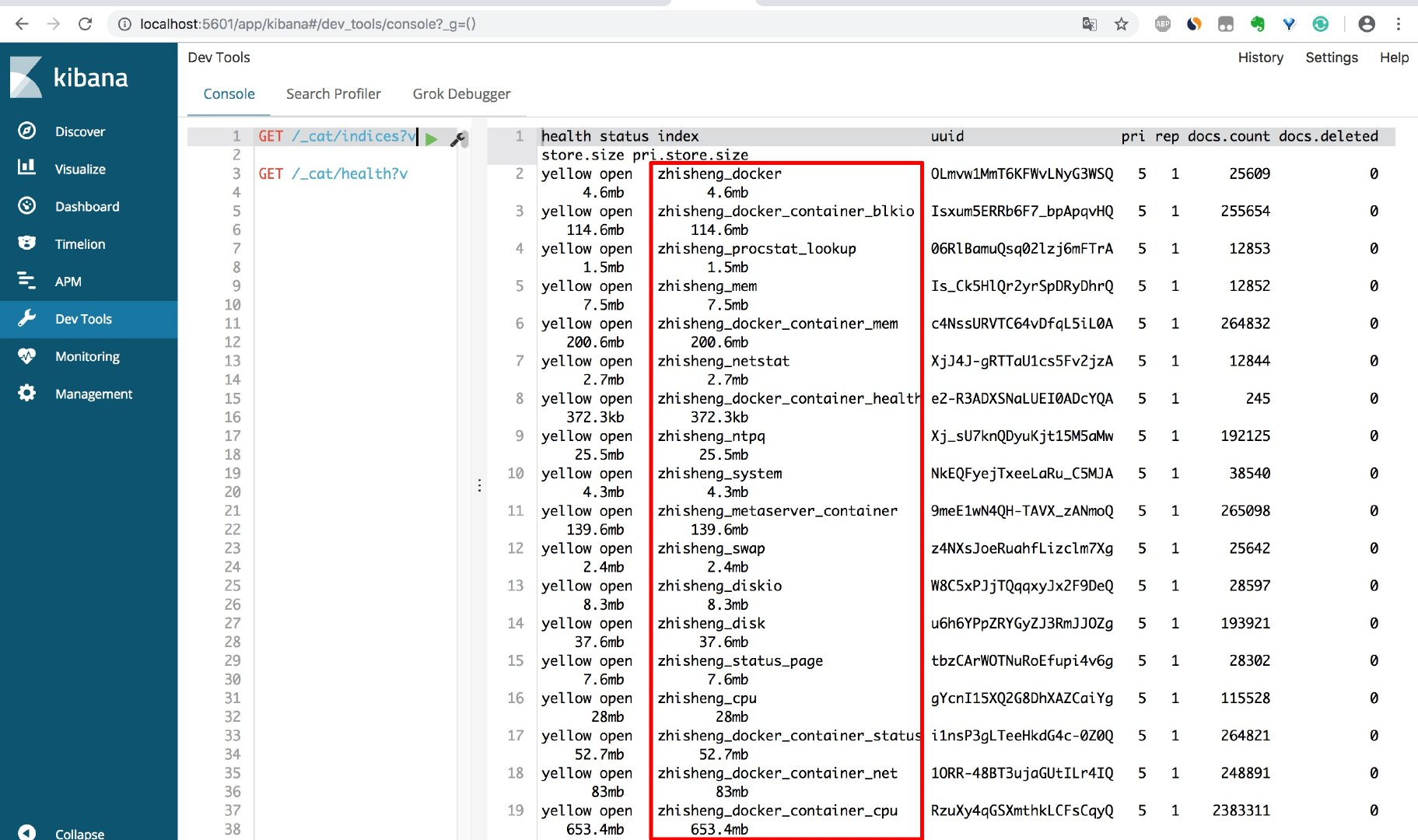

If you do not worry, you can also install a kibana on your computer, then a more intuitive view of the index case es (or direct knock on the command es)

We kibana es view into the index as follows:

Program execution for a while, the amount of data stored on es very big.

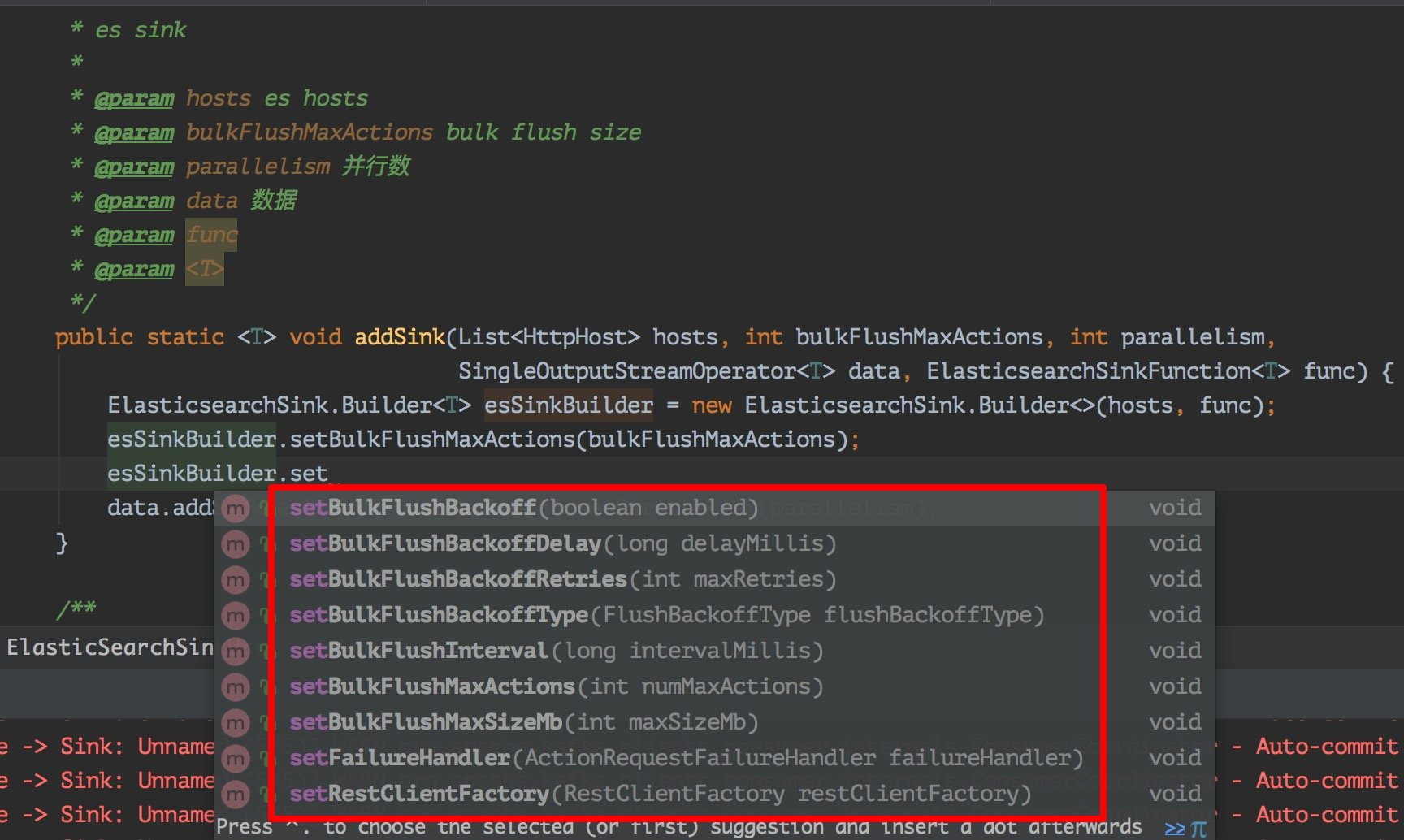

Extended configuration

上面代码已经可以实现你的大部分场景了,但是如果你的业务场景需要保证数据的完整性(不能出现丢数据的情况),那么就需要添加一些重试策略,因为在我们的生产环境中,很有可能会因为某些组件不稳定性导致各种问题,所以这里我们就要在数据存入失败的时候做重试操作,这里 flink 自带的 es sink 就支持了,常用的失败重试配置有:

1、bulk.flush.backoff.enable 用来表示是否开启重试机制 2、bulk.flush.backoff.type 重试策略,有两种:EXPONENTIAL 指数型(表示多次重试之间的时间间隔按照指数方式进行增长)、CONSTANT 常数型(表示多次重试之间的时间间隔为固定常数) 3、bulk.flush.backoff.delay 进行重试的时间间隔 4、bulk.flush.backoff.retries 失败重试的次数 5、bulk.flush.max.actions: 批量写入时的最大写入条数 6、bulk.flush.max.size.mb: 批量写入时的最大数据量 7、bulk.flush.interval.ms: 批量写入的时间间隔,配置后则会按照该时间间隔严格执行,无视上面的两个批量写入配置

看下啦,就是如下这些配置了,如果你需要的话,可以在这个地方配置扩充了。

FailureHandler 失败处理器

写入 ES 的时候会有这些情况会导致写入 ES 失败:

1、ES 集群队列满了,报如下错误

12:08:07.326 [I/O dispatcher 13] ERROR o.a.f.s.c.e.ElasticsearchSinkBase - Failed Elasticsearch item request: ElasticsearchException[Elasticsearch exception [type=es_rejected_execution_exception, reason=rejected execution of org.elasticsearch.transport.TransportService$7@566c9379 on EsThreadPoolExecutor[name = node-1/write, queue capacity = 200, org.elasticsearch.common.util.concurrent.EsThreadPoolExecutor@f00b373[Running, pool size = 4, active threads = 4, queued tasks = 200, completed tasks = 6277]]]]

是这样的,我电脑安装的 es 队列容量默认应该是 200,我没有修改过。我这里如果配置的 bulk flush size * 并发 sink 数量 这个值如果大于这个 queue capacity ,那么就很容易导致出现这种因为 es 队列满了而写入失败。

当然这里你也可以通过调大点 es 的队列。参考:https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-threadpool.html

2、ES 集群某个节点挂了

这个就不用说了,肯定写入失败的。跟过源码可以发现 RestClient 类里的 performRequestAsync 方法一开始会随机的从集群中的某个节点进行写入数据,如果这台机器掉线,会进行重试在其他的机器上写入,那么当时写入的这台机器的请求就需要进行失败重试,否则就会把数据丢失!

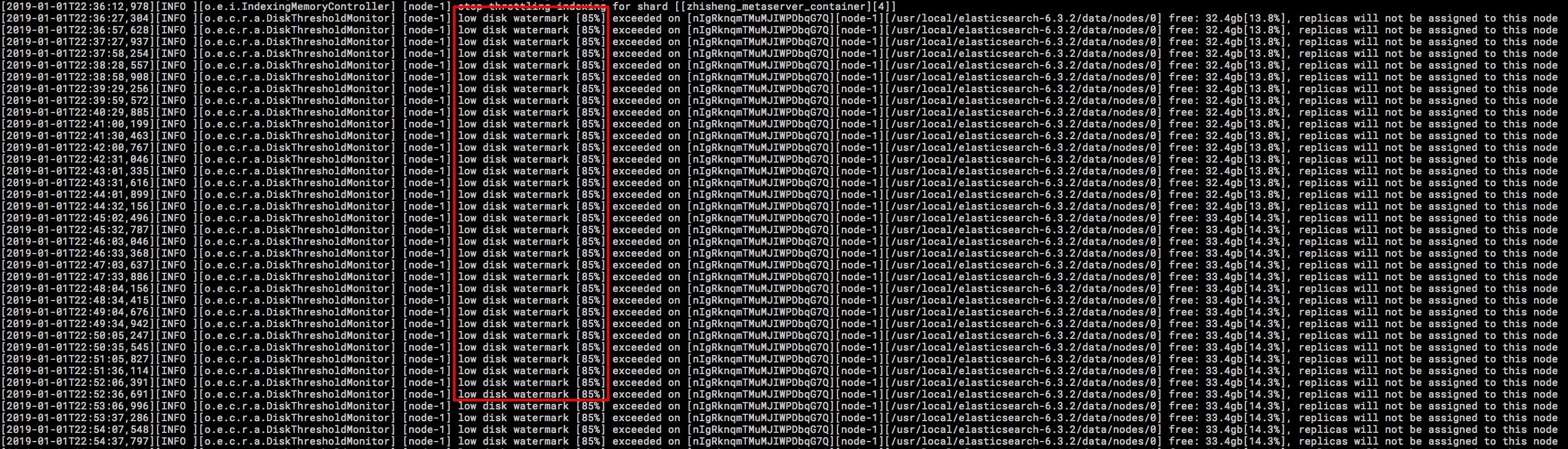

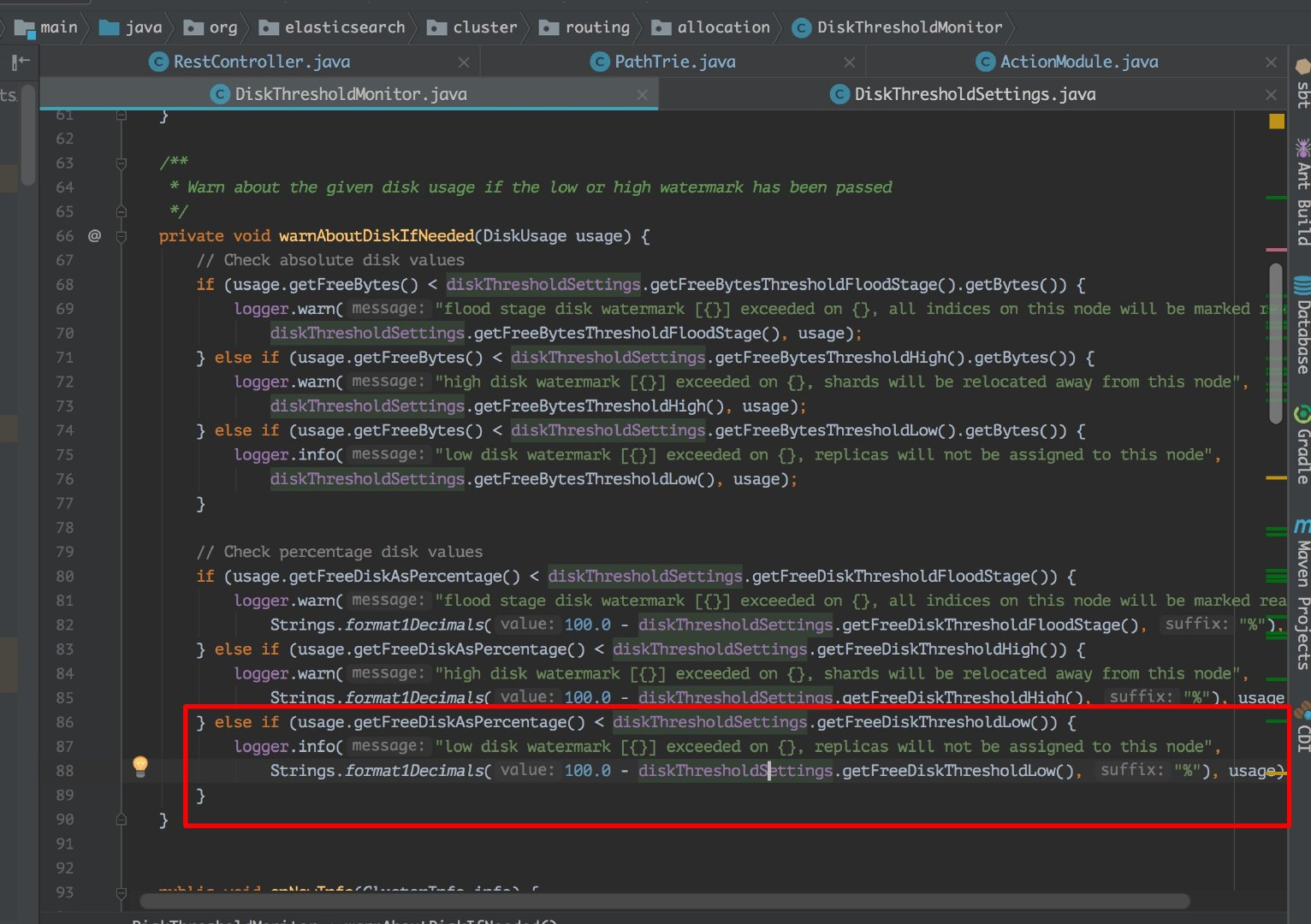

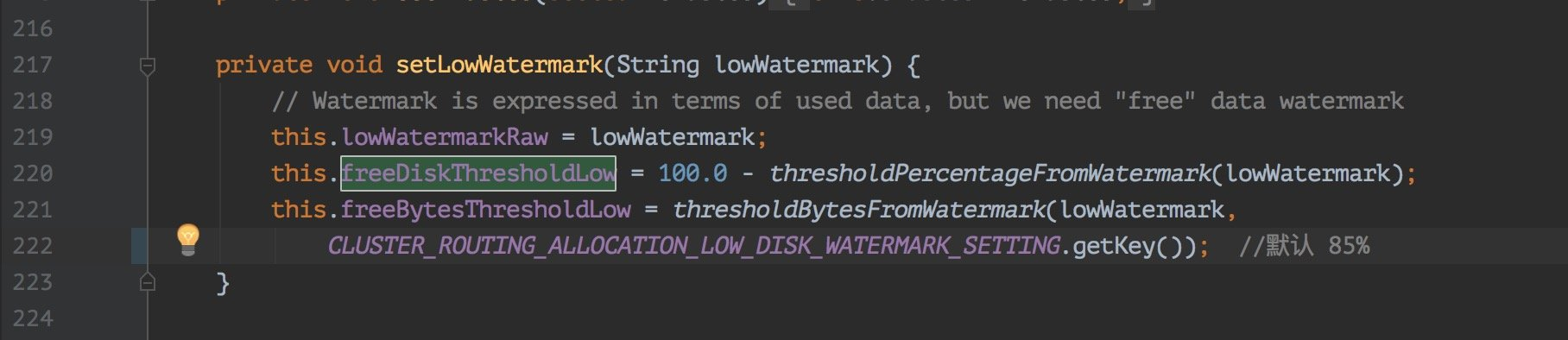

3、ES 集群某个节点的磁盘满了

这里说的磁盘满了,并不是磁盘真的就没有一点剩余空间的,是 es 会在写入的时候检查磁盘的使用情况,在 85% 的时候会打印日志警告。

这里我看了下源码如下图:

如果你想继续让 es 写入的话就需要去重新配一下 es 让它继续写入,或者你也可以清空些不必要的数据腾出磁盘空间来。

解决方法

DataStream<String> input = ...;

input.addSink(new ElasticsearchSink<>(

config, transportAddresses,

new ElasticsearchSinkFunction<String>() {...},

new ActionRequestFailureHandler() {

@Override

void onFailure(ActionRequest action,

Throwable failure,

int restStatusCode,

RequestIndexer indexer) throw Throwable {

if (ExceptionUtils.containsThrowable(failure, EsRejectedExecutionException.class)) {

// full queue; re-add document for indexing

indexer.add(action);

} else if (ExceptionUtils.containsThrowable(failure, ElasticsearchParseException.class)) {

// malformed document; simply drop request without failing sink

} else {

// for all other failures, fail the sink

// here the failure is simply rethrown, but users can also choose to throw custom exceptions

throw failure;

}

}

}));

如果仅仅只是想做失败重试,也可以直接使用官方提供的默认的 RetryRejectedExecutionFailureHandler ,该处理器会对 EsRejectedExecutionException 导致到失败写入做重试处理。如果你没有设置失败处理器(failure handler),那么就会使用默认的 NoOpFailureHandler 来简单处理所有的异常。

总结

本文写了 Flink connector es,将 Kafka 中的数据读取并存储到 ElasticSearch 中,文中讲了如何封装自带的 sink,然后一些扩展配置以及 FailureHandler 情况下要怎么处理。(这个问题可是线上很容易遇到的)

原创地址为:http://www.54tianzhisheng.cn/2018/12/30/Flink-ElasticSearch-Sink/