Flume-1 monitors a file for changes. Using the Replicating Channel Selector, Flume-1 passes the changed content to Flume-2, which stores it in HDFS. At the same time, Flume-1 passes the same content to Flume-3, which writes it to the local file system.

First, create the configuration files

1. flume-file-flume.conf

Configure one source that receives the log file, plus two channels and two sinks. The two sinks feed flume-flume-hdfs and flume-flume-dir respectively.

    # Name the components on this agent
    a1.sources = r1
    a1.sinks = k1 k2
    a1.channels = c1 c2

    # Replicate the data flow to all channels
    a1.sources.r1.selector.type = replicating

    # Describe/configure the source
    a1.sources.r1.type = exec
    a1.sources.r1.command = tail -F /tmp/tomcat.log
    a1.sources.r1.shell = /bin/bash -c

    # Describe the sink
    # An avro sink acts as a data sender
    a1.sinks.k1.type = avro
    a1.sinks.k1.hostname = h136
    a1.sinks.k1.port = 4141

    a1.sinks.k2.type = avro
    a1.sinks.k2.hostname = h136
    a1.sinks.k2.port = 4142

    # Describe the channel
    a1.channels.c1.type = memory
    a1.channels.c1.capacity = 1000
    a1.channels.c1.transactionCapacity = 100

    a1.channels.c2.type = memory
    a1.channels.c2.capacity = 1000
    a1.channels.c2.transactionCapacity = 100

    # Bind the source and sink to the channel
    a1.sources.r1.channels = c1 c2
    a1.sinks.k1.channel = c1
    a1.sinks.k2.channel = c2
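
To make the effect of the replicating selector concrete, here is a rough shell analogy rather than anything Flume itself runs: `tee` stands in for the selector, and two temporary files (hypothetical names) stand in for channels c1 and c2. Every event written by the source ends up in both.

```shell
# Analogy only: tee plays the role of the replicating selector,
# and the two files stand in for channels c1 and c2 (hypothetical paths).
WORK=$(mktemp -d)

# Three sample "events" are copied to both files in a single pass
printf '%s\n' 'event-1' 'event-2' 'event-3' | tee "$WORK/c1.txt" > "$WORK/c2.txt"

# Both "channels" hold the same events, in the same order
if cmp -s "$WORK/c1.txt" "$WORK/c2.txt"; then
    echo "c1 and c2 received identical events"
fi
```

A multiplexing selector would instead route each event to only some channels; the configuration above does not use that mode.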

2. flume-flume-hdfs.conf

Configure a source that receives the output of the upstream Flume, and a sink that writes to HDFS.

    # Name the components on this agent
    a2.sources = r1
    a2.sinks = k1
    a2.channels = c1

    # Describe/configure the source
    # An avro source acts as a data-receiving service
    a2.sources.r1.type = avro
    a2.sources.r1.bind = h136
    a2.sources.r1.port = 4141

    # Describe the sink
    a2.sinks.k1.type = hdfs
    a2.sinks.k1.hdfs.path = hdfs://h136:9000/flume2/%Y%m%d/%H
    # Prefix for uploaded files
    a2.sinks.k1.hdfs.filePrefix = flume2-
    # Whether to roll folders by time
    a2.sinks.k1.hdfs.round = true
    # How many time units pass before a new folder is created
    a2.sinks.k1.hdfs.roundValue = 1
    # Redefine the unit of time
    a2.sinks.k1.hdfs.roundUnit = hour
    # Whether to use the local timestamp
    a2.sinks.k1.hdfs.useLocalTimeStamp = true
    # How many events to accumulate before flushing to HDFS
    a2.sinks.k1.hdfs.batchSize = 100
    # File type; compression is supported
    a2.sinks.k1.hdfs.fileType = DataStream
    # How long (in seconds) before a new file is generated
    a2.sinks.k1.hdfs.rollInterval = 600
    # Roll size of each file, about 128 MB
    a2.sinks.k1.hdfs.rollSize = 134217700
    # File rolling is independent of the number of events
    a2.sinks.k1.hdfs.rollCount = 0

    # Describe the channel
    a2.channels.c1.type = memory
    a2.channels.c1.capacity = 1000
    a2.channels.c1.transactionCapacity = 100

    # Bind the source and sink to the channel
    a2.sources.r1.channels = c1
    a2.sinks.k1.channel = c1

3. flume-flume-dir.conf

Configure a source that receives the output of the upstream Flume, and a sink that writes to a local directory.

The local output directory must already exist. If it does not, Flume will not create it.

    # Name the components on this agent
    a3.sources = r1
    a3.sinks = k1
    a3.channels = c2

    # Describe/configure the source
    a3.sources.r1.type = avro
    a3.sources.r1.bind = h136
    a3.sources.r1.port = 4142

    # Describe the sink
    a3.sinks.k1.type = file_roll
    a3.sinks.k1.sink.directory = /tmp/flumeData

    # Describe the channel
    a3.channels.c2.type = memory
    a3.channels.c2.capacity = 1000
    a3.channels.c2.transactionCapacity = 100

    # Bind the source and sink to the channel
    a3.sources.r1.channels = c2
    a3.sinks.k1.channel = c2
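
Since the file_roll sink expects its target directory to exist, it may help to create it before starting the a3 agent. A minimal sketch, assuming the `/tmp/flumeData` path from the configuration above and that the agent runs as the current user:

```shell
# Directory taken from a3.sinks.k1.sink.directory in flume-flume-dir.conf
OUT_DIR=/tmp/flumeData

# -p creates missing parent directories and does nothing if the directory exists
mkdir -p "$OUT_DIR"

# Confirm the directory is present and writable by the user who will start a3
if [ -d "$OUT_DIR" ] && [ -w "$OUT_DIR" ]; then
    echo "ok: $OUT_DIR is ready"
else
    echo "error: $OUT_DIR is missing or not writable" >&2
fi
```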

Second, test

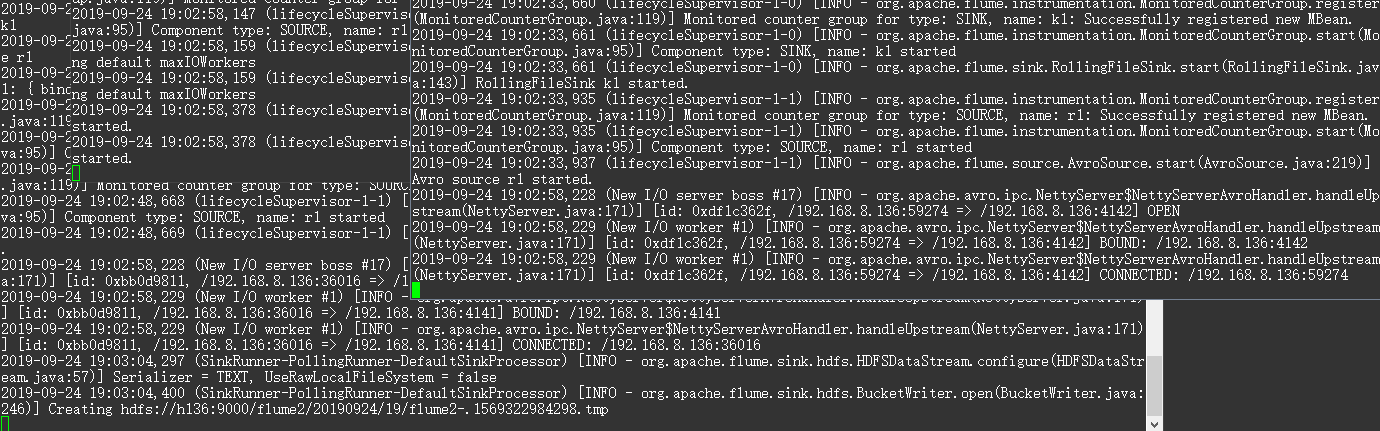

HDFS must be running first. Because flume-file-flume.conf sends data to the other two agents, flume-flume-hdfs.conf and flume-flume-dir.conf act as the servers that receive the data, so they must be started before flume-file-flume.conf. Each command stays in the foreground, so run each agent in its own terminal.

    cd /opt/apache-flume-1.9.0-bin

    bin/flume-ng agent --conf conf/ --name a3 --conf-file /tmp/flume-job/group1/flume-flume-dir.conf -Dflume.root.logger=INFO,console

    bin/flume-ng agent --conf conf/ --name a2 --conf-file /tmp/flume-job/group1/flume-flume-hdfs.conf -Dflume.root.logger=INFO,console

    bin/flume-ng agent --conf conf/ --name a1 --conf-file /tmp/flume-job/group1/flume-file-flume.conf -Dflume.root.logger=INFO,console
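
The start order matters because a1's avro sinks connect to ports 4141 and 4142. One way to confirm that the receiving agents are listening before a1 is launched is a small polling helper. This is only a sketch: `wait_for_port` is a hypothetical function, not part of Flume, and it assumes `bash` is installed because it relies on bash's `/dev/tcp` pseudo-device.

```shell
# Hypothetical helper: return 0 once host:port accepts a TCP connection,
# or 1 after the given number of attempts (one attempt per second).
wait_for_port() {
    host=$1
    port=$2
    tries=${3:-30}   # assumed default of 30 attempts
    i=0
    while [ "$i" -lt "$tries" ]; do
        # bash opens a TCP connection when redirecting to /dev/tcp/HOST/PORT
        if bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 1
    done
    return 1
}

# Example use before starting a1 (host and ports from the configs above):
#   wait_for_port h136 4141 && wait_for_port h136 4142 && echo "receivers are up"
```

A successful connection only shows that something is listening on the port; it does not prove that the listener is the intended Flume agent.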

Append data to the monitored file and observe the changes.

    echo '789qwewqe' >> /tmp/tomcat.log
    echo '123cvbcvbcv' >> /tmp/tomcat.log
    echo '456jkuikmjh' >> /tmp/tomcat.log
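
To check the local side of the pipeline, the files under the file_roll directory can be searched for the three test lines. The sketch below assumes the sink keeps its default text output, where each event body is written as one line. `count_delivered` is a hypothetical helper name.

```shell
# Hypothetical helper: print how many of the three test lines appear
# in the files of the given directory.
count_delivered() {
    dir=$1
    cat "$dir"/* 2>/dev/null |
        grep -c -E '^(789qwewqe|123cvbcvbcv|456jkuikmjh)$'
}

# Directory taken from a3.sinks.k1.sink.directory
OUT_DIR=/tmp/flumeData

# grep -c exits non-zero when the count is 0, so report that case explicitly
count_delivered "$OUT_DIR" || echo "no test lines found in $OUT_DIR yet"
```

For the HDFS side, listing the target path with `hdfs dfs -ls -R /flume2` should show files with the `flume2-` prefix once the sink has flushed.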