Transfer: https: //blog.csdn.net/qq_25073545/article/details/78621019

Laplacian smoothing (Laplace Smoothing) also known as 1 plus smoothing, the smoothing method is more commonly used. When there is a smooth approach zero probability to solve the problem.

Background: Why do the smoothing process?

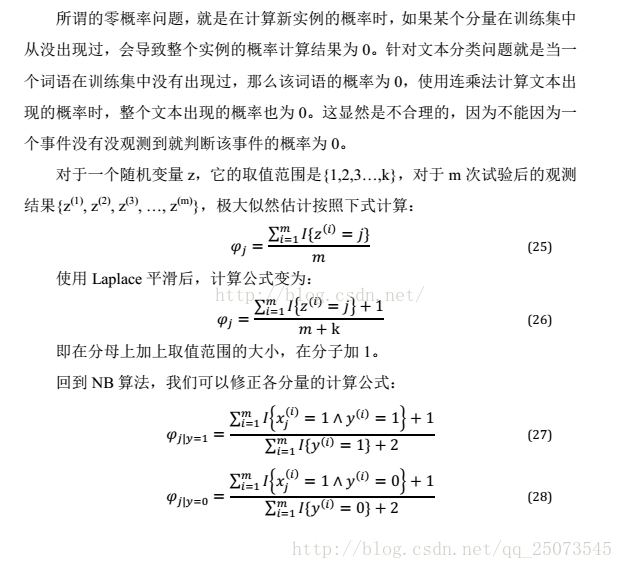

Zero probability problem is that when computing the probability instance, if a certain amount of x, there has never been observed in the sample library (training set), the probability that the results will lead to the entire instance is zero. In the question text classification, when a word does not appear in the training sample, the tone of the word probability is zero, even by using the calculated probability when text is also zero. It is unreasonable, not because it is an event not observed arbitrary believe the probability of this event is 0.

Laplace's theoretical support

In order to solve the problem of zero probability, the French mathematician Laplace was first proposed there have been no estimate of the probability of the phenomenon by the method plus 1, so smooth addition also called Laplace smoothing.

When training a large sample it assumes that each count plus 1 x component of the estimated probability caused by changes negligible, but can be easily and effectively avoid zero probability problem.

Application examples

Suppose text classification, there are three categories, C1, C2, C3, specified training samples, a word K1, respectively 0,990,10 observed count in each class, the probability of 0,0.99 K1 0.01, the amount of these three Laplacian smoothing is calculated as follows:

1/1003 = 0.001,991 / 0.988,11 = 1003/1003 = 0.011

are also frequently used in the actual use of added lambda (1≥lambda ≧ 0) instead of a simple summation. If N counts are combined with lambda, then the denominator remember to add N * lambda.