Disclaimer: This is an original article by the blogger, licensed under CC 4.0 BY-SA. If you reproduce it, please include a link to the original source and this statement.


Basic Concepts

- A very important feature of Flink is that it can maintain the state of a job. If the job fails, it recomputes from the last saved state, which ensures that each record is counted only once.

- Implementation principle: at regular intervals, Flink takes a snapshot (an image) of the computation state. These snapshots are called checkpoints.

- Savepoints are mainly triggered manually and likewise save the computation state of the job. Functionally, the two are the same.
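To make the idea concrete, here is a toy, single-process model in plain Java. It is not Flink's API, and the class and method names are hypothetical. A word counter periodically snapshots its state together with the input position it has reached. After a simulated failure it restores the last snapshot and replays the input from that position, so every record ends up counted exactly once.

```java
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy model of checkpoint-based recovery (hypothetical names; not Flink's API). */
class CheckpointSimulation {

    /** A snapshot: a copy of the counts plus the input offset they cover. */
    static final class Snapshot {
        final Map<String, Integer> counts;
        final int offset; // index of the next record to read after restoring

        Snapshot(Map<String, Integer> counts, int offset) {
            this.counts = new HashMap<>(counts); // copy, so later updates do not leak in
            this.offset = offset;
        }
    }

    /**
     * Counts words in input, checkpointing every interval records.
     * If failAt >= 0, the job "crashes" once just before record failAt:
     * live state is discarded, the last snapshot is restored, and the
     * source is rewound to the snapshot's offset and replayed.
     */
    static Map<String, Integer> run(List<String> input, int interval, int failAt) {
        Map<String, Integer> counts = new HashMap<>();
        Snapshot last = new Snapshot(counts, 0); // initial, empty checkpoint
        boolean failed = false;
        int i = 0;
        while (i < input.size()) {
            if (i == failAt && !failed) {
                failed = true;
                counts = new HashMap<>(last.counts); // restore state
                i = last.offset;                     // rewind the source
                continue;
            }
            counts.merge(input.get(i), 1, Integer::sum);
            i++;
            if (i % interval == 0) {
                last = new Snapshot(counts, i); // periodic checkpoint
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> words = List.of("a", "b", "a", "c", "a");
        // Both runs should produce the same counts
        System.out.println(run(words, 2, -1)); // no failure
        System.out.println(run(words, 2, 3));  // failure, then recovery
    }
}
```

Restoring the state alone is not enough: the source must also be rewound to the matching position. This is why Flink's Kafka consumer stores its partition offsets as part of each checkpoint.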

Practice Code

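```java
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.contrib.streaming.state.RocksDBStateBackend;
import org.apache.flink.runtime.state.StateBackend;
import org.apache.flink.streaming.api.TimeCharacteristic;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer011;
import org.apache.flink.util.Collector;

/**
 * Created by shuiyu lei
 * date 2019/6/21
 */
public class TestRock {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment en = StreamExecutionEnvironment.getExecutionEnvironment();
        // Ingestion time: timestamps and watermarks are assigned automatically at the
        // source, so the event-time window below operates on ingestion timestamps
        en.setStreamTimeCharacteristic(TimeCharacteristic.IngestionTime);
        // Trigger a checkpoint every 5000 ms
        en.enableCheckpointing(5000);

        // RocksDB as the state backend, checkpoints stored on HDFS,
        // incremental checkpoints enabled (second argument = true).
        // The constructor can throw IOException; letting it propagate out of main
        // avoids passing a null backend to setStateBackend.
        RocksDBStateBackend rock = new RocksDBStateBackend("hdfs://centos-6:8020/flink/ch/", true);
        // The cast selects the setStateBackend(StateBackend) overload
        // rather than the deprecated AbstractStateBackend one
        en.setStateBackend((StateBackend) rock);

        // KafkaUtil is the author's own wrapper (not shown); getConsumer is assumed
        // to return a consumer that emits String records
        KafkaUtil util = new KafkaUtil();
        FlinkKafkaConsumer011<String> consumer = util.getConsumer("dsf", "te");

        en.addSource(consumer)
                .flatMap(new Tokenizer())
                .keyBy(0)                                              // key by the word (field 0)
                .window(TumblingEventTimeWindows.of(Time.seconds(10))) // 10 s tumbling windows
                .sum(1)                                                // sum the counts (field 1)
                .print();

        en.execute("print dwf log");
    }

    /**
     * Implements the string tokenizer that splits sentences into words as a user-defined
     * FlatMapFunction. The function takes a line (String) and splits it into
     * multiple pairs in the form of "(word,1)" ({@code Tuple2<String, Integer>}).
     */
    public static final class Tokenizer implements FlatMapFunction<String, Tuple2<String, Integer>> {

        @Override
        public void flatMap(String value, Collector<Tuple2<String, Integer>> out) {
            // normalize and split the line
            String[] tokens = value.toLowerCase().split("\\W+");
            // emit the pairs
            for (String token : tokens) {
                if (token.length() > 0) {
                    out.collect(new Tuple2<>(token, 1));
                }
            }
        }
    }
}
```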
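In the pipeline above, `keyBy(0)` groups the `(word, 1)` tuples by field 0 (the word) and `sum(1)` adds up field 1 within each 10-second window. The plain-Java sketch below involves no Flink at all and uses a hypothetical class name; it simply reproduces what one window would emit for a given batch of lines, reusing the Tokenizer's lower-casing and `\\W+` split.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical helper: the word counts one window of the pipeline would emit. */
class WindowWordCount {

    /** Same normalization as Tokenizer: lower-case, split on non-word characters. */
    static Map<String, Integer> countWindow(List<String> linesInWindow) {
        Map<String, Integer> sums = new TreeMap<>(); // sorted for readable output
        for (String line : linesInWindow) {
            for (String token : line.toLowerCase().split("\\W+")) {
                if (token.length() > 0) {
                    // keyBy(0): the word is the key; sum(1): add up the 1s
                    sums.merge(token, 1, Integer::sum);
                }
            }
        }
        return sums;
    }

    public static void main(String[] args) {
        // prints {flink=1, hello=2, state=1}
        System.out.println(countWindow(List.of("Hello Flink", "hello, state!")));
    }
}
```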

Testing Recovery

- Start the job on YARN:

```shell
bin/flink run -m yarn-cluster xx.jar
```

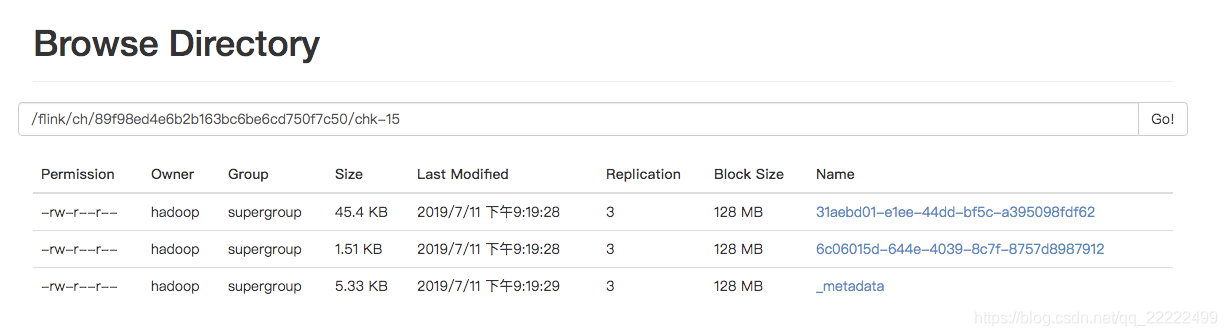

- View the checkpoint directory. Its name ends in 7c50 because it is the job ID, which you can get from the Flink web UI. The N in chk-N keeps increasing, which shows that state is being written there.

- View the job status.

- Kill the job:

```shell
yarn application -kill appid
```

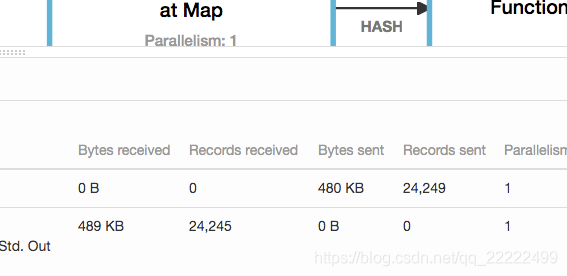

- Restart the job with `-s` pointing at the last checkpoint. You can observe that the job resumes from the last saved state:

```shell
bin/flink run -m yarn-cluster -s hdfs://centos-6:8020/flink/89f98ed4e6b2b163bc6be6cd750f7c50/chk-47 ./exec-jar/flink-test-1.0-SNAPSHOT.jar
```

- Trigger a savepoint:

```shell
bin/flink savepoint 4b2e0be6e97b2e6dca866d6486d3ca0f hdfs://centos-6:8020/flink/save -yid application_1562553530220_0018
```

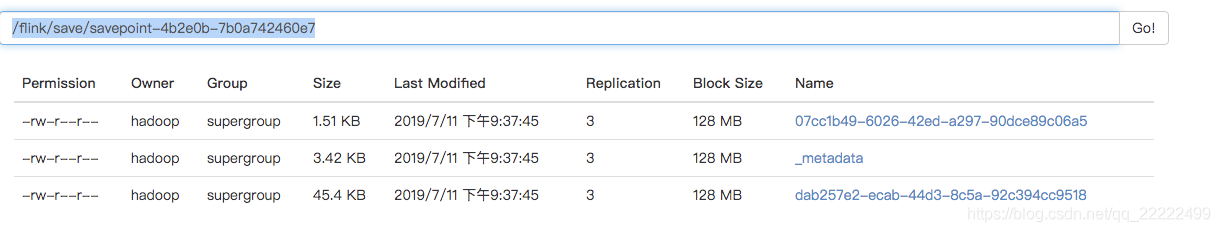

- View the savepoint.

- Restore the job from the savepoint:

```shell
bin/flink run -m yarn-cluster -s hdfs://centos-6:8020/flink/save/savepoint-4b2e0b-7b0a742460e7 xx.jar
```
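When restarting from a checkpoint, `-s` has to name a concrete `chk-N` directory, normally the one with the highest N. The sketch below is a hypothetical helper, not part of Flink: given the bare entry names of a job's checkpoint directory (for example, copied from an HDFS listing), it picks the newest `chk-N`. It compares N numerically, because a plain string sort would rank `chk-9` above `chk-47`, and it skips other entries such as the `shared` and `taskowned` folders. It does not check that the chosen checkpoint actually completed.

```java
import java.util.List;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: choose the newest chk-N entry from a directory listing. */
class LatestCheckpoint {

    private static final Pattern CHK = Pattern.compile("chk-(\\d+)");

    static Optional<String> latest(List<String> entries) {
        String best = null;
        long bestN = -1;
        for (String name : entries) {
            Matcher m = CHK.matcher(name);
            if (!m.matches()) {
                continue; // not a checkpoint directory, e.g. "shared" or "taskowned"
            }
            long n = Long.parseLong(m.group(1)); // numeric, not lexicographic, comparison
            if (n > bestN) {
                bestN = n;
                best = name;
            }
        }
        return Optional.ofNullable(best);
    }

    public static void main(String[] args) {
        List<String> listing = List.of("chk-9", "shared", "chk-47", "taskowned", "chk-46");
        latest(listing).ifPresent(System.out::println); // prints chk-47
    }
}
```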

Summary

- Savepoints are triggered manually, whenever someone wants to take a snapshot of the job, for example before a system upgrade.

- Checkpoints are triggered automatically.

- Savepoints are always full snapshots, while checkpoints also support incremental snapshots.
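The difference between a full and an incremental snapshot can be illustrated with a toy key-value model in plain Java. This is only a conceptual sketch with hypothetical names, not how RocksDB does it (Flink's incremental RocksDB checkpoints upload only the SST files created since the previous checkpoint). A full snapshot copies every entry; an incremental one records only the keys changed since the last snapshot, and restoring replays the chain of deltas in order.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Toy model contrasting full and incremental snapshots (conceptual only; deletions ignored). */
class IncrementalSnapshots {

    private final Map<String, Integer> state = new HashMap<>();
    private final Set<String> dirty = new HashSet<>();            // keys changed since last snapshot
    private final List<Map<String, Integer>> deltas = new ArrayList<>();

    void put(String key, int value) {
        state.put(key, value);
        dirty.add(key);
    }

    /** Full snapshot (savepoint-like): copies every entry. */
    Map<String, Integer> fullSnapshot() {
        return new HashMap<>(state);
    }

    /** Incremental snapshot: stores only entries changed since the previous one. */
    Map<String, Integer> incrementalSnapshot() {
        Map<String, Integer> delta = new HashMap<>();
        for (String key : dirty) {
            delta.put(key, state.get(key));
        }
        dirty.clear();
        deltas.add(delta);
        return delta;
    }

    /** Restore by applying every recorded delta in order, starting from empty state. */
    Map<String, Integer> restoreFromDeltas() {
        Map<String, Integer> restored = new HashMap<>();
        for (Map<String, Integer> delta : deltas) {
            restored.putAll(delta);
        }
        return restored;
    }

    public static void main(String[] args) {
        IncrementalSnapshots s = new IncrementalSnapshots();
        s.put("a", 1);
        s.put("b", 1);
        s.incrementalSnapshot();                                   // first delta holds a and b
        s.put("a", 2);
        System.out.println(s.incrementalSnapshot().size());        // prints 1: only "a" changed
        System.out.println(s.fullSnapshot().equals(s.restoreFromDeltas())); // prints true
    }
}
```

The trade-off shown here is the usual one: each incremental snapshot is small, but a restore must walk the whole chain, whereas a full snapshot is self-contained, which suits a savepoint that may be kept for a long time.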