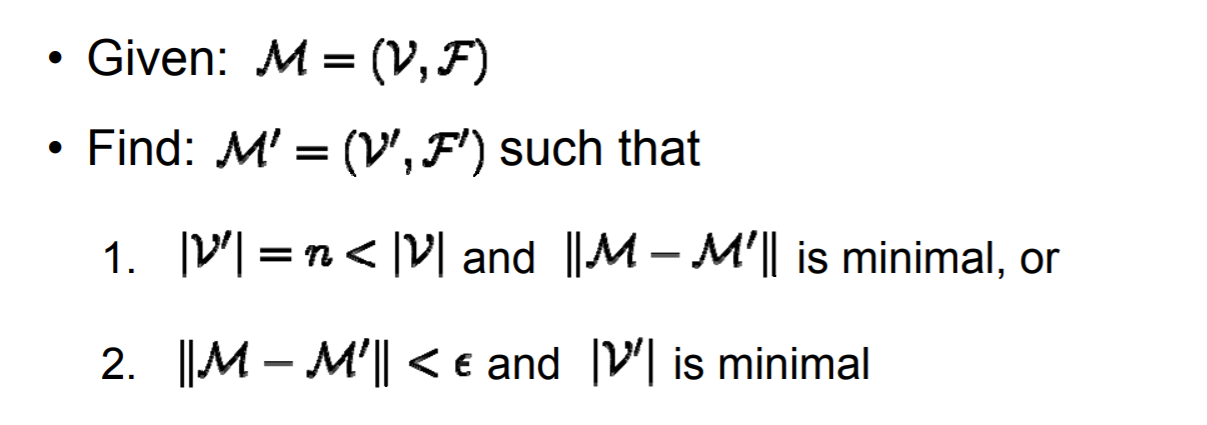

Mesh simplification:

1. Mesh simplification converts a polygonal mesh A into another polygonal mesh B.

Compared with A, B has fewer triangular faces, edges, and vertices.

2. The simplification process is subject to certain constraints: a set of quality criteria controls it. Their purpose is to make the simplified mesh as simple as possible while keeping it as close as possible to the original.

3. Mesh simplification is usually iterative: for example, one edge or one vertex is removed at each step. If each step is recorded, the process can also be reversed, i.e., the original mesh can be recovered from the simplified one.

The main simplification algorithms:

1. Vertex Clustering

Roughly, in my own understanding: all vertices that lie within a given distance (called ε) of each other are merged into a single representative vertex.

This approach is fast: its time complexity is O(n), where n is the number of vertices. (I had doubts about why it is O(n). The reason is that each vertex finds its cell in expected constant time by quantizing its coordinates by ε, so a single pass over the vertices suffices.)

Of course, it has an obvious defect: a triangle may even degenerate into a line or a single vertex.

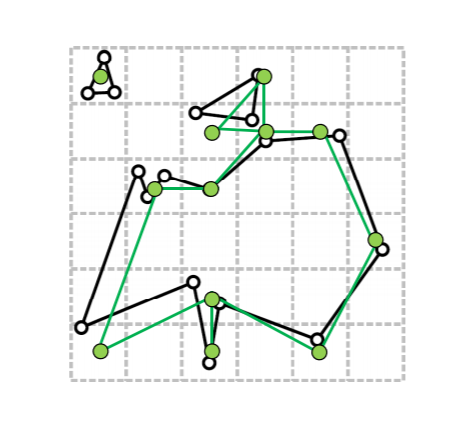

a) Cluster Generation

Enclose the mesh in a large bounding box, then divide the box into small cells, choosing a small value ε > 0 as the cell size. The mesh vertices are then mapped into these cells.

(In the figure, the white dots on the black lines are the original mesh; the green dots are the vertices of the simplified mesh.)
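The cluster-generation step can be sketched in Python as follows. This is a minimal sketch, not code from the referenced slides: the function name `cluster_vertices` and the use of the bounding-box minimum as the grid origin are my own assumptions. It also shows where the O(n) cost comes from: each vertex finds its cell by flooring its coordinates divided by ε, and a dictionary groups the vertices in one pass.

```python
import math
from collections import defaultdict


def cluster_vertices(vertices, eps):
    """Group vertex indices by the grid cell (side length eps) they fall into.

    vertices: list of (x, y, z) tuples; eps: cell size, must be > 0.
    Returns a dict mapping a cell index (i, j, k) -> list of vertex indices.
    """
    if eps <= 0:
        raise ValueError("eps must be positive")
    if not vertices:
        return {}
    # Assumption: the minimum corner of the bounding box is the grid origin.
    origin = [min(v[a] for v in vertices) for a in range(3)]
    clusters = defaultdict(list)
    for idx, v in enumerate(vertices):
        # Quantizing each coordinate is O(1), so the whole pass is O(n).
        cell = tuple(math.floor((v[a] - origin[a]) / eps) for a in range(3))
        clusters[cell].append(idx)
    return dict(clusters)
```

For example, `cluster_vertices([(0.0, 0.0, 0.0), (0.1, 0.05, 0.0), (1.2, 0.0, 0.0), (1.3, 0.1, 0.0)], 1.0)` returns `{(0, 0, 0): [0, 1], (1, 0, 0): [2, 3]}`: the first two vertices share one cell and the last two share another.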

b) Representative Vertex

All vertices in a cell are merged into one, and a representative vertex is chosen for them (the representative vertex is not necessarily one of the original mesh vertices).

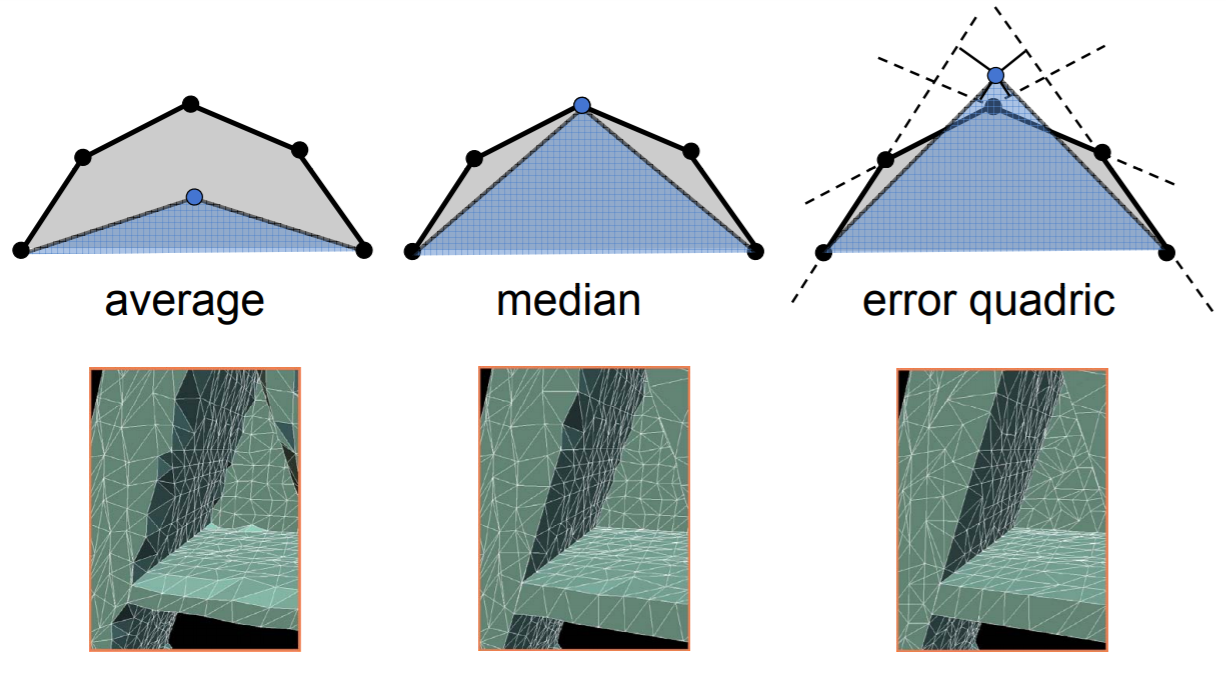

The first method: average

Average the coordinates of all vertices in the cell and use the result as the representative point $v_p$.
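A minimal sketch of the averaging rule, assuming a cell's vertices are given as `(x, y, z)` tuples; the function name is my own.

```python
def average_representative(points):
    """Return the centroid of a cell's vertices as its representative point v_p."""
    n = len(points)
    if n == 0:
        raise ValueError("a cell must contain at least one vertex")
    # Average each coordinate axis independently.
    return tuple(sum(p[a] for p in points) / n for a in range(3))
```

For instance, `average_representative([(0, 0, 0), (2, 0, 0), (1, 3, 0)])` gives `(1.0, 1.0, 0.0)`, a point that is not one of the input vertices.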

The second method: median

Take the vertex closest to the middle of the cell's vertices; unlike the average, the result is always one of the original vertices.
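The median rule can be sketched as below. "Closest to the middle" is my own interpretation: I take the coordinate-wise median of the cell's vertices and return the existing vertex nearest to it. The function name is hypothetical.

```python
import statistics


def median_representative(points):
    """Pick an existing vertex of the cell as v_p.

    Assumption: "closest to the middle" means the vertex nearest to the
    coordinate-wise median of all vertices in the cell.
    """
    if not points:
        raise ValueError("a cell must contain at least one vertex")
    mid = [statistics.median(p[a] for p in points) for a in range(3)]
    # Squared Euclidean distance is enough to compare candidates.
    return min(points, key=lambda p: sum((p[a] - mid[a]) ** 2 for a in range(3)))
```

With `[(0, 0, 0), (10, 0, 0), (4, 1, 0), (6, 0, 0)]` the coordinate-wise median is `(5.0, 0.0, 0.0)`, so the function returns the original vertex `(6, 0, 0)`. An outlier such as `(10, 0, 0)` pulls the average but barely moves the median.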

The third method: Quadric Error Metrics (QEM)

The details of QEM are recorded in another article, where I still have quite a few doubts, but here is a brief outline.

"QEM is an error metric indicating the distance from a vertex to the ideal point."

As I understand it, a cell (as described above) contains several vertices, $v_1, \dots, v_n$, and we ultimately need to choose one representative point $v_p$ for them.

For each vertex $v_i$, we compute the quadratic error $E_i(v_p)$ of using $v_p$ in place of $v_i$, and sum the $n$ errors:

$$E_{\text{sum}}(v_p) = \sum_{i=1}^{n} E_i(v_p)$$

Our task is to find the point $v_p$ that minimizes $E_{\text{sum}}$.
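A minimal sketch of choosing $v_p$ with QEM, in the form introduced by Garland and Heckbert, where a vertex's error is the sum of squared distances to the planes of its adjacent triangles; summing over the cell's vertices gives one list of planes, and $E_{\text{sum}}$ becomes the total squared distance from $v_p$ to those planes. The function names, the Cramer's-rule solver, and the fallback point returned when the 3×3 system is singular are my own assumptions, not taken from the referenced slides.

```python
import math


def plane_from_triangle(p0, p1, p2):
    """Plane (a, b, c, d) through a triangle, with unit normal (a, b, c)."""
    u = [p1[i] - p0[i] for i in range(3)]
    w = [p2[i] - p0[i] for i in range(3)]
    n = [u[1] * w[2] - u[2] * w[1],
         u[2] * w[0] - u[0] * w[2],
         u[0] * w[1] - u[1] * w[0]]
    length = math.sqrt(sum(x * x for x in n))
    if length == 0:
        raise ValueError("degenerate triangle has no plane")
    a, b, c = (x / length for x in n)
    return (a, b, c, -(a * p0[0] + b * p0[1] + c * p0[2]))


def quadric_error(planes, v):
    """E_sum(v): sum of squared distances from point v to every plane."""
    return sum((a * v[0] + b * v[1] + c * v[2] + d) ** 2 for a, b, c, d in planes)


def _det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def qem_representative(planes, fallback):
    """Point v_p minimising quadric_error(planes, v_p).

    Setting the gradient of sum (n.v + d)^2 to zero gives A v = -b with
    A = sum n n^T and b = sum d n. If A is (near-)singular, e.g. all planes
    are parallel, return `fallback` instead (assumed: the cell average).
    """
    A = [[0.0] * 3 for _ in range(3)]
    rhs = [0.0] * 3
    for a, b, c, d in planes:
        n = (a, b, c)
        for i in range(3):
            rhs[i] -= d * n[i]
            for j in range(3):
                A[i][j] += n[i] * n[j]
    det = _det3(A)
    if abs(det) < 1e-12:  # absolute threshold, a simplification
        return tuple(fallback)
    # Cramer's rule: replace column k of A with the right-hand side.
    solution = []
    for k in range(3):
        Ak = [[rhs[i] if j == k else A[i][j] for j in range(3)] for i in range(3)]
        solution.append(_det3(Ak) / det)
    return tuple(solution)
```

For three planes $x = 1$, $y = 2$, $z = 3$ (written as `(1, 0, 0, -1)`, `(0, 1, 0, -2)`, `(0, 0, 1, -3)`), the minimizer is their intersection `(1.0, 2.0, 3.0)` with zero error. With a single plane the system has no unique solution, which is why some fallback is needed.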

The results of the three methods are shown below:

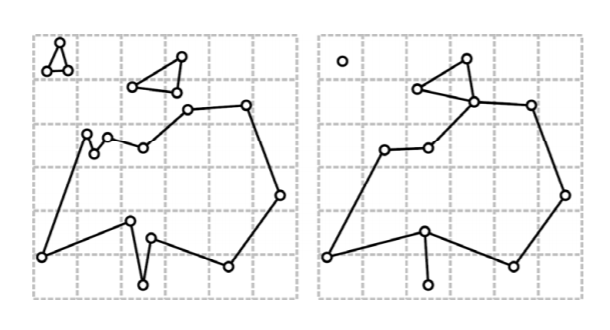

c) Mesh Generation

After the steps above, each cell has at most one representative point $v_p$.

Suppose there are two cells, Cell A and Cell B, with representative points $v_{pA}$ and $v_{pB}$.

Let the vertex set of Cell A be $P = \{p_1, p_2, \dots, p_n\}$ and that of Cell B be $Q = \{q_1, q_2, \dots, q_m\}$. If some edge $\langle p_i, q_j \rangle$ exists, connect $v_{pA}$ and $v_{pB}$.
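The connection rule can be sketched as below. `cell_of` (a mapping from an original vertex index to its cell key, e.g. built from a clustering pass) and both function names are hypothetical. The triangle rule, which keeps a triangle only when its three corners fall into three different cells, is my own addition; it shows where the degenerate triangles mentioned earlier disappear.

```python
def cluster_edges(edges, cell_of):
    """Connect the representatives of two cells whenever an original edge
    <p_i, q_j> links a vertex of one cell to a vertex of the other.

    edges: iterable of (i, j) vertex-index pairs of the original mesh.
    cell_of: mapping from vertex index to cell key.
    Returns a set of frozensets {cell_a, cell_b}.
    """
    result = set()
    for i, j in edges:
        a, b = cell_of[i], cell_of[j]
        if a != b:  # an edge inside one cell collapses to a single point
            result.add(frozenset((a, b)))
    return result


def cluster_triangles(triangles, cell_of):
    """Map triangles to cell triples, dropping degenerate ones and duplicates.

    Duplicates are detected by their set of cells, ignoring orientation.
    """
    seen = set()
    result = []
    for tri in triangles:
        cells = tuple(cell_of[i] for i in tri)
        key = frozenset(cells)
        # Fewer than 3 distinct cells: the triangle became a line or a point.
        if len(key) == 3 and key not in seen:
            seen.add(key)
            result.append(cells)
    return result
```

With `cell_of = {0: "A", 1: "A", 2: "B", 3: "C"}`, the edge `(0, 1)` vanishes because both endpoints lie in cell A, and the triangle `(0, 1, 2)` degenerates into the segment A-B and is dropped.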

d) Topology Changes

(The figure shows the defect mentioned above.)

2. Incremental Decimation

a) The basic idea:

- Repeat:
    - pick mesh region
    - apply decimation operator
- Until no further reduction possible

For a given mesh region, consider every applicable simplification operation (each one produces a new mesh region).

Then, with an error threshold controlling the process (candidate operations whose deviation exceeds the threshold are not considered):

- For each region:
    - evaluate quality after decimation
    - enqueue(quality, region)
- Repeat:
    - get best mesh region from queue
    - if error < ε:
        - apply decimation operator
        - update queue
- Until no further reduction possible

The deviation of each region after simplification is evaluated, and the region is placed in a queue;

the best candidate is then taken from the queue (unfinished).
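The priority-queue loop above can be sketched with Python's `heapq`. To keep the example short and runnable, I apply it to a 2D polyline instead of a triangle mesh: a "region" is an interior vertex, the "decimation operator" removes that vertex, and its error is the distance from the vertex to the segment joining its two neighbours. This is an analogue of the mesh case, not the mesh algorithm itself, and all names are my own. Entries made stale by a removal are skipped lazily through a version counter instead of being updated in place.

```python
import heapq
import math


def point_segment_distance(p, a, b):
    """Euclidean distance from 2D point p to segment ab."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    len2 = dx * dx + dy * dy
    if len2 == 0:
        return math.hypot(px - ax, py - ay)
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def decimate_polyline(points, eps):
    """Greedily remove interior vertices while the best removal error < eps."""
    n = len(points)
    prev = list(range(-1, n - 1))  # left neighbour of each vertex
    nxt = list(range(1, n + 1))    # right neighbour of each vertex
    alive = [True] * n
    version = [0] * n              # bumped whenever a vertex's cost changes

    def cost(i):
        return point_segment_distance(points[i], points[prev[i]], points[nxt[i]])

    # For each region: evaluate quality after decimation, then enqueue.
    heap = [(cost(i), i, 0) for i in range(1, n - 1)]
    heapq.heapify(heap)
    while heap:
        err, i, ver = heapq.heappop(heap)  # best (lowest-error) candidate
        if not alive[i] or ver != version[i]:
            continue  # stale entry left over from an earlier update
        if err >= eps:
            break  # even the best candidate is too costly: stop
        # Apply the decimation operator: unlink vertex i.
        alive[i] = False
        left, right = prev[i], nxt[i]
        nxt[left], prev[right] = right, left
        # Update queue: only the two neighbours' costs changed.
        for j in (left, right):
            if 0 < j < n - 1 and alive[j]:
                version[j] += 1
                heapq.heappush(heap, (cost(j), j, version[j]))
    return [p for p, keep in zip(points, alive) if keep]
```

On `[(0, 0), (1, 0.01), (2, 0), (3, 5), (4, 0)]` with `eps = 0.1`, only the nearly collinear vertex `(1, 0.01)` is removed; the spike at `(3, 5)` survives because removing it would cost a distance of 5.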

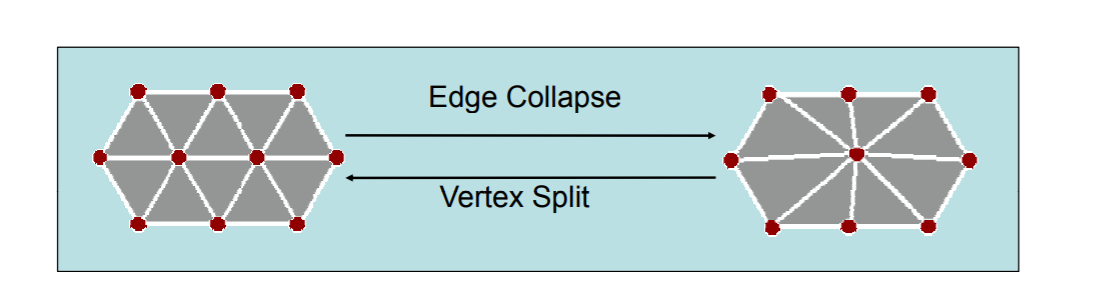

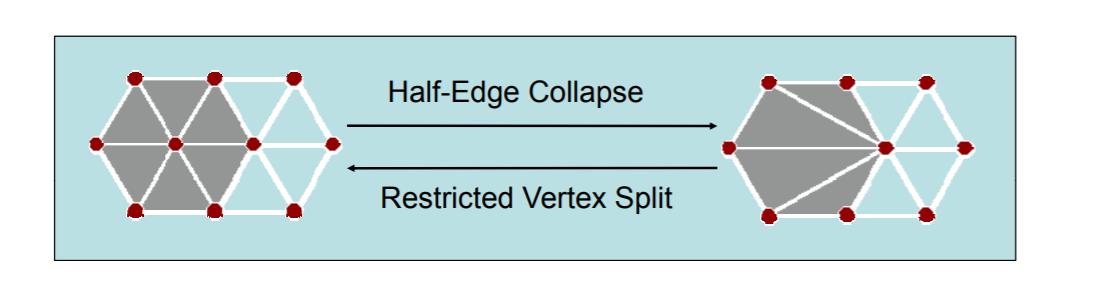

b) Decimation Operators

These include Vertex Removal, Edge Collapse, and Half-Edge Collapse.

The illustrations make these operators clear, so they are not described further here.
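For reference, here is a minimal Python sketch of the two collapse operators on an indexed triangle list (faces as vertex-index triples). The function names are hypothetical. Vertex removal is not shown, because it also has to re-triangulate the hole left behind.

```python
def half_edge_collapse(faces, u, v):
    """Collapse the half-edge u -> v: vertex u is merged into the existing vertex v.

    faces: list of (a, b, c) vertex-index triples.
    Faces that contained both u and v become degenerate and are removed;
    vertex u is no longer referenced afterwards.
    """
    new_faces = []
    for face in faces:
        f = tuple(v if x == u else x for x in face)
        if len(set(f)) == 3:  # drop faces that collapsed to a line or point
            new_faces.append(f)
    return new_faces


def edge_collapse(positions, faces, u, v, new_pos):
    """Full edge collapse: u and v merge into one vertex placed at new_pos.

    Implemented as a half-edge collapse u -> v followed by moving v;
    new_pos could be the edge midpoint or a QEM-optimal point.
    Returns (new_positions, new_faces) without mutating the inputs.
    """
    positions = list(positions)
    positions[v] = new_pos
    return positions, half_edge_collapse(faces, u, v)
```

A half-edge collapse keeps `v` at its original position, so it creates no new vertex positions; a full edge collapse is free to place the merged vertex anywhere. For a square fan `[(0, 1, 4), (1, 2, 4), (2, 3, 4), (3, 0, 4)]`, collapsing the centre vertex 4 into corner 0 leaves the two triangles `(1, 2, 0)` and `(2, 3, 0)`.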

c) Error Metrics

Error metrics are divided into local error metrics and global error metrics.

A local error metric, as I understand it, measures the deviation introduced by a single simplification step, relative to the surface just before that step.

A global error metric measures the error of the simplified region relative to the original mesh (unfinished).

d) Fairness Criteria (as I understand it, these are the constraints to observe during simplification; the explanations below are my own speculation)

Reasonable error: the error introduced by simplification must not be too large (this sounds like stating the obvious).

Triangle shape: the shape of the triangles must not change too much.
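One common way to quantify the triangle-shape criterion (my choice; the notes above do not prescribe a formula) is the normalized quality $q = 4\sqrt{3}\,A / (a^2 + b^2 + c^2)$, where $A$ is the area and $a, b, c$ are the edge lengths. By Weitzenböck's inequality $q \le 1$, with equality exactly for equilateral triangles, and $q = 0$ for degenerate ones. A minimal sketch, with a hypothetical function name:

```python
import math


def triangle_quality(p0, p1, p2):
    """Shape quality q = 4*sqrt(3)*area / (a^2 + b^2 + c^2) of a 3D triangle.

    q is 1 for an equilateral triangle and approaches 0 as the triangle
    becomes a sliver; it never exceeds 1.
    """
    u = [p1[i] - p0[i] for i in range(3)]
    w = [p2[i] - p0[i] for i in range(3)]
    cross = [u[1] * w[2] - u[2] * w[1],
             u[2] * w[0] - u[0] * w[2],
             u[0] * w[1] - u[1] * w[0]]
    area = 0.5 * math.sqrt(sum(c * c for c in cross))
    # Sum of squared edge lengths |p1-p0|^2 + |p2-p0|^2 + |p2-p1|^2.
    sq = (sum(c * c for c in u) + sum(c * c for c in w)
          + sum((p2[i] - p1[i]) ** 2 for i in range(3)))
    if sq == 0:
        return 0.0  # all three points coincide
    return 4.0 * math.sqrt(3.0) * area / sq
```

A right isosceles triangle scores about 0.866 and three collinear points score 0. A decimation step could then be rejected if any triangle it produces falls below some threshold; the threshold value would be a tuning choice.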

3. Comparison of the two methods

Vertex Clustering

a) Time complexity O(n), so processing is fast; however, the simplification is hard to control (I have doubts about this)

b) The topology may change, and non-manifold meshes may appear

Incremental Decimation

a) Good balance between speed and mesh quality (I have doubts about this)

b) Explicit control over the mesh topology

c) Restricting normal deviation improves mesh quality

4. References

http://graphics.stanford.edu/courses/cs468-10-fall/LectureSlides/08_Simplification.pdf

https://pages.mtu.edu/~shene/COURSES/cs3621/SLIDES/Simplification.pdf