PG

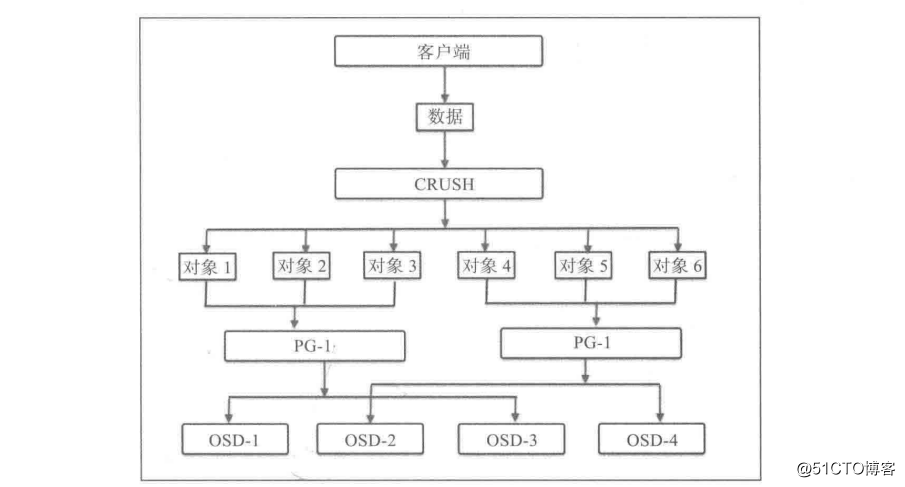

When a Ceph cluster receives a request to store data, the data is distributed across placement groups (PGs). The data is first broken down into a set of objects. A hash is then computed on the object name and, together with information such as the replication level and the total number of PGs in the system, the result yields a PG ID. A PG is a logical collection of objects that is replicated to different OSDs to provide reliability for the storage system. Depending on the replication level of the Ceph pool, each PG's data is copied and distributed to multiple OSDs in the cluster. A PG can be thought of as a logical container that holds many objects and that is itself mapped to multiple OSDs. PGs are essential to the scalability and performance of a Ceph storage system.
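The object-to-PG step can be sketched in a few lines. This is only an illustration under stated assumptions: Ceph uses its own rjenkins hash (visible as object_hash rjenkins in the pool dump) and a more careful modulo, whereas this sketch substitutes CRC32 and a plain modulo. The function name object_to_pg is hypothetical.

```python
import zlib


def object_to_pg(pool_id: int, object_name: str, pg_num: int) -> str:
    """Map an object name to a PG ID written as '<pool id>.<PG index in hex>'."""
    # Assumption: CRC32 stands in for Ceph's rjenkins hash, for illustration only.
    name_hash = zlib.crc32(object_name.encode("utf-8"))
    # Fold the hash into the range [0, pg_num).
    pg_index = name_hash % pg_num
    return f"{pool_id}.{pg_index:x}"


if __name__ == "__main__":
    for name in ("obj-a", "obj-b", "obj-c"):
        print(name, "->", object_to_pg(3, name, 128))
```

The point of the sketch is that the mapping is deterministic: the same object name in the same pool always lands in the same PG, so no lookup table per object is needed.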

Without PGs, it would be very difficult to manage and track millions of objects that are replicated and spread over thousands of OSDs. Managing those objects without PGs would also carry a heavy cost in computing resources. Instead of managing every object individually, the system only has to manage PGs, each of which contains a large number of objects. This makes Ceph a more manageable and less complex system. Each PG requires a certain amount of system resources (such as CPU and memory), because each PG has to manage multiple objects. The number of PGs in a cluster should therefore be calculated carefully. In general, increasing the number of PGs in a cluster reduces the load on each OSD, but the increase should be made in a controlled way. The recommendation is 50 to 100 PGs per OSD, which avoids consuming too many resources on an OSD node. As the amount of stored data grows, the number of PGs also needs to be adjusted to the size of the cluster. When a device is added to or removed from a cluster, most PGs stay where they are; CRUSH manages the relocation of PGs across the cluster. PGP is the total number of PGs used for placement purposes, and it should be equal to the total number of PGs.

Calculating the number of PGs

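Calculating the right number of PGs is a critical step in building an enterprise-grade Ceph storage cluster, because the PG count can improve or degrade storage performance to a certain extent. The formula for the total number of PGs in a Ceph cluster is as follows: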

Total number of PGs = (Number of OSDs × 100) / maximum replica count

The result must be rounded up to the nearest power of 2. For example, if a Ceph cluster has 160 OSDs and the replica count is 3, the formula gives 5333.3, which rounds up to the nearest power of 2: 8192 PGs. We should also calculate the number of PGs for each pool in the Ceph cluster, using the following formula:

Number of PGs per pool = ((Total number of OSDs × 100) / maximum replica count) / number of pools

Using the previous example again: with 160 OSDs, a replica count of 3, and 3 pools in total, the formula above gives 1777.7 PGs per pool, which rounds up to the nearest power of 2: 2048 PGs per pool. Balancing the number of PGs in each pool against the number of PGs on each OSD is important for reducing variance across OSDs and for avoiding a slow recovery process.
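Both formulas are simple enough to check with a short script. The following is a minimal sketch; the function names and the target_per_osd parameter (the constant 100 in the formulas) are hypothetical names chosen for this example.

```python
import math


def round_up_to_power_of_two(value: float) -> int:
    """Return the smallest power of 2 that is greater than or equal to value."""
    n = max(1, math.ceil(value))
    return 1 << (n - 1).bit_length()


def total_pg_count(num_osds: int, max_replicas: int, target_per_osd: int = 100) -> int:
    """Total PGs = (OSDs x 100) / max replica count, rounded up to a power of 2."""
    return round_up_to_power_of_two(num_osds * target_per_osd / max_replicas)


def pg_count_per_pool(num_osds: int, max_replicas: int, num_pools: int,
                      target_per_osd: int = 100) -> int:
    """PGs per pool = total formula divided by pool count, rounded up to a power of 2."""
    raw = num_osds * target_per_osd / max_replicas / num_pools
    return round_up_to_power_of_two(raw)


if __name__ == "__main__":
    print(total_pg_count(160, 3))        # 5333.3 rounds up to 8192
    print(pg_count_per_pool(160, 3, 3))  # 1777.7 rounds up to 2048
```

Note that the rounding always goes up, so the resulting PG count can be well above the raw value.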

Modifying PG and PGP

If you manage a Ceph storage cluster, you may sometimes need to change the PG and PGP values of a pool. Before doing so, let's look at what PGP is. PGP is the number of PGs used for placement purposes, and its value should match the total number of PGs (that is, pg_num). For a Ceph pool, if you increase the number of PGs (the value of pg_num), you should also set pgp_num to the same value so that the cluster can begin rebalancing. The rebalancing mechanism works as follows. The pg_num parameter defines the number of PGs, and these PGs are mapped to OSDs. When pg_num is increased for a pool, each PG in that pool splits in two, but the resulting PGs remain mapped to their original OSDs. Up to this point, Ceph has not started rebalancing. When you then increase pgp_num for the pool, PGs begin to migrate from their original OSDs to other OSDs, and rebalancing starts in earnest. PGP therefore plays an important role in cluster rebalancing. Now, let's see how to change pg_num and pgp_num:
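The difference between the two parameters can be illustrated with a toy model. This is a sketch under loud assumptions: toy_crush is a hypothetical round-robin stand-in for CRUSH, and the placement seed is simplified to the PG index modulo pgp_num. It is not how Ceph computes placement, but it reproduces the behaviour described above.

```python
def toy_crush(seed: int, osd_ids: list[int], replicas: int) -> list[int]:
    """Hypothetical stand-in for CRUSH: pick consecutive OSDs starting at the seed."""
    start = seed % len(osd_ids)
    return [osd_ids[(start + i) % len(osd_ids)] for i in range(replicas)]


def pg_to_osds(pg_index: int, pgp_num: int, osd_ids: list[int], replicas: int = 2) -> list[int]:
    """Place a PG using a seed folded into the pgp_num range (a simplification)."""
    return toy_crush(pg_index % pgp_num, osd_ids, replicas)


if __name__ == "__main__":
    osds = list(range(9))
    # pg_num raised from 4 to 8 while pgp_num is still 4:
    # the new PG 5 is placed on the same OSDs as PG 1, so no data moves yet.
    print("PG 1:", pg_to_osds(1, 4, osds), "PG 5:", pg_to_osds(5, 4, osds))
    # pgp_num raised to 8: PG 5 gets its own placement and data starts to migrate.
    print("PG 1:", pg_to_osds(1, 8, osds), "PG 5:", pg_to_osds(5, 8, osds))
```

In this model, raising pg_num alone creates more PGs without changing where any data lives; only raising pgp_num gives the new PGs their own placement.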

1) Get the current PG and PGP values:

[root@node140 ~]# ceph osd pool get rbd pg_num

pg_num: 128

[root@node140 ~]# ceph osd pool get rbd pgp_num

pgp_num: 128

2) Use the following command to check the replica count of the pool; look for the size value (rep size) in the output:

[root@node140 ~]# ceph osd dump | grep size

pool 3 'rbd' replicated size 3 min_size 1 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 autoscale_mode warn last_change 100 flags hashpspool,selfmanaged_snaps stripe_width 0 application rbd

pool 4 'remote_rbd' replicated size 3 min_size 1 crush_rule 0 object_hash rjenkins pg_num 128 pgp_num 128 autoscale_mode warn last_change 112 flags hashpspool,selfmanaged_snaps stripe_width 0 application rbd

3) Calculate the new number of PGs from the following parameters, using the formula given earlier:

Number of OSDs = 9, pool replica count (rep size) = 2, number of pools = 3

According to the previous formula, the number of PGs per pool is 150, which rounds up to the nearest power of 2: 256.

4) Modify the PG and PGP values of the pool:

[root@node140 ~]# ceph osd pool set rbd pg_num 256

set pool 3 pg_num to 256

[root@node140 ~]# ceph osd pool set rbd pgp_num 256

set pool 3 pgp_num to 256

5) Similarly, modify the PG and PGP values of the metadata pool, as was done for the rbd pool:

[root@node140 ~]# ceph osd pool set metadata pg_num 256

set pool 3 pg_num to 256

[root@node140 ~]# ceph osd pool set metadata pgp_num 256

set pool 3 pgp_num to 256
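When several pools need the same change, the two commands from steps 4 and 5 can be generated rather than typed by hand. The following is a minimal sketch: the helper name pool_resize_commands is hypothetical, and the power-of-two check is a choice made for this example to match the rounding rule above. The script only prints the commands; it does not run them.

```python
import shlex


def pool_resize_commands(pool: str, new_pg_num: int) -> list[list[str]]:
    """Build the commands that set pg_num and then pgp_num to the same value."""
    # Design choice for this sketch: reject values that are not a power of 2.
    if new_pg_num < 1 or new_pg_num & (new_pg_num - 1):
        raise ValueError(f"{new_pg_num} is not a power of 2")
    return [
        ["ceph", "osd", "pool", "set", pool, "pg_num", str(new_pg_num)],
        ["ceph", "osd", "pool", "set", pool, "pgp_num", str(new_pg_num)],
    ]


if __name__ == "__main__":
    for pool in ("rbd", "metadata"):
        for command in pool_resize_commands(pool, 256):
            # Print only; review the output before running it on an admin node.
            print(shlex.join(command))
```

Keeping pg_num first and pgp_num second mirrors the order in the steps above, so that PGs are split before rebalancing begins.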

Note: This content references the "Ceph Distributed Storage Study Guide".