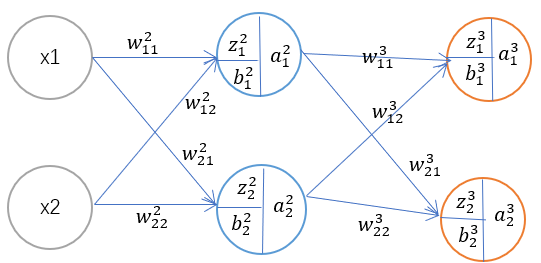

The four backpropagation formulas:

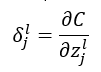

The ultimate goal of backpropagation is to find the weights w and biases b that minimize the cost C. To make the calculation easier, we introduce the error of a neuron, δ_j^l, which relates the cost C to the neuron's weighted input z.

The error of a neuron is defined as the partial derivative of the cost C (the total error) with respect to z, that is δ_j^l = ∂C/∂z_j^l, where l is the index of the layer in the network and j is the index of the neuron within that layer.

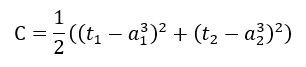

The cost function (loss function) used here is the squared error, so C is equal to:

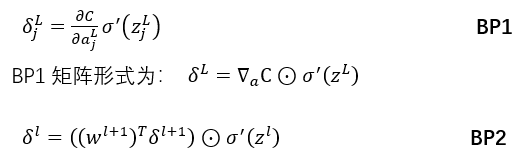
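As a rough illustration of the squared-error cost, here is a minimal Python sketch. It assumes the common form C = 1/2 · Σ_j (y_j − a_j)² for a single training example; the factor 1/2, the function name `squared_error_cost`, and the sample numbers are assumptions made for this example and are not taken from the figure.

```python
def squared_error_cost(outputs, targets):
    """Return C = 1/2 * sum_j (y_j - a_j)^2 for one training example.

    Assumption: the 1/2 factor is the usual convention that simplifies
    the derivative; the original figure may use a different scaling.
    """
    if len(outputs) != len(targets):
        raise ValueError("outputs and targets must have the same length")
    return 0.5 * sum((y - a) ** 2 for a, y in zip(outputs, targets))


# Hypothetical activations of a two-neuron output layer and their targets.
cost = squared_error_cost([0.8, 0.3], [1.0, 0.0])
print(cost)
```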

BP1

BP1 gives the error of the neurons in the last layer of the network. The equation below uses BP1 to find the error of a single neuron in the last layer (the output layer).

BP1 follows from the definition of the error by applying the chain rule:

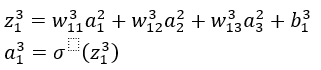

BP1 applies to the last layer L of the network. In our network the last layer is layer 3, so L = 3.
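BP1 can be sketched in a few lines of Python. The sketch assumes a sigmoid activation and the squared-error cost with a 1/2 factor, so that ∂C/∂a_j = a_j − y_j. The helper names and the input values are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def output_layer_error(z_out, targets):
    """BP1 for the squared-error cost: delta_j^L = (a_j^L - y_j) * sigma'(z_j^L)."""
    return [(sigmoid(z) - y) * sigmoid_prime(z) for z, y in zip(z_out, targets)]


# Hypothetical weighted inputs z^3 of a two-neuron output layer and their targets.
delta_out = output_layer_error([0.0, 2.0], [1.0, 0.0])
print(delta_out)
```

A negative error means the neuron's output is below its target, a positive one that it is above.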

BP2

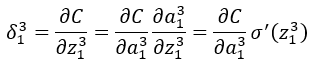

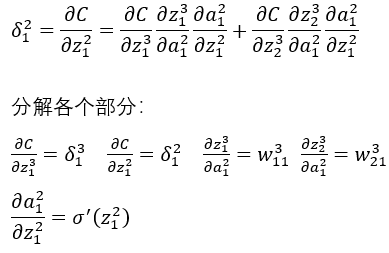

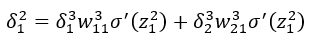

BP1 and BP2 are similar. The difference is that BP1 gives the error of the neurons in the last layer, while BP2 gives the error of the neurons in every layer before layer L. The following equation uses BP2 to find the error of the first neuron in the second layer:

Similarly, it can be derived with the chain rule:

We have:

So we get:

Written in matrix form, the errors of the second-layer neurons are:
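The matrix form of BP2, δ^l = ((W^{l+1})^T δ^{l+1}) ⊙ σ'(z^l), can be sketched as follows. This is an illustrative version that assumes a sigmoid activation and the indexing convention that `weights_next[j][k]` connects neuron k of layer l to neuron j of layer l+1. All numbers are made up for the example.

```python
import math


def sigmoid_prime(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def hidden_layer_error(weights_next, delta_next, z_hidden):
    """BP2: delta^l = ((W^{l+1})^T delta^{l+1}) * sigma'(z^l), element-wise."""
    errors = []
    for k, z in enumerate(z_hidden):
        # Row k of the transposed weight matrix times the next layer's error.
        back = sum(weights_next[j][k] * delta_next[j] for j in range(len(delta_next)))
        errors.append(back * sigmoid_prime(z))
    return errors


# Hypothetical layer-3 weights, layer-3 errors, and layer-2 weighted inputs.
w3 = [[0.5, -0.5], [1.0, 2.0]]
delta3 = [0.2, 0.1]
z2 = [0.0, 0.0]
delta2 = hidden_layer_error(w3, delta3, z2)
print(delta2)
```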

Once the errors have been obtained with BP1 and BP2, substituting them into BP3 and BP4 gives the gradients of C with respect to w and b.

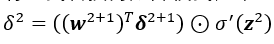

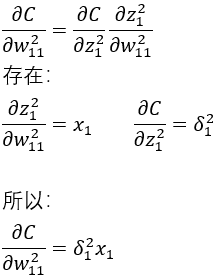

BP3

This formula gives the partial derivative of the cost C with respect to a weight. Once the error has been obtained from BP2, substituting it into BP3 gives the result.
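In code, BP3 amounts to an outer product of a layer's error with the activations of the previous layer. The sketch below assumes the usual form ∂C/∂w_jk^l = a_k^{l−1} · δ_j^l; the function name and the input values are hypothetical.

```python
def weight_gradients(delta, activations_prev):
    """BP3: dC/dw_jk^l = a_k^{l-1} * delta_j^l, returned as a matrix indexed [j][k]."""
    return [[d * a for a in activations_prev] for d in delta]


# Hypothetical errors of a two-neuron layer and activations of a three-neuron previous layer.
grad_w = weight_gradients([0.05, 0.025], [1.0, 0.5, 0.0])
print(grad_w)
```

The result has one row per neuron in the current layer and one column per neuron in the previous layer, matching the shape of the weight matrix.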

BP4

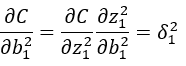

BP4 gives the partial derivative of the cost C with respect to a bias b. As with BP3, substitute the error obtained from BP2 into BP4.

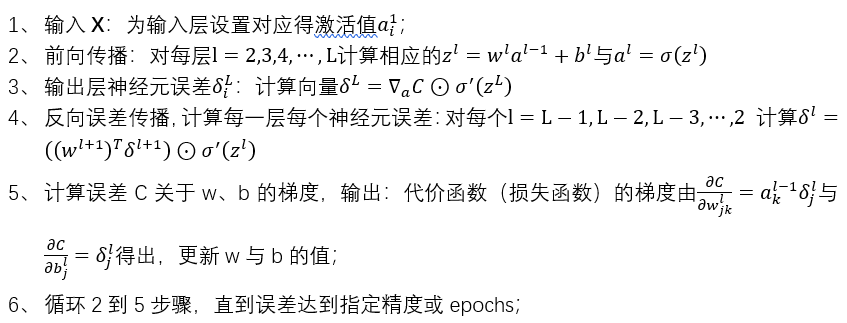
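One way to convince yourself that BP4 holds (∂C/∂b equals the neuron's error δ) is to compare it with a numerical derivative. The sketch below does this for a single sigmoid neuron with the squared-error cost. The single-neuron setup, the function names, and the numbers are assumptions chosen to keep the example short.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cost(w, b, x, y):
    """Squared-error cost of a single sigmoid neuron: C = 1/2 * (a - y)^2."""
    return 0.5 * (sigmoid(w * x + b) - y) ** 2


def bias_gradient(w, b, x, y):
    """BP4: dC/db equals the neuron's error delta = (a - y) * sigma'(z)."""
    a = sigmoid(w * x + b)
    return (a - y) * a * (1.0 - a)


def numeric_bias_gradient(w, b, x, y, eps=1e-6):
    """Central-difference approximation of dC/db."""
    return (cost(w, b + eps, x, y) - cost(w, b - eps, x, y)) / (2.0 * eps)


# Hypothetical weight, bias, input, and target.
w, b, x, y = 0.6, -0.2, 1.5, 1.0
analytic = bias_gradient(w, b, x, y)
numeric = numeric_bias_gradient(w, b, x, y)
print(analytic, numeric)
```

The two printed values should agree to several decimal places.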

Backpropagation provides a method for computing the gradient of the cost function (loss function). The steps are as follows:

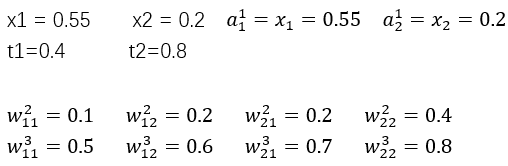

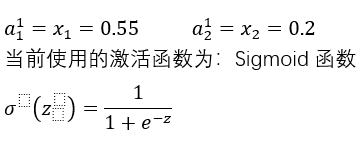
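The steps above can be combined into one function. The following is a minimal sketch rather than a reference implementation: it assumes sigmoid activations, the squared-error cost with a 1/2 factor, a single training example, and plain Python lists for the matrices. The network shape and all numbers are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def backprop(weights, biases, x, y):
    """Return (grad_w, grad_b) of C = 1/2 * sum (a - y)^2 for one example.

    weights[i][j][k] connects neuron k of one layer to neuron j of the next.
    """
    # Forward pass: store the activations of every layer.
    activations = [x]
    for w, b in zip(weights, biases):
        prev = activations[-1]
        z = [sum(wjk * ak for wjk, ak in zip(row, prev)) + bj for row, bj in zip(w, b)]
        activations.append([sigmoid(v) for v in z])

    # BP1: output error, using sigma'(z) = a * (1 - a) for the sigmoid.
    out = activations[-1]
    delta = [(a - t) * a * (1.0 - a) for a, t in zip(out, y)]

    grad_w = [None] * len(weights)
    grad_b = [None] * len(biases)
    for i in reversed(range(len(weights))):
        prev = activations[i]
        grad_b[i] = delta[:]                                # BP4
        grad_w[i] = [[d * a for a in prev] for d in delta]  # BP3
        if i > 0:
            # BP2: propagate the error back to the previous layer.
            delta = [
                sum(weights[i][j][k] * delta[j] for j in range(len(delta)))
                * prev[k] * (1.0 - prev[k])
                for k in range(len(prev))
            ]
    return grad_w, grad_b


# Hypothetical 2-2-2 network and one training example.
weights = [[[0.1, -0.2], [0.4, 0.3]], [[0.5, -0.6], [0.7, 0.8]]]
biases = [[0.0, 0.1], [-0.1, 0.2]]
x, y = [0.5, -1.0], [1.0, 0.0]
grad_w, grad_b = backprop(weights, biases, x, y)
print(grad_w, grad_b)
```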

Worked calculation

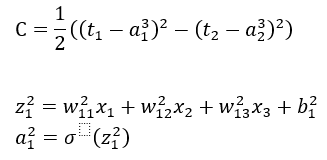

First, forward propagation

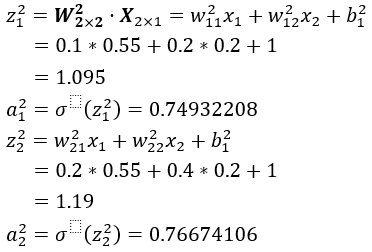

1. Compute the second layer

In matrix form:

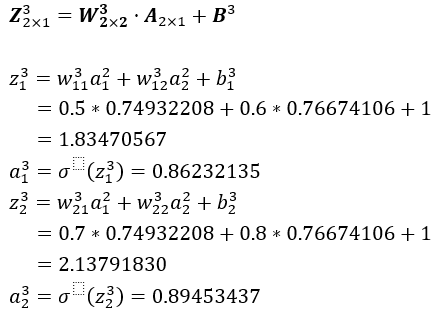

2. Compute the third layer

In matrix form:
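The forward pass through the second and third layers can be sketched as below. The example assumes the matrix form z = W·a_prev + b followed by a sigmoid activation. The 2-2-2 network shape and all weights, biases, and inputs are hypothetical values, not the ones from the figures.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(weights, biases, inputs):
    """Return (z, a) for one layer: z = W a_prev + b, a = sigmoid(z)."""
    z = [sum(w * a for w, a in zip(row, inputs)) + b for row, b in zip(weights, biases)]
    return z, [sigmoid(v) for v in z]


# Hypothetical input and parameters.
x = [1.0, 0.0]
w2, b2 = [[0.0, 0.5], [1.0, -1.0]], [0.0, -1.0]
w3, b3 = [[1.0, 1.0], [2.0, -2.0]], [-1.0, 0.0]

z2, a2 = layer_forward(w2, b2, x)   # second layer
z3, a3 = layer_forward(w3, b3, a2)  # third (output) layer
print(a2, a3)
```

Keeping both z and a for every layer is useful because the later steps need them to evaluate σ'(z) and the weight gradients.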

Second, compute the error

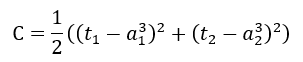

The squared-error loss function (cost function) is used here.

Third, compute the error of the output-layer neurons

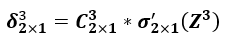

In matrix form:

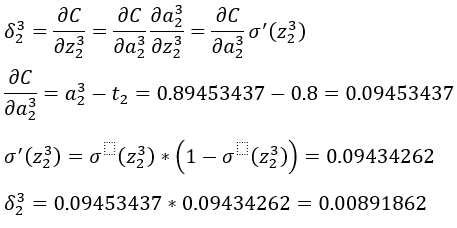

Error of the first output-layer neuron, δ_1^3:

Error of the second output-layer neuron, δ_2^3:

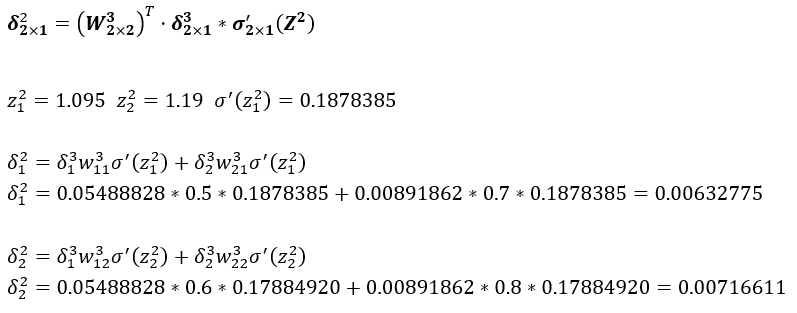

Fourth, compute the error of the neurons in the remaining layers

In matrix form:

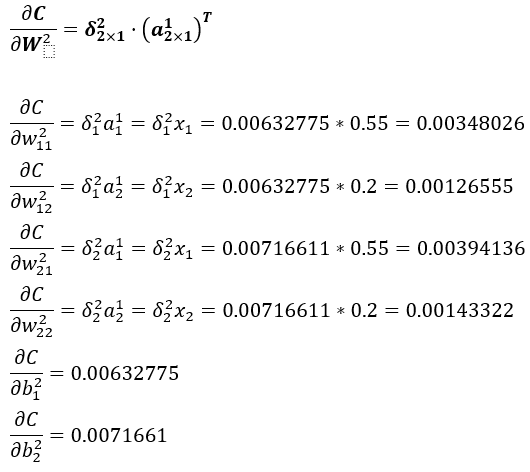

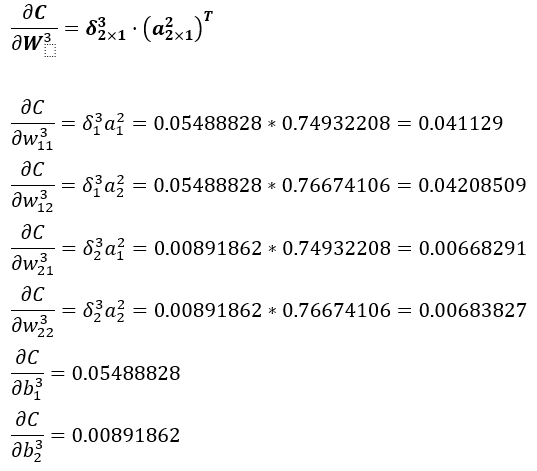

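Fifth, compute the gradient of the cost C with respect to w and b

This step uses formulas BP3 and BP4. After the errors have been computed with BP1 and BP2, substitute them into these formulas to obtain the gradient of C with respect to w and b.

In matrix form:

In matrix form: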

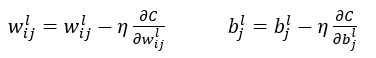

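Update formulas for w and b:

After computing the partial derivative of the cost C with respect to every weight and every bias, substitute the values into the formulas above to update each w and b. After the update, if the error has not yet reached the specified precision and the specified number of epochs has not been reached, repeat steps 2-5 above until the error is acceptable or the specified number of epochs is reached.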
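To illustrate the update-and-repeat loop, here is a small sketch for a single sigmoid neuron. It assumes the standard gradient-descent update w ← w − η · ∂C/∂w and b ← b − η · ∂C/∂b with the squared-error cost. The learning rate η, the tolerance, the epoch limit, and the data are hypothetical choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(x, y, w, b, learning_rate=0.5, max_epochs=10000, tolerance=1e-4):
    """Gradient descent on C = 1/2 * (a - y)^2 for one sigmoid neuron.

    Stops when the cost drops below `tolerance` or after `max_epochs` updates.
    Returns (w, b, cost, epochs_run).
    """
    for epoch in range(max_epochs):
        a = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)  # forward pass
        cost = 0.5 * (a - y) ** 2                               # error
        if cost < tolerance:
            return w, b, cost, epoch
        delta = (a - y) * a * (1.0 - a)                         # BP1
        # BP3 and BP4 give the gradients; subtract them scaled by the learning rate.
        w = [wi - learning_rate * delta * xi for wi, xi in zip(w, x)]
        b = b - learning_rate * delta
    a = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return w, b, 0.5 * (a - y) ** 2, max_epochs


# Hypothetical input, target, and initial parameters.
w, b, final_cost, epochs = train_neuron([1.0, 0.5], 0.9, [0.1, -0.1], 0.0)
print(final_cost, epochs)
```

The same loop structure applies to a multi-layer network: only the forward pass and the gradient computation grow, while the update rule and the stopping conditions stay the same.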