First, what is a neural network? The human brain consists of roughly 100 billion cells called neurons, connected to one another by synapses. If enough of a neuron's synaptic inputs fire, that neuron fires as well. We call this process "thinking."

We can model this process by writing a neural network on a computer. There is no need to simulate the brain at the biomolecular level; we only need to model its higher-level rules. We use a mathematical tool called the matrix (a two-dimensional table of numbers), and, to keep things simple, we model just a single neuron with three inputs and one output.

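We will train the neuron to solve the problem below. The first four examples are called the training set.

| | Input 1 | Input 2 | Input 3 | Output |
|---|---|---|---|---|
| Example 1 | 0 | 0 | 1 | 0 |
| Example 2 | 1 | 1 | 1 | 1 |
| Example 3 | 1 | 0 | 1 | 1 |
| Example 4 | 0 | 1 | 1 | 0 |
| New situation | 1 | 0 | 0 | ? |

Can you work out the pattern? You may notice that the output is always equal to the value in the leftmost input column, so the '?' should be 1.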
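To make the pattern concrete, here is a minimal sketch that encodes the four training examples as plain Python lists and checks that each output equals the leftmost input. The variable names are illustrative choices, not taken from the original code.

```python
# The four training examples: each row holds (input1, input2, input3).
# Variable names are illustrative, not from the original article.
training_inputs = [
    [0, 0, 1],
    [1, 1, 1],
    [1, 0, 1],
    [0, 1, 1],
]
training_outputs = [0, 1, 1, 0]

# The hidden rule: the output always equals the leftmost input.
pattern_holds = all(row[0] == out for row, out in zip(training_inputs, training_outputs))
print("Output equals leftmost input:", pattern_holds)

# Applying the same rule to the new situation [1, 0, 0].
new_situation = [1, 0, 0]
expected_answer = new_situation[0]
print("Expected answer for", new_situation, "->", expected_answer)
```

Of course, the point of the neural network is that it has to discover this rule from the examples on its own rather than being told.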

Training process

But how do we teach our neuron to answer correctly? We give each input a weight, which can be a positive or negative number. An input with a large positive (or large negative) weight has a strong effect on the neuron's output. Before we start, we set each weight to a random number, and then we begin the training process:

- Take the inputs from a training example, adjust them by the weights, and pass them through a special formula to calculate the neuron's output.

- Calculate the error, i.e. the difference between the neuron's output and the expected output of the training example.

- Adjust the weights slightly, depending on the error.

- Repeat this process 100,000 times.
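The four steps above can be sketched as a short NumPy loop. This is a minimal illustration under a few assumptions: it uses the four training examples, a fixed random seed, the weight adjustment formula described later in this article, and only 10,000 iterations to keep the run quick. The helper names are hypothetical. It records the mean absolute error before and after training so you can see the weights improving.

```python
import numpy as np


def sigmoid(x):
    # Squash any real number into the range (0, 1).
    return 1 / (1 + np.exp(-x))


# The four training examples: one row per example, outputs as a 4 x 1 column.
inputs = np.array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
targets = np.array([[0, 1, 1, 0]]).T

rng = np.random.default_rng(1)          # seeded so the run is repeatable
weights = 2 * rng.random((3, 1)) - 1    # random starting weights in [-1, 1)


def mean_abs_error(w):
    # Average distance between the neuron's outputs and the expected outputs.
    return float(np.mean(np.abs(targets - sigmoid(inputs @ w))))


error_before = mean_abs_error(weights)
for _ in range(10_000):                 # step 4: repeat (10,000 times here)
    output = sigmoid(inputs @ weights)  # step 1: weighted inputs through the formula
    error = targets - output            # step 2: expected output minus actual output
    weights += inputs.T @ (error * output * (1 - output))  # step 3: adjust slightly
error_after = mean_abs_error(weights)

print(f"mean error before: {error_before:.4f}, after: {error_after:.4f}")
```

Each pass processes all four examples at once, which is why the inputs and weights are stored as matrices.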

Eventually the weights settle at values that fit the training set well. If the neuron is then given a new situation that follows the same pattern, it should make a good prediction.

This process is called backpropagation (BP).

Formula for the neuron's output

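You might be wondering what the special formula for calculating the neuron's output is. First, we take the weighted sum of the neuron's inputs:

$$\sum_i \text{weight}_i \cdot \text{input}_i = \text{weight}_1 \cdot \text{input}_1 + \text{weight}_2 \cdot \text{input}_2 + \text{weight}_3 \cdot \text{input}_3$$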

Next, we normalize this sum so that the result lies between 0 and 1. For this we use a mathematically convenient function, the Sigmoid function:

$$\frac{1}{1 + e^{-x}}$$

Plotted on a graph, the Sigmoid function draws an S-shaped curve.

Substituting the first equation into the second gives the final formula for the neuron's output:

$$\text{output} = \frac{1}{1 + e^{-\sum_i \text{weight}_i \cdot \text{input}_i}}$$
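As a quick check of these formulas, here is a sketch of a single neuron's output in NumPy. The weights are arbitrary values chosen for illustration, not trained ones. It computes the weighted sum, then squashes it with the Sigmoid function.

```python
import numpy as np


def sigmoid(x):
    # 1 / (1 + e^(-x)): maps any real number into the open interval (0, 1).
    return 1 / (1 + np.exp(-x))


def neuron_output(weights, inputs):
    # Weighted sum: weight_1*input_1 + weight_2*input_2 + weight_3*input_3.
    weighted_sum = np.dot(weights, inputs)
    return sigmoid(weighted_sum)


example_weights = np.array([0.5, -0.25, 1.0])  # illustrative values only
example_inputs = np.array([1, 1, 0])
print(neuron_output(example_weights, example_inputs))  # sigmoid(0.25)

# The S shape: large negative sums give ~0, zero gives exactly 0.5, large positive sums give ~1.
print(sigmoid(np.array([-6.0, 0.0, 6.0])))
```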

Weight adjustment formula

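We adjust the weights continuously during training. But by how much? We can use the "Error Weighted Derivative" formula:

$$\text{adjustment} = \text{error} \cdot \text{input} \cdot \text{SigmoidCurveGradient}(\text{output})$$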

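Why this formula? First, we want the adjustment to be proportional to the size of the error. Second, we multiply by the input, which is either 0 or 1: if the input is 0, the weight is not adjusted. Finally, we multiply by the gradient of the Sigmoid curve (Figure 4). When the output is close to 0 or 1, the neuron is already confident and the curve is flat there, so the weights change only a little; near an output of 0.5 the curve is steepest, and the weights change the most.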

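The gradient of the Sigmoid curve can be found by taking its derivative:

$$\text{SigmoidCurveGradient}(\text{output}) = \text{output} \cdot (1 - \text{output})$$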
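One way to convince yourself of the gradient formula is to compare it with a numerical derivative. This sketch uses a central finite difference at a few sample points; the step size `h` is an arbitrary small value chosen for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


xs = np.array([-4.0, -1.0, 0.0, 2.0, 5.0])
h = 1e-5  # small step for the finite-difference approximation

# Numerical slope of the Sigmoid curve at each x.
numerical = (sigmoid(xs + h) - sigmoid(xs - h)) / (2 * h)

# Gradient written in terms of the neuron's output: output * (1 - output).
output = sigmoid(xs)
analytic = output * (1 - output)

print(np.max(np.abs(numerical - analytic)))
# The slope peaks at x = 0 (0.25) and flattens out as |x| grows.
print(analytic)
```

The second print also shows why confident outputs (far from 0.5) receive small adjustments: the gradient there is close to zero.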

Substituting the second equation into the first gives the final formula for adjusting the weights:

$$\text{adjustment} = \text{error} \cdot \text{input} \cdot \text{output} \cdot (1 - \text{output})$$
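To see the final formula at work, here is one adjustment step for a single training example, [1, 0, 1] with expected output 1. The starting weights are made up for illustration. Notice that the weight attached to the input that is 0 receives no adjustment.

```python
import numpy as np


def sigmoid(x):
    return 1 / (1 + np.exp(-x))


weights = np.array([0.2, -0.4, 0.1])   # illustrative starting weights
example_input = np.array([1, 0, 1])    # training example [1, 0, 1]
expected = 1                           # its expected output

output = sigmoid(np.dot(weights, example_input))
error = expected - output

# adjustment = error * input * output * (1 - output), computed for each weight.
adjustment = error * example_input * output * (1 - output)
new_weights = weights + adjustment

print("output:", output)
print("adjustment:", adjustment)   # middle entry is 0 because input 2 is 0
print("new weights:", new_weights)
print("new output:", sigmoid(np.dot(new_weights, example_input)))
```

After this single step the neuron's output for this example moves closer to the expected value of 1.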

Python code structure

Although we won't use a neural network library, we will import four methods from numpy, a Python mathematics library. They are:

- exp: the natural exponential function

- array: creates a matrix

- dot: multiplies matrices

- random: generates random numbers

".T" methods for matrix transpose (row variable column). So, this store digital computer:

I added a comment to every line of the source code to explain everything. Note that each iteration processes the entire training set at once, so our variables are matrices (two-dimensional tables of numbers). The complete sample code is written in Python.
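The following is a minimal sketch of such a complete program, written from the description in this article rather than copied from the original source. It assumes NumPy, a fixed seed of 1 so runs are repeatable, and 100,000 training iterations as described above. The class and method names (`NeuralNetwork`, `train`, `think`) are illustrative choices.

```python
from numpy import array, dot, exp, random


class NeuralNetwork:
    """A single neuron with three inputs and one output."""

    def __init__(self, seed=1):
        # Seed the generator so every run starts from the same random weights.
        random.seed(seed)
        # 3 x 1 matrix of starting weights, drawn uniformly from [-1, 1).
        self.synaptic_weights = 2 * random.random((3, 1)) - 1

    @staticmethod
    def _sigmoid(x):
        # The S-shaped curve: squashes the weighted sum into (0, 1).
        return 1 / (1 + exp(-x))

    @staticmethod
    def _sigmoid_gradient(output):
        # Slope of the Sigmoid curve, expressed in terms of its output.
        return output * (1 - output)

    def think(self, inputs):
        # Weighted sum of the inputs, passed through the Sigmoid function.
        return self._sigmoid(dot(inputs, self.synaptic_weights))

    def train(self, inputs, expected_outputs, iterations):
        for _ in range(iterations):
            # Process the whole training set at once (one row per example).
            output = self.think(inputs)
            error = expected_outputs - output
            # Error Weighted Derivative: error * input * output * (1 - output),
            # summed over all training examples by the matrix product.
            adjustment = dot(inputs.T, error * self._sigmoid_gradient(output))
            self.synaptic_weights += adjustment


network = NeuralNetwork()
print("Random starting weights:\n", network.synaptic_weights)

training_set_inputs = array([[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]])
training_set_outputs = array([[0, 1, 1, 0]]).T

network.train(training_set_inputs, training_set_outputs, 100_000)
print("Weights after training:\n", network.synaptic_weights)

prediction = network.think(array([1, 0, 0]))
print("New situation [1, 0, 0] -> ?:", prediction)
```

Because the iteration count is a parameter, you can lower it for a faster run; the exact numbers printed depend on the seed and the number of iterations.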

Epilogue

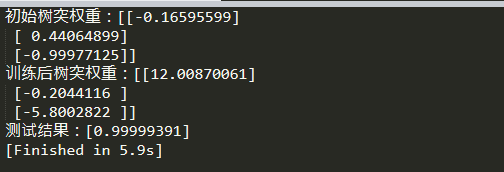

After running the code, you should see a result like this:

We did it! We built a simple neural network using Python!

First, the neural network assigned itself random weights, then trained itself using the training set. Next, it considered a new situation [1, 0, 0] and predicted 0.99993704. The correct answer is 1, so that is very close!
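Why is the answer so close to 1? For [1, 0, 0] only the first input is non-zero, so the weighted sum is just the first weight and the prediction is Sigmoid(weight_1). Inverting the Sigmoid (taking the log-odds) gives a rough idea of how large the trained first weight must be to produce 0.99993704. A small sketch of that arithmetic:

```python
import math

prediction = 0.99993704  # value reported above for the new situation [1, 0, 0]

# Inverse of the Sigmoid (the log-odds): weight_1 = ln(p / (1 - p)).
weight_1 = math.log(prediction / (1 - prediction))
print(f"implied first weight: {weight_1:.2f}")

# Round trip: feeding that weight back through the Sigmoid recovers the prediction.
recovered = 1 / (1 + math.exp(-weight_1))
print(recovered)
```

A large positive first weight is exactly what the "output equals the leftmost input" rule calls for.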