Linear transformation:

A linear transformation (linear mapping) is a function between two vector spaces that preserves vector addition and scalar multiplication: it maps vectors of one vector space to vectors of another. In fact, once a basis is chosen for each space, a linear transformation between finite-dimensional spaces can be represented exactly by a matrix.
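The two defining properties, and the fact that a matrix encodes the transformation, can be checked numerically. This is a minimal sketch assuming NumPy; the matrix `A`, the vectors `u`, `v` and the scalar `c` are arbitrary values chosen only for illustration.

```python
import numpy as np

# An arbitrary 2x2 matrix, chosen only for illustration
A = np.array([[2.0, 1.0],
              [0.0, 3.0]])

u = np.array([1.0, -2.0])
v = np.array([4.0, 0.5])
c = -1.5

# Additivity: A(u + v) == Au + Av
additive = np.allclose(A @ (u + v), A @ u + A @ v)
# Homogeneity: A(cu) == c(Au)
homogeneous = np.allclose(A @ (c * u), c * (A @ u))

# The columns of A are the images of the standard basis vectors e1 and e2,
# so knowing where the basis vectors go determines the whole transformation.
e1, e2 = np.eye(2)
columns_match = np.allclose(A @ e1, A[:, 0]) and np.allclose(A @ e2, A[:, 1])

print(additive, homogeneous, columns_match)  # True True True
```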

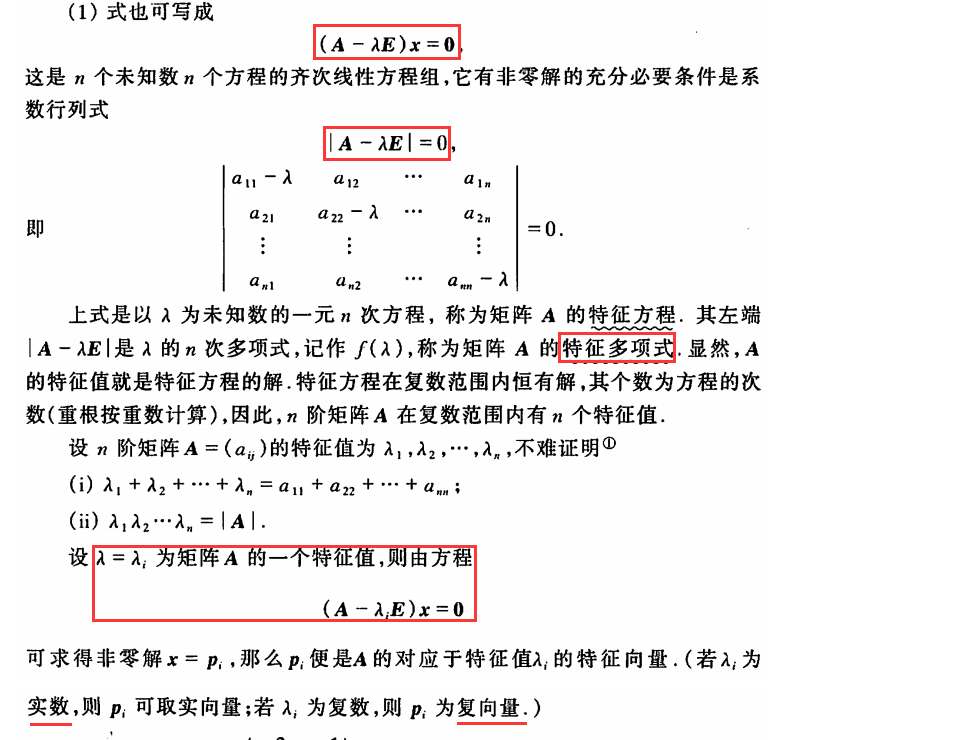

Eigenvalues and eigenvectors are two halves of a single concept:

For a given linear transformation (matrix A), an eigenvector ξ is a nonzero vector with the following property: after the transformation, the new vector still lies on the same straight line as the original ξ, although its length may change. The ratio λ by which the eigenvector's length is scaled under the transformation is called the eigenvalue.

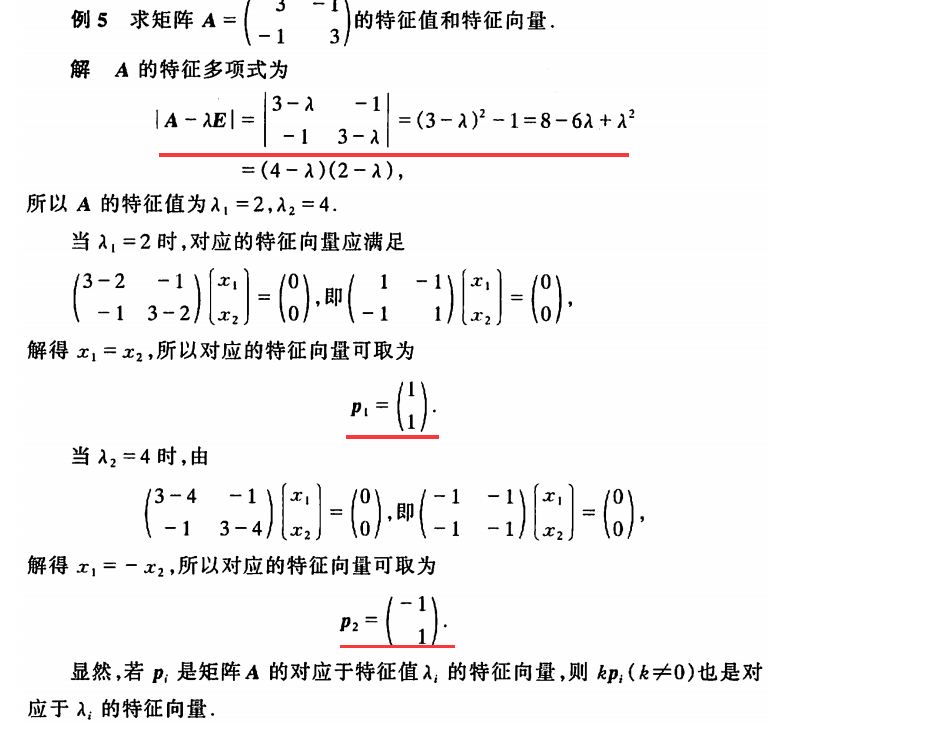

Mathematical description: Aξ = λξ, with ξ ≠ 0.

Under the linear transformation A, the vector ξ is only scaled, becoming λ times the original. We call ξ an eigenvector of the linear transformation A, and λ the corresponding eigenvalue.
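The definition Aξ = λξ can be verified directly on the output of a numerical eigen-solver. A minimal sketch assuming NumPy; the symmetric matrix below is an arbitrary example whose eigenvalues happen to be 3 and 1.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# np.linalg.eig returns the eigenvalues and a matrix whose *columns*
# are the corresponding unit-length eigenvectors (order is not guaranteed).
eigenvalues, eigenvectors = np.linalg.eig(A)

for i, lam in enumerate(eigenvalues):
    xi = eigenvectors[:, i]
    # A @ xi must equal lam * xi: xi is only scaled, never moved off its line
    assert np.allclose(A @ xi, lam * xi)
    print(f"lambda = {lam:.1f}, xi = {xi}")
```

Note that any nonzero multiple of a returned eigenvector is also an eigenvector; NumPy simply normalizes each one to length 1.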

- A matrix is a two-dimensional array of numbers, and it can be regarded as a transformation. In linear algebra, a matrix can move a vector to another position, or equivalently convert coordinates from one coordinate system to another. The "basis" associated with a matrix is, in effect, the coordinate system used for the transformation.

- Matrix multiplication corresponds to a transformation that turns an arbitrary vector into a new vector that usually differs from it in both direction and length. During this transformation, the original vector is mainly rotated and stretched. If the matrix only scales a certain vector (or certain vectors) without rotating it, those vectors are called eigenvectors of the matrix, and the scaling ratio is the eigenvalue. (A negative eigenvalue flips the vector to point the opposite way, but it stays on the same line.)

- Given an arbitrary matrix A, not every vector x is merely lengthened (or shortened) by it. Any vector that A only lengthens (or shortens) is called an eigenvector of A, and the amount of lengthening (shortening) is the eigenvalue corresponding to that eigenvector.

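- A matrix may lengthen (or shorten) several different vectors, so it may have several eigenvalues (an n × n matrix has at most n distinct eigenvalues).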

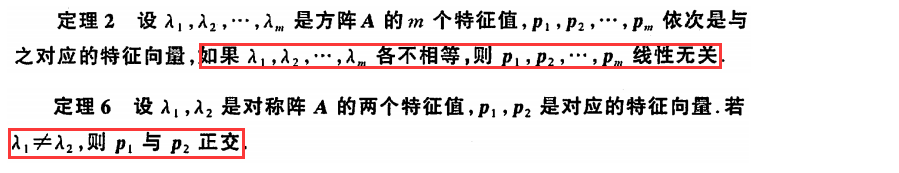
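The points above (most vectors are turned, only some are purely stretched, and one matrix can have several eigenvalues) can be tested vector by vector. The helper `is_eigenvector` below is hypothetical and restricted to 2-D: it uses the fact that in the plane, Ax is parallel to x exactly when the 2-D cross product of x and Ax is zero. A sketch assuming NumPy, not a general-purpose routine.

```python
import numpy as np

def is_eigenvector(A, x, tol=1e-9):
    """Hypothetical 2-D helper: return (True, lam) if A @ x == lam * x for a
    nonzero x, otherwise (False, None)."""
    x = np.asarray(x, dtype=float)
    if np.allclose(x, 0):
        return False, None  # the zero vector is excluded by definition
    Ax = A @ x
    # 2-D cross product: zero iff Ax lies on the same line as x
    if abs(x[0] * Ax[1] - x[1] * Ax[0]) > tol:
        return False, None
    lam = (x @ Ax) / (x @ x)  # recover the scale factor by projection
    return True, float(lam)

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

for x in ([1, 1], [1, -1], [1, 0]):
    print(x, is_eigenvector(A, x))
# [1, 1] (True, 3.0)
# [1, -1] (True, 1.0)
# [1, 0] (False, None)
```

The same matrix stretches (1, 1) by 3 and (1, -1) by 1, so it has two eigenvalues, while (1, 0) is sent to (2, 1) and therefore leaves its line.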

- For a real symmetric matrix, eigenvectors corresponding to distinct eigenvalues are always orthogonal.

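- The eigenvectors of a transformation matrix form a basis for that transformation, provided the n × n matrix has n linearly independent eigenvectors (that is, it is diagonalizable). A basis can be understood as the axes of a coordinate system. We usually work in a Cartesian coordinate system, but in linear algebra this coordinate system can be skewed, stretched, or rotated; this is called a change of basis. We can choose a basis as needed, but its axes must be linearly independent: no axis may point in the same direction as another or be expressible as a combination of the others, otherwise the axes could no longer "span" the original space. In principal component analysis (PCA), we place basis vectors along the directions of greatest stretch and ignore the small components, which compresses the data considerably while keeping distortion low.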

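- Using the eigenvectors of a transformation matrix as the basis of the space matters because, along these directions, the matrix stretches vectors without distorting them, which makes computation much simpler. So although eigenvalues are important, our ultimate goal is the eigenvectors.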

- Any nonzero linear combination of eigenvectors belonging to the same eigenvalue is still an eigenvector of A for that eigenvalue.
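Two of the facts in the list above, orthogonality for a real symmetric matrix and closure under linear combination within one eigenvalue, can be checked on a small example. A sketch assuming NumPy; the matrix `S` and the combination coefficients are arbitrary choices, picked so that the eigenvalue 3 is repeated.

```python
import numpy as np

# A real symmetric matrix (S == S.T); its eigenvalues are 1, 3 and 3
S = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 0.0],
              [0.0, 0.0, 3.0]])

v1 = np.array([1.0, -1.0, 0.0])   # eigenvalue 1
v3a = np.array([1.0, 1.0, 0.0])   # eigenvalue 3
v3b = np.array([0.0, 0.0, 1.0])   # eigenvalue 3, a second independent direction

# Each one satisfies S v = lambda v
assert np.allclose(S @ v1, 1 * v1)
assert np.allclose(S @ v3a, 3 * v3a)
assert np.allclose(S @ v3b, 3 * v3b)

# Distinct eigenvalues (1 vs 3) give orthogonal eigenvectors
print(v1 @ v3a, v1 @ v3b)  # both are 0

# A nonzero linear combination of v3a and v3b is still an eigenvector for 3
w = 2.5 * v3a - 4.0 * v3b
print(np.allclose(S @ w, 3 * w))  # True

# np.linalg.eigh (for symmetric/Hermitian input) returns ascending eigenvalues
# and eigenvectors as orthonormal columns of Q
eigenvalues, Q = np.linalg.eigh(S)
print(eigenvalues)                       # approximately [1. 3. 3.]
print(np.allclose(Q.T @ Q, np.eye(3)))   # True
```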

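As the Chinese name suggests (特征, "characteristic"), eigenvalues and eigenvectors express the characteristics of a linear transformation. Physically, a linear transformation of a high-dimensional space can be pictured as transforming a vector to different degrees along different directions. Eigenvectors belonging to distinct eigenvalues are linearly independent; they correspond to the principal directions of the transformation, while the eigenvalues express how strongly the transformation acts along each of them.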
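The picture of "different amounts of stretch along each eigen-direction", together with the earlier point that an eigenvector basis simplifies computation, corresponds to diagonalization: A = VΛV⁻¹. The sketch below assumes NumPy and an arbitrary diagonalizable matrix with eigenvalues 5 and 2; it shows that in the eigenvector basis A is a pure scaling, and that powers of A then only require powering the eigenvalues.

```python
import numpy as np

A = np.array([[4.0, 1.0],
              [2.0, 3.0]])          # eigenvalues 5 and 2 (distinct, so diagonalizable)

eigenvalues, V = np.linalg.eig(A)   # columns of V: eigenvectors, i.e. the new basis
V_inv = np.linalg.inv(V)

# In the eigenvector basis, A acts as a pure scaling: V^-1 A V is diagonal
D = V_inv @ A @ V
assert np.allclose(D, np.diag(eigenvalues))

# Raising A to a power only requires powering the eigenvalues
k = 5
A_k = V @ np.diag(eigenvalues ** k) @ V_inv
assert np.allclose(A_k, np.linalg.matrix_power(A, k))

# Applying A = express x in eigen-coordinates, scale each one, map back
x = np.array([1.0, -3.0])
coords = V_inv @ x                  # coordinates of x in the eigenvector basis
y = V @ (eigenvalues * coords)      # stretch each coordinate by its eigenvalue
assert np.allclose(y, A @ x)
```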

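Concretely, for a symmetric matrix A (such as a covariance matrix), finding the eigenvectors amounts to an orthogonal decomposition of the space that A represents, so that each vector a can be described by the lengths of its projections onto the eigenvectors. Computing eigenvalues and eigenvectors means finding which vectors the matrix only stretches without changing their direction, and measuring how much they are stretched. The point of doing so is to see in which directions the matrix produces the greatest scatter and the least overlap, which means more information is retained.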
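The PCA idea mentioned above (keep the direction of greatest scatter, drop the small ones) can be sketched with an eigen-decomposition of the covariance matrix. This assumes NumPy and uses synthetic data generated for illustration only, stretched along the direction (1, 1); it is one simple way to do PCA, not the only one (an SVD of the centered data is a common alternative).

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2-D data (illustration only): large spread along (1, 1),
# small spread along (1, -1)
n = 500
t = rng.normal(scale=3.0, size=n)
s = rng.normal(scale=0.5, size=n)
X = np.column_stack([t + s, t - s]) / np.sqrt(2)

Xc = X - X.mean(axis=0)                 # center the data
C = np.cov(Xc, rowvar=False)            # 2x2 symmetric covariance matrix

eigenvalues, Q = np.linalg.eigh(C)      # ascending eigenvalues, orthonormal columns
order = np.argsort(eigenvalues)[::-1]   # sort directions by scatter, largest first
eigenvalues, Q = eigenvalues[order], Q[:, order]

# Project every point onto the top eigenvector: 2-D -> 1-D
scores = Xc @ Q[:, 0]

# The variance of the projected data equals the largest eigenvalue
print(eigenvalues)
print(np.var(scores, ddof=1), eigenvalues[0])
print("fraction of variance kept:", eigenvalues[0] / eigenvalues.sum())
```

Here the top eigenvector comes out close to ±(0.707, 0.707), the direction the data was stretched along, and keeping only that one coordinate preserves most of the variance.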