The previous article covered the basics of multithreading: what a thread is, and how to create and run multiple threads. That material is relatively simple, but multithreading as a whole is not. Otherwise we would not need to spend so much effort learning it. The main difficulty is that multithreaded code is prone to thread-safety problems.

So what is thread safety? It is defined as follows:

When multiple threads access the same object, the object is thread-safe if calling it produces the correct result regardless of how the runtime environment schedules and interleaves those threads, and without the caller performing any additional synchronization or other coordination.

Put simply, code is thread-safe when running it with multiple threads produces the results we expect. Thread-safety problems are generally caused by two things: inconsistencies between the data in main memory and in working memory, and reordering.

To understand these causes, we first need to understand the Java memory model.

1 The Java Memory Model

Concurrent programming has two key questions: how threads communicate with each other, and how they synchronize.

1.1 Communication and synchronization

Thread communication refers to the mechanism threads use to exchange information. In imperative programming, threads communicate through one of two mechanisms: shared memory or message passing.

In the shared-memory concurrency model, threads share the program's common state. They communicate implicitly by writing and reading that shared state in memory.

In the message-passing concurrency model, threads share no common state. They must communicate explicitly by sending messages to each other. In Java, wait() and notify() are the example usually given for this style.
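Below is a minimal sketch of the wait()/notify() style just mentioned. It assumes a hypothetical one-slot `Mailbox` class and a hypothetical `WaitNotifyDemo` driver, neither of which comes from the original article. One caveat: in Java, wait() and notify() are called on a shared object's monitor, so the "message" still travels through shared memory. The waiting and signalling is what gives the exchange its message-passing feel.

```java
// Hypothetical demo: a one-slot mailbox built on wait()/notifyAll().
class WaitNotifyDemo {
    static String run() throws InterruptedException {
        Mailbox box = new Mailbox();
        String[] received = new String[1];
        Thread consumer = new Thread(() -> {
            try {
                received[0] = box.receive();   // blocks until a message arrives
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        box.send("hello");                     // "send" the message
        consumer.join();                       // wait for the consumer to finish
        return received[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run());             // prints "hello"
    }
}

class Mailbox {
    private String message;                    // guarded by this object's monitor
    private boolean hasMessage = false;

    // Producer side: store the message and wake any thread waiting on this monitor.
    synchronized void send(String msg) {
        message = msg;
        hasMessage = true;
        notifyAll();
    }

    // Consumer side: wait until a message is present, then take it.
    synchronized String receive() throws InterruptedException {
        while (!hasMessage) {                  // loop guards against spurious wakeups
            wait();                            // releases the monitor while waiting
        }
        hasMessage = false;
        return message;
    }
}
```

The `while` loop around `wait()` is the conventional pattern. A woken thread must re-check its condition, because wakeups can be spurious or meant for another waiter.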

Thread synchronization refers to the mechanism a program uses to control the relative order of operations across different threads. In the shared-memory concurrency model, synchronization is explicit: the programmer must explicitly mark a method or block of code as mutually exclusive between threads. In the message-passing concurrency model, synchronization is implicit, because a message must be sent before it can be received.

The Java memory model is a shared-memory concurrency model: threads communicate implicitly by reading and writing shared variables. If you do not understand Java's shared-memory model, you will run into all kinds of memory-visibility problems when writing concurrent programs.

1.2 The Java memory model (JMM)

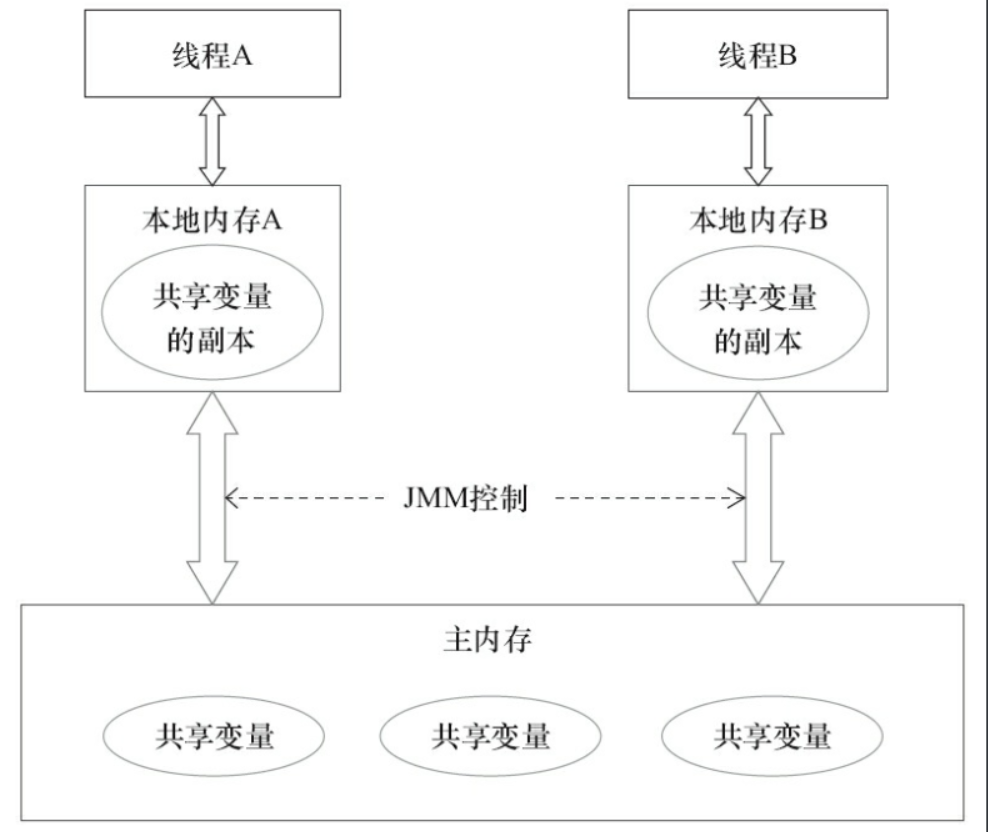

A CPU processes data orders of magnitude faster than main memory can be read or written. To bridge this gap, each CPU has its own cache. The JMM mirrors this arrangement:

- Shared variables live in main memory.
- Each thread has its own working memory.
- A thread copies the shared variables it uses from main memory into its working memory and reads and writes that copy.
- At some later point, the copy is written back to main memory.

The JMM defines this model at an abstract level, and it determines when a write to a shared variable by one thread becomes visible to other threads.

The figure above is a schematic of the JMM abstraction. For thread A and thread B to communicate, two steps are required:

- Thread A reads the shared variable from main memory into its own working memory, operates on it, and then writes the result back to main memory.
- Thread B reads the latest value of the shared variable from main memory.

Viewed as a whole, threads A and B are communicating implicitly through a shared variable. This raises an interesting problem. If thread A does not write its update back to main memory in time, thread B may read stale data at that moment. This is the "dirty read" phenomenon.

There are two ways to solve it:

- Use a synchronization mechanism, which controls the relative order of operations across threads.
- Declare the variable volatile. Every write to a volatile variable is then forced to main memory and every read fetches it from there, so its latest value is visible to every thread.

The Java memory model covers much more than this. For further reading, this article is recommended: https://blog.csdn.net/suifeng3051/article/details/52611310

1.3 Visibility and race conditions

Storing objects and variables across the computer's various memory areas inevitably raises some problems. The two most important are:

- Visibility of shared objects to each thread
- Race conditions on shared objects

Visibility of shared objects

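Suppose multiple threads operate on the same shared object without proper use of the volatile and synchronized keywords. An update that one thread makes to the object may then be invisible to the other threads.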

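Here is how that happens. A thread on one CPU reads data from main memory into that CPU's cache and modifies the shared object. The modified object in the cache has not yet been flushed to main memory, so the change is invisible to threads running on other CPUs. The end result is that each thread holds its own copy of the shared object, and each copy sits in a different CPU cache.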

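To solve this visibility problem, we can use the volatile keyword. It guarantees that a variable is read directly from main memory and that updates to it are written directly to main memory. This is covered in detail later.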
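The common "stop flag" use of volatile can be sketched as follows. `VolatileFlagDemo` and its `running` field are hypothetical names chosen for this example. Because `running` is volatile, the worker is guaranteed to eventually see the main thread's write and leave its loop. If the field were a plain `boolean`, nothing would guarantee that. For example, the JIT compiler is allowed to hoist the read out of the loop, so the worker could spin forever.

```java
// Hypothetical demo: stopping a worker thread through a volatile flag.
class VolatileFlagDemo {
    // volatile: the main thread's write to this flag is guaranteed to become
    // visible to the worker's subsequent reads.
    private static volatile boolean running;

    // Starts a worker that spins until the flag is cleared; returns its loop count.
    static long run() throws InterruptedException {
        running = true;                        // set before start(), so the worker sees it
        long[] iterations = new long[1];
        Thread worker = new Thread(() -> {
            long n = 0;
            while (running) {                  // volatile read on every iteration
                n++;
            }
            iterations[0] = n;
        });
        worker.start();
        Thread.sleep(100);                     // let the worker spin for a while
        running = false;                       // volatile write: the worker will observe it
        worker.join();                         // returns because the loop terminates
        return iterations[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("worker stopped after " + run() + " iterations");
    }
}
```

Note that volatile only addresses visibility here. It does not make a compound operation such as `count++` atomic; that is the race-condition problem discussed next.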

Race conditions

When multiple threads share an object and modify it at the same time, a race condition arises.

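Suppose threads A and B share an object obj:

1. Thread A reads obj.count from main memory into its CPU cache.
2. At the same time, thread B reads obj.count into its own CPU cache.
3. Both threads add 1 to obj.count.

The increment has now been executed twice, but in different CPU caches. When both results are written back to main memory, obj.count has increased by only 1 instead of 2, so one update is lost.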
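Here is a minimal sketch of the lost-update race just described. `LostUpdateDemo` is a hypothetical class in which two threads increment a shared static `int` without any synchronization. The final total varies by machine and run. It is often less than the expected value, but no particular shortfall is guaranteed, and some runs may come out correct.

```java
// Hypothetical demo: two threads incrementing a shared counter with no synchronization.
class LostUpdateDemo {
    private static int count;                  // shared and deliberately unsynchronized

    // Two threads each increment count perThread times; returns the final value.
    static int run(int perThread) throws InterruptedException {
        count = 0;
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                count++;                       // read, add 1, write back: three steps, not atomic
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();                              // join() makes each thread's writes visible here
        b.join();
        return count;
    }

    public static void main(String[] args) throws InterruptedException {
        int n = 1_000_000;
        System.out.println("expected " + (2 * n) + ", got " + run(n));
    }
}
```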

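To solve race conditions, we can use a synchronized block, which gives three guarantees:

- Only one thread at a time can enter the critical section.
- All variables used in the block are read from main memory.
- When a thread exits the block, all of its updates to variables are flushed to main memory, whether or not those variables are declared volatile.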
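Below, the same two-thread counter is guarded by a synchronized block on a private lock object. `SynchronizedCounterDemo` and `lock` are hypothetical names for this sketch. Because the read-modify-write of `count` can no longer interleave between threads, no increments are lost and the total always equals the expected value.

```java
// Hypothetical demo: the shared counter protected by a synchronized block.
class SynchronizedCounterDemo {
    private static final Object lock = new Object();
    private static int count;                  // guarded by lock

    // Two threads each increment count perThread times under the lock.
    static int run(int perThread) throws InterruptedException {
        synchronized (lock) {
            count = 0;
        }
        Runnable task = () -> {
            for (int i = 0; i < perThread; i++) {
                synchronized (lock) {          // only one thread at a time in this block
                    count++;                   // read-modify-write can no longer interleave
                }
            }
        };
        Thread a = new Thread(task);
        Thread b = new Thread(task);
        a.start();
        b.start();
        a.join();
        b.join();
        synchronized (lock) {
            return count;
        }
    }

    public static void main(String[] args) throws InterruptedException {
        int n = 1_000_000;
        System.out.println("expected " + (2 * n) + ", got " + run(n));   // the two numbers match
    }
}
```

Locking on a private object rather than on a class or `this` is a common choice. It keeps outside code from accidentally contending for the same monitor.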

2 Reordering

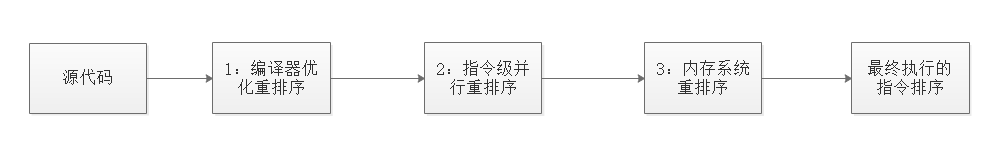

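Instruction reordering means that the compiler and the processor rearrange instructions to improve performance. There are generally three kinds of reordering: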

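1. Compiler optimization reordering: the compiler may rearrange the order of statements, as long as the semantics of a single-threaded program do not change.
2. Instruction-level parallelism reordering: if there is no data dependency, the processor may change the order in which the machine instructions for the statements execute.
3. Memory system reordering: because the processor uses caches and read/write buffers, load and store operations may appear to execute out of order.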

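Type 1 is compiler reordering; types 2 and 3 are collectively called processor reordering. These reorderings can cause thread-safety problems. The JMM guarantees consistent memory visibility to the layers above it, across different compilers and processor platforms. It does this by inserting specific types of memory barriers that forbid specific types of compiler and processor reordering.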
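The effect of reordering can be probed with the classic store/load litmus test, sketched below. `ReorderingLitmus` is a hypothetical name, and this is only an illustration, not a reliable way to detect reordering.

Each thread writes one plain field and then reads the other. In any interleaving that keeps each thread's two statements in program order, at least one read must see 1. An outcome of `(0, 0)` therefore means some reordering took place. Starting two threads per trial is slow, so they rarely overlap, and the count may well be 0 on a given machine and run.

```java
// Hypothetical litmus test for store/load reordering between two threads.
class ReorderingLitmus {
    // Plain (non-volatile) fields: nothing orders the two threads' accesses.
    private static int x, y, r1, r2;

    // Runs the store/load litmus test `trials` times; counts how often both reads see 0.
    static int run(int trials) throws InterruptedException {
        int bothZero = 0;
        for (int i = 0; i < trials; i++) {
            x = 0; y = 0; r1 = 0; r2 = 0;      // reset before start(): visible to both threads
            Thread t1 = new Thread(() -> { x = 1; r1 = y; });
            Thread t2 = new Thread(() -> { y = 1; r2 = x; });
            t1.start();
            t2.start();
            t1.join();                         // join() makes r1 and r2 visible to this thread
            t2.join();
            // In every interleaving that keeps each thread's program order,
            // at least one read sees 1, so (0, 0) implies reordering.
            if (r1 == 0 && r2 == 0) {
                bothZero++;
            }
        }
        return bothZero;
    }

    public static void main(String[] args) throws InterruptedException {
        int trials = 10_000;
        System.out.println("(r1, r2) == (0, 0) in " + run(trials) + " of " + trials + " trials");
    }
}
```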

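So when is reordering guaranteed not to happen? The compiler and processor never change the execution order of two operations that have a data dependency between them. What does data dependency mean? Consider the following code: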

    int a = 1;     // A
    int b = 2;     // B
    int c = a + b; // C

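In this code, A and B are unrelated. Swapping them does not affect the result, so A and B may be reordered: executing either one first makes no difference. In that case we say the two operations have no data dependency.

Two operations have a data dependency when they access the same variable and at least one of them is a write. For example, if we write to variable a and then read it, those two operations depend on each other and cannot be reordered, since reordering them would change the result. Likewise, C reads both a and b, so it depends on both A and B and cannot be moved before either of them.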

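Another concept to understand is as-if-serial semantics: no matter how instructions are reordered, a single-threaded program's result must not change. The compiler, the runtime, and the processor must all obey as-if-serial semantics.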

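This is also easy to understand: within a single thread, reordering must not affect the result. Programmers therefore need not worry about reordering interfering with single-threaded code, nor about memory visibility there.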

3 The happens-before rules

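We know that processors and compilers reorder instructions, but having to understand the low-level reordering rules would be too great a burden. The JMM therefore gives programmers a set of higher-level rules. With them, we can reason about cross-thread memory visibility without knowing how the underlying reordering works.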

3.1 happens-before

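We cannot state, for every possible scenario, when a variable modified by one thread becomes visible to other threads. We can, however, specify certain rules, and these rules are happens-before.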

In the JMM, if the result of one operation must be visible to another operation, a happens-before relationship must exist between the two.

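The JMM therefore uses happens-before relationships to give programmers cross-thread memory-visibility guarantees. Suppose a write a in thread A happens-before a read b in thread B. Even though a and b execute in different threads, the JMM guarantees that a is visible to b. The formal definition is: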

1) If one operation happens-before another, the result of the first operation is visible to the second, and the first operation is ordered before the second.

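2) A happens-before relationship between two operations does not require the Java platform implementation to execute them in that order. A reordering is not illegal if its result matches the result of executing the operations in happens-before order; in other words, the JMM permits such a reordering.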
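A typical way to put this guarantee to work is to publish data through a volatile flag. The sketch below uses the hypothetical names `HappensBeforePublish`, `data`, and `ready`. The plain write to `data` happens-before the volatile write to `ready` by program order. That volatile write happens-before the reader's read that sees `true` by the volatile variable rule. By transitivity, the reader is then guaranteed to see `data == 42`. All three rules are listed in section 3.2.

```java
// Hypothetical demo: publishing a plain field safely through a volatile flag.
class HappensBeforePublish {
    private static int data;                   // plain field
    private static volatile boolean ready;     // volatile flag

    // Publishes data through the volatile flag; returns what the reader saw.
    static int run() throws InterruptedException {
        data = 0;
        ready = false;                         // both resets happen-before reader.start()
        int[] seen = new int[1];
        Thread reader = new Thread(() -> {
            while (!ready) {
                // spin until the volatile write becomes visible
            }
            // Program order: data = 42     hb  ready = true (writer thread)
            // volatile rule: ready = true  hb  the read above that saw true
            // Transitivity:  data = 42     hb  the read of data below
            seen[0] = data;
        });
        reader.start();
        data = 42;                             // (1) plain write
        ready = true;                          // (2) volatile write
        reader.join();
        return seen[0];
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run());             // prints 42
    }
}
```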

3.2 The specific rules

There are eight rules in total:

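- Program order rule: within a single thread, each operation happens-before every operation that comes after it in code order.
- Monitor lock rule: an unlock operation happens-before every subsequent lock operation on the same lock.
- Volatile variable rule: a write to a volatile variable happens-before every subsequent read of that variable.
- Transitivity rule: if operation A happens-before operation B, and B happens-before operation C, then A happens-before C.
- Thread start rule: a call to a Thread object's start() method happens-before every action in the started thread.
- Thread interruption rule: a call to a thread's interrupt() method happens-before the interrupted thread's code detects the interrupt.
- Thread termination rule: every operation in a thread happens-before another thread detects that the thread has terminated. Termination can be detected by Thread.join() returning or by Thread.isAlive() returning false.
- Finalizer rule: the completion of an object's initialization happens-before the start of its finalize() method.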
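The thread start and thread termination rules can be seen in a short sketch. It uses a hypothetical class `StartJoinRules` with two plain fields, and no volatile or locks. The worker is guaranteed to see the value written before `start()`. After `join()` returns, the main thread is guaranteed to see everything the worker wrote.

```java
// Hypothetical demo: visibility through Thread.start() and Thread.join() alone.
class StartJoinRules {
    private static int beforeStart;            // plain fields: no volatile, no locks
    private static int fromWorker;

    // Returns {value the worker saw, value main saw after join()}.
    static int[] run() throws InterruptedException {
        fromWorker = 0;
        beforeStart = 7;                       // written before start()
        int[] seenByWorker = new int[1];
        Thread worker = new Thread(() -> {
            seenByWorker[0] = beforeStart;     // thread start rule: guaranteed to see 7
            fromWorker = 99;                   // written inside the worker
        });
        worker.start();
        worker.join();                         // thread termination rule: worker's writes now visible
        return new int[] { seenByWorker[0], fromWorker };
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run();
        System.out.println(r[0] + " " + r[1]); // prints "7 99"
    }
}
```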

References: