Reprinted from the first piece of heart

1. Introduction

The cluster deployment documentation on the DolphinScheduler official website is scattered, and you have to jump between many pages during installation. So I have compiled a single document that you can follow from beginning to end. It also covers later deployments and upgrades, as well as adding and removing nodes.

2. Preparation

2.1. Basic components

- JDK: Download a JDK (1.8+), install it, set the JAVA_HOME environment variable, and append its bin directory to the PATH environment variable. If a JDK is already installed in your environment, you can skip this step.

- Binary package: Download the DolphinScheduler binary package from the download page.

- Database: PostgreSQL (8.2.15+) or MySQL (5.7+); choose one of the two. MySQL requires the 8.x JDBC driver, which can be downloaded from the Maven Central repository.

- Registry center: ZooKeeper (3.4.6+), available from the ZooKeeper download page.

- Process tree analysis tools:

  - macOS: install pstree.

  - Fedora/Red Hat/CentOS/Ubuntu/Debian: install psmisc.

Note: DolphinScheduler itself does not depend on Hadoop, Hive, Spark, etc., but if the tasks you run rely on them, the corresponding environment must be available.
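Before moving on, it can help to confirm that the required tools are present on every machine. The sketch below is a hypothetical helper (not part of DolphinScheduler) that reports any command missing from PATH; on Linux, `pstree` is provided by the psmisc package.

```shell
# Hypothetical helper: print each command that is not on PATH and
# return non-zero if any of them is missing.
check_commands() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=1
    fi
  done
  return "$missing"
}

# Example: verify the JDK and the process-tree tool on this machine.
# check_commands java pstree && java -version
```

Running it on each node before installation surfaces missing packages early instead of as failed role startups later.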

3. Upload

Upload the binary package and extract it to a directory of your choice.

Pay attention to the directory name: it is best to append a suffix to it, because the installation directory and the directory the binary package is extracted to must have different names.

tar -xvf apache-dolphinscheduler-3.1.7-bin.tar.gz

mv apache-dolphinscheduler-3.1.7-bin dolphinscheduler-3.1.7-origin

The -origin suffix marks this as the directory extracted from the original binary package. If you change the configuration later, edit the files in this directory and re-run the installation script.

4. User

4.1. Configure passwordless sudo and permissions

Create a deployment user and be sure to configure passwordless sudo for it. Taking a user named dolphinscheduler as an example:

# Creating the user requires logging in as root

useradd dolphinscheduler

# Set a password

echo "dolphinscheduler" | passwd --stdin dolphinscheduler

# Configure passwordless sudo

sed -i '$adolphinscheduler ALL=(ALL) NOPASSWD: ALL' /etc/sudoers

sed -i -E 's/^(Defaults[[:space:]]+requiretty)/#\1/' /etc/sudoers

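# Change directory ownership so that the deployment user can operate on the directory extracted from the binary package (renamed above to dolphinscheduler-3.1.7-origin)

chown -R dolphinscheduler:dolphinscheduler dolphinscheduler-3.1.7-origin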

Notes:

- The task execution service uses sudo -u {linux-user} to switch between Linux users so that jobs run as different tenants, so the deployment user needs passwordless sudo. Beginners who do not follow this can ignore it for now.

- If the /etc/sudoers file contains a "Defaults requiretty" line, comment it out as well.
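To double-check the result of the sudoers edits, a hedged sketch: the two hypothetical helpers below grep a sudoers file for the NOPASSWD entry and for a `Defaults requiretty` line that is still active. Reading /etc/sudoers requires root; as the deployment user itself, `sudo -n true` succeeds only if no password is needed.

```shell
# Hypothetical helper: succeeds if FILE grants USER passwordless sudo for all commands.
has_nopasswd_entry() {
  user="$1"; file="$2"
  grep -Eq "^[[:space:]]*${user}[[:space:]]+ALL=\(ALL\)[[:space:]]+NOPASSWD:[[:space:]]*ALL" "$file"
}

# Hypothetical helper: succeeds if an uncommented "Defaults requiretty" line remains.
has_active_requiretty() {
  grep -Eq "^[[:space:]]*Defaults[[:space:]]+requiretty" "$1"
}

# Usage as root:
# has_nopasswd_entry dolphinscheduler /etc/sudoers && ! has_active_requiretty /etc/sudoers && echo ok
# Usage as the deployment user:
# sudo -n true && echo "passwordless sudo works"
```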

4.2. Configure passwordless SSH login between machines

Because files are sent to the other machines during installation, passwordless SSH login is required between the machines. Configure it as follows:

su dolphinscheduler

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

# This command is required; otherwise passwordless login will fail

chmod 600 ~/.ssh/authorized_keys

Note: After configuring, run ssh localhost to check the result. If you can log in over SSH without entering a password, the configuration succeeded.
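The commands above only authorize logins to the local machine. Since install.sh copies files from the deployment machine to every host in `ips`, that machine's public key also has to reach the other hosts. A minimal sketch using the standard `ssh-copy-id` tool, assuming the dolphinscheduler user already exists on every host and password login is still allowed for the first copy; both helper names are hypothetical.

```shell
# Hypothetical helper: split a comma-separated host list (same format as
# `ips` in install_env.sh) into one host per line, dropping empty entries.
split_hosts() {
  printf '%s\n' "$1" | tr ',' '\n' | sed '/^[[:space:]]*$/d'
}

# Hypothetical helper: copy the deploy user's public key to every host.
# ssh-copy-id asks for each host's password once. $2 is the SSH port (default 22).
distribute_key() {
  for host in $(split_hosts "$1"); do
    ssh-copy-id -i ~/.ssh/id_rsa.pub -p "${2:-22}" "dolphinscheduler@${host}"
  done
}

# Usage, as the dolphinscheduler user on the deployment machine:
# distribute_key "ds01,ds02,ds03,hadoop02,hadoop03"
```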

5. Start ZooKeeper

Start the ZooKeeper cluster.

6. Modify configuration

All of the following operations are performed as the dolphinscheduler user.

After preparing the basic environment, modify the configuration files to match your machines. The configuration files are in the bin/env directory: install_env.sh and dolphinscheduler_env.sh.

6.1. install_env.sh

The install_env.sh file configures which machines DolphinScheduler is installed on and which services are installed on each machine. You can find it in bin/env/; modify the configuration as described below.

# ---------------------------------------------------------

# INSTALL MACHINE

# ---------------------------------------------------------

# A comma separated list of machine hostname or IP would be installed DolphinScheduler,

# including master, worker, api, alert. If you want to deploy in pseudo-distributed

# mode, just write a pseudo-distributed hostname

# Example for hostnames: ips="ds1,ds2,ds3,ds4,ds5", Example for IPs: ips="192.168.8.1,192.168.8.2,192.168.8.3,192.168.8.4,192.168.8.5"

# Machines DolphinScheduler will be installed on

ips=${ips:-"ds01,ds02,ds03,hadoop02,hadoop03,hadoop04,hadoop05,hadoop06,hadoop07,hadoop08"}

# Port of SSH protocol, default value is 22. For now we only support same port in all `ips` machine

# modify it if you use different ssh port

sshPort=${sshPort:-"22"}

# A comma separated list of machine hostname or IP would be installed Master server, it

# must be a subset of configuration `ips`.

# Example for hostnames: masters="ds1,ds2", Example for IPs: masters="192.168.8.1,192.168.8.2"

# Machines the master role will be installed on

masters=${masters:-"ds01,ds02,ds03,hadoop04,hadoop05,hadoop06,hadoop07,hadoop08"}

# A comma separated list of machine <hostname>:<workerGroup> or <IP>:<workerGroup>.All hostname or IP must be a

# subset of configuration `ips`, And workerGroup have default value as `default`, but we recommend you declare behind the hosts

# Example for hostnames: workers="ds1:default,ds2:default,ds3:default", Example for IPs: workers="192.168.8.1:default,192.168.8.2:default,192.168.8.3:default"

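# Machines the worker role will be installed on. By default all workers go into the default worker group; other groups can be configured separately in the DolphinScheduler UI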

workers=${workers:-"ds01:default,ds02:default,ds03:default,hadoop02:default,hadoop03:default,hadoop04:default,hadoop05:default,hadoop06:default,hadoop07:default,hadoop08:default"}

# A comma separated list of machine hostname or IP would be installed Alert server, it

# must be a subset of configuration `ips`.

# Example for hostname: alertServer="ds3", Example for IP: alertServer="192.168.8.3"

# Machine the alert role will be installed on; one machine is enough

alertServer=${alertServer:-"hadoop03"}

# A comma separated list of machine hostname or IP would be installed API server, it

# must be a subset of configuration `ips`.

# Example for hostname: apiServers="ds1", Example for IP: apiServers="192.168.8.1"

# Machine the api role will be installed on; one machine is enough

apiServers=${apiServers:-"hadoop04"}

# The directory to install DolphinScheduler for all machine we config above. It will automatically be created by `install.sh` script if not exists.

# Do not set this configuration same as the current path (pwd). Do not add quotes to it if you using related path.

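# Installation path. Services are installed to this path on every machine in the DolphinScheduler cluster. It must differ from the binary package extraction directory above; including the version number is recommended to make later upgrades easier.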

installPath=${installPath:-"/opt/dolphinscheduler-3.1.5"}

# The user to deploy DolphinScheduler for all machine we config above. For now user must create by yourself before running `install.sh`

# script. The user needs to have sudo privileges and permissions to operate hdfs. If hdfs is enabled than the root directory needs

# to be created by this user

# User used for deployment; use the user created above

deployUser=${deployUser:-"dolphinscheduler"}

# The root of zookeeper, for now DolphinScheduler default registry server is zookeeper.

# znode name to register under in ZooKeeper. If you run multiple DolphinScheduler clusters, each must use a different name

zkRoot=${zkRoot:-"/dolphinscheduler"}
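The comments above require every host in `masters`, `workers`, `alertServer` and `apiServers` to also appear in `ips`. A small hypothetical check, assuming install_env.sh contains only variable assignments as in the excerpt above; worker entries such as `ds01:default` are reduced to the host part before comparing.

```shell
# Hypothetical helper: print every host in the comma-separated list $2
# that does not appear in the comma-separated list $1 (the `ips` value).
hosts_not_in_ips() {
  ips_list="$1"; subset="$2"
  printf '%s\n' "$subset" | tr ',' '\n' | cut -d: -f1 | while read -r h; do
    [ -z "$h" ] && continue
    case ",${ips_list}," in
      *",${h},"*) ;;          # exact match between commas: host is listed
      *) echo "$h" ;;         # not found in ips
    esac
  done
}

# Usage, from the extracted directory (empty output means consistent):
# . bin/env/install_env.sh
# for list in "$masters" "$workers" "$alertServer" "$apiServers"; do
#   hosts_not_in_ips "$ips" "$list"
# done
```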

6.2. dolphinscheduler_env.sh

This file is also located in bin/env/. It configures the environment variables that DolphinScheduler uses. Modify the corresponding settings as described below:

# JDK path; this must be changed

export JAVA_HOME=${JAVA_HOME:-/usr/java/jdk1.8.0_202}

# Database type: mysql or postgresql

export DATABASE=${DATABASE:-mysql}

export SPRING_PROFILES_ACTIVE=${DATABASE}

# Connection URL: mainly change the hostname below; the last parameter sets the time zone to UTC+8 (Asia/Shanghai)

export SPRING_DATASOURCE_URL="jdbc:mysql://hostname:3306/dolphinscheduler?useUnicode=true&characterEncoding=UTF-8&useSSL=false&serverTimezone=Asia/Shanghai"

export SPRING_DATASOURCE_USERNAME=dolphinscheduler

# If the password contains special characters, wrap it in single quotes

export SPRING_DATASOURCE_PASSWORD='xxxxxxxxxxxxx'

export SPRING_CACHE_TYPE=${SPRING_CACHE_TYPE:-none}

# Time zone used when each role's JVM starts. The default is UTC; to fully support UTC+8, set it to GMT+8

export SPRING_JACKSON_TIME_ZONE=${SPRING_JACKSON_TIME_ZONE:-GMT+8}

export MASTER_FETCH_COMMAND_NUM=${MASTER_FETCH_COMMAND_NUM:-10}

export REGISTRY_TYPE=${REGISTRY_TYPE:-zookeeper}

# ZooKeeper address(es) to use

export REGISTRY_ZOOKEEPER_CONNECT_STRING=${REGISTRY_ZOOKEEPER_CONNECT_STRING:-hadoop01:2181,hadoop02:2181,hadoop03:2181}
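REGISTRY_ZOOKEEPER_CONNECT_STRING is a comma-separated list of host:port pairs. To confirm that every ZooKeeper node is reachable from a DolphinScheduler machine, one option is the sketch below: a hypothetical parser plus a TCP probe that assumes bash (for its /dev/tcp redirection) and the coreutils `timeout` command are available on the machine.

```shell
# Hypothetical helper: turn "h1:2181,h2:2181" into "h1 2181" lines.
# Entries without a port fall back to ZooKeeper's default client port 2181.
zk_endpoints() {
  printf '%s\n' "$1" | tr ',' '\n' | while IFS=: read -r host port; do
    if [ -n "$host" ]; then
      echo "$host ${port:-2181}"
    fi
  done
}

# Hypothetical helper: try a TCP connection to each endpoint with a 3-second timeout.
probe_zk() {
  zk_endpoints "$1" | while read -r host port; do
    if timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      echo "ok      $host:$port"
    else
      echo "FAILED  $host:$port"
    fi
  done
}

# Usage:
# . bin/env/dolphinscheduler_env.sh
# probe_zk "$REGISTRY_ZOOKEEPER_CONNECT_STRING"
```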

# Environment variables for the components your tasks use; configure them as needed. You must install all required components yourself

export HADOOP_HOME=${HADOOP_HOME:-/opt/cloudera/parcels/CDH/lib/hadoop}

export HADOOP_CONF_DIR=${HADOOP_CONF_DIR:-/etc/hadoop/conf}

export SPARK_HOME1=${SPARK_HOME1:-/opt/soft/spark1}

export SPARK_HOME2=${SPARK_HOME2:-/opt/spark-3.3.2}

export PYTHON_HOME=${PYTHON_HOME:-/opt/python-3.9.16}

export HIVE_HOME=${HIVE_HOME:-/opt/cloudera/parcels/CDH/lib/hive}

export FLINK_HOME=${FLINK_HOME:-/opt/flink-1.15.3}

export DATAX_HOME=${DATAX_HOME:-/opt/datax}

export SEATUNNEL_HOME=${SEATUNNEL_HOME:-/opt/seatunnel-2.1.3}

export CHUNJUN_HOME=${CHUNJUN_HOME:-/opt/soft/chunjun}

export PATH=$HADOOP_HOME/bin:$SPARK_HOME1/bin:$SPARK_HOME2/bin:$PYTHON_HOME/bin:$JAVA_HOME/bin:$HIVE_HOME/bin:$FLINK_HOME/bin:$DATAX_HOME/bin:$SEATUNNEL_HOME/bin:$CHUNJUN_HOME/bin:$PATH
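Each path exported above has to exist on every worker that runs the corresponding task type. A hedged sketch for checking this on one machine: the hypothetical helper below (POSIX sh, using `eval` for the indirect variable lookup) prints each named variable whose directory is missing or unset.

```shell
# Hypothetical helper: print NAME=value for each named variable whose
# value is empty or does not point to an existing directory.
missing_homes() {
  for name in "$@"; do
    eval "dir=\${$name:-}"
    if [ -z "$dir" ] || [ ! -d "$dir" ]; then
      echo "$name=${dir:-<unset>}"
    fi
  done
}

# Usage on each worker (only list the components your tasks actually use):
# . bin/env/dolphinscheduler_env.sh
# missing_homes JAVA_HOME HADOOP_HOME HADOOP_CONF_DIR SPARK_HOME2 PYTHON_HOME HIVE_HOME FLINK_HOME DATAX_HOME SEATUNNEL_HOME
```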

6.3. common.properties

Download the hdfs-site.xml and core-site.xml files from your Hadoop cluster and put them in the api-server/conf/ and worker-server/conf/ directories. For a self-built Apache Hadoop cluster, you can find them in the conf directory of each component; for CDH, you can download them directly from the CDH web interface.
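The copy step can be scripted. A minimal sketch, assuming the two XML files are already available in a local directory (on CDH nodes this is often /etc/hadoop/conf, the HADOOP_CONF_DIR value above) and that the second argument is the extracted DolphinScheduler directory; the helper name is hypothetical.

```shell
# Hypothetical helper: copy core-site.xml and hdfs-site.xml from $1 into
# the conf directories of api-server and worker-server under $2.
copy_hadoop_conf() {
  src="$1"; ds_home="${2:-.}"
  for f in core-site.xml hdfs-site.xml; do
    [ -f "$src/$f" ] || { echo "not found: $src/$f" >&2; return 1; }
  done
  for role in api-server worker-server; do
    cp "$src/core-site.xml" "$src/hdfs-site.xml" "$ds_home/$role/conf/" || return 1
  done
}

# Usage:
# copy_hadoop_conf /etc/hadoop/conf ./dolphinscheduler-3.1.7-origin
```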

Modify common.properties in the api-server/conf/ and worker-server/conf/ directories. This file mainly configures parameters related to resource upload, such as uploading DolphinScheduler resources to HDFS. Modify it as follows:

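# Local path, mainly for temporary files created while tasks run. Make sure the user has read/write permission on it. The default is usually fine; if tasks later fail because they lack permission on files in this directory, simply change the directory's permissions to 777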

data.basedir.path=/tmp/dolphinscheduler

# resource view suffixs

#resource.view.suffixs=txt,log,sh,bat,conf,cfg,py,java,sql,xml,hql,properties,json,yml,yaml,ini,js

# Where resources are stored; valid values: HDFS, S3, OSS, NONE

resource.storage.type=HDFS

# Base path for uploaded resources. It must start with /dolphinscheduler, and the user must have read/write permission on it

resource.storage.upload.base.path=/dolphinscheduler

# The AWS access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.access.key.id=minioadmin

# The AWS secret access key. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.secret.access.key=minioadmin

# The AWS Region to use. if resource.storage.type=S3 or use EMR-Task, This configuration is required

resource.aws.region=cn-north-1

# The name of the bucket. You need to create them by yourself. Otherwise, the system cannot start. All buckets in Amazon S3 share a single namespace; ensure the bucket is given a unique name.

resource.aws.s3.bucket.name=dolphinscheduler

# You need to set this parameter when private cloud s3. If S3 uses public cloud, you only need to set resource.aws.region or set to the endpoint of a public cloud such as S3.cn-north-1.amazonaws.com.cn

resource.aws.s3.endpoint=http://localhost:9000

# alibaba cloud access key id, required if you set resource.storage.type=OSS

resource.alibaba.cloud.access.key.id=<your-access-key-id>

# alibaba cloud access key secret, required if you set resource.storage.type=OSS

resource.alibaba.cloud.access.key.secret=<your-access-key-secret>

# alibaba cloud region, required if you set resource.storage.type=OSS

resource.alibaba.cloud.region=cn-hangzhou

# oss bucket name, required if you set resource.storage.type=OSS

resource.alibaba.cloud.oss.bucket.name=dolphinscheduler

# oss bucket endpoint, required if you set resource.storage.type=OSS

resource.alibaba.cloud.oss.endpoint=https://oss-cn-hangzhou.aliyuncs.com

# if resource.storage.type=HDFS, the user must have the permission to create directories under the HDFS root path

resource.hdfs.root.user=hdfs

# if resource.storage.type=S3, the value like: s3a://dolphinscheduler; if resource.storage.type=HDFS and namenode HA is enabled, you need to copy core-site.xml and hdfs-site.xml to conf dir

#

resource.hdfs.fs.defaultFS=hdfs://bigdata:8020

# whether to startup kerberos

hadoop.security.authentication.startup.state=false

# java.security.krb5.conf path

java.security.krb5.conf.path=/opt/krb5.conf

# login user from keytab username

login.user.keytab.username=hdfs-mycluster@ESZ.COM

# login user from keytab path

login.user.keytab.path=/opt/hdfs.headless.keytab

# kerberos expire time, the unit is hour

kerberos.expire.time=2

# resourcemanager port, the default value is 8088 if not specified

resource.manager.httpaddress.port=8088

# if resourcemanager HA is enabled, please set the HA IPs; if resourcemanager is single, keep this value empty

yarn.resourcemanager.ha.rm.ids=hadoop02,hadoop03

# if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single, you only need to replace ds1 to actual resourcemanager hostname

yarn.application.status.address=http://ds1:%s/ws/v1/cluster/apps/%s

# job history status url when application number threshold is reached(default 10000, maybe it was set to 1000)

yarn.job.history.status.address=http://hadoop02:19888/ws/v1/history/mapreduce/jobs/%s

# datasource encryption enable

datasource.encryption.enable=false

# datasource encryption salt

datasource.encryption.salt=!@#$%^&*

# data quality option

data-quality.jar.name=dolphinscheduler-data-quality-dev-SNAPSHOT.jar

#data-quality.error.output.path=/tmp/data-quality-error-data

# Network IP gets priority, default inner outer

# Whether hive SQL is executed in the same session

support.hive.oneSession=false

# use sudo or not, if set true, executing user is tenant user and deploy user needs sudo permissions; if set false, executing user is the deploy user and doesn't need sudo permissions

sudo.enable=true

setTaskDirToTenant.enable=false

# network interface preferred like eth0, default: empty

#dolphin.scheduler.network.interface.preferred=

# network IP gets priority, default: inner outer

#dolphin.scheduler.network.priority.strategy=default

# system env path

#dolphinscheduler.env.path=dolphinscheduler_env.sh

# development state

development.state=false

# rpc port

alert.rpc.port=50052

# set path of conda.sh

conda.path=/opt/anaconda3/etc/profile.d/conda.sh

# Task resource limit state

task.resource.limit.state=false

# mlflow task plugin preset repository

ml.mlflow.preset_repository=https://github.com/apache/dolphinscheduler-mlflow

# mlflow task plugin preset repository version

ml.mlflow.preset_repository_version="main"
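Because common.properties must carry the same values in both api-server/conf/ and worker-server/conf/, it may be less error-prone to apply each change to both copies at once. A hedged sketch: the hypothetical helper below rewrites an existing `key=value` line (it does not add missing keys), matches the key literally rather than as a regex, and writes through a temporary file so it behaves the same with GNU and BSD tools. Note that awk interprets backslash escapes in the value.

```shell
# Hypothetical helper: set KEY to VALUE in a .properties file, in place.
# Only an existing, uncommented line starting with "KEY=" is replaced.
set_prop() {
  file="$1"; key="$2"; value="$3"
  out="$file.tmp.$$"
  awk -v k="$key" -v v="$value" '
    index($0, k "=") == 1 { print k "=" v; next }
    { print }
  ' "$file" > "$out" && mv "$out" "$file"
}

# Usage, from the extracted directory:
# for dir in api-server/conf worker-server/conf; do
#   set_prop "$dir/common.properties" resource.hdfs.fs.defaultFS hdfs://bigdata:8020
# done
# cmp api-server/conf/common.properties worker-server/conf/common.properties
```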

6.4. application.yaml

You need to modify conf/application.yaml for every role: master-server/conf/application.yaml, worker-server/conf/application.yaml, api-server/conf/application.yaml and alert-server/conf/application.yaml. The main change is the time zone setting:

spring:
  banner:
    charset: UTF-8
  jackson:
    # Set the time zone to UTC+8; this is the only change needed
    time-zone: GMT+8
    date-format: "yyyy-MM-dd HH:mm:ss"

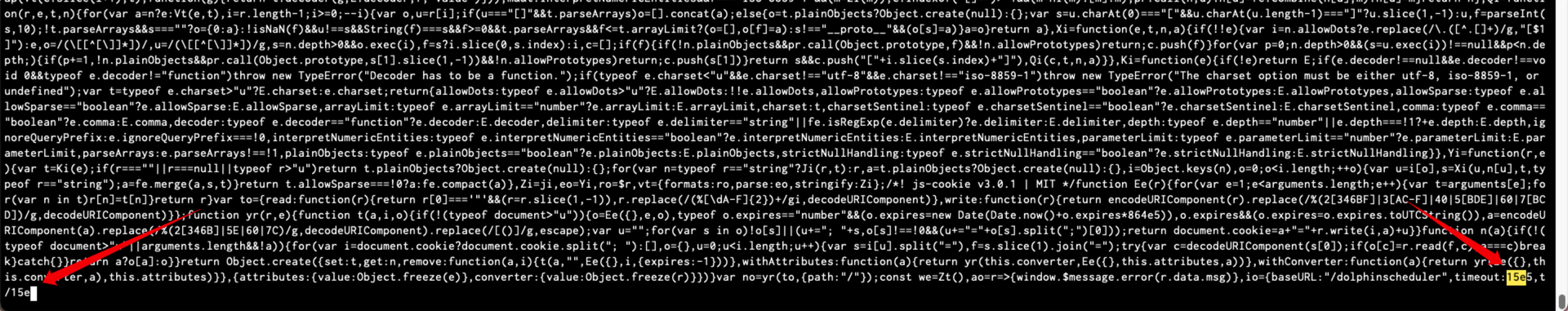
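Editing four application.yaml files by hand is easy to get wrong. A minimal sketch, assuming each file contains exactly one `time-zone:` key (the one under `spring.jackson` shown above) and that the command runs from the directory containing the four role directories; the helper is hypothetical, keeps the original indentation, and uses `|` as the sed delimiter so values like Asia/Shanghai also work.

```shell
# Hypothetical helper: set the value of the `time-zone:` key in a YAML file,
# preserving its indentation. Writes through a temp file for GNU/BSD portability.
set_yaml_time_zone() {
  file="$1"; tz="$2"
  sed -E "s|^([[:space:]]*time-zone:).*|\1 $tz|" "$file" > "$file.tmp.$$" &&
    mv "$file.tmp.$$" "$file"
}

# Usage:
# for role in master-server worker-server api-server alert-server; do
#   set_yaml_time_zone "$role/conf/application.yaml" GMT+8
# done
```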

6.5. service.57a50399.js and service.57a50399.js.gz

These two files are in the api-server/ui/assets/ and ui/assets/ directories.

Switch to each of these two directories, open both files with vim, search for 15e3, and change it to 15e5. This changes the page request timeout: the default value 15e3 means 15 seconds (15,000 ms), and we change it to 1,500 seconds so that uploading large files no longer fails with a page timeout.
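The same edit can be made without vim. A hedged sketch: the content hash in the file name (57a50399 here) likely differs between builds, so the hypothetical helper takes the path as an argument. It rewrites the .js file and then regenerates the .gz from it, assuming the .gz is simply the gzip-compressed copy of the .js. Because the replacement is global, check first with `grep -c 15e3` that the value occurs only where you expect.

```shell
# Hypothetical helper: raise the UI request timeout from 15e3 ms to 15e5 ms
# in one service.<hash>.js file and rebuild its .gz companion if present.
raise_ui_timeout() {
  js="$1"
  sed 's/15e3/15e5/g' "$js" > "$js.tmp.$$" && mv "$js.tmp.$$" "$js" || return 1
  if [ -f "$js.gz" ]; then
    gzip -9 -c "$js" > "$js.gz.tmp.$$" && mv "$js.gz.tmp.$$" "$js.gz"
  fi
}

# Usage, from the extracted directory:
# for js in api-server/ui/assets/service.*.js ui/assets/service.*.js; do
#   raise_ui_timeout "$js"
# done
```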

7. Initialize the database

Driver configuration

Copy the MySQL driver (8.x) into the libs directory of each DolphinScheduler role: api-server/libs, alert-server/libs, master-server/libs, worker-server/libs and tools/libs.
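A sketch of that copy, assuming the driver jar has already been downloaded and the second argument is the extracted DolphinScheduler directory; the helper and the jar file name in the usage line are placeholders, so substitute the jar you actually downloaded.

```shell
# Hypothetical helper: copy a JDBC driver jar into the libs directory of every role.
copy_driver() {
  jar="$1"; ds_home="${2:-.}"
  [ -f "$jar" ] || { echo "driver not found: $jar" >&2; return 1; }
  for dir in api-server alert-server master-server worker-server tools; do
    cp "$jar" "$ds_home/$dir/libs/" || return 1
  done
}

# Usage (placeholder jar name):
# copy_driver ~/mysql-connector-j-8.x.jar ./dolphinscheduler-3.1.7-origin
```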

Database user

Log in to MySQL as root and execute the following SQL, which works on both MySQL 5.7 and MySQL 8:

create database `dolphinscheduler` character set utf8mb4 collate utf8mb4_general_ci;

create user 'dolphinscheduler'@'%' IDENTIFIED WITH mysql_native_password by 'your_password';

grant ALL PRIVILEGES ON dolphinscheduler.* to 'dolphinscheduler'@'%';

flush privileges;

Execute database upgrade script:

bash tools/bin/upgrade-schema.sh

8. Installation

bash ./bin/install.sh

Running this script copies all local files via scp to every machine listed in the configuration file above, stops the roles on those machines, and then starts the roles on all machines.

After the first installation, all roles are already running and none needs to be started separately. If any role fails to start, check its logs on the corresponding machine to find the cause.
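To see which roles are running on a host, one option is the JDK's `jps` tool. The sketch below assumes the main class names MasterServer, WorkerServer, ApiApplicationServer and AlertServer used by DolphinScheduler 3.x (verify them against your version's `jps` output); the hypothetical helper reads `jps` output on stdin and prints each expected role that is absent.

```shell
# Hypothetical helper: read `jps` output ("<pid> <MainClass>") on stdin and
# print each expected main class (given as arguments) that does not appear.
missing_roles() {
  running=$(awk '{print $2}')
  for cls in "$@"; do
    printf '%s\n' "$running" | grep -qx "$cls" || echo "$cls"
  done
}

# Usage, as the deployment user (assumes the same JAVA_HOME path on every host):
# ssh hadoop04 "$JAVA_HOME/bin/jps" | missing_roles ApiApplicationServer MasterServer WorkerServer
```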

9. Start and stop services

# Stop all services in the cluster with one command

bash ./bin/stop-all.sh

# Start all services in the cluster with one command

bash ./bin/start-all.sh

# Stop/start Master

bash ./bin/dolphinscheduler-daemon.sh stop master-server

bash ./bin/dolphinscheduler-daemon.sh start master-server

# Start/stop Worker

bash ./bin/dolphinscheduler-daemon.sh start worker-server

bash ./bin/dolphinscheduler-daemon.sh stop worker-server

# Start/stop API

bash ./bin/dolphinscheduler-daemon.sh start api-server

bash ./bin/dolphinscheduler-daemon.sh stop api-server

# Start/stop Alert

bash ./bin/dolphinscheduler-daemon.sh start alert-server

bash ./bin/dolphinscheduler-daemon.sh stop alert-server

Note that these scripts must be run by the user who installed DolphinScheduler; otherwise you will run into permission problems.

Each service also has its own dolphinscheduler_env.sh at <service>/conf/dolphinscheduler_env.sh to support microservice-style deployment. This means you can configure <service>/conf/dolphinscheduler_env.sh for a given service and start it with <service>/bin/start.sh, so that each service runs with its own environment variables. However, if you start a service with bin/dolphinscheduler-daemon.sh start <service>, the script first overwrites <service>/conf/dolphinscheduler_env.sh with bin/env/dolphinscheduler_env.sh and then starts the service. This is done to reduce the effort users spend modifying the configuration.

10. Scaling out (adding nodes)

10.1. Standard approach

Following the steps above, proceed as follows:

- On the new node:

  - Install and configure the JDK.

  - Create the DolphinScheduler Linux user, then configure passwordless sudo, SSH login, permissions, etc.

- On the machine where the binary package was extracted when DolphinScheduler was first installed:

  - Log in as the user who installed DolphinScheduler.

  - Switch to the directory where the binary package was extracted for the previous installation and modify the configuration file bin/env/install_env.sh, adding the roles that need to be deployed on the new node.

  - Run bin/install.sh to install. According to the configuration in bin/env/install_env.sh, the script copies the entire directory to all machines via scp, stops the roles on all machines, and then starts all roles again.

Drawback of this approach: if DolphinScheduler runs many minute-level tasks, or real-time tasks such as Flink and Spark jobs, stopping and restarting every role takes some time. During that window these tasks may stop abnormally because the whole cluster restarts, or may not be scheduled normally. DolphinScheduler does implement automatic fault tolerance and disaster recovery, so you can still proceed this way and then check whether all tasks run normally.

10.2. The easy way

Following the steps above, proceed as follows:

- On the new node:

  - Install and configure the JDK.

  - Create the DolphinScheduler Linux user, then configure passwordless sudo, SSH login, permissions, etc.

- On the machine where the binary package was extracted when DolphinScheduler was first installed:

  - Log in as the user who installed DolphinScheduler.

  - Compress the entire directory whose configuration you modified earlier and transfer it to the new node.

- On the new node:

  - Extract the archive and rename it to the installation directory configured in bin/env/install_env.sh.

  - Log in as the user who installed DolphinScheduler.

  - Start the roles that need to be deployed on the new node with bin/dolphinscheduler-daemon.sh, for example:

    ./dolphinscheduler-daemon.sh start master-server

    ./dolphinscheduler-daemon.sh start worker-server

- Log in to the DolphinScheduler web UI and check in the "Monitoring Center" that the corresponding roles on the new node have started.
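The compress-and-transfer steps of this approach might be scripted as below. A minimal sketch, assuming the configured directory is the -origin directory from section 3, the installation directory is the installPath from install_env.sh (/opt/dolphinscheduler-3.1.5 in the example), the deployment user can write to /opt on the new node, and ds04 is a placeholder host name.

```shell
# Hypothetical helper: archive a directory, keeping its top-level name
# so that it unpacks as a single directory.
pack_dir() {
  src_dir="$1"; archive="$2"
  tar -czf "$archive" -C "$(dirname "$src_dir")" "$(basename "$src_dir")"
}

# Usage (ds04 is a placeholder for the new node):
# pack_dir ./dolphinscheduler-3.1.7-origin /tmp/ds.tar.gz
# scp /tmp/ds.tar.gz dolphinscheduler@ds04:/tmp/
# ssh dolphinscheduler@ds04 'tar -xzf /tmp/ds.tar.gz -C /opt &&
#   mv /opt/dolphinscheduler-3.1.7-origin /opt/dolphinscheduler-3.1.5 &&
#   /opt/dolphinscheduler-3.1.5/bin/dolphinscheduler-daemon.sh start worker-server'
```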

11. Scaling in (removing nodes)

- On the machine to be taken offline, stop all roles on that machine with bin/dolphinscheduler-daemon.sh, for example:

  ./dolphinscheduler-daemon.sh stop worker-server

- Log in to the DolphinScheduler web UI and check in the "Monitoring Center" that the roles stopped on that machine have disappeared.

- On the machine where the binary package was extracted when DolphinScheduler was first installed:

  - Log in as the user who installed DolphinScheduler.

  - Edit bin/env/install_env.sh and remove the offline machine from it.
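Removing a machine means deleting it from every list in install_env.sh where it appears (ips, masters, workers, and so on). A hedged sketch of the list manipulation: the hypothetical helper below removes a host, including worker entries of the form host:group, and prints the new list for you to paste back into the file.

```shell
# Hypothetical helper: remove HOST (and any HOST:<group> entry) from a
# comma-separated list and print the result.
remove_host() {
  printf '%s\n' "$1" | tr ',' '\n' | awk -F: -v h="$2" 'NF && $1 != h' | paste -s -d, -
}

# Usage:
# . bin/env/install_env.sh
# remove_host "$workers" hadoop08
```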

12. Upgrade

Follow the steps above one by one; steps that have already been done do not need to be repeated. The specific steps are:

- Upload the new binary package.

- Unzip it to a different directory than the old version installation directory, or rename it.

- The simplest way to update the configuration is to copy all configuration files touched in the steps above from the previous directory into the new version's directory, replacing the defaults.

- Package the components deployed on the other nodes, then extract them to the corresponding locations on any new node. The paths configured in dolphinscheduler_env.sh tell you which components need to be copied.

- Configure the driver as described in "Initialize the database".

- Stop the previous cluster.

- Back up the entire database.

- Run the database upgrade script as described in "Initialize the database".

- Run the installation script as described in "Installation".

- After the upgrade is completed, log in to the interface and check the "Monitoring Center" to see if all roles have been started successfully.
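For the step of copying configuration files into the new version's directory, a sketch: the list below is simply the files edited in section 6 of this article, and the new directory name in the usage line is hypothetical. After copying, update installPath in install_env.sh to the new version, and redo section 6.5 by hand, since the hashed service.*.js file names likely change between releases. A new release may also add settings the old files lack, so comparing old and new files with `diff` before overwriting is worthwhile.

```shell
# Hypothetical helper: copy the configuration files edited in section 6
# from an old extracted directory ($1) to a new one ($2).
copy_configs() {
  old="$1"; new="$2"
  for f in \
    bin/env/install_env.sh \
    bin/env/dolphinscheduler_env.sh \
    api-server/conf/common.properties worker-server/conf/common.properties \
    api-server/conf/core-site.xml api-server/conf/hdfs-site.xml \
    worker-server/conf/core-site.xml worker-server/conf/hdfs-site.xml \
    master-server/conf/application.yaml worker-server/conf/application.yaml \
    api-server/conf/application.yaml alert-server/conf/application.yaml
  do
    if [ -f "$old/$f" ]; then
      mkdir -p "$new/$(dirname "$f")" && cp "$old/$f" "$new/$f" || return 1
    else
      echo "skipped (not in old dir): $f" >&2
    fi
  done
}

# Usage (new directory name is hypothetical):
# copy_configs ./dolphinscheduler-3.1.7-origin ./dolphinscheduler-3.2.0-origin
# Database backup before the upgrade script, for example:
# mysqldump --single-transaction -u root -p dolphinscheduler > dolphinscheduler-backup.sql
```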

When reprinting this article, please credit the source. Everyone is welcome to discuss the technology; if anything is wrong, please point it out.

Original link: https://blog.csdn.net/u012443641/article/details/131419391

This article is published by Beluga Open Source Technology.