On January 17, the launch event for Scholar Puyu 2.0 (InternLM2) and the opening ceremony of the Scholar Puyuan Large Model Challenge were held together in Shanghai. Shanghai Artificial Intelligence Laboratory and SenseTime, together with the Chinese University of Hong Kong and Fudan University, officially released the new-generation large language model Scholar Puyu 2.0 (InternLM2).

Open-source links:

- GitHub: https://github.com/InternLM/InternLM

- HuggingFace: https://huggingface.co/internlm

- ModelScope: https://modelscope.cn/organization/Shanghai_AI_Laboratory

InternLM2 was reportedly trained on a high-quality corpus of 2.6 trillion tokens. Like the first-generation Scholar Puyu (InternLM), InternLM2 comes in two parameter sizes, 7B and 20B. Each size is available in a base version and a chat version, to serve a range of complex application scenarios. In keeping with its principle of "empowering innovation with high-quality open source," Shanghai AI Laboratory continues to offer free commercial licensing for InternLM2.

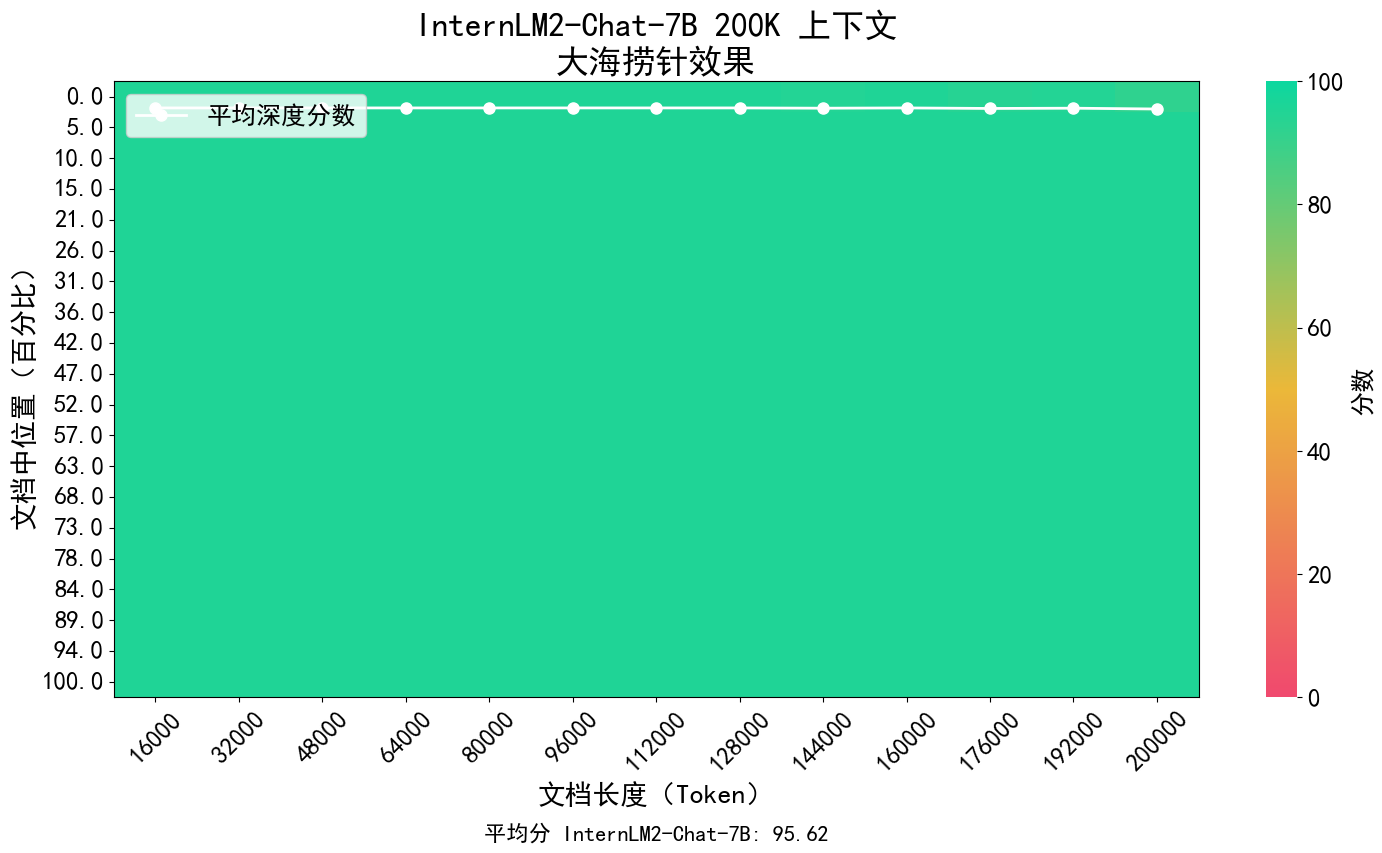

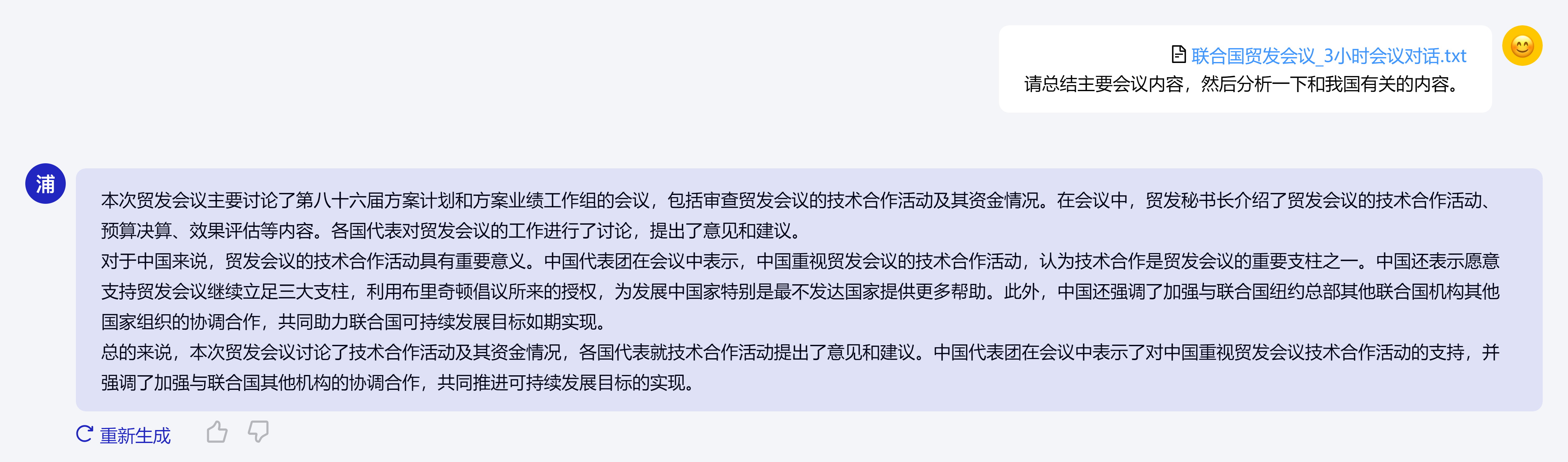

The core idea behind InternLM2 is to return to the essence of language modeling. By raising the quality and information density of the training corpus, the team aims for a qualitative leap in the language-modeling ability of the base model. That leap in turn drives substantial gains in mathematics, coding, dialogue, creative writing, and other areas, and the model's overall performance is reported to lead open-source models of comparable size. InternLM2 supports a context window of 200K tokens, enough to take in roughly 300,000 Chinese characters in a single pass. It can accurately extract key information from such long inputs, achieving "needle in a haystack" retrieval over long text.

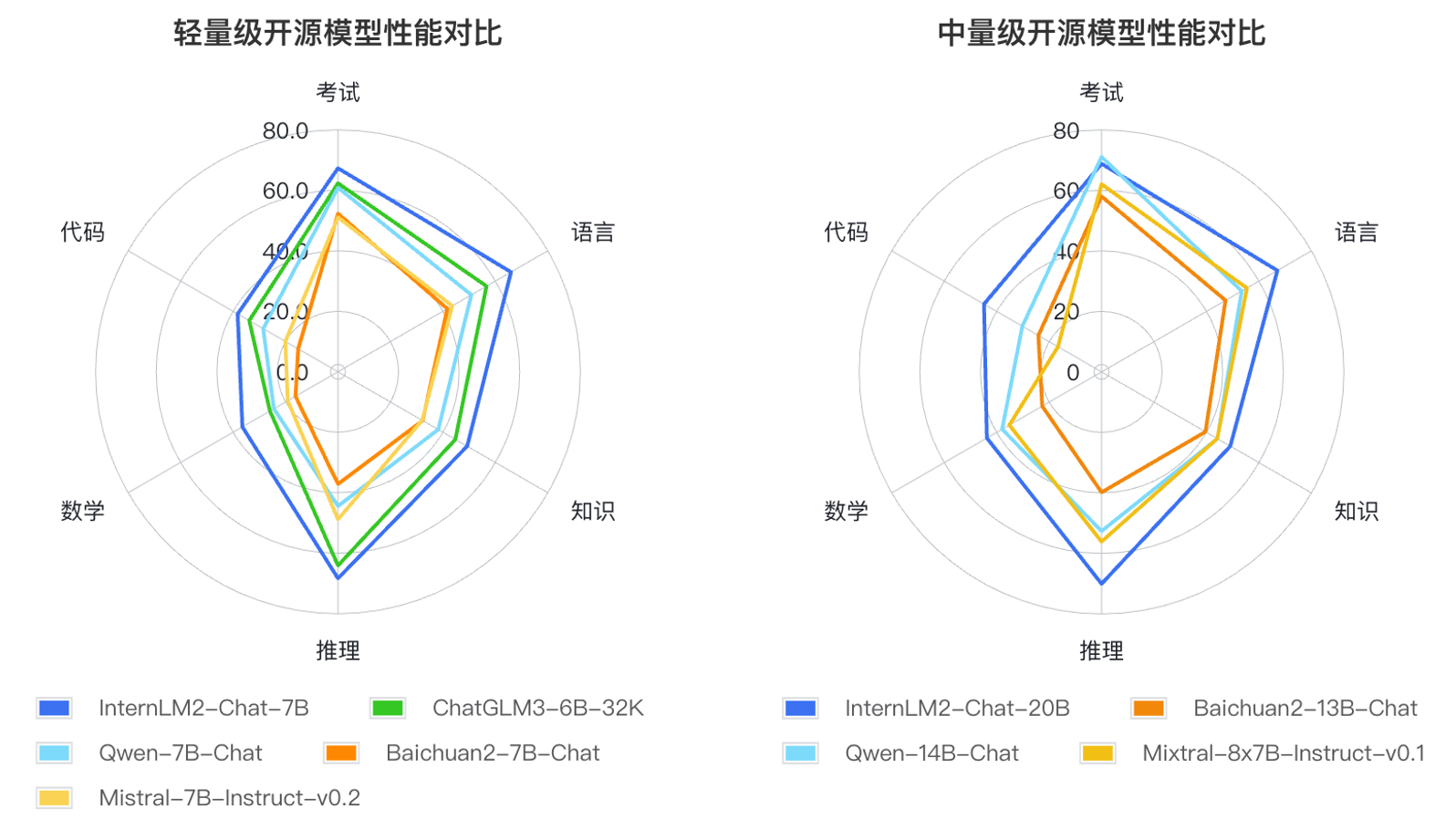

More broadly, InternLM2 improves across the board on the first-generation InternLM, with the largest gains in reasoning, mathematics, and coding, and its overall capability leads open-source models of the same scale.

Guided by how large language models are used in practice and the areas users care most about, the researchers defined six capability dimensions: language, knowledge, reasoning, mathematics, code, and examinations. They then comprehensively evaluated multiple models of the same scale on 55 mainstream benchmarks. The results show that both the lightweight (7B) and mid-weight (20B) versions of InternLM2 perform strongly among models of their size.