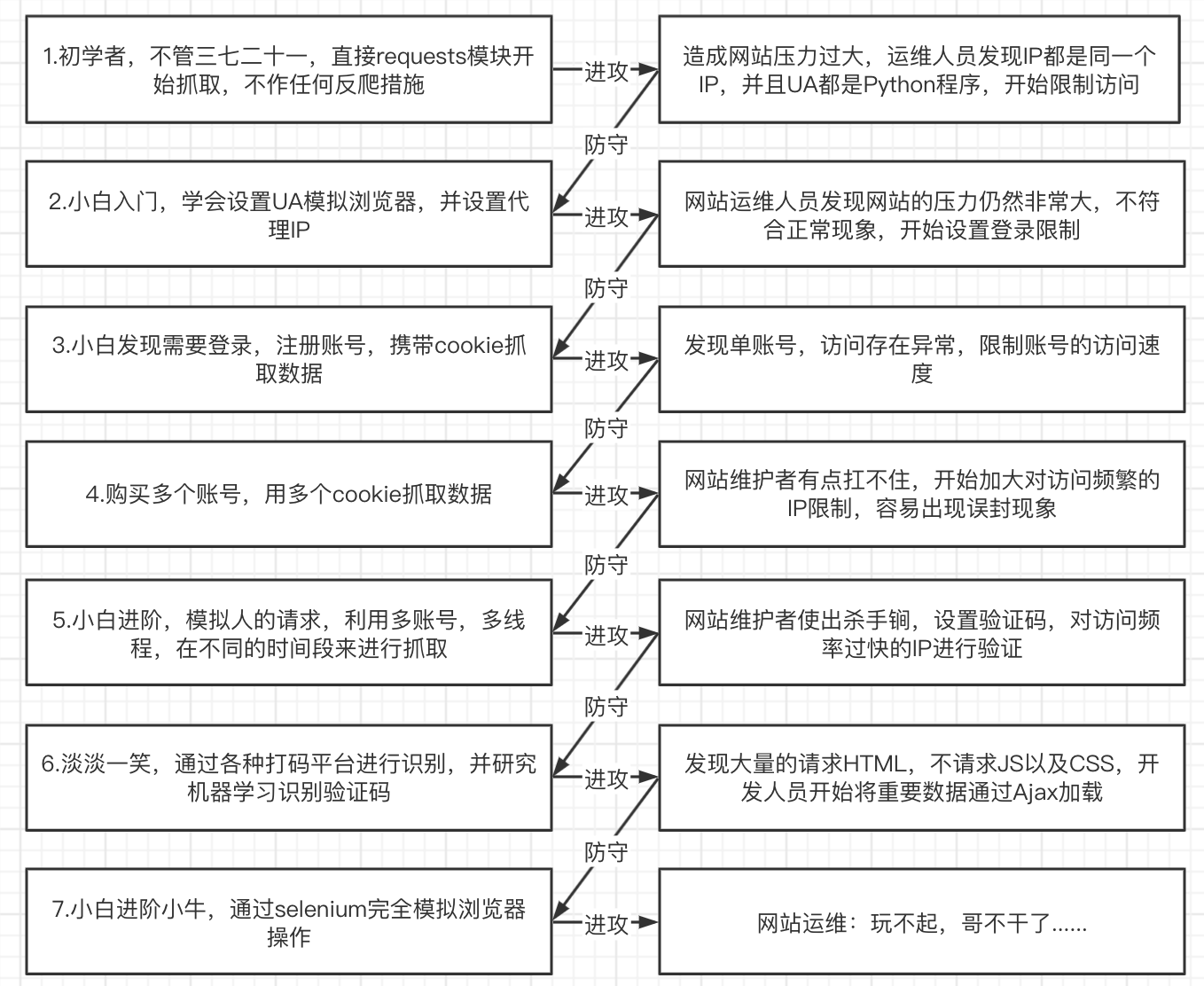

1: The struggle between reptiles and anti-reptiles

crawler suggestions

- Minimize the number of requests

- Save what you get

HTMLfor error checking and reuse

- Save what you get

- Follow all types of pages on your website

H5pageAPP

- Many disguises

- acting

IP

- acting

import requests

proxy = {

'http': '117.114.149.66:55443'

# ip参考网站 https://www.kuaidaili.com/free/

}

response = requests.get("http://httpbin.org/ip",proxies=proxy)

print(response.text)

- Random request header

# 导入模块

from fake_useragent import UserAgent

# 实例化UserAgent

ua = UserAgent()

headres = {

"User-Agent": ua.random # 随机取出一个UserAgent

}

print(headres)

- Utilize multi-threaded distribution

- Let's increase the speed as much as possible without being discovered.

2: Basic introduction to Ajax

Dynamic understanding HTMLof technology

JavaScript- It is the most commonly used scripting language on the Internet. It can collect user tracking data, submit forms directly without reloading the page, embed multimedia files on the page, and even run web pages.

jQueryjQueryIt is a fast and simpleJavaScriptframework that encapsulatesJavaScriptcommonly used functional codes.

AjaxAjaxYou can use web pages to implement asynchronous updates, and you can update certain parts of the web page without reloading the entire web page.

How to get Ajaxdata

1. Directly analyze Ajaxthe calling interface. Then request this interface through code.

2. Use Selenium+chromedriversimulated browser behavior to obtain data.

| Way | advantage | shortcoming |

|---|---|---|

| Analysis interface | Data can be requested directly. No need to do any parsing work. Less code and high performance | Analyzing interfaces is relatively complex, especially some jsinterfaces that are obfuscated, and requires a certain jsamount of knowledge. Easily spotted as a crawler |

| selenium | Directly simulate the behavior of the browser. What the browser can request can also be requested by selenium. Crawlers are more stable | A lot of code. Low performance |

three:Selenium+chromedriver

1 seleniumIntroduction

seleniumIt is anwebautomated testing tool. It was originally developed for website automation testing.seleniumIt can run directly on the browser. It supports all mainstream browsers and can receive instructions to let the browser automatically load the page, obtain the required data, and even Page screenshotchromedriverIt is aChromedriver that drives the browser. Only by using it can the browser be driven. Of course, there are different ones for different browsersdriver. The different browsers and their corresponding ones are listed belowdriver:- Google Chrome download official website: https://www.google.cn/intl/zh-CN/chrome/

- Chrome:http://npm.taobao.org/mirrors/chromedriver/,http://chromedriver.storage.googleapis.com/index.html

- Firefox:https://github.com/mozilla/geckodriver/releases

- Edge:https://developer.microsoft.com/en-us/microsoft-edge/tools/webdriver/

- Safari:https://webkit.org/blog/6900/webdriver-support-in-safari-10/

- download

chromedriver- Baidu search: Taobao mirror ( https://npm.taobao.org/ )

- Download address: https://googlechromelabs.github.io/chrome-for-testing/

- Install

Selenium:pip install selenium

2. SeleniumQuick Start

from selenium import webdriver

# 实例化浏览器

driver = webdriver.Chrome()

# 发送请求

driver.get('https://www.baidu.com')

# 退出浏览器

driver.quit()

3. driverPositioning elements

from selenium import webdriver

from selenium.webdriver.common.by import By

driver = webdriver.Chrome()

driver.get("https://www.baidu.com")

# 1、通过id值定位

driver.find_element(By.ID,"kw").send_keys('马化腾')

# 2、通过class值定位

driver.find_element(By.CLASS_NAME,"s_ipt").send_keys('马化腾')

# 3、通过name定位

driver.find_element(By.NAME,"wd").send_keys('马化腾')

# 4、通过tag_name定位

ipt_tag = driver.find_elements(By.TAG_NAME,"input")

print(ipt_tag)

# 说明:HTML本质就是由不同的tag(标签)组成,而每个tag都是指同一类,所以tag定位效率低,一般不建议使用;

# 5、通过XPATH语法定位

driver.find_element(By.XPATH,'//*[@id="kw"]').send_keys('海贼王')

# 6、通过css语法定位

driver.find_element(By.CSS_SELECTOR,"#kw").send_keys('海贼王')

# # 7、通过文本定位--精确定位

driver.find_element(By.LINK_TEXT,"新闻").click()

4. driverManipulate form elements

Operation input box: divided into two steps

Step 1: Find the element

Step 2: Use send_keys(value)and fill in the data

- How

clearto clear the content in the input box

inputTag.clear()

- Action button

- There are many ways to operate buttons. For example, click, right-click, double-click, etc. Here is one of the most commonly used ones. Just click.

clickJust call the function directly

- There are many ways to operate buttons. For example, click, right-click, double-click, etc. Here is one of the most commonly used ones. Just click.

inputTag = driver.find_element(By.ID,'su')

inputTag.click()

- choose

selectselectElements cannot be clicked directly. Because the element still needs to be selected after clicking. At this time, selenium provides a class specifically for the select tag_from _selenium.webdriver.support.ui _import _Select, passes the obtained elements as parameters to this class, and creates this object. You can then use this object to make selections. https://kyfw.12306.cn/otn/regist/init

from selenium import webdriver

from selenium.webdriver.support.ui import Select

from selenium.webdriver.common.by import By

import time

driver = webdriver.Chrome()

url = 'https://kyfw.12306.cn/otn/regist/init'

driver.get(url)

'''

iframe 是HTML的一个标签 作用:文档中的文档

如果你要找的标签元素被iframe标签所嵌套,那这个时候你需要切换iframe

'''

'''

总结:

1 先找到要操作的select标签

2 通过Select类 传递参数(你要找到下来菜单的元素)

3 选择方式(根据值来选择 根据索引来选择)

'''

# # 定位到select标签 实例化select对象

select_tag = Select(driver.find_element(By.ID,"cardType")) # webelement 将这个element对象传递给select对象

# # 暂停2秒

time.sleep(2)

# # 根据属性值定位

# select_tag.select_by_value('C')

# # 根据索引定位(从0开始)

select_tag.select_by_index(2)

5. Selenium simulates login to Douban

from selenium import webdriver

from selenium.webdriver.common.by import By

import time

# 加载驱动

driver = webdriver.Chrome()

# 拿到目标url

driver.get("https://www.douban.com/")

'''

iframe 是HTML的一个标签 作用:文档中的文档

如果你要找的标签元素被iframe标签所嵌套,那这个时候你需要切换iframe

'''

# 切换iframe

login_iframe = driver.find_element(By.XPATH,'//*[@id="anony-reg-new"]/div/div[1]/iframe')

driver.switch_to.frame(login_iframe)

time.sleep(2)

# 1 切换登录方式 定位到 密码登录(但是在这一步操作之前 要确实是否要切换iframe)

driver.find_element(By.CLASS_NAME,"account-tab-account").click()

time.sleep(2)

# 2 输入账号和密码

user_input = driver.find_element(By.ID,"username").send_keys("123456789")

time.sleep(2)

pwd_input = driver.find_element(By.ID,"password").send_keys("123456789")

time.sleep(2)

# 3 点击登录豆瓣

# 定位登录按钮点击 我们在定位元素的时候 如果属性出现空格的状态 形如:btn btn-account

# 解决方式 第一种我们选择属性当中的一部分(需要测试)

driver.find_element(By.CLASS_NAME,'btn-account').click()

time.sleep(2)

Four: Mouse behavior chain

Sometimes there may be many steps in the operation on the page, then you can use the mouse behavior chain class ActionChainsto complete it. For example, now you want to move the mouse to an element and execute a click event

actions = ActionChains(driver)

actions.move_to_element(inputTag)

actions.send_keys_to_element(inputTag,'python')

actions.move_to_element(submitTag)

actions.context_click()

actions.click(submitTag)

actions.perform()

There are more mouse related operations

click_and_hold(element): Click but do not release the mouse.context_click(element):right click.double_click(element):double click.- For more methods, please refer to: http://selenium-python.readthedocs.io/api.html

practise:

from selenium import webdriver

from selenium.webdriver import ActionChains

from selenium.webdriver.common.by import By

import time

# 加载驱动

driver = webdriver.Chrome()

# 拿到目标url

driver.get("https://www.baidu.com")

# 定位百度的输入框

inputtag = driver.find_element(By.ID, "kw")

# 定位百度按钮

buttontag = driver.find_element(By.ID, "su")

# 实例化一个鼠标行为链的对象

actions = ActionChains(driver)

# 在已经定位好的输入框输入内容

actions.send_keys_to_element(inputtag, "python")

# 等待一秒

time.sleep(1)

# 第一种方法

# buttontag.click() # # 注意你用的逻辑操作和鼠标行为链没有相关 那么这些个操作需要放到perform()的外面

# # 第二种方法 在鼠标行为链中进行操作 没有问题

# actions.move_to_element(buttontag)

# actions.click(buttontag)

# # 提交行为链的操作

# actions.perform()

Five: CookieOperation

- get all

cookie

cookies = driver.get_cookies()

- Acquisition based

cookieonnamecookie

value = driver.get_cookie(name)

- Delete a

cookie

driver.delete_cookie('key')

1.Page waiting

Today's web pages increasingly use Ajaxtechnology such that the program cannot determine when an element has been fully loaded. If the actual page wait time is too long and an domelement has not yet come out, but your code uses this directly WebElement, NullPointeran exception will be thrown. To solve this problem. So Selenium provides two waiting methods: one is implicit waiting, and the other is explicit waiting.

- Implicit wait: call

driver.implicitly_wait. Then it will wait for 10 seconds before getting the unavailable element.

driver.implicitly_wait(10)

- Display waiting: Display waiting is to indicate that a certain condition is met before executing the operation of obtaining elements. You can also specify a maximum time when waiting. If this time is exceeded, an exception will be thrown. Display waits should be completed using

selenium.webdriver.support.excepted_conditionsthe desired conditions andselenium.webdriver.support.ui.WebDriverWait

Comprehensive use of cases

from selenium import webdriver

from selenium.webdriver.common.by import By

import time

# 加载驱动

driver = webdriver.Chrome()

# driver.get('https://www.baidu.com/')

# 定位到输入框

# input_tag = driver.find_element_by_id('kw').send_keys('python')

'''

python自带的time模块去进行等待

相当于阻塞当前线程5秒。不建议过多使用,会严重影响脚本的性能。

'''

# time.sleep(3) # 3秒为强制休息时间 低于三秒浪费了时间--->爬取效率变低

'''

隐式等待 只要找到元素就会立即执行 如果找不到才会等待10秒

好处: 只需设置一次,全局都生效。如果超时时间内网页完成了全部加载,

则立即进行下面的操作。比sleep()智能很多。

劣势: 隐式等待需要等到网页所有元素都加载完成才会执行下面的操作,

如果我需要操作的元素提前加载好了,但是其他无关紧要的元素还没有加载完成,

那么还是需要浪费时间去等待其他元素加载完成。

'''

# driver.implicitly_wait(10) # 10秒

# # 定位到百度一下的按钮

# but_tag = driver.find_element_by_id('su')

# but_tag.click()

'''

显式等待

指定某个条件,然后设置最长等待时间。

如果在这个时间还没有找到元素,那么便会抛出异常,只有当条件满足时才会执行后面的代码。

好处: 解决了隐式等待的不足之处。

缺点: 缺点是稍微复杂一些,需要有一些学习成本。

'''

'''

需要用到的库

'''

from selenium.webdriver.support import expected_conditions as EC # 核心

from selenium.webdriver.support.ui import WebDriverWait # 核心

from selenium.webdriver.common.by import By

driver.get("https://www.baidu.com/")

try:

element = WebDriverWait(driver, 5).until(

EC.presence_of_element_located((By.XPATH, '//*[@id="su"]'))

)

text = driver.page_source

print("text", text)

finally:

driver.quit()

Some other wait conditions

presence_of_element_located: An element has been loaded.presence_of_all_elements_located: All elements in the web page that meet the conditions have been loaded.element_to_be_clickable: An element is clickable.

For more conditions, please refer to: http://selenium-python.readthedocs.io/waits.html

Six: Operation of multiple windows and page switching

- Sometimes there are many

tabsubpages in the window. You definitely need to switch at this time.seleniumProvides a tool calledswitch_to.windowto switch. You candriver.window_handlesfind

from selenium import webdriver

from selenium.webdriver import ActionChains

from selenium.webdriver.common.by import By

import time

# 加载驱动

driver = webdriver.Chrome()

# 拿到目标url # 这个时候发现同时拿到两个url 不能够打开两个窗口

# 访问百度

driver.get("https://www.baidu.com")

# driver.get("https://www.douban.com")

time.sleep(2)

# 访问豆瓣

driver.execute_script("window.open('https://www.douban.com')")

time.sleep(2)

# driver.close() # 此时发现百度被关闭了

# 检测当前驱动执行的url

print(driver.current_url)

# # 那这个时候我们操作多窗口防护变了没有了意义 因为我们只能操作第一个百度 那这个时候我就可以切换一下界面

# # 切换界面

driver.switch_to.window(driver.window_handles[1])

# # [1] 0代表的第一个最开始打开的那个 1代表的第二个 -1切换到最新打开的窗口 -2 倒数第二个打开的窗口

print(driver.current_url) # 检测看是否切换到豆瓣的url了

time.sleep(1)

# driver.switch_to.window(driver.window_handles[0])

# time.sleep(1)

# # 检测看是否能对百度进行操作

# driver.find_element(By.ID,"kw").send_keys("python")

# 切换iframe

login_iframe = driver.find_element(By.XPATH,'//*[@id="anony-reg-new"]/div/div[1]/iframe')

driver.switch_to.frame(login_iframe)

time.sleep(2)

# 1 切换登录方式 定位到 密码登录(但是在这一步操作之前 要确实是否要切换iframe)

driver.find_element(By.CLASS_NAME,"tab-start").click()

time.sleep(2)

# 2 输入账号和密码

user_input = driver.find_element(By.ID,"username").send_keys("123456789")

time.sleep(2)

pwd_input = driver.find_element(By.ID,"password").send_keys("123456789")

time.sleep(2)

# 3 点击登录豆瓣

# 定位登录按钮点击 我们在定位元素的时候 如果属性出现空格的状态 形如:btn btn-account

# 解决方式 第一种我们选择属性当中的一部分(需要测试)

driver.find_element(By.CLASS_NAME,'btn-account').click()

time.sleep(3)

1. SeleniumExecution jssyntax

Sometimes seleniumthere are some problems with the methods provided, or it is very troublesome to execute. We can consider implementing them through seleniumexecution javascriptto simplify complex operations and

seleniumexecute jsscripts:

execute_script(script, *args)

描述:用来执行js语句

参数:

script:待执行的js语句,如果有多个js语句,使用英文分号;连接

2. seleniumExecute jscode under common conditions

1 滚动页面:您可以使用 JavaScript 滚动网页以将元素显示在视图中或在页面上移动

driver.execute_script('window.scrollTo(0,document.body.scrollHeight)')

2 js点击:

button = driver.find_element(By.CLASS_NAME),'my-button')

driver.execute_script("arguments[0].click();", button)

3 等待元素出现:有时候,您需要等待一个元素出现或变为可见状态后再与它进行交互。

您可以使用JavaScript等待元素出现或变为可见状态后再进行交互。

# 等待元素在页面上可见

element = driver.find_element(By.CSS_SELECTOR,'#my-element')

driver.execute_script("""

var wait = setInterval(function() {

if (arguments[0].offsetParent !== null) {

clearInterval(wait);

// 元素现在可见,可以对其进行操作

}

}, 100);

""", element)

4 执行自定义JavaScript:使用execute_script方法可以执行您编写的任何自定义 JavaScript 代码,

以完成测试或自动化任务。

driver.execute_script("console.log('Hello, world!');")

5 打开多窗口

driver.execute_script("window.open('https://www.douban.com')")

3. SeleniumAdvanced Grammar

page_source(elements source code)find()(Find whether a certain string exists in the web page source code)find_element(By.LINK_TEXT)(Getting general processing of turning pages based on link text)node.get_attribute(nodeRepresents the node name;get_attributerepresents getting the attribute name)node.text()(Getting the text content of a node includes child nodes and descendant nodes)

from selenium import webdriver

from selenium.webdriver import ActionChains

from selenium.webdriver.common.by import By

import time

# 加载驱动

driver = webdriver.Chrome()

# 访问百度

# driver.get('https://www.baidu.com/')

"""

1拿到百度的数据结构源码 page_source

这个指的不是网页源代码而是我们前端页面最终渲染的结果也就是我们element中的数据

"""

# html = driver.page_source

# print(html)

"""

2 find() 在html源码中查找某个字符是否存在

如果存在则会返回一段数字 如果不存在 不会报错 但是会返回-1

find应用场景 比如翻页爬取

"""

# print(html.find("kw"))

# print(html.find("kwwwwwwwwwwwwwwwwwww"))

""" 3 find_element_by_link_text("链接文本")

# driver.get('https://www.gushiwen.cn/')

# time.sleep(3)

# # 根据链接文本内容定位按钮

# driver.find_element(By.LINK_TEXT,'下一页').click() # 点击豆瓣第一页的下一页

# # 画面会跳转到第二页 打印的是第二页的源码

# time.sleep(3)

# print(driver.page_source)

"""4 node.get_attribute("属性名") node代表的是你想获取的节点 """

# url = 'https://movie.douban.com/top250'

# driver.get(url)

# a_tag = driver.find_element(By.XPATH,'//div[@class="item"]/div[@class="pic"]/a')

# print(a_tag.get_attribute('href'))

""" 5 node.text 获取节点的文本内容 包含子节点和后代节点 """

# driver.get('https://movie.douban.com/top250')

# time.sleep(2)

# div_tag = driver.find_element(By.XPATH,'//div[@class="hd"]')

# print(div_tag.text)

4.Set Seleniuminterfaceless mode

The vast majority of servers have no interface. seleniumThere is also a headless mode for controlling Google Chrome (also called headless mode).

How to turn on the headless mode?

- Instantiate the configuration object

options = webdriver.ChromeOptions()

- Add the command to enable interfaceless mode to the configuration object

options.add_argument("--headless")

- Configuration object adds disabled

gpucommands

options.add_argument("--disable-gpu")

- Instantiate

driveran object with a configuration object

driver = webdriver.Chrome(chrome_options=options)

from selenium import webdriver

# 创建一个配置对象

options = webdriver.ChromeOptions()

# 开启无界面模式

options.add_argument('--headless')

# 实例化带有配置的driver对象

driver = webdriver.Chrome(options=options)

driver.get('http://www.baidu.com/')

html = driver.page_source

print(html)

driver.quit()

5. SeleniumSolutions to identified problems

seleniumBuilding a crawler can solve many anti-crawling problems, but seleniumthere are also many characteristics that can be identified. For example, the value seleniumafter driving the browser window.navigator.webdriveris true, but the value is undefined when the browser is running normally ( undefined)

import time

from selenium import webdriver

# 使用chrome开发者模式

options = webdriver.ChromeOptions()

options.add_experimental_option('excludeSwitches', ['enable-automation'])

# 禁用启用Blink运行时的功能

options.add_argument("--disable-blink-features=AutomationControlled")

# Selenium执行cdp命令 再次覆盖window.navigator.webdriver的值

driver = webdriver.Chrome(options=options)

driver.execute_cdp_cmd("Page.addScriptToEvaluateOnNewDocument", {

"source": """

Object.defineProperty(navigator, 'webdriver', {

get: () => undefined

})

"""

})

driver.get('https://www.baidu.com/')

time.sleep(3)

Selenium comprehensive case of Dangdang book information crawling:

import csv

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

"""

爬取当当网python爬虫书籍信息并保存到csv文件中

"""

class DDspider:

# 初始化方法

def __init__(self):

# 加载驱动

self.driver = webdriver.Chrome()

# 发起请求

self.driver.get('http://www.dangdang.com/')

# 窗口最大化

# self.driver.maximize_window()

# 定位输入框

ipt_tag = self.driver.find_element(By.ID, 'key_S')

ipt_tag.send_keys('python爬虫')

time.sleep(1)

# 定位搜索框

serach_ipt = self.driver.find_element(By.CLASS_NAME, 'button')

serach_ipt.click()

time.sleep(1)

# 解析目标数据

def Getitem(self):

# 将滚轮拉到底部为的是让全部的source进行加载完全

"""想办法把滚轮拖动到最后"""

# window.scrollTo 拖动滚轮 第一个参数0 表示从起始位置开始拉 第二个参数表示的是整个窗口的高度

self.driver.execute_script(

'window.scrollTo(0,document.body.scrollHeight)' # 这一点属于js代码 不需要死记 但是需要积累

)

time.sleep(1)

lilist = self.driver.find_elements(By.XPATH, '//ul[@id="component_59"]/li')

print(len(lilist))

# 定义列表用于存放一页中所有书的信息

books = []

for i, item in enumerate(lilist):

try:

# 定义字典用于存放每一本书的信息

book = {

}

# 书图

if i == 0:

book['img'] = item.find_element(By.XPATH, './a/img').get_attribute('src')

else:

book['img'] = 'https:' + item.find_element(By.XPATH, './a/img').get_attribute('data-original')

# 书名

book['name'] = item.find_element(By.XPATH, './p[@class="name"]/a').get_attribute('title')

# 价格

book['price'] = item.find_element(By.XPATH, './p[@class="price"]/span').text

# 作者

book['author'] = item.find_element(By.XPATH, './p[@class="search_book_author"]/span[1]').text

# 出版时间

book['Publication_time'] = item.find_element(By.XPATH, './/p[@class="search_book_author"]/span[2]').text

# 出版社

book['Publishing_house'] = item.find_element(By.XPATH, './/p[@class="search_book_author"]/span[3]').text

books.append(book)

except Exception as e:

print(e.__class__.__name__)

# print(books,len(books))

return books

# 翻页函数

def Next_page(self):

alldata = []

while True:

books = self.Getitem()

alldata += books

print(len(alldata),"*" * 50)

if self.driver.page_source.find("next none") == -1:

next_tag = self.driver.find_element(By.XPATH, '//li[@class="next"]/a')

self.driver.execute_script("arguments[0].click();", next_tag)

time.sleep(1)

else:

self.driver.quit()

break

return alldata

# 写入数据

def WriteData(self,alldata):

headers = ('img', 'name', 'price', 'author','Publication_time','Publishing_house')

with open('当当.csv', mode='w', encoding='utf-8', newline="")as f:

writer = csv.DictWriter(f, headers)

writer.writeheader() # 写入表头

writer.writerows(alldata)

if __name__ == '__main__':

DD = DDspider()

alldata = DD.Next_page()

DD.WriteData(alldata)