Table of contents

1. Let’s talk about the port concept again

5. Things to note when using UDP

6. Representation form of UDP protocol in the kernel

7. Application layer protocol based on UDP

2.TCP confirmation response mechanism

3. Timeout retransmission mechanism

4. TCP message six-bit flag bit

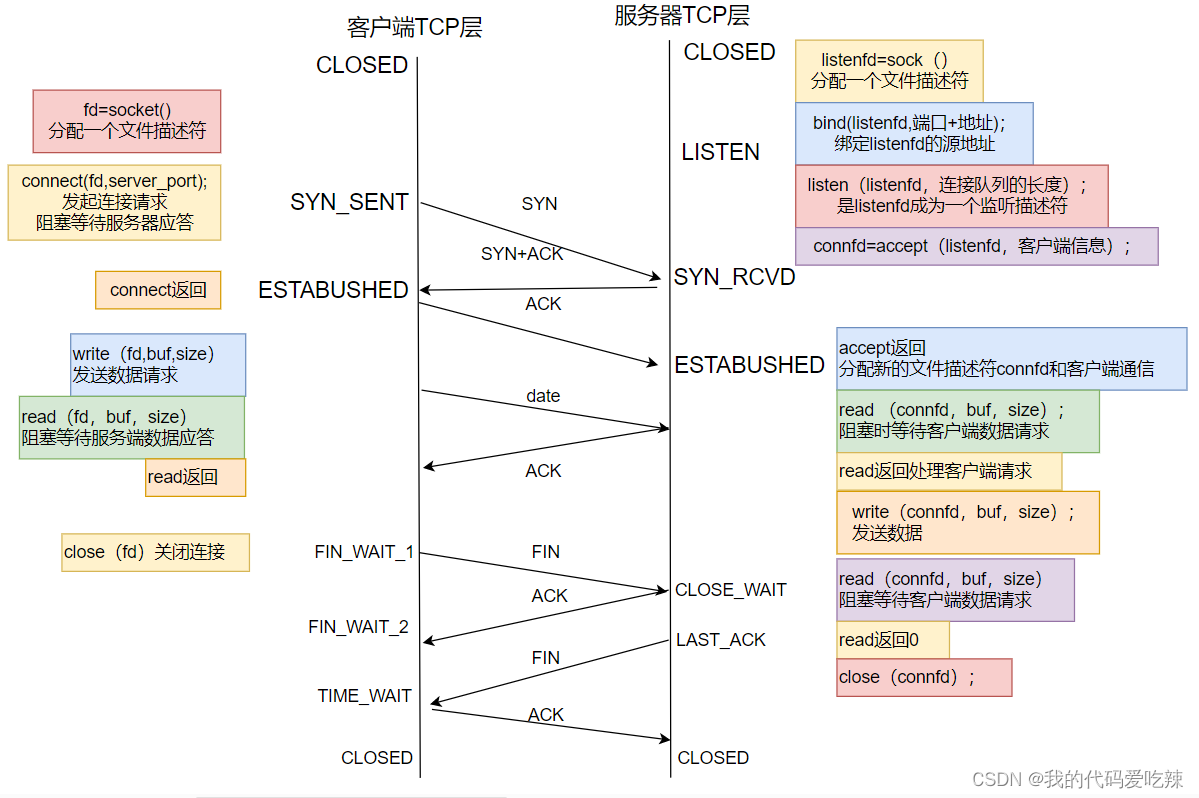

12. Connection management mechanism

13. The second parameter of listen

16. Based on TCP application layer protocol

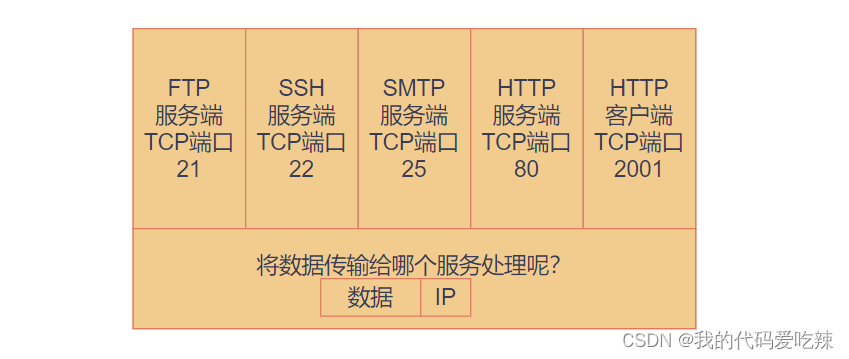

1. Let’s talk about the port concept again

Port numbers (Port) identify different applications communicating on a host;

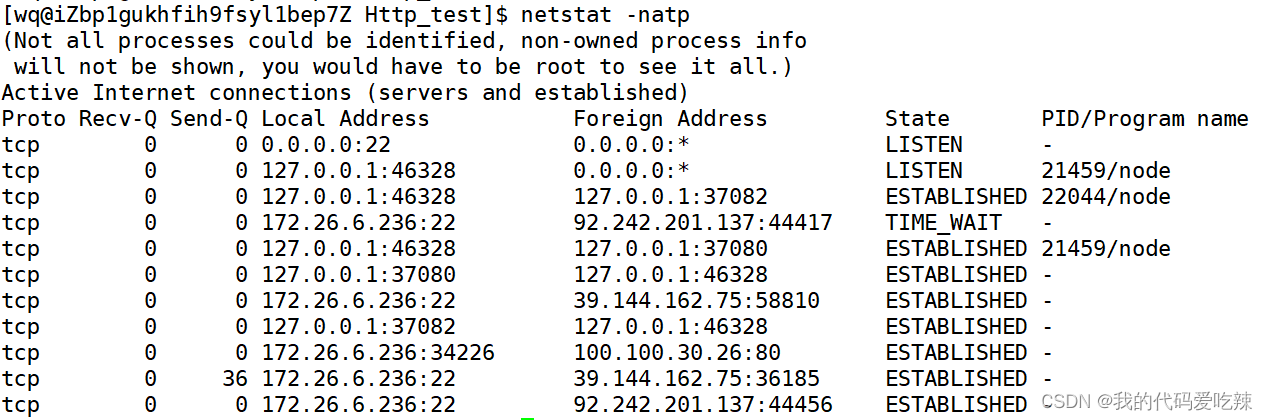

In the TCP/IP protocol, a five-tuple of "source IP", "source port number", "destination IP", "destination port number", and "protocol number" identifies a communication (you can view these with netstat -n);

Common netstat options:

- -n: do not resolve aliases; show addresses and ports as numbers wherever possible

- -l: list only sockets in the LISTEN state

- -p: show the name of the program that owns each connection

- -t (tcp): show only TCP-related entries

- -u (udp): show only UDP-related entries

- -a (all): show all entries; LISTEN-related entries are not shown by default

pidof: very convenient for looking up the process ID of a server.

- Syntax: pidof [process name]

- Function: look up a process ID by process name


2. UDP protocol

1.UDP protocol format

16-bit UDP length: the length of the entire datagram (UDP header + UDP data); because the field is 16 bits wide, a datagram can be at most 65535 bytes long;

16-bit checksum: if the checksum is incorrect, the datagram is discarded directly;

How does the UDP protocol separate headers and payloads:

- The UDP protocol has a fixed-length header.

- Read the fixed-length 8-byte header first; the 16-bit length field in it gives the total length of header plus payload.

- Subtracting the 8-byte header from that 16-bit length gives the payload length.
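The separation described above can be sketched in C. This is only an illustration that works on a raw byte buffer; the names udp_view, read_be16 and udp_parse are made up for this example and are not kernel code.

```c
#include <stddef.h>
#include <stdint.h>

#define UDP_HEADER_LEN 8  /* fixed-length UDP header, in bytes */

/* Parsed view of one UDP datagram (hypothetical helper type).
 * `payload` points into the caller's buffer; nothing is copied. */
struct udp_view {
    uint16_t src_port;
    uint16_t dst_port;
    uint16_t length;        /* header + payload, as carried in the header */
    uint16_t checksum;
    const uint8_t *payload;
    size_t payload_len;
};

/* Read a 16-bit value stored in network byte order (big-endian). */
static uint16_t read_be16(const uint8_t *p)
{
    return (uint16_t)((p[0] << 8) | p[1]);
}

/* Returns 0 on success, -1 if the buffer cannot hold a valid datagram. */
int udp_parse(const uint8_t *buf, size_t buf_len, struct udp_view *out)
{
    if (buf_len < UDP_HEADER_LEN)
        return -1;

    out->src_port = read_be16(buf);
    out->dst_port = read_be16(buf + 2);
    out->length   = read_be16(buf + 4);
    out->checksum = read_be16(buf + 6);

    /* The length field must cover the header and fit in the buffer. */
    if (out->length < UDP_HEADER_LEN || out->length > buf_len)
        return -1;

    /* Payload = everything after the fixed 8-byte header. */
    out->payload = buf + UDP_HEADER_LEN;
    out->payload_len = (size_t)out->length - UDP_HEADER_LEN;
    return 0;
}
```

After a successful call, dst_port is what would be used to pick the receiving process, and payload/payload_len describe the data handed up to it.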

How the UDP protocol achieves upward delivery:

- Once the header is read, the destination port is known, and the destination port identifies the application layer process to which the payload is delivered.

2. Characteristics of UDP

The process of UDP transmission is similar to sending a letter.

- Connectionless: if you know the peer's IP and port number, you can transmit directly, with no need to establish a connection;

- Unreliable: there is no acknowledgment mechanism and no retransmission mechanism; if a segment cannot reach the other party because of a network failure, the UDP protocol layer returns no error to the application layer;

- Datagram-oriented: the application cannot flexibly control how many reads and writes are used or how much data each one carries;

3. Datagram-oriented

The application layer hands UDP a message of some length, and UDP sends it exactly as is, neither splitting nor merging it;

Transfer 100 bytes of data using UDP:

- If the sender calls sendto once and sends 100 bytes, the receiver must also call the corresponding recvfrom once and receive 100 bytes; it cannot call recvfrom 10 times in a loop and receive 10 bytes each time;

4.UDP buffer

- UDP has no real send buffer. A sendto call hands the data directly to the kernel, which passes it to the network layer protocol for transmission;

- UDP has a receive buffer. However, this buffer cannot guarantee that UDP packets are received in the order they were sent, and if the buffer is full, newly arriving UDP data is discarded. This is another way in which UDP is unreliable compared with TCP;

- A UDP socket can both read and write. This is called full duplex.

5. Things to note when using UDP

- The length field in the UDP header is 16 bits wide. In other words, the largest datagram UDP can carry is 64K (including the UDP header).

- However, 64K is a very small amount in today's Internet environment.

- If the data to transmit exceeds 64K, the application layer must split it into pieces manually, send them in multiple datagrams, and reassemble them at the receiving end;

6. Representation form of UDP protocol in the kernel

The Linux kernel is written in C, and the transport and network layers are part of the operating system, so the transport layer protocols are also written in C. Given that, the UDP format maps naturally onto a structure (or bit fields). One question remains: the payload size is not known in advance, so how is the structure defined? C99 supports flexible array members for exactly this.


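```c
#include <stdint.h>

/* UDP datagram layout: an 8-byte fixed header followed by the payload. */
struct Udp
{
    uint16_t src_port;  /* source port */
    uint16_t dst_port;  /* destination port */
    uint16_t udp_len;   /* header + payload length, in bytes */
    uint16_t check;     /* checksum */
    char data[];        /* flexible array member (C99): the payload */
};
```

7. Application layer protocol based on UDP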

- NFS: Network File System

- TFTP: Trivial File Transfer Protocol

- DHCP: Dynamic Host Configuration Protocol

- BOOTP: Bootstrap Protocol (for booting diskless devices)

- DNS: Domain Name System

- Custom application layer protocols that you define when writing your own UDP programs also belong here;

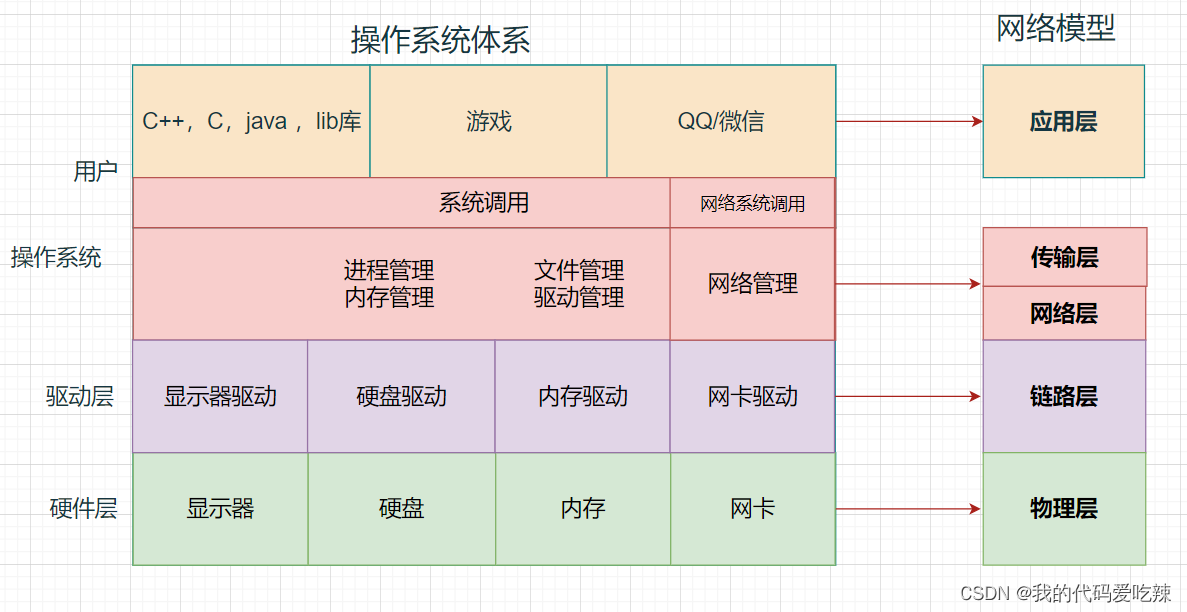

3.TCP protocol

The full name of TCP is "Transmission Control Protocol". As the name suggests, it controls the transmission of data in detail;

When an application layer service calls send or write to put data on the "network", the application assumes the data has been sent as soon as the call returns. That is not the case: the data still has to pass through the transport layer protocol before it goes out. The application layer neither knows nor cares how or when the transport layer sends the data. How to send, when to send, and how to keep the transmitted data reliable are the job of TCP at the transport layer.

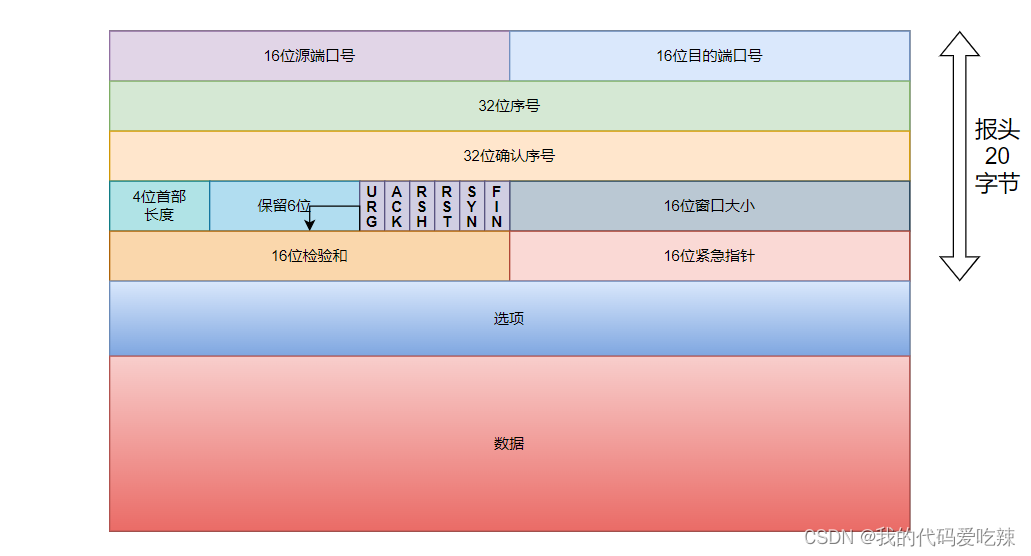

1.TCP protocol format

1. Source/destination port number: indicates which process the data comes from and which process it goes to;

2. 32-bit sequence number / 32-bit acknowledgment number: covered in detail later;

3. 4-bit TCP header length: the number of 32-bit words (4-byte units) in the TCP header, so the maximum TCP header length is 15 * 4 = 60 bytes;

4. 6-bit flags:

- URG: whether the urgent pointer is valid

- ACK: whether the acknowledgment number is valid

- PSH: prompts the receiving application to read the data from the TCP buffer immediately

- RST: the sender asks to reset the connection so that it can be re-established; a segment carrying the RST flag is called a reset segment

- SYN: requests to establish a connection; a segment carrying the SYN flag is called a synchronization segment

- FIN: notifies the other party that the local end is about to close; a segment carrying the FIN flag is called an end segment

5. 16-bit window size: covered later;

6. 16-bit checksum: filled in by the sender and verified by the receiver. It is a 16-bit one's-complement sum rather than a CRC; if verification fails, the data is considered corrupted. The checksum covers the TCP data as well as the TCP header;

7. 16-bit urgent pointer: identifies which part of the data is urgent;

8. Header options (up to 40 bytes): ignored for now;

How does the TCP protocol separate headers and payloads:

First read the 4-bit header length; call it len. The header is len * 4 bytes long. Remove that many bytes from the front of the segment, and the rest is the payload.

Note: without options, the header is 20 bytes long, so the 4-bit header length field holds 20 / 4 = 5, which is 0101 in binary.
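As a rough sketch of this step in C: in the TCP header layout, the 4-bit header length sits in the upper half of byte 12. The function names tcp_header_len and tcp_payload are invented for this example.

```c
#include <stddef.h>
#include <stdint.h>

#define TCP_MIN_HEADER_LEN 20  /* header without options, in bytes */

/* Header length in bytes: the 4-bit field counts 32-bit words. */
size_t tcp_header_len(const uint8_t *segment)
{
    unsigned words = segment[12] >> 4;  /* upper 4 bits of byte 12 */
    return (size_t)words * 4;
}

/* Returns a pointer to the payload and stores its length in *payload_len,
 * or returns NULL if the segment is too short or the field is invalid. */
const uint8_t *tcp_payload(const uint8_t *segment, size_t segment_len,
                           size_t *payload_len)
{
    if (segment_len < TCP_MIN_HEADER_LEN)
        return NULL;

    size_t hlen = tcp_header_len(segment);
    if (hlen < TCP_MIN_HEADER_LEN || hlen > segment_len)
        return NULL;

    *payload_len = segment_len - hlen;  /* the rest is the payload */
    return segment + hlen;
}
```

For a header without options, byte 12 has 0101 in its upper four bits, so tcp_header_len returns 20.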

How TCP achieves upward delivery:

Once the header is read, the destination port is known, and it identifies the application layer process to which the payload is delivered.

2.TCP confirmation response mechanism

Reliability:

TCP is known first of all for reliability, so what counts as reliable and what counts as unreliable?

Unreliable: data loss (packet loss), sending too fast, sending too slowly, reordering, duplication, and so on.

Reliable is simply the opposite.

Acknowledgment: solving the problem of packet loss

To keep TCP reliable, when we send several messages to the peer host, how do we know whether the peer received them? It is simple: as long as the peer sends back a response, we know that it received the data we sent.

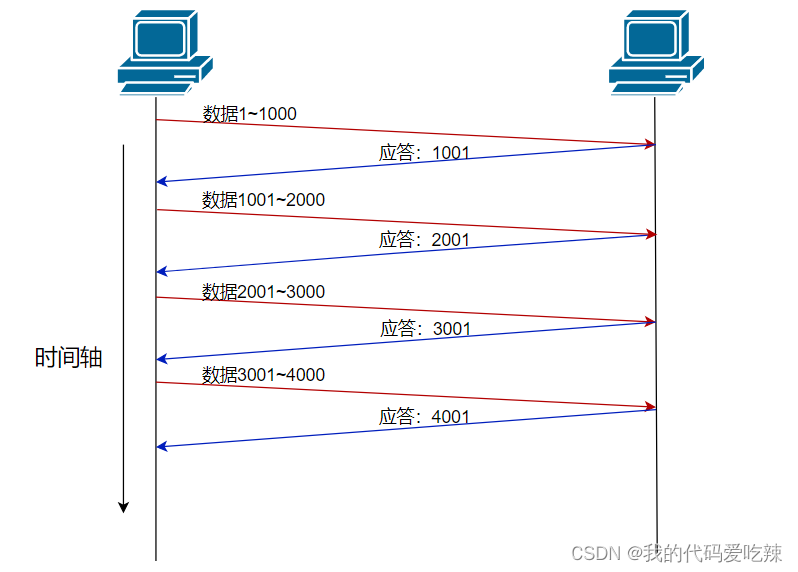

However, sending one message and then waiting for one response is a serial process, and it is inevitably inefficient. In practice, therefore, messages and responses are not exchanged serially but concurrently.


The role of the sequence number and the acknowledgment number:

If a data segment receives no response, and the receiver does not necessarily receive segments in the order the sender sent them, how do we determine which segment was lost?

Until our data has been sent to the peer host, it sits in the TCP send buffer. So what does the TCP buffer look like?

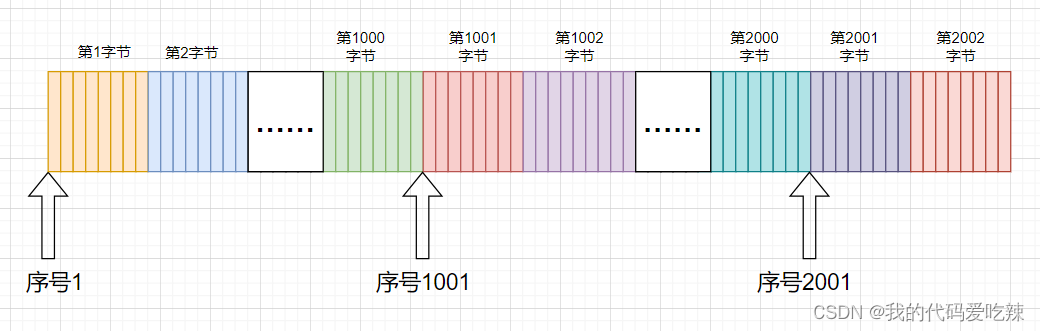

In effect, TCP's send buffer is a char array. An array naturally has indices, so TCP numbers every byte in the buffer, and that number is the sequence number carried in the TCP header. Because every piece of data we send carries a sequence number, the receiver only needs to sort and deduplicate by sequence number. This keeps the data in order and also makes it easy to tell which segments have not arrived.

After the receiver gets a segment and reads its sequence number, it replies to the sender with a segment whose ACK flag is set. The acknowledgment number of that reply is the position right after the last byte of the segment just received, which means "continue sending from this position next time".

The acknowledgment number therefore tells the sender where to send from next. In other words, an acknowledgment number X tells the sender that every byte before X has been received.
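To make the rule "acknowledgment number X means everything before X has arrived" concrete, here is a small C sketch. It is a simplification under stated assumptions: sequence number wraparound is ignored, and the names segment and cumulative_ack are made up for the example.

```c
#include <stddef.h>
#include <stdint.h>

/* One received segment (hypothetical helper type). */
struct segment {
    uint32_t seq;  /* sequence number of the first byte */
    uint32_t len;  /* number of payload bytes */
};

/*
 * Given the next byte the receiver expects and the segments it has received
 * so far (in any order, duplicates allowed), return the acknowledgment
 * number: the first byte that is still missing.
 */
uint32_t cumulative_ack(uint32_t next_expected,
                        const struct segment *segs, size_t count)
{
    int advanced = 1;

    /* Keep scanning until no segment extends the in-order prefix. */
    while (advanced) {
        advanced = 0;
        for (size_t i = 0; i < count; i++) {
            uint32_t end = segs[i].seq + segs[i].len;

            /* The segment covers the next expected byte: move forward. */
            if (segs[i].seq <= next_expected && end > next_expected) {
                next_expected = end;
                advanced = 1;
            }
        }
    }
    return next_expected;
}
```

With segments 1-1000 and 2001-3000 received but 1001-2000 missing, the function returns 1001; once 1001-2000 arrives it returns 3001, and duplicates change nothing.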

3. Timeout retransmission mechanism

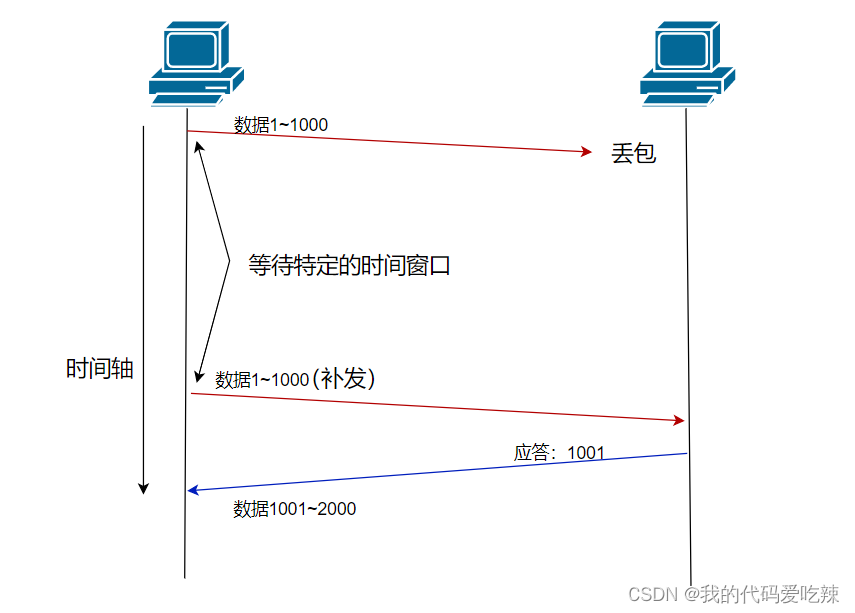

If we send a data segment but receive no response, should we resend it immediately? No. First, note that a missing response generally means one of two things:

- The other party received the data segment, but its response was lost on the way back.

- The other party really did not receive the segment, so naturally it sent no response.

In case 1: although we did not receive the response for the current segment, we may receive the response for a later segment shortly afterwards. Because of how acknowledgment numbers work, a response for a later segment means the other party has received everything up to and including the current segment.

Also in case 1, if the sender does retransmit, host B receives duplicate data. TCP must recognize those packets as duplicates and discard them, and the sequence numbers described above make this deduplication easy.

In case 2: we cannot know that the segment was really lost, so we keep hoping for case 1. If no acknowledgment has arrived after a while, we really do need to resend. In short, we do not resend right away; we wait for a period of time, and if there is still no response, we resend. This is the timeout retransmission mechanism.

So how is the timeout determined?

- In the most ideal situation, find a minimum time to ensure that "the confirmation response must be returned within this time."

- However, the length of this time varies depending on the network environment.

- If the timeout is set too long, it will affect the overall retransmission efficiency;

- If the timeout is set too short, duplicate packets may be sent frequently;

To keep communication fast in any environment, TCP calculates this timeout dynamically.

- In Linux (and likewise in BSD Unix and Windows), the timeout is managed in units of 500ms, and each retransmission timeout is an integer multiple of 500ms.

- If there is still no response after one retransmission, wait 2*500ms before retransmitting again.

- If there is still no response, wait 4*500ms before the next retransmission, and so on, growing exponentially.

- After a certain number of retransmissions, TCP concludes that the network or the peer host is faulty and forcibly closes the connection.
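The doubling schedule above can be written down in a few lines of C. This follows the simplified 500ms model from the list, not the kernel's real timeout calculation; the function names and the cap on the exponent are assumptions made for this sketch.

```c
#include <stdint.h>

#define RTO_UNIT_MS   500u  /* base unit from the description above */
#define RTO_MAX_SHIFT 16u   /* assumed cap so the shift cannot overflow */

/* Waiting time before retransmission number `attempt`
 * (0 = first wait, 1 = wait after one retransmission, ...). */
uint32_t retransmit_timeout_ms(unsigned attempt)
{
    if (attempt > RTO_MAX_SHIFT)
        attempt = RTO_MAX_SHIFT;
    return RTO_UNIT_MS << attempt;  /* 500, 1000, 2000, 4000, ... */
}

/* Returns 1 while another retransmission is allowed, 0 once the limit is
 * reached and the connection should be closed. */
int should_retry(unsigned attempt, unsigned max_retries)
{
    return attempt < max_retries;
}
```

A sender loop would call retransmit_timeout_ms(attempt) to arm its timer, increment attempt on each expiry, and give up when should_retry returns 0.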

4. TCP message six-bit flag bit

1.ACK

Description: indicates whether the acknowledgment number is valid. If ACK is set, the segment acknowledges data, that is, it is a response segment.

2.RST

Description: asks to reset the connection so that it can be re-established. For example, when the client drops off because of network jitter and the server does not know it, the two sides disagree about the state of the connection. The client can then send a segment with RST set to request that the connection be re-established.

3. URG

Description: indicates whether the urgent pointer is valid. The urgent pointer is a 16-bit integer. If it is valid, the urgent data does not wait its turn in the receive buffer; it jumps the queue and can be read directly by the upper-layer application. The payload carries 1 byte of urgent data, and the 16-bit urgent pointer marks where that urgent data is located in the payload.

4. SYN

Description: TCP is connection-oriented, so the segment that initiates a TCP connection carries the SYN flag.

5. FIN

Description: when the two parties finish communicating and disconnect, a segment is needed to request the disconnection, and it carries the FIN flag.

6.PSH

Description: if one party finds that the other party's receive buffer is running low on space, it can urge the other party's application layer to read the data from the buffer quickly. Such a segment carries the PSH flag.

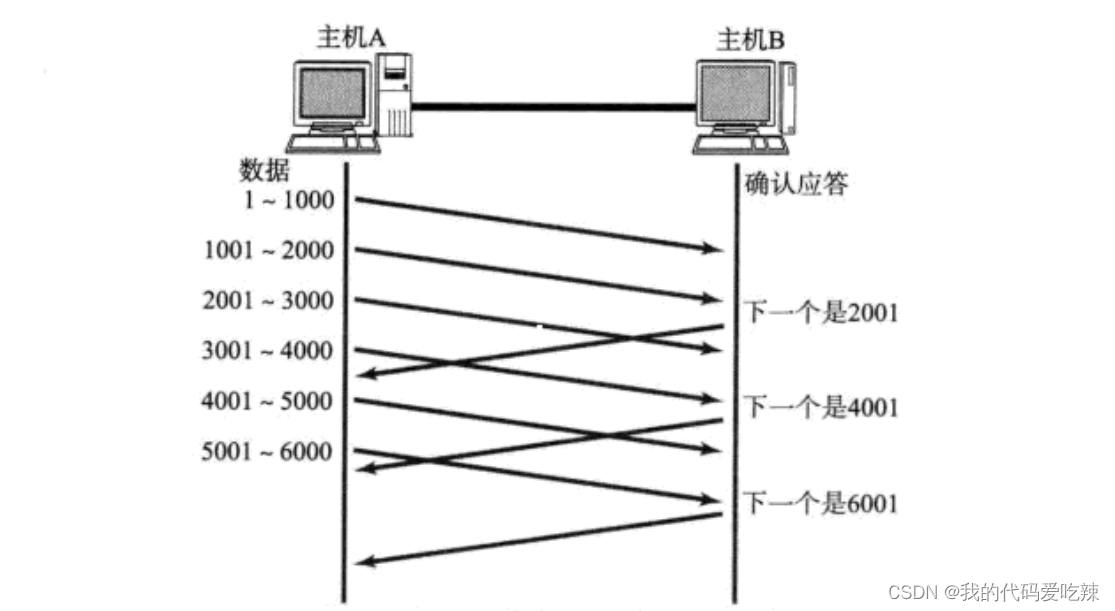

5. Sliding window

We just discussed the acknowledgment strategy: every data segment sent must be acknowledged with an ACK, and only after the ACK arrives is the next data segment sent. This has a major disadvantage: poor performance, especially when the round-trip time is long.

Since this send-one, wait-for-one approach performs poorly, we can improve performance greatly by sending several segments at once (in effect, overlapping the waiting times of multiple segments).

Where is the sliding window? What is it?

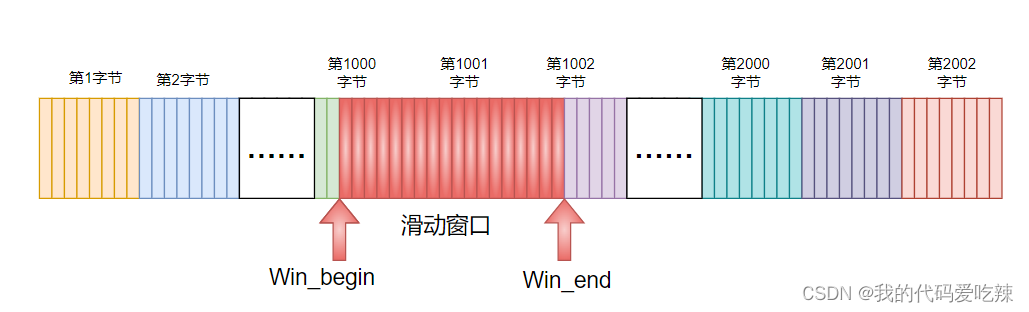

TCP's transmission control applies mainly to the data waiting to be sent, and that data sits in the TCP send buffer, so the sliding window lives in the TCP send buffer. The sliding window is just two pointers.

Can the sliding window grow? Can it shrink? Can it be 0?

It can grow or shrink: just move the two pointers according to how much the other party can receive.

It can be 0, in which case the sender sends no data.

Can the sliding window keep sliding? How does it slide?

The sliding window divides the whole buffer into three parts. To the left of the window is data that has been sent and acknowledged; to the right is data that has not been sent yet. Inside the window is data that can be sent immediately without waiting for any ACK, or that has been sent but not yet acknowledged.

When the response for a sent segment in the window is received, Win_begin can simply move to the right.

Moreover, data to the left of the sliding window has already been received by the other party, so that space is free again as far as the send buffer is concerned. The send buffer can be designed as a ring, so the sliding window never runs off the end.

How is the size of the sliding window updated? On what basis?

The size of the sliding window determines how much data can be sent this time. TCP must not only make sure that data is not lost (and that any loss is detected promptly), it must also make sure that the amount we send each time is something the other party can accept. If the other party's receiving capacity is low, we send less; if it is high, we send more. So we need to know how much space is left in the other party's receive buffer, which is what the 16-bit window size in the TCP header is for.

16-bit window size: used to tell the other party one's own receiving capacity.

Therefore, the size of our sliding window should be the window size advertised by the other party, that is:

- Win_begin = acknowledgment number.

- Win_end = Win_begin + Win_size.
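The two update rules can be sketched as a pair of C helpers. This is a minimal model, assuming byte offsets that never wrap around; the struct send_window, its next_send field, and the function names are illustrative additions, and the check that ignores stale ACKs is an extra guard not spelled out in the text.

```c
#include <stdint.h>

/* Sender-side sliding window over the send buffer (hypothetical type). */
struct send_window {
    uint32_t win_begin;  /* first byte not yet acknowledged */
    uint32_t win_end;    /* one past the last byte allowed to be sent */
    uint32_t next_send;  /* next byte that has not been sent yet */
};

/* Apply an incoming ACK: ack_no is its acknowledgment number and
 * win_size the window size advertised by the receiver. */
void window_on_ack(struct send_window *w, uint32_t ack_no, uint16_t win_size)
{
    /* Win_begin = acknowledgment number (never move backwards). */
    if (ack_no > w->win_begin)
        w->win_begin = ack_no;

    /* Win_end = Win_begin + Win_size. */
    w->win_end = w->win_begin + win_size;

    if (w->next_send < w->win_begin)
        w->next_send = w->win_begin;
}

/* Bytes that may be sent right now without waiting for another ACK. */
uint32_t window_sendable(const struct send_window *w)
{
    return w->win_end > w->next_send ? w->win_end - w->next_send : 0;
}
```

For example, with bytes 1-4000 already sent and a 4000-byte window, an ACK of 1001 slides the window to 1001-5000 and frees room for 1000 more bytes; an advertised window of 0 leaves nothing sendable.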

What happens if data packets are lost in the sliding window?

If data is lost, the lost data must be at the front of the window, because data whose response has been received is removed from the window. So when a segment is lost, just resend the first segment in the window.

Notes:

The window size is the maximum amount of data that can be sent without waiting for an acknowledgment. In the figure above, the window size is 4000 bytes (four segments). The first four segments are sent directly, without waiting for any ACK;

After the first ACK is received, the sliding window moves forward and the next segment is sent, and so on;

To maintain the sliding window, the operating system kernel sets up a send buffer that records which data has not been acknowledged yet; only acknowledged data can be deleted from the buffer. The larger the window, the higher the network throughput;

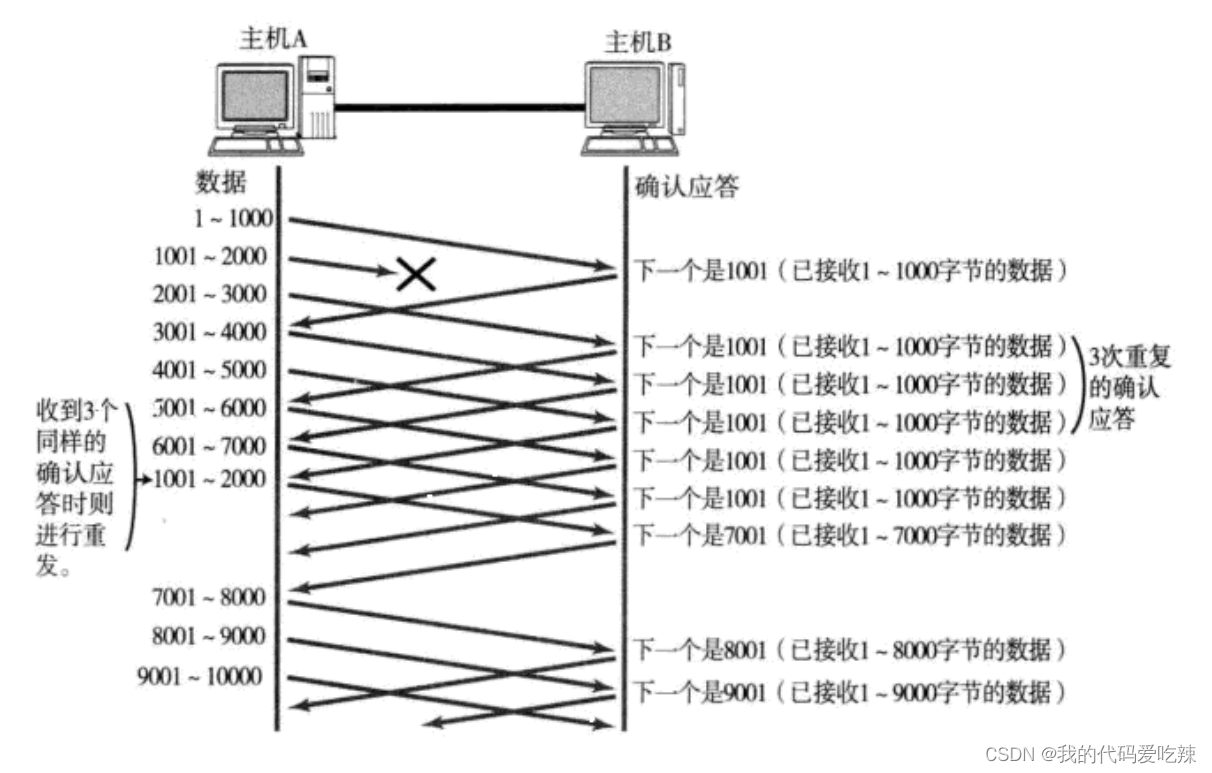

Fast retransmit:

- When a segment is lost, the sender keeps receiving ACKs such as 1001, as if the receiver were reminding it "what I want is 1001";

- If the sending host receives the same "1001" response three times in a row, it resends the corresponding data, 1001 - 2000;

- Once the receiver gets 1001 - 2000, the ACK it returns is 7001, because it had in fact already received 2001 - 7000, which were held in the receive buffer of the receiver's operating system kernel;

This mechanism is called "high-speed retransmission control" (also called "fast retransmit").
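The "same ACK three times in a row" rule can be modelled with a small counter. This sketch follows the description in the text literally; real TCP stacks define the duplicate threshold slightly differently, so the constant below is an assumption, and the names ack_tracker and fast_retransmit_on_ack are made up.

```c
#include <stdint.h>

#define SAME_ACK_THRESHOLD 3u  /* per the text: same ACK three times in a row */

/* Remembers the last acknowledgment number seen (hypothetical type).
 * Initialize with {0, 0}. */
struct ack_tracker {
    uint32_t last_ack;
    unsigned count;  /* how many times in a row last_ack has been seen */
};

/* Feed one incoming acknowledgment number. Returns 1 exactly when the
 * threshold is reached, meaning the segment starting at ack_no should be
 * resent immediately; returns 0 otherwise. */
int fast_retransmit_on_ack(struct ack_tracker *t, uint32_t ack_no)
{
    if (t->count > 0 && ack_no == t->last_ack) {
        t->count++;
    } else {
        t->last_ack = ack_no;  /* a new acknowledgment number: start over */
        t->count = 1;
    }
    return t->count == SAME_ACK_THRESHOLD;
}
```

In the example from the list, the third ACK carrying 1001 makes the function return 1, the sender resends 1001 - 2000, and the following ACK of 7001 resets the counter.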

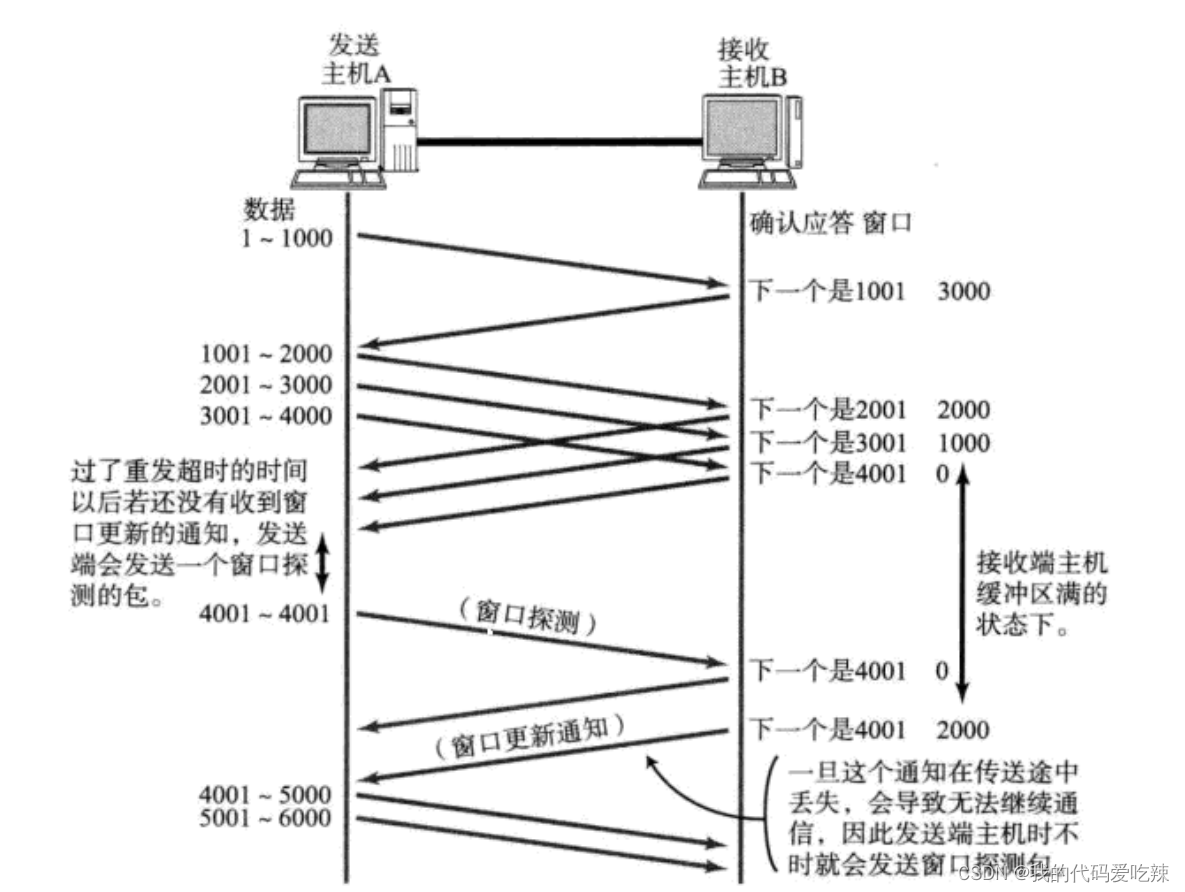

6. Flow control

The receiver can only process data at a limited speed. If the sender sends too fast, the receiver's buffer fills up. If the sender keeps sending at that point, packets are dropped, which in turn triggers retransmissions and a chain of further problems.

Therefore, TCP lets the receiver's processing capacity determine how fast the sender sends. This mechanism is called flow control;

- The receiver puts the amount of buffer space it has available into the "window size" field of the TCP header and notifies the sender through the ACK;

- The larger the window size field, the higher the network throughput;

- Once the receiver finds that its buffer is almost full, it sets the window size to a smaller value and notifies the sender;

- After the sender receives this window, it slows down, that is, it shrinks its sliding window.

- If the receiver's buffer is full, it sets the window to 0, and the sender's sliding window becomes 0. The sender then stops sending data, but it must periodically send a window probe segment so that the receiver can tell it the current window size.

How does the receiver tell the sender the window size? Recall that the TCP header has a 16-bit window field that stores the window size. That raises a question: the largest 16-bit number is 65535, so is the maximum TCP window 65535 bytes?

In fact, the 40-byte options area of the TCP header can also carry a window scale factor M. The actual window size is the value of the window field shifted left by M bits;
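The shift is easy to show in C. A tiny sketch: the function name effective_window is made up, and the clamp to 14 reflects the largest scale factor allowed by the window scale option (RFC 7323).

```c
#include <stdint.h>

#define MAX_WINDOW_SHIFT 14u  /* largest scale factor the option allows */

/* Actual window in bytes: the 16-bit window field shifted left by M bits. */
uint32_t effective_window(uint16_t window_field, unsigned shift_m)
{
    if (shift_m > MAX_WINDOW_SHIFT)
        shift_m = MAX_WINDOW_SHIFT;
    return (uint32_t)window_field << shift_m;
}
```

With M = 0 the window is at most 65535 bytes; with M = 7, a field value of 1000 already stands for 128000 bytes.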

7.Congestion control

With the sliding window as a powerful tool, TCP can send large amounts of data efficiently and reliably. However, sending a large amount of data right at the start can still cause problems.

There are many computers on the network, and the network may already be fairly congested. Sending a large amount of data rashly, without knowing the current state of the network, is likely to make things worse.

One might object: the few thousand packets I send are a drop in the bucket for the whole network, so how could they make congestion worse?

Keep in mind that there is far more than one host on the Internet. A large number of hosts are sending packets into the network at any moment, and they all use TCP. So when the network is congested, as long as TCP makes each host slow down, all the hosts on the network send less data, and the pressure on the network gradually eases.

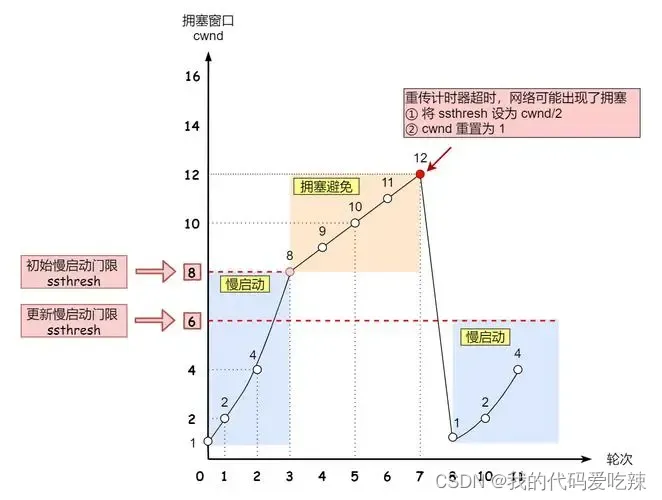

TCP introduces the slow start mechanism: first send a small amount of data to probe the path and learn how congested the network currently is, and then decide how fast to transfer data;

- This introduces a concept called the congestion window

- When sending starts, the congestion window size is set to 1;

- Each time an ACK is received, the congestion window is increased by 1;

- Each time a data packet is sent, the congestion window is compared with the window size advertised by the receiving host, and the smaller of the two is used as the actual sliding window. This is because sending is now limited not only by the other party's receiving capacity but also by the current level of network congestion; taking the smaller value ensures that congestion is not made worse and that the other party can still accept the data.

With the rule above, the congestion window grows exponentially. "Slow start" only means that it starts slowly; the growth rate is very fast.

- To keep it from growing too fast, the congestion window cannot simply keep doubling.

- This introduces a threshold called the slow start threshold

- When the congestion window exceeds this threshold, it no longer grows exponentially but linearly.

- When TCP starts, the slow start threshold is equal to the maximum window size;

- On each timeout retransmission, the slow start threshold is set to half of the congestion window at that moment, and the congestion window is reset to 1.
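The growth rules can be put together in a small C model. It is a sketch under several assumptions: the window is counted in segments, it is updated once per round trip (one ACK per segment in flight doubles the window in slow start), the threshold is halved relative to the congestion window at the time of the timeout (as in standard TCP), and growth is capped at the threshold when slow start would overshoot it. All names are made up for the example.

```c
#include <stdint.h>

/* Sender-side congestion state, counted in segments (hypothetical type). */
struct congestion_state {
    uint32_t cwnd;      /* congestion window */
    uint32_t ssthresh;  /* slow start threshold */
};

void cc_init(struct congestion_state *s, uint32_t initial_ssthresh)
{
    s->cwnd = 1;  /* sending starts with a congestion window of 1 */
    s->ssthresh = initial_ssthresh;
}

/* One round trip in which every segment in flight was acknowledged. */
void cc_on_round_trip(struct congestion_state *s)
{
    if (s->cwnd < s->ssthresh) {
        /* Slow start: exponential growth, capped at the threshold. */
        if (s->cwnd > s->ssthresh / 2)
            s->cwnd = s->ssthresh;
        else
            s->cwnd *= 2;
    } else {
        s->cwnd += 1;  /* past the threshold: linear growth */
    }
}

/* A retransmission timeout fired: treat it as congestion. */
void cc_on_timeout(struct congestion_state *s)
{
    s->ssthresh = s->cwnd / 2;
    if (s->ssthresh < 2)
        s->ssthresh = 2;  /* assumed lower bound for the threshold */
    s->cwnd = 1;
}

/* Actual sliding window: the smaller of the congestion window and the
 * window advertised by the receiver (both in segments here). */
uint32_t cc_send_window(const struct congestion_state *s,
                        uint32_t receiver_window)
{
    return s->cwnd < receiver_window ? s->cwnd : receiver_window;
}
```

Starting from a threshold of 16, the window goes 1, 2, 4, 8, 16, 17, 18, and so on; a timeout at 24 sets the threshold to 12 and the window back to 1.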

With a small amount of packet loss, we only trigger a timeout retransmission; with a large amount of packet loss, we conclude that the network is congested;

When TCP communication starts, network throughput rises gradually; when network congestion occurs, throughput drops immediately;

In the end, congestion control is a compromise: TCP wants to deliver data to the other party as quickly as possible, but it must avoid putting too much pressure on the network.

The process of TCP congestion control is like the feeling of being in love

8. Delayed response

If the host receiving the data returns an ACK immediately, the window it reports may be small.

- Suppose the receiver's buffer is 1M and it receives 500K of data at once; if it responds immediately, the window it returns is 500K;

- In practice, though, the receiver may process data very quickly and consume those 500K from the buffer within 10ms;

- In that case the receiver is nowhere near its limit, and it could keep up even with a larger window;

- If the receiver waits a little before responding, for example 200ms, the window size it returns is 1M;

Remember: the larger the window, the greater the network throughput and the higher the transmission efficiency. Our goal is to improve transmission efficiency as much as possible while keeping the network free of congestion:

- So can every packet be acknowledged with a delay? Definitely not;

- Quantity limit: respond once every N packets;

- Time limit: respond once the maximum delay time is exceeded;

The specific number and timeout vary by operating system; typically N is 2 and the timeout is 200ms;
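The two limits amount to a simple decision function. A sketch only, using the typical values mentioned above (N = 2, 200ms) as constants; the function name and parameters are made up and real stacks are more involved.

```c
#include <stdint.h>

#define DELAYED_ACK_EVERY_N  2u    /* respond once every N packets */
#define DELAYED_ACK_MAX_MS   200u  /* maximum delay before responding */

/* Decide whether the receiver should send an ACK now.
 * unacked_segments: segments received since the last ACK was sent.
 * ms_waiting: time elapsed since the oldest of those segments arrived. */
int should_send_ack(unsigned unacked_segments, uint32_t ms_waiting)
{
    if (unacked_segments == 0)
        return 0;                       /* nothing to acknowledge */
    if (unacked_segments >= DELAYED_ACK_EVERY_N)
        return 1;                       /* quantity limit reached */
    return ms_waiting >= DELAYED_ACK_MAX_MS;  /* time limit reached */
}
```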

9. Piggybacking on responses

Building on delayed responses, we find that in many cases the client and server also exchange messages back and forth at the application layer. For example, the client says "How are you" to the server, and the server replies "Fine, thank you";

In that case, the ACK can hitch a ride and travel back to the client together with the server's "Fine, thank you".

Put simply: the response is not just a response; it also carries other information. This is called a piggybacked response.

10. Oriented to byte stream

When a TCP socket is created, a send buffer and a receive buffer are created for it in the kernel;

- When write is called, the data is first written into the send buffer;

- If the data is too long, it is split into multiple TCP packets and sent out;

- If the data is too short, it waits in the buffer until enough has accumulated, or is sent at some other appropriate time;

- When data is received, it travels from the network card driver to the kernel's receive buffer;

- The application can then call read to take data from the receive buffer;

- A TCP connection has both a send buffer and a receive buffer, so on this connection you can both read and write data. This is called full duplex;

Because of these buffers, reads and writes in a TCP program do not have to match one-to-one, for example:

- To write 100 bytes, you can call write once with 100 bytes, or call write 100 times with one byte each time;

- To read 100 bytes, you do not need to care how they were written. You can read 100 bytes at once, or read one byte at a time, 100 times;

- It is like flowing water: you can take a lot at once or a little many times, which is why it is called byte-stream oriented.

11. Sticky packet problem

- First, be clear that the "packet" in the sticky packet problem is an application layer data packet.

- The TCP header has no "message length" field like UDP's, but it does have a sequence number field.

- From the transport layer's point of view, TCP segments arrive one by one and are placed in the buffer in sequence-number order.

- From the application layer's point of view, there is only a continuous series of bytes.

- So when the application sees this series of bytes, it cannot tell where one complete application layer packet ends and the next begins.

So how do we avoid the sticky packet problem? It comes down to one sentence: make the boundary between two packets explicit.

- For fixed-length packets, always read a fixed size; for example, if a Request structure has a fixed size, just read sizeof(Request) bytes at a time from the start of the buffer;

- For variable-length packets, agree on a field in the packet header that holds the total packet length, so that the end of the packet is known, as in the network calculator we wrote ourselves.

- For variable-length packets, you can also put an explicit delimiter between packets (the application layer protocol is up to the programmer, as long as the delimiter does not clash with the message body);
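As an illustration of the length-field approach, here is a minimal C sketch. The framing format is an assumption chosen for the example: each packet starts with a 4-byte big-endian header holding the length of the body that follows (rather than the total length). The name frame_next is made up.

```c
#include <stddef.h>
#include <stdint.h>

#define FRAME_HEADER_LEN 4  /* assumed: 4-byte big-endian body length */

/*
 * Inspect the bytes received so far. If a complete packet is available,
 * store the location and length of its body and return the total number of
 * bytes it occupies (header + body), so the caller can drop that many bytes
 * from the front of its buffer. Return 0 if more data is needed.
 */
size_t frame_next(const uint8_t *buf, size_t buf_len,
                  const uint8_t **body, size_t *body_len)
{
    if (buf_len < FRAME_HEADER_LEN)
        return 0;  /* the length field itself is not complete yet */

    uint32_t n = ((uint32_t)buf[0] << 24) | ((uint32_t)buf[1] << 16) |
                 ((uint32_t)buf[2] << 8)  |  (uint32_t)buf[3];

    if (buf_len - FRAME_HEADER_LEN < n)
        return 0;  /* the body has not fully arrived yet */

    *body = buf + FRAME_HEADER_LEN;
    *body_len = n;
    return FRAME_HEADER_LEN + (size_t)n;
}
```

The caller keeps appending whatever read returns to its buffer and calls frame_next in a loop; two packets that arrive stuck together are peeled off one at a time, and a half-received packet simply waits for more bytes.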

Does the UDP protocol also have a "sticky packet problem"?

- No. UDP is datagram-oriented, and receiving a datagram is atomic: either a complete datagram is received, or nothing is. For UDP, as long as the upper layer has not yet taken the data, the UDP length field is still there, and UDP hands data to the application layer one datagram at a time, so the data boundaries are very clear.

- From the application layer's point of view, with UDP you either receive a complete UDP message or none at all. There is no "half a message" case.

12. Connection management mechanism

Under normal circumstances, TCP needs a three-way handshake to establish a connection and a four-way wave to close it.

Server-side state transitions:

- [CLOSED -> LISTEN] After the server calls listen, it enters the LISTEN state and waits for clients to connect.

- [LISTEN -> SYN_RCVD] Once a connection request (synchronization segment) arrives, the connection is placed in the kernel's waiting queue and a SYN+ACK segment is sent to the client.

- [SYN_RCVD -> ESTABLISHED] Once the server receives the client's acknowledgment, it enters the ESTABLISHED state and can read and write data.

- [ESTABLISHED -> CLOSE_WAIT] When the client actively closes the connection (calls close), the server receives the end segment, returns an acknowledgment segment, and enters CLOSE_WAIT.

- [CLOSE_WAIT -> LAST_ACK] Being in CLOSE_WAIT means the server is getting ready to close the connection (it still needs to finish processing earlier data). When the server actually calls close, it sends a FIN to the client, enters the LAST_ACK state, and waits for the last ACK (the client's acknowledgment of that FIN).

- [LAST_ACK -> CLOSED] The server receives the ACK for its FIN and closes the connection completely.

Client state transitions:

- [CLOSED -> SYN_SENT] The client calls connect and sends a synchronization segment.

- [SYN_SENT -> ESTABLISHED] If the connect call succeeds, the client enters the ESTABLISHED state and starts reading and writing data.

- [ESTABLISHED -> FIN_WAIT_1] When the client actively calls close, it sends an end segment to the server and enters FIN_WAIT_1.

- [FIN_WAIT_1 -> FIN_WAIT_2] When the client receives the server's acknowledgment of the end segment, it enters FIN_WAIT_2 and starts waiting for the server's end segment.

- [FIN_WAIT_2 -> TIME_WAIT] The client receives the end segment sent by the server, enters TIME_WAIT, and sends the last ACK.

- [TIME_WAIT -> CLOSED] The client waits for 2MSL (Maximum Segment Lifetime, the longest time a segment can survive in the network) before entering the CLOSED state.
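The server-side and client-side transitions listed above fit into one small transition function. This is a sketch that covers only the transitions named in the two lists; the ST_ and EV_ names are invented for the example, and anything not listed leaves the state unchanged.

```c
/* Connection states from the lists above (ST_ prefix is illustrative). */
enum tcp_state {
    ST_CLOSED, ST_LISTEN, ST_SYN_SENT, ST_SYN_RCVD, ST_ESTABLISHED,
    ST_FIN_WAIT_1, ST_FIN_WAIT_2, ST_TIME_WAIT, ST_CLOSE_WAIT, ST_LAST_ACK
};

/* Events that drive the transitions (hypothetical names). */
enum tcp_event {
    EV_LISTEN,        /* application calls listen */
    EV_CONNECT,       /* application calls connect, SYN is sent */
    EV_RECV_SYN,      /* synchronization segment received */
    EV_RECV_SYN_ACK,  /* SYN+ACK received, connect succeeds */
    EV_RECV_ACK,      /* acknowledgment received */
    EV_CLOSE,         /* application calls close, FIN is sent */
    EV_RECV_FIN,      /* end segment received */
    EV_2MSL_ELAPSED   /* 2MSL wait is over */
};

/* Returns the next state; unlisted combinations keep the current state. */
enum tcp_state tcp_next_state(enum tcp_state s, enum tcp_event e)
{
    switch (s) {
    case ST_CLOSED:
        if (e == EV_LISTEN)  return ST_LISTEN;
        if (e == EV_CONNECT) return ST_SYN_SENT;
        break;
    case ST_LISTEN:      if (e == EV_RECV_SYN)     return ST_SYN_RCVD;    break;
    case ST_SYN_RCVD:    if (e == EV_RECV_ACK)     return ST_ESTABLISHED; break;
    case ST_SYN_SENT:    if (e == EV_RECV_SYN_ACK) return ST_ESTABLISHED; break;
    case ST_ESTABLISHED:
        if (e == EV_CLOSE)    return ST_FIN_WAIT_1;  /* active close */
        if (e == EV_RECV_FIN) return ST_CLOSE_WAIT;  /* passive close */
        break;
    case ST_FIN_WAIT_1:  if (e == EV_RECV_ACK)     return ST_FIN_WAIT_2;  break;
    case ST_FIN_WAIT_2:  if (e == EV_RECV_FIN)     return ST_TIME_WAIT;   break;
    case ST_TIME_WAIT:   if (e == EV_2MSL_ELAPSED) return ST_CLOSED;      break;
    case ST_CLOSE_WAIT:  if (e == EV_CLOSE)        return ST_LAST_ACK;    break;
    case ST_LAST_ACK:    if (e == EV_RECV_ACK)     return ST_CLOSED;      break;
    }
    return s;
}
```

Feeding the events of a normal session in order walks the server through LISTEN, SYN_RCVD, ESTABLISHED, CLOSE_WAIT, LAST_ACK and back to CLOSED, and the client through SYN_SENT, ESTABLISHED, FIN_WAIT_1, FIN_WAIT_2, TIME_WAIT and back to CLOSED.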

What is a connection?

A host inevitably has a large number of connections open at the same time, and the operating system has to manage them, so the kernel keeps a data structure for each connection plus structures for managing them all (conceptually, struct link{......};). Maintaining a connection therefore costs memory and CPU resources.

Why does establishing a connection take a three-way handshake? Would two, four, or five handshakes work?

If a connection could be established with two handshakes, the client would only need to send one SYN for the server to consider the connection established. The server would then commit a full connection, which costs CPU and memory, in response to a single cheap packet. If one machine sent a large number of SYNs, the server would quickly be overloaded; this is a SYN flood attack. Any even number of handshakes has this kind of problem, because the server is the side that commits first. With an odd number the client commits first, and three is the smallest odd number of handshakes that lets both sides confirm they can send and receive.

The second handshake is a piggybacked acknowledgment: the server's own SYN and its ACK of the client's SYN travel in the same segment.
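To make the piggybacking concrete, here is a minimal sketch that builds the three handshake segments from two initial sequence numbers. The Segment struct and the three_way_handshake function are hypothetical names for this illustration; the flag values are the SYN and ACK bit positions in the TCP header's flag field.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// SYN and ACK bits as they appear in the TCP header's flag field.
constexpr std::uint8_t FLAG_SYN = 0x02;
constexpr std::uint8_t FLAG_ACK = 0x10;

// Simplified view of a segment: only the fields the handshake uses.
struct Segment {
    bool from_client;
    std::uint8_t flags;
    std::uint32_t seq;
    std::uint32_t ack;  // meaningful only when FLAG_ACK is set
};

// Builds the three handshake segments for the given initial sequence numbers.
std::vector<Segment> three_way_handshake(std::uint32_t client_isn,
                                         std::uint32_t server_isn) {
    return {
        // 1: client -> server, SYN
        {true, FLAG_SYN, client_isn, 0},
        // 2: server -> client, SYN and ACK piggybacked in one segment
        {false, FLAG_SYN | FLAG_ACK, server_isn, client_isn + 1},
        // 3: client -> server, ACK of the server's SYN
        {true, FLAG_ACK, client_isn + 1, server_isn + 1},
    };
}
```

Without piggybacking, the server would send its ACK and its SYN as two separate segments, and the handshake would take four segments instead of three.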

Several states when disconnecting:

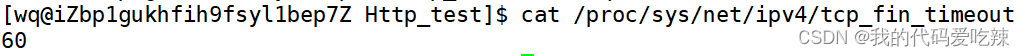

1.TIME_WAIT

We can see from the figure that the party that actively disconnects will enter a TIME_WAIT state.

The TCP protocol stipulates that the party actively closing the connection must stay in the TIME_WAIT state for two MSL (maximum segment lifetime) periods before returning to the CLOSED state.

RFC 793 specifies MSL as two minutes, but each operating system implements it differently. The default configuration value on CentOS 7 is 60s;

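You can run cat /proc/sys/net/ipv4/tcp_fin_timeout to view this value.

This kernel file can be modified (you must be the root user), but the modification has no effect on how long TIME_WAIT lasts. Strictly speaking, on Linux tcp_fin_timeout controls how long an orphaned connection stays in FIN_WAIT_2, while the TIME_WAIT duration is a constant compiled into the kernel (60s).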

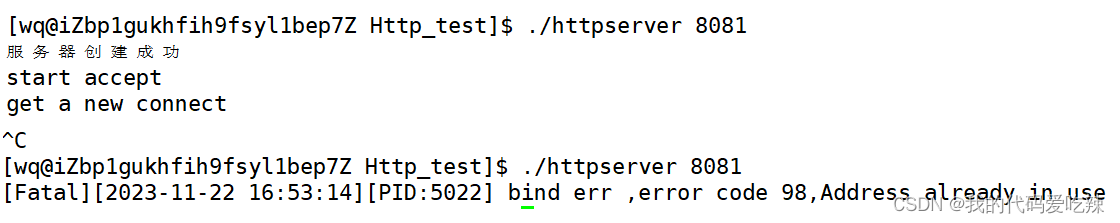

Test:

Start the service, press Ctrl+C to stop it, and start it again:

Checking the connections on port 8081 shows that the old connection is still occupying the port, which is why bind failed.

Why does TIME_WAIT last 2MSL?

MSL is the maximum lifetime of a TCP segment, so keeping TIME_WAIT for 2MSL achieves two things:

- It ensures that any segments still in flight or delayed in either direction have expired (otherwise a server that restarts immediately could receive late data from the previous process, and that data would most likely be wrong);

- It also guarantees, in theory, that the last segment arrives reliably (if the final ACK is lost, the server resends its FIN; although the client process is gone, the TCP connection still exists in the kernel, so the final ACK can be resent);

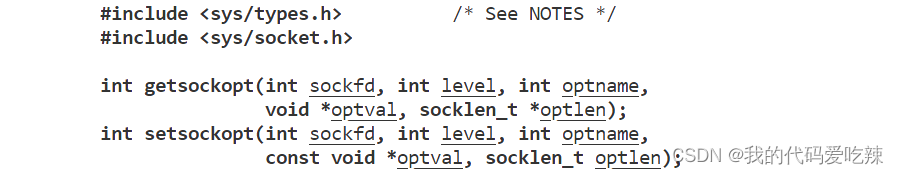

Methods to solve bind failure caused by TIME_WAIT state:

The server cannot listen on the port again until its old TCP connections are completely closed, which is unreasonable in some cases:

- The server needs to handle a very large number of client connections (the lifetime of each connection may be short, but there are a large number of client requests every second).

- At this time, if the server actively closes the connection (for example, some clients are inactive and need to be actively cleaned up by the server), a large number of TIME_WAIT connections will be generated.

- Because the request volume is large, there may be a large number of TIME_WAIT connections, each occupying a communication five-tuple (source IP, source port, destination IP, destination port, protocol). The server's IP, port, and protocol are fixed, so if a new client connection has the same IP and port number as a connection still in TIME_WAIT, problems will occur.
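The five-tuple collision described in the last bullet can be modelled with a set of tuples. This is a toy sketch, not how the kernel stores connections: TimeWaitTable and its methods are hypothetical names, and the addresses in the usage note are made-up examples.

```cpp
#include <cassert>
#include <cstdint>
#include <set>
#include <string>
#include <tuple>

// (source IP, source port, destination IP, destination port, protocol)
using FiveTuple = std::tuple<std::string, std::uint16_t,
                             std::string, std::uint16_t, std::string>;

// Toy model of the connections that are currently lingering in TIME_WAIT.
class TimeWaitTable {
public:
    // A connection enters TIME_WAIT after the active close.
    void add(const FiveTuple& t) { lingering_.insert(t); }

    // The connection leaves TIME_WAIT once 2MSL has elapsed.
    void expire(const FiveTuple& t) { lingering_.erase(t); }

    // A new connection conflicts if the identical five-tuple is still lingering.
    bool conflicts(const FiveTuple& t) const { return lingering_.count(t) != 0; }

private:
    std::set<FiveTuple> lingering_;
};
```

With a lingering entry such as ("10.0.0.5", 40000, "10.0.0.1", 8081, "tcp"), a new connection from the same client IP and port conflicts, while the same client using port 40001 does not, because only one element of the tuple has to differ.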

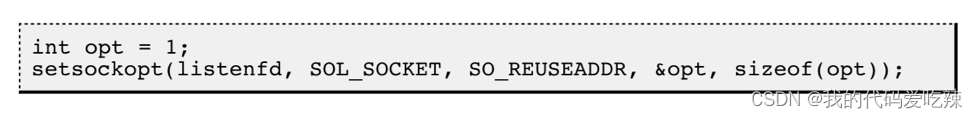

Use setsockopt() to set the socket's SO_REUSEADDR option to 1. This allows creating multiple sockets with the same port number but different IP addresses, and it lets bind succeed while old connections on the port are still in TIME_WAIT.

    void Bind()
    {
        // Allow bind to succeed without waiting for TIME_WAIT connections to expire
        int opt = 1;
        setsockopt(_listensock, SOL_SOCKET, SO_REUSEADDR, &opt, sizeof(opt));

        struct sockaddr_in host;
        memset(&host, 0, sizeof(host)); // zero the padding bytes (needs <cstring>)
        host.sin_family = AF_INET;
        host.sin_port = htons(_port);      // port in network byte order
        host.sin_addr.s_addr = INADDR_ANY; // #define INADDR_ANY 0x00000000
        socklen_t hostlen = sizeof(host);

        int n = bind(_listensock, (struct sockaddr *)&host, hostlen);
        if (n == -1)
        {
            Logmessage(Fatal, "bind err ,error code %d,%s", errno, strerror(errno));
            exit(BING_ERR);
        }
    }

2.CLOSE_WAIT state

When the peer closes its fd first and sends a FIN, the end that receives the FIN replies with an ACK and enters the CLOSE_WAIT state. Only after it closes its own communication file descriptor does it send a FIN and enter the LAST_ACK state. However, closing that file descriptor is up to the programmer. What happens if we never close it?

Disconnect the client:

At this point the server is in the CLOSE_WAIT state. Compared with the four-way wave diagram, the four-way wave has not completed correctly.

Summary: a large number of CLOSE_WAIT connections on a server means the server is not closing its sockets correctly, so the four-way wave never completes. This is a bug; adding the missing close call fixes it.

13. The second parameter of listen

const static int backlog = 1;

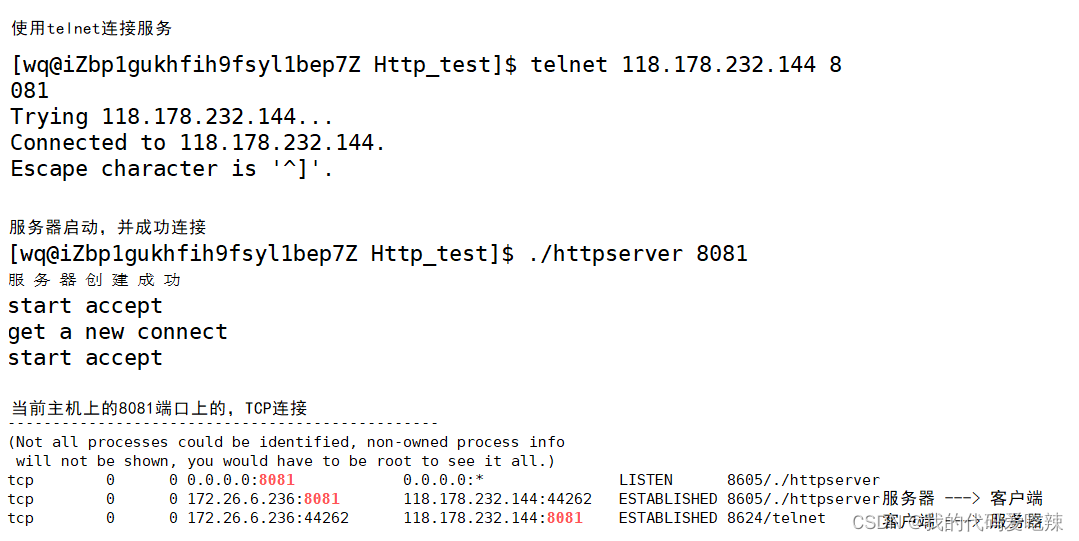

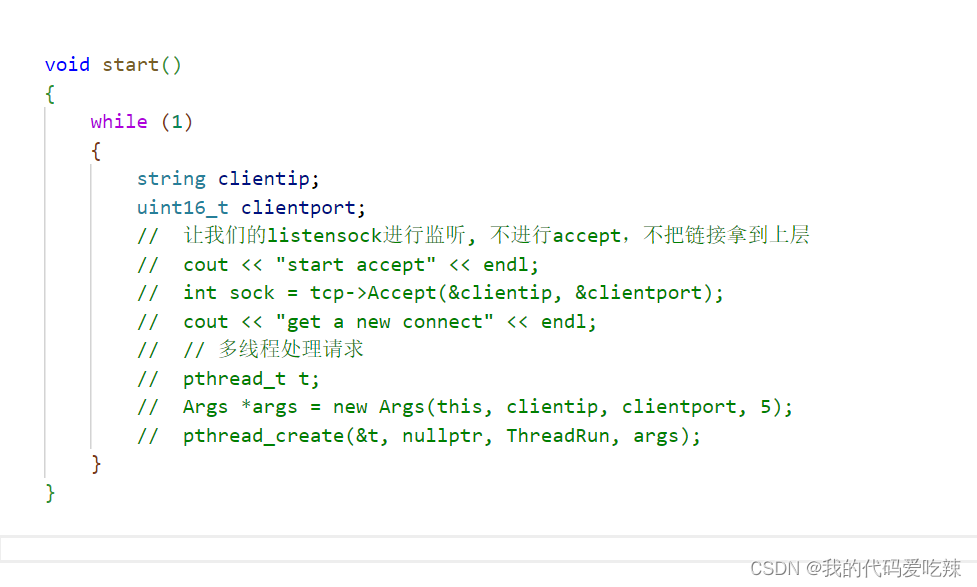

int n = listen(_listensock, backlog);

Make the server only listen, without calling accept: the kernel completes the connection, but the application layer never takes it off the queue:

Use telnet to connect:

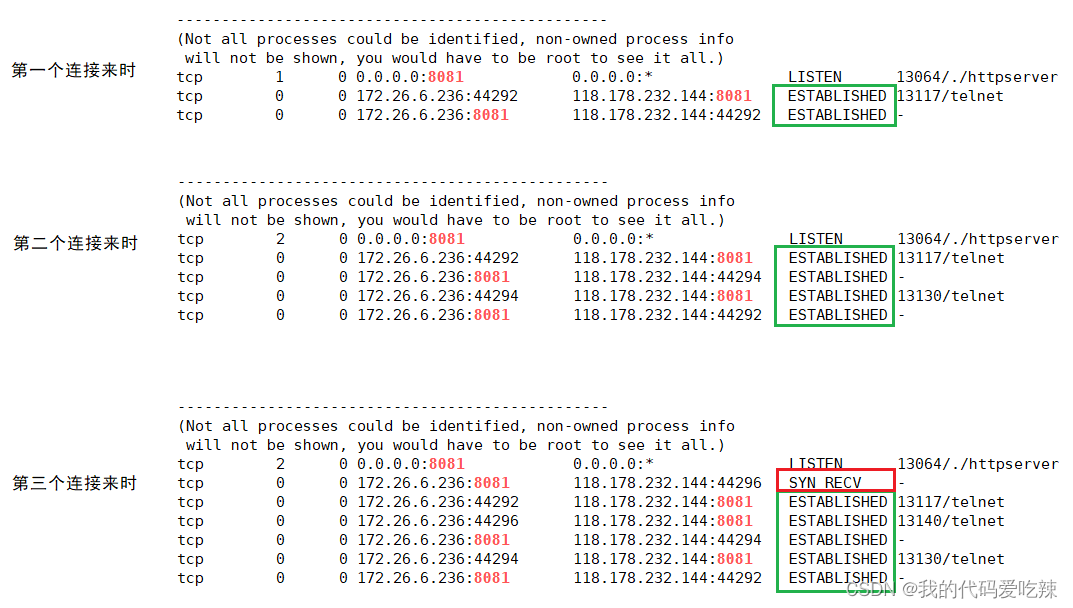

Start 2 clients that connect to the server at the same time and check the server with netstat: everything is normal.

However, when a third client is started, the server cannot accept the third connection, and its state is abnormal.

The client's state looks normal, but on the server side the connection is in the SYN_RECV state instead of the ESTABLISHED state.

This is because the Linux kernel protocol stack uses two queues to manage TCP connections:

- 1. Half-open connection queue (holds requests whose handshake has not finished, i.e. in the SYN_RECV state)

- 2. Full connection queue (accept queue) (holds connections that are in the ESTABLISHED state but that the application layer has not yet accepted)

The length of the full connection queue is affected by the second parameter of listen.

When the full connection queue is full, new connections cannot enter the ESTABLISHED state.

The experiment above shows that the length of this queue is the second parameter of listen + 1.

Note: the second parameter of listen + 1 is not the maximum number of connections the server can handle; it is the maximum number of established connections that can wait in the queue before being handed to the application layer.
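The behaviour seen in the experiment can be imitated with a small in-memory model. This is a simulation only, with hypothetical names (AcceptQueue, on_handshake_complete); the backlog + 1 capacity is taken from the experiment above on Linux and may differ on other systems or kernel configurations.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>

// Toy model of the full connection (accept) queue.
class AcceptQueue {
public:
    // Capacity follows the experiment above: listen's second parameter + 1.
    explicit AcceptQueue(int backlog)
        : capacity_(static_cast<std::size_t>(backlog) + 1) {
        assert(backlog >= 0);
    }

    // Called when a handshake would complete. Returns false when the queue
    // is full, i.e. the connection cannot become ESTABLISHED on the server.
    bool on_handshake_complete(int conn_id) {
        if (established_.size() >= capacity_) return false;
        established_.push_back(conn_id);
        return true;
    }

    // Models accept(): hands the oldest waiting connection to the application.
    // Returns -1 if nothing is waiting.
    int accept() {
        if (established_.empty()) return -1;
        int id = established_.front();
        established_.pop_front();
        return id;
    }

    std::size_t size() const { return established_.size(); }

private:
    std::size_t capacity_;
    std::deque<int> established_;
};
```

With backlog = 1, the first two connections are queued and the third is refused until the application calls accept once, which mirrors the two normal telnet clients and the third one stuck in SYN_RECV.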

14.TCP abnormal situation

- Process termination: when a process terminates, its file descriptors are released and a FIN is still sent, so this is no different from a normal close.

- Machine restart: same situation as process termination.

- Machine powered off / network cable unplugged: the end that is still up believes the connection still exists. As soon as it writes to the connection, it discovers that the connection is gone and the connection is reset. Even without a write, TCP has a built-in keep-alive timer that periodically probes whether the peer is still there; if the peer does not respond, the connection is released.

- In addition, some application layer protocols have similar detection mechanisms. For example, HTTP persistent connections periodically check the peer's state, and QQ periodically tries to reconnect after it is disconnected.
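The keep-alive timer mentioned above is off by default on a socket and has to be enabled per connection. The following sketch assumes Linux, where the TCP_KEEPIDLE, TCP_KEEPINTVL and TCP_KEEPCNT options are available; enable_keepalive is a hypothetical helper name, and suitable timing values depend on the application.

```cpp
#include <cassert>
#include <netinet/in.h>
#include <netinet/tcp.h>
#include <sys/socket.h>

// Turns on TCP keep-alive for fd and tunes its timing (Linux-specific options).
//   idle_sec:     idle time before the first probe is sent
//   interval_sec: time between unanswered probes
//   probe_count:  unanswered probes before the connection is dropped
// Returns true if every option was set successfully.
bool enable_keepalive(int fd, int idle_sec, int interval_sec, int probe_count) {
    int on = 1;
    if (setsockopt(fd, SOL_SOCKET, SO_KEEPALIVE, &on, sizeof(on)) == -1)
        return false;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPIDLE, &idle_sec, sizeof(idle_sec)) == -1)
        return false;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPINTVL, &interval_sec, sizeof(interval_sec)) == -1)
        return false;
    if (setsockopt(fd, IPPROTO_TCP, TCP_KEEPCNT, &probe_count, sizeof(probe_count)) == -1)
        return false;
    return true;
}
```

Calling enable_keepalive(fd, 60, 10, 3) on a connected socket would, under these assumptions, start probing after 60 seconds of silence and give up after three unanswered probes sent 10 seconds apart.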

15.TCP Summary

Why is TCP so complicated? Because it is necessary to ensure reliability while improving performance as much as possible.

Reliability:

- Checksum

- Sequence numbers (in-order arrival)

- Acknowledgment (ACK)

- Timeout retransmission

- Connection management

- Flow control

- Congestion control

Improve performance:

- Sliding window

- Fast retransmission

- Delayed acknowledgment

- Piggybacked acknowledgment

Others:

Timers (timeout retransmission timer, keep-alive timer, TIME_WAIT timer, etc.)

16. Based on TCP application layer protocol

- HTTP

- HTTPS

- SSH

- Telnet

- FTP

- SMTP

Of course, this also includes any custom application layer protocol you define when writing your own TCP program.

4.TCP/UDP comparison

We have said that TCP provides reliable connections, so is TCP always better than UDP? The strengths and weaknesses of TCP and UDP cannot be compared in such simple, absolute terms:

- TCP is used where reliable transmission is required, for example file transfer and important status updates;

- UDP is used in communication fields that require high-speed transmission and real-time performance, such as early QQ, video transmission, etc. In addition, UDP can be used for broadcasting;

In the final analysis, TCP and UDP are both tools for programmers. When to use them and how to use them depends on the specific demand scenarios.

Use UDP to achieve reliable transmission (classic interview question)

Refer to the reliability mechanism of TCP and implement similar logic at the application layer;

For example:

- Introduce sequence numbers to ensure data order;

- Introduce acknowledgments to ensure that the peer has received the data;

- Introduce timeout retransmission: if there is no acknowledgment after a period of time, the data is resent;

- ......
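The three ideas listed above (sequence numbers, acknowledgments, timeout retransmission) can be combined into a stop-and-wait scheme. The sketch below simulates it in memory rather than over real UDP sockets: Packet, Receiver, LossScript and send_all are hypothetical names, and a scripted loss pattern stands in for both the unreliable network and the retransmission timer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <utility>
#include <vector>

struct Packet {
    std::uint32_t seq;
    std::string payload;
};

// Receiver: delivers each sequence number once, in order.
class Receiver {
public:
    // Returns true if an ACK for p.seq is sent back (new data or a duplicate).
    bool on_packet(const Packet& p) {
        if (p.seq == expected_) {  // new in-order data: deliver it
            delivered_.push_back(p.payload);
            ++expected_;
        }
        return p.seq < expected_;  // duplicates are re-ACKed, not redelivered
    }
    const std::vector<std::string>& delivered() const { return delivered_; }

private:
    std::uint32_t expected_ = 0;
    std::vector<std::string> delivered_;
};

// Scripted channel: each call says whether the next transmission is lost.
// Once the script is used up, nothing more is lost.
class LossScript {
public:
    explicit LossScript(std::vector<bool> pattern) : pattern_(std::move(pattern)) {}
    bool next_lost() {
        if (pos_ >= pattern_.size()) return false;
        return pattern_[pos_++];
    }

private:
    std::vector<bool> pattern_;
    std::size_t pos_ = 0;
};

// Stop-and-wait sender: sends one packet, waits for its ACK, and retransmits
// on "timeout" (a lost packet or a lost ACK). Returns the total number of
// transmissions, or 0 if some message was not acknowledged within max_retries.
std::size_t send_all(const std::vector<std::string>& msgs, Receiver& rx,
                     LossScript& loss, int max_retries) {
    std::size_t transmissions = 0;
    for (std::uint32_t seq = 0; seq < msgs.size(); ++seq) {
        bool acked = false;
        for (int attempt = 0; attempt <= max_retries && !acked; ++attempt) {
            ++transmissions;
            if (loss.next_lost()) continue;  // data lost: timer expires
            bool ack_sent = rx.on_packet({seq, msgs[seq]});
            if (!ack_sent || loss.next_lost()) continue;  // ACK lost: timer expires
            acked = true;
        }
        if (!acked) return 0;
    }
    return transmissions;
}
```

Stop-and-wait keeps the sketch short but sends only one packet per round trip; a real design would usually add a sliding window, as TCP does, to improve throughput.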