Over the next few posts, we will introduce some common methods for model interpretability.

Model interpretability: explaining why, given a sample's feature values, the model produces the result it does. In practice it is mostly used when reporting to the boss (especially one without an algorithms background) and to the business side.

Commonly used tree models

The two models used most often are xgboost and lightgbm. I won't go into their underlying principles here; see my earlier blog posts, where I worked through the derivations carefully while preparing for interviews and shared my notes for anyone who finds them useful.

Main contents:

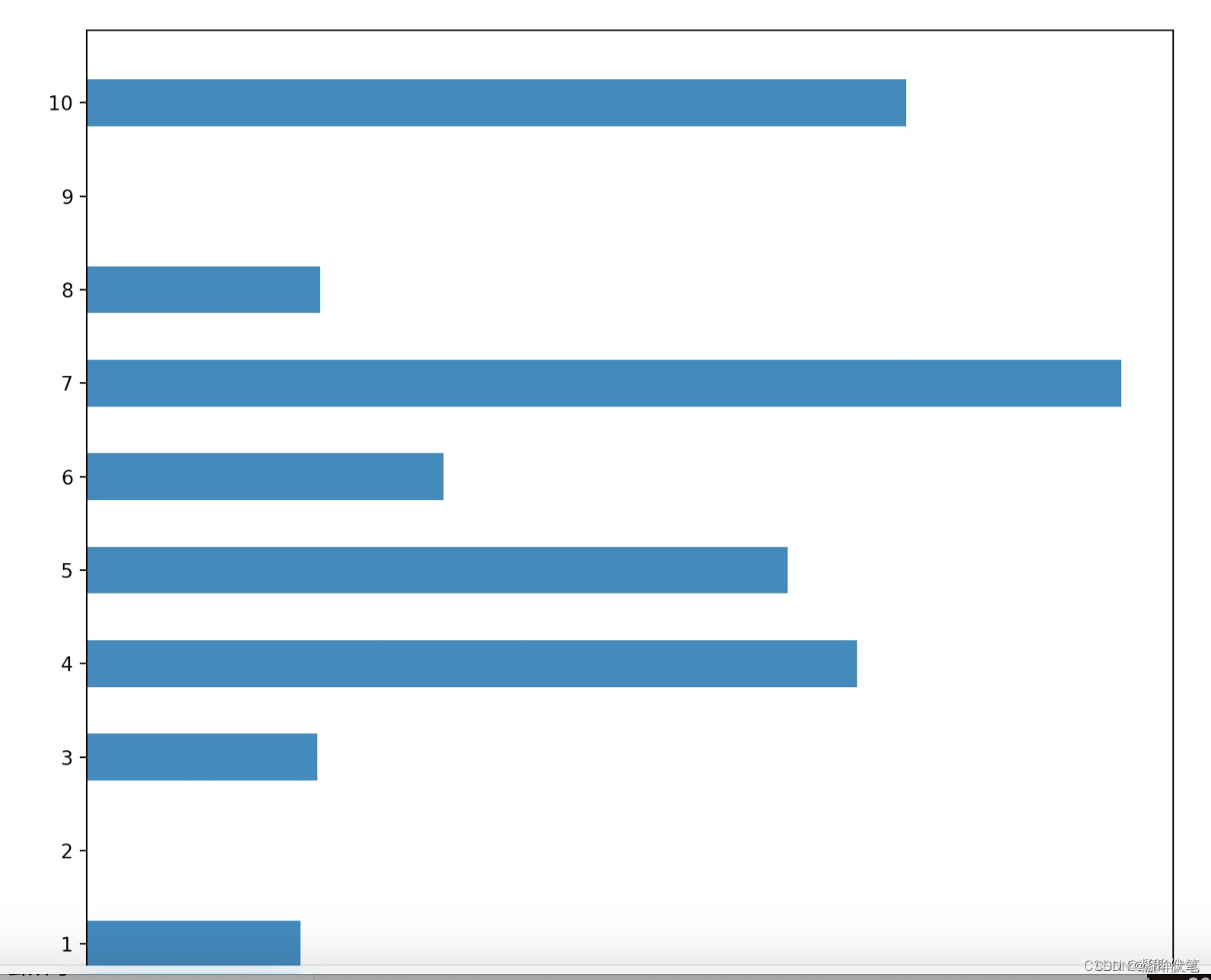

1: Feature importance

After training the model, add `print(model.feature_importances_)` to print the feature importance scores.

The printed result is a bare array of scores, one per feature in column order, which is hard to read on its own.

To make comparison easier, sort the importance scores and label each one with its feature name:

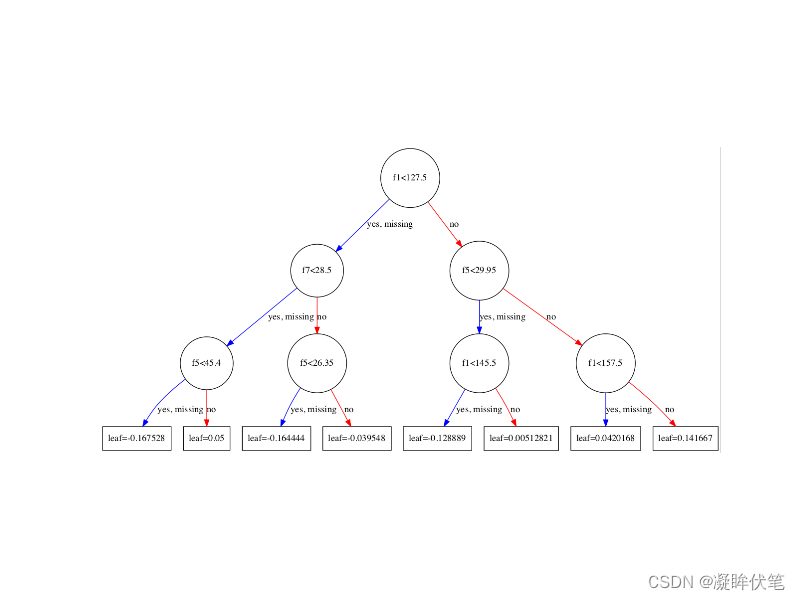

2: Tree structure

You can also inspect the individual trees of the trained model to see which feature each node splits on.

These two methods make it easier to show the boss why changing a particular feature value has such a large effect on the model's predictions. Often the reason is that the feature sits near the root of the trees, so it readily affects the final prediction.

In the next article, we will introduce another method, which shows whether increasing or decreasing a feature's value pushes the model's prediction up or down. Stay tuned~

For a more detailed post on xgb feature importance and feature selection, see: XGBoost learning (6): Output feature importance and filter features (CSDN blog)