Table of contents

2. akaze feature point algorithm

2.4. Advantages and disadvantages

1. Environment

The usage environment of this article is:

- Windows10

- Python 3.9.17

- opencv-python 4.8.0.74

2. akaze feature point algorithm

The feature point detection algorithm AKAZE is an algorithm widely used in the field of image processing. It can extract feature points of images at different scales and has the advantages of scale invariance and rotation invariance. This article will briefly introduce the basic principles, implementation process and performance of the AKAZE algorithm in practical applications.

2.1. Basic principles

The AKAZE algorithm is based on scale space theory and image pyramid. It constructs scale space through nonlinear diffusion filtering and detects key points in scale space. In AKAZE, the detection of key points is achieved through a process called "accelerated nonlinear diffusion", which can quickly generate scale space. In addition, AKAZE also uses the M-LDB descriptor to describe the surrounding area of the feature point.

2.2. Implementation process

- Image preprocessing: First, preprocess the input image, including operations such as grayscale and noise reduction, to improve the accuracy of the algorithm.

- Construct the scale space: Then, construct the scale space through nonlinear diffusion filtering, and detect key points in the scale space. In this process, a method called "accelerated nonlinear diffusion" is used, which can quickly generate scale space.

- Key point detection: In scale space, a region-based method is used to detect key points. These key points correspond to local extreme points in the image, that is, having the maximum or minimum gray value in the surrounding area.

- Descriptor generation: After detecting key points, AKAZE uses M-LDB descriptors to describe the surrounding areas of the feature points. M-LDB descriptor is an improved LDB descriptor, which can better describe the characteristics of images.

- Feature matching: Finally, feature matching is performed by comparing M-LDB descriptors between different images to identify similar areas in the image.

2.3. Practical application

The AKAZE algorithm has shown good performance in practical applications and can be applied to many fields, such as target recognition, image registration, stitching, etc. For example, in target recognition, AKAZE can be used to detect target feature points in images and identify target objects through feature matching. In addition, AKAZE can also be used in image splicing to achieve seamless splicing by aligning feature points in different images.

2.4. Advantages and disadvantages

The AKAZE algorithm has the following advantages:

- Scale invariance: The AKAZE algorithm can extract feature points of images at different scales, thus adapting to images of different scales.

- Rotation invariance: The AKAZE algorithm has rotation invariance and can extract feature points of images at different angles.

- Acceleration performance: Compared with the SIFT algorithm, the AKAZE algorithm uses an accelerated nonlinear diffusion method to construct the scale space, which has faster running speed.

- Robustness: The AKAZE algorithm has strong robustness to noise and interference and can extract more robust feature points.

However, the AKAZE algorithm also has some shortcomings:

- Sensitive to lighting changes: The AKAZE algorithm is more sensitive to lighting changes and may be affected by lighting changes.

- Sensitive to local changes: The AKAZE algorithm is sensitive to local changes, which may lead to false detections or missed detections.

- Requires manual setting of parameters: The AKAZE algorithm requires manual setting of some parameters, such as scale space series, number of iterations to accelerate nonlinear diffusion, etc. The settings of these parameters will affect the performance and accuracy of the algorithm.

In short, the feature point detection algorithm AKAZE is an effective image feature extraction method with the advantages of scale invariance and rotation invariance. It has shown good performance in practical applications and can be applied to many fields. However, it also has some shortcomings, such as being sensitive to illumination changes, being sensitive to local changes, and requiring manual parameter setting. The performance and accuracy of the AKAZE algorithm can be further improved and perfected in the future.

3. Code

3.1. Data preparation

The code requires two pictures and a file in xml format, namely: H1to3p.xml, as follows:

<?xml version="1.0"?>

<opencv_storage>

<H13 type_id="opencv-matrix">

<rows>3</rows>

<cols>3</cols>

<dt>d</dt>

<data>

7.6285898e-01 -2.9922929e-01 2.2567123e+02

3.3443473e-01 1.0143901e+00 -7.6999973e+01

3.4663091e-04 -1.4364524e-05 1.0000000e+00 </data></H13>

</opencv_storage>

3.2. Complete code

Code:

from __future__ import print_function

import cv2 as cv

import numpy as np

import argparse

from math import sqrt

# 读取两张图片

parser = argparse.ArgumentParser(description='Code for AKAZE local features matching tutorial.')

parser.add_argument('--input1', help='Path to input image 1.', default='graf1.png') # 在这里设置图像1

parser.add_argument('--input2', help='Path to input image 2.', default='graf3.png') # 在这里设置图像2

parser.add_argument('--homography', help='Path to the homography matrix.', default='H1to3p.xml') # 在这里设置H矩阵

args = parser.parse_args()

img1 = cv.imread(cv.samples.findFile(args.input1), cv.IMREAD_GRAYSCALE)

img2 = cv.imread(cv.samples.findFile(args.input2), cv.IMREAD_GRAYSCALE)

if img1 is None or img2 is None:

print('Could not open or find the images!')

exit(0)

fs = cv.FileStorage(cv.samples.findFile(args.homography), cv.FILE_STORAGE_READ)

homography = fs.getFirstTopLevelNode().mat()

## 初始化算法[AKAZE]

akaze = cv.AKAZE_create()

# 检测图像1和图像2的特征点和特征向量

kpts1, desc1 = akaze.detectAndCompute(img1, None)

kpts2, desc2 = akaze.detectAndCompute(img2, None)

## 基于汉明距离,使用暴力匹配来匹配特征点

matcher = cv.DescriptorMatcher_create(cv.DescriptorMatcher_BRUTEFORCE_HAMMING)

nn_matches = matcher.knnMatch(desc1, desc2, 2)

## 下面0.8默认参数,可以手动修改、调试

matched1 = []

matched2 = []

nn_match_ratio = 0.8 # 最近邻匹配参数

for m, n in nn_matches:

if m.distance < nn_match_ratio * n.distance:

matched1.append(kpts1[m.queryIdx])

matched2.append(kpts2[m.trainIdx])

## 使用单应矩阵进行精匹配,进一步剔除误匹配点

inliers1 = []

inliers2 = []

good_matches = []

inlier_threshold = 2.5 # 如果两个点距离小于这个值,表明足够近,也就是一对匹配对

for i, m in enumerate(matched1):

col = np.ones((3,1), dtype=np.float64)

col[0:2,0] = m.pt

col = np.dot(homography, col)

col /= col[2,0]

dist = sqrt(pow(col[0,0] - matched2[i].pt[0], 2) +\

pow(col[1,0] - matched2[i].pt[1], 2))

if dist < inlier_threshold:

good_matches.append(cv.DMatch(len(inliers1), len(inliers2), 0))

inliers1.append(matched1[i])

inliers2.append(matched2[i])

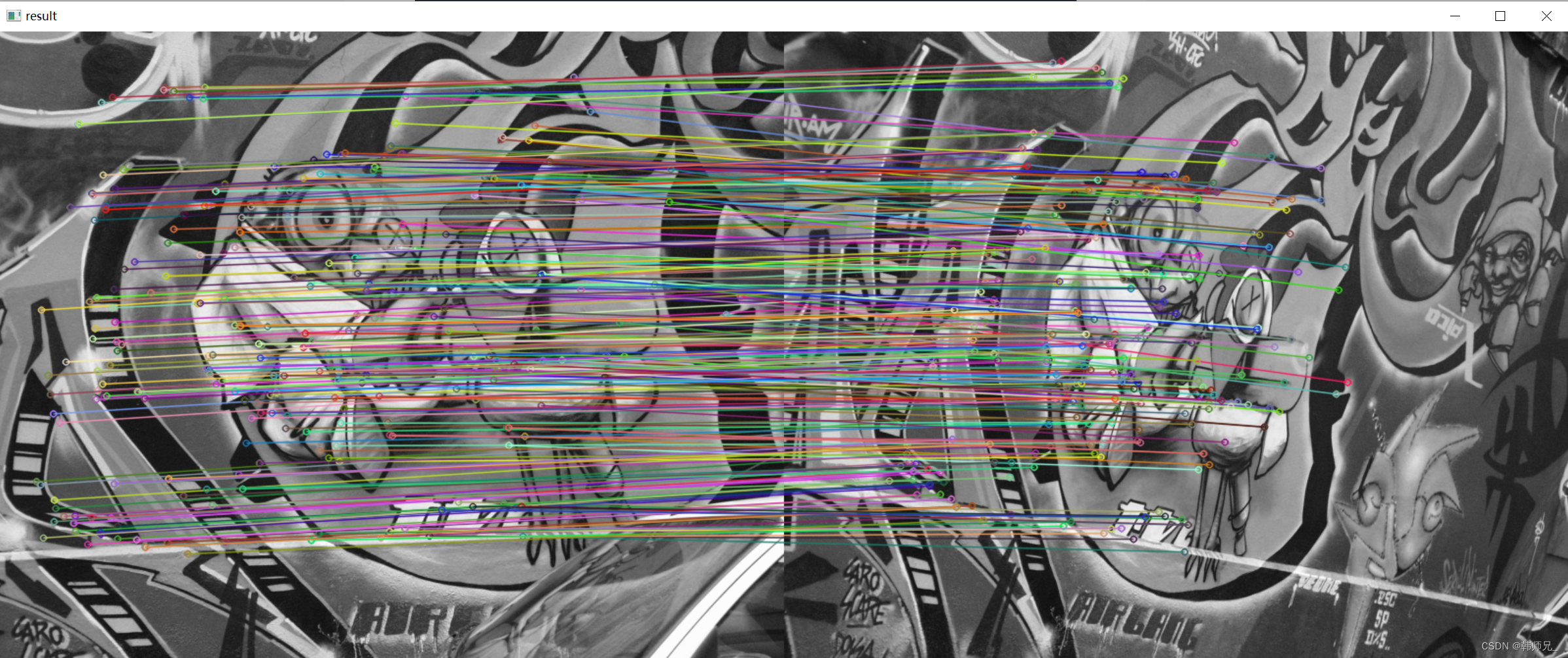

## 可视化

res = np.empty((max(img1.shape[0], img2.shape[0]), img1.shape[1]+img2.shape[1], 3), dtype=np.uint8)

cv.drawMatches(img1, inliers1, img2, inliers2, good_matches, res)

cv.imwrite("akaze_result.png", res)

inlier_ratio = len(inliers1) / float(len(matched1))

print('A-KAZE Matching Results')

print('*******************************')

print('# Keypoints 1: \t', len(kpts1))

print('# Keypoints 2: \t', len(kpts2))

print('# Matches: \t', len(matched1))

print('# Inliers: \t', len(inliers1))

print('# Inliers Ratio: \t', inlier_ratio)

cv.imshow('result', res)

cv.waitKey()