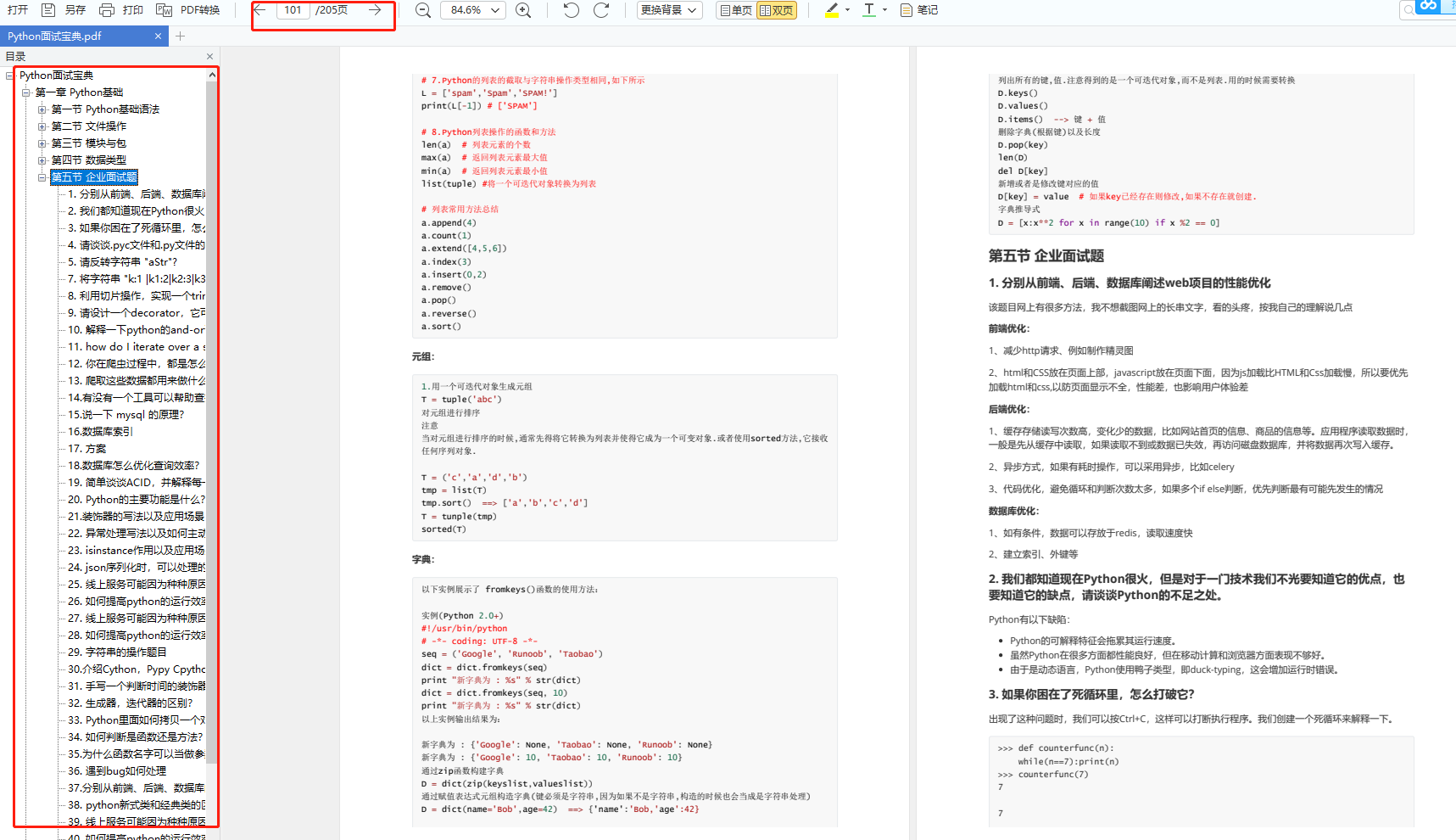


Preface

In this example, we use the requests library and regular expressions to crawl the Maoyan Top 100 movie list.

1. Goal

Extract the name, release time, rating, poster image, and other information for each movie in the Maoyan TOP100 list. The target URL is http://maoyan.com/board/4, and the extracted results are saved to a file.

2. Crawl analysis

The crawled page is as follows:

The useful information on the page includes the movie name, starring actors, release time, release region, rating, and poster image.

Scroll to the bottom of the page to find the pagination links. Click page 2 and observe how the URL and the page content change.

First page URL: https://www.maoyan.com/board/4?offset=0

Second page URL: https://www.maoyan.com/board/4?offset=10

The offset parameter changes from 0 to 10, and each page shows ten movies. So offset is the starting position in the list. To get the TOP100 movies, we only need to make 10 requests with offset set to 0, 10, 20, ..., 90. After fetching each page, we extract the relevant information with regular expressions, which gives us all TOP100 movies.
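The offset rule can be expressed in a few lines. The sketch below generates the ten list-page URLs. It assumes the https://www.maoyan.com/board/4 address and the page size of 10 observed above. `build_page_urls` is a hypothetical helper name.

```python
BASE_URL = 'https://www.maoyan.com/board/4'


def build_page_urls(pages=10, page_size=10):
    """Return one list-page URL per offset: 0, 10, 20, ..., 90 by default."""
    return [f'{BASE_URL}?offset={page * page_size}' for page in range(pages)]


urls = build_page_urls()
print(urls[0])   # https://www.maoyan.com/board/4?offset=0
print(urls[-1])  # https://www.maoyan.com/board/4?offset=90
```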

3. Fetch the first page

First, fetch the content of the first page. We implement a get_one_page function that takes a url parameter and returns the page's HTML, or None if the response status is not 200. The preliminary implementation is as follows:

```python
import requests
import re


def get_one_page(url):
    # Send a browser User-Agent so the request looks like it comes from a normal browser
    headers = {
        'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 '
                      '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
    }
    response = requests.get(url, headers=headers)
    # Return the page HTML only if the request succeeded
    if response.status_code == 200:
        return response.text
    return None


def main():
    html = get_one_page('https://www.maoyan.com/board/4?offset=0')
    print(html)


if __name__ == '__main__':
    main()
```
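The function above returns None for a non-200 status. However, requests.get can still raise an exception on a connection error or an invalid URL. The variant below handles both cases. It adds a timeout and catches `requests.RequestException`, the base class of the exceptions requests raises. The name `get_one_page_safe` is hypothetical, and the 10-second timeout is an arbitrary assumption.

```python
import requests

HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_4) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/52.0.2743.116 Safari/537.36'
}


def get_one_page_safe(url, timeout=10):
    """Like get_one_page, but returns None on network errors instead of raising."""
    try:
        response = requests.get(url, headers=HEADERS, timeout=timeout)
    except requests.RequestException:
        # Covers connection errors, timeouts, malformed URLs, and so on
        return None
    if response.status_code == 200:
        return response.text
    return None
```

Without the timeout, a stalled connection could block the crawler indefinitely. Catching the exception lets the page loop simply skip a failed page.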

4. Regular expression extraction

Go back to the web page and look at its actual source code. You can find it in the Network tab of the browser's developer tools.

Do not read the source code in the Elements tab. The markup shown there may have been modified by JavaScript and can differ from the response to the original request. Instead, view the source code returned by the original request in the Network tab.

The source code for one of the entries is as follows:

As you can see, each movie corresponds to a dd node, and we use regular expressions to extract the movie information inside it. First, extract the ranking, which sits in the i node whose class is board-index. Non-greedy matching is used to capture the text of the i node. Because an entry spans several lines, the re.S flag is passed so that `.` also matches line breaks. The regular expression is:

```python
result_ranking = re.findall(r'<dd>.*?board-index.*?>(.*?)</i>', html, re.S)
```
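The small demonstration below shows why non-greedy matching and re.S matter. It runs the ranking pattern against a simplified, hypothetical fragment with two dd entries. The real Maoyan markup contains many more attributes and nodes.

```python
import re

# Hypothetical, simplified markup: two <dd> entries spread over several lines
html = '''<dd>
    <i class="board-index board-index-1">1</i>
</dd>
<dd>
    <i class="board-index board-index-2">2</i>
</dd>'''

# Non-greedy: each .*? stops as early as possible, so every entry is matched separately
non_greedy = re.findall(r'<dd>.*?board-index.*?>(.*?)</i>', html, re.S)
# Greedy: .* runs as far as possible, so the first entry is swallowed
greedy = re.findall(r'<dd>.*board-index.*>(.*)</i>', html, re.S)
# Without re.S, '.' cannot cross the line break right after <dd>
without_s = re.findall(r'<dd>.*?board-index.*?>(.*?)</i>', html)

print(non_greedy)  # ['1', '2']
print(greedy)      # ['2']
print(without_s)   # []
```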

Next, extract the movie's poster image:

```python
result_img = re.findall(r'<img\sdata-src="(.*?)"', html, re.S)
```

Then extract the movie name:

```python
result_name = re.findall(r'class="name".*?data-val.*?>(.*?)</a>', html, re.S)
```

The starring actors, release time, and rating are extracted on the same principle:

```python
result_star = re.findall(r'class="star".*?>(.*?)</p>', html, re.S)
result_star = [star.strip() for star in result_star]
result_time = re.findall(r'class="releasetime".*?>(.*?)</p>', html, re.S)
# Two groups (integer and fractional part), so each match is a tuple such as ('9.', '6')
result_score = re.findall(r'class="score".*?integer.*?>(.*?)</i>.*?fraction">(.*?)</i>', html, re.S)
```

The starring-actor text is padded with spaces and line breaks, so strip() removes them. The score pattern contains two groups, so each element of result_score is a tuple holding the integer and fractional parts of the rating.

At this point we can extract six fields for each movie. However, they sit in six separate lists, which is still messy. We therefore combine the matches that belong to the same movie into a dictionary. For the first movie (index 0):

```python
index = 0
result = {'ranking': result_ranking[index],
          'img': result_img[index],
          'name': result_name[index],
          'star': result_star[index],
          'time': result_time[index],
          # Join the two score parts, e.g. ('9.', '6') -> '9.6'
          'score': ''.join(result_score[index])
          }
```
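The dictionary above only covers index 0. The following code turns every match on a page into a record by walking the six lists in parallel with zip(). `build_records` is a hypothetical helper name. The sample values are placeholders, not real crawl results.

```python
def build_records(rankings, imgs, names, stars, times, scores):
    """Combine the i-th match of each list into one dictionary per movie."""
    records = []
    # zip() stops at the shortest list, so a missing match cannot raise IndexError,
    # but it could silently misalign fields; compare the list lengths if in doubt
    for ranking, img, name, star, release_time, score in zip(
            rankings, imgs, names, stars, times, scores):
        records.append({
            'ranking': ranking,
            'img': img,
            'name': name,
            'star': star,
            'time': release_time,
            'score': ''.join(score),  # ('9.', '6') -> '9.6'
        })
    return records


# Placeholder values standing in for the lists returned by re.findall
records = build_records(
    ['1', '2'],
    ['poster-1.jpg', 'poster-2.jpg'],
    ['Movie A', 'Movie B'],
    ['Star A', 'Star B'],
    ['2000-01-01', '2001-01-01'],
    [('9.', '6'), ('9.', '5')],
)
print(len(records))          # 2
print(records[0]['score'])   # 9.6
```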

5. Write to file

Next, we write the extracted results to a file, in this case a plain text file. Each dictionary is serialized with json.dumps. We set ensure_ascii to False so that Chinese characters are written as-is instead of as Unicode escape sequences. The code is as follows:

```python
import json


def write_to_file(content):
    # Append mode: each call adds one JSON object on its own line
    with open('result.txt', 'a', encoding='utf-8') as f:
        f.write(json.dumps(content, ensure_ascii=False) + '\n')
```
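The following example shows what ensure_ascii changes. It also shows that a file with one JSON object per line can be read back with json.loads. It writes to a temporary file rather than result.txt so it can run anywhere.

```python
import json
import os
import tempfile

movie = {'ranking': '1', 'name': '霸王别姬'}

# Default: non-ASCII characters become \uXXXX escape sequences
print(json.dumps(movie))                      # {"ranking": "1", "name": "\u9738\u738b\u522b\u59ec"}
# ensure_ascii=False: the Chinese text is kept as-is
print(json.dumps(movie, ensure_ascii=False))  # {"ranking": "1", "name": "霸王别姬"}

# One JSON object per line, so the file can be read back line by line
path = os.path.join(tempfile.mkdtemp(), 'result.txt')  # temporary file for this demo
with open(path, 'a', encoding='utf-8') as f:
    f.write(json.dumps(movie, ensure_ascii=False) + '\n')
with open(path, encoding='utf-8') as f:
    loaded = [json.loads(line) for line in f]
print(loaded == [movie])  # True
```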

6. Integrate the code and crawl all pages

We want the full TOP100 list, so we still need to loop over the pages, passing the offset parameter in the URL to crawl the remaining 90 movies. Add the following loop:

```python
import time

for i in range(10):
    url = 'https://www.maoyan.com/board/4?offset=' + str(i * 10)
    time.sleep(0.5)  # pause between page requests
    html = get_one_page(url)
    if html is None:  # request failed (for example, blocked); skip this page
        continue
    result_ranking = re.findall(r'<dd>.*?board-index.*?>(.*?)</i>', html, re.S)
    result_img = re.findall(r'<img\sdata-src="(.*?)"', html, re.S)
    result_name = re.findall(r'class="name".*?data-val.*?>(.*?)</a>', html, re.S)
    result_star = re.findall(r'class="star".*?>(.*?)</p>', html, re.S)
    result_star = [star.strip() for star in result_star]
    result_time = re.findall(r'class="releasetime".*?>(.*?)</p>', html, re.S)
    result_score = re.findall(r'class="score".*?integer.*?>(.*?)</i>.*?fraction">(.*?)</i>', html, re.S)
    # Build one record per movie on the page and append it to the file
    for index in range(len(result_ranking)):
        result = {'ranking': result_ranking[index],
                  'img': result_img[index],
                  'title': result_name[index],
                  'star': result_star[index],
                  'release_time': result_time[index],
                  'score': ''.join(result_score[index])  # ('9.', '6') -> '9.6'
                  }
        write_to_file(result)
```

Remember to pause between page requests. Otherwise the site's anti-crawling mechanism may block you, and you will then need to switch to a new User-Agent and new cookies.
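The helpers below put this advice into code. One randomizes the pause between requests. The other builds the headers from a User-Agent and an optional cookie string. `polite_sleep` and `build_headers` are hypothetical helper names, and the 1 to 3 second range is an arbitrary assumption. Any real cookie value would have to come from your own browser session.

```python
import random
import time


def polite_sleep(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random interval so requests are not sent at a fixed rhythm."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


def build_headers(user_agent, cookie=None):
    """Build request headers; the Cookie header is added only when a cookie string is given."""
    headers = {'User-Agent': user_agent}
    if cookie:
        headers['Cookie'] = cookie
    return headers
```

In the page loop, `time.sleep(0.5)` could be replaced with `polite_sleep()`, and the dictionary returned by `build_headers` passed as `headers=` to `requests.get`.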

The crawling results are as follows:

Extra: Rewrite using regular expression objects

In the extraction above, we wrote one regular expression per field. Alternatively, re.compile can build a single regular expression object that extracts all the fields at once. One pattern describes a whole movie entry, and each field is captured by its own group. Let's build it up step by step.

Each movie corresponds to a dd node. We start with the ranking, which is inside the i node whose class is board-index, and capture it with non-greedy matching:

```
<dd>.*?board-index.*?>(.*?)</i>
```

Next comes the poster image. The i node is followed by an a node containing two img nodes. Inspection shows that the data-src attribute of the second img node holds the link to the image. We extract that attribute by extending the expression:

```
<dd>.*?board-index.*?>(.*?)</i>.*?data-src="(.*?)"
```

Then we extract the movie name, which is in the following p node whose class is name. We use name as a marker and capture the text of the a node inside it:

```
<dd>.*?board-index.*?>(.*?)</i>.*?data-src="(.*?)".*?name.*?a.*?>(.*?)</a>
```

Applying the same approach to the starring actors, release time, and rating gives the final regular expression:

```
<dd>.*?board-index.*?>(.*?)</i>.*?data-src="(.*?)".*?name.*?a.*?>(.*?)</a>.*?star.*?>(.*?)</p>.*?releasetime.*?>(.*?)</p>.*?integer.*?>(.*?)</i>.*?fraction.*?>(.*?)</i>.*?</dd>
```

Finally, compile the pattern and call findall to extract all entries on the page at once:

```python
pattern = re.compile(
    '<dd>.*?board-index.*?>(.*?)</i>.*?data-src="(.*?)".*?name.*?a.*?>(.*?)</a>'
    '.*?star.*?>(.*?)</p>.*?releasetime.*?>(.*?)</p>'
    '.*?integer.*?>(.*?)</i>.*?fraction.*?>(.*?)</i>.*?</dd>',
    re.S)
# Each item is a tuple of seven groups (the score is split into integer and fractional parts)
items = pattern.findall(html)
```

The output is as follows:

```
[('1', 'http://p1.meituan.net/movie/20803f59291c47e1e116c11963ce019e68711.jpg@160w_220h_1e_1c', ' 霸王别姬 ', '\n 主演:张国荣,张丰毅,巩俐 \n ', ' 上映时间:1993-01-01(中国香港)', '9.', '6'), ('2', 'http://p0.meituan.net/movie/__40191813__4767047.jpg@160w_220h_1e_1c', ' 肖申克的救赎 ', '\n 主演:蒂姆・罗宾斯,摩根・弗里曼,鲍勃・冈顿 \n ', ' 上映时间:1994-10-14(美国)', '9.', '5'), ('3', 'http://p0.meituan.net/movie/fc9d78dd2ce84d20e53b6d1ae2eea4fb1515304.jpg@160w_220h_1e_1c', ' 这个杀手不太冷 ', '\n 主演:让・雷诺,加里・奥德曼,娜塔莉・波特曼 \n ', ' 上映时间:1994-09-14(法国)', '9.', '5'), ('4', 'http://p0.meituan.net/movie/23/6009725.jpg@160w_220h_1e_1c', ' 罗马假日 ', '\n 主演:格利高利・派克,奥黛丽・赫本,埃迪・艾伯特 \n ', ' 上映时间:1953-09-02(美国)', '9.', '1'), ('5', 'http://p0.meituan.net/movie/53/1541925.jpg@160w_220h_1e_1c', ' 阿甘正传 ', '\n 主演:汤姆・汉克斯,罗宾・怀特,加里・西尼斯 \n ', ' 上映时间:1994-07-06(美国)', '9.', '4'), ('6', 'http://p0.meituan.net/movie/11/324629.jpg@160w_220h_1e_1c', ' 泰坦尼克号 ', '\n 主演:莱昂纳多・迪卡普里奥,凯特・温丝莱特,比利・赞恩 \n ', ' 上映时间:1998-04-03', '9.', '5'), ('7', 'http://p0.meituan.net/movie/99/678407.jpg@160w_220h_1e_1c', ' 龙猫 ', '\n 主演:日高法子,坂本千夏,糸井重里 \n ', ' 上映时间:1988-04-16(日本)', '9.', '2'), ('8', 'http://p0.meituan.net/movie/92/8212889.jpg@160w_220h_1e_1c', ' 教父 ', '\n 主演:马龙・白兰度,阿尔・帕西诺,詹姆斯・凯恩 \n ', ' 上映时间:1972-03-24(美国)', '9.', '3'), ('9', 'http://p0.meituan.net/movie/62/109878.jpg@160w_220h_1e_1c', ' 唐伯虎点秋香 ', '\n 主演:周星驰,巩俐,郑佩佩 \n ', ' 上映时间:1993-07-01(中国香港)', '9.', '2'), ('10', 'http://p0.meituan.net/movie/9bf7d7b81001a9cf8adbac5a7cf7d766132425.jpg@160w_220h_1e_1c', ' 千与千寻 ', '\n 主演:柊瑠美,入野自由,夏木真理 \n ', ' 上映时间:2001-07-20(日本)', '9.', '3')]
```
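The raw tuples still need cleaning. They contain padding, the 主演: ("Starring:") and 上映时间: ("Release time:") labels, and a score split into two parts. The cleaning code below assumes the field order shown in the output above. `clean_items` is a hypothetical name. The sample dd fragment is made-up, simplified markup filled with values from the first output entry. It is included only to show the compiled pattern and the cleanup working together. Slicing off a fixed number of characters assumes the labels always appear exactly as 主演: (3 characters) and 上映时间: (5 characters).

```python
import re

PATTERN = re.compile(
    '<dd>.*?board-index.*?>(.*?)</i>.*?data-src="(.*?)".*?name.*?a.*?>(.*?)</a>'
    '.*?star.*?>(.*?)</p>.*?releasetime.*?>(.*?)</p>'
    '.*?integer.*?>(.*?)</i>.*?fraction.*?>(.*?)</i>.*?</dd>',
    re.S)

# Hypothetical, simplified markup for one movie entry (not copied from the real page)
SAMPLE_HTML = '''<dd>
  <i class="board-index board-index-1">1</i>
  <a href="/films/1" class="image-link">
    <img src="placeholder.png" class="poster-default" />
    <img data-src="http://example.com/poster-1.jpg" class="board-img" />
  </a>
  <p class="name"><a href="/films/1" data-val="{movieId:1}">霸王别姬</a></p>
  <p class="star">
    主演:张国荣,张丰毅,巩俐
  </p>
  <p class="releasetime">上映时间:1993-01-01(中国香港)</p>
  <p class="score"><i class="integer">9.</i><i class="fraction">6</i></p>
</dd>'''


def clean_items(items):
    """Turn the raw 7-tuples from PATTERN.findall into tidy dictionaries."""
    for ranking, img, name, star, release_time, integer, fraction in items:
        yield {
            'ranking': ranking,
            'img': img,
            'name': name.strip(),
            'star': star.strip()[3:],          # drop the 3-character '主演:' label
            'time': release_time.strip()[5:],  # drop the 5-character '上映时间:' label
            'score': integer + fraction,       # '9.' + '6' -> '9.6'
        }


for movie in clean_items(PATTERN.findall(SAMPLE_HTML)):
    print(movie)
```

The cleaning step is a generator, so it can feed write_to_file one record at a time without building the whole list first.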

-END-
