Preface:

Here is a summary of some problems I ran into recently when deploying algorithms on the board side:

1. Version problem

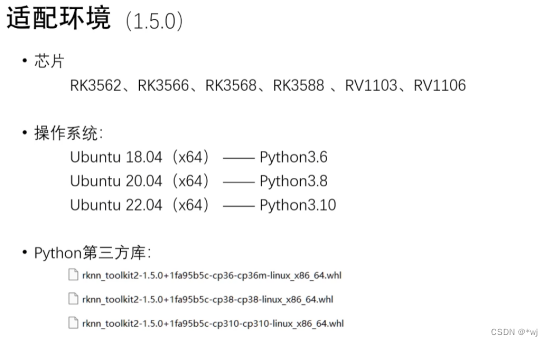

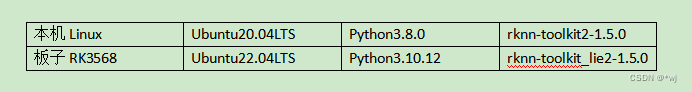

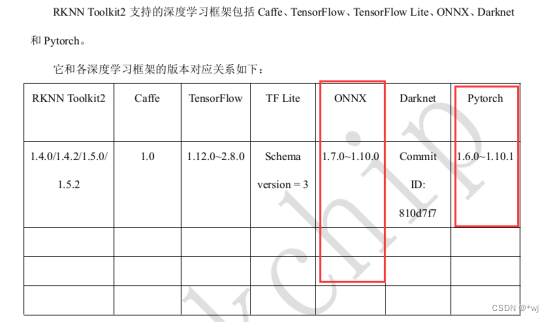

After testing, the environment configuration that works is as follows:

Install rknn-toolkit2-1.5.0 on the local Linux machine

- Create a new Python virtual environment with miniconda on the local Linux machine (look up how to do this online).

- Once the virtual environment is set up, install the required dependency packages:

sudo apt-get install libxslt1-dev zlib1g-dev libglib2.0 libsm6 libgl1-mesa-glx libprotobuf-dev gcc

- Clone the official RKNN-Toolkit2 repository to the local Linux machine. If the shell reports that the git command is not found, install git first:

sudo apt install git-all

git clone https://github.com/rockchip-linux/rknn-toolkit2

- Enter the Toolkit2 project folder; adjust the path in the cd command to wherever you saved the project.

cd ~/rknn-toolkit2

- Install the matching versions of the dependency packages (here I install the py3.8 version, which matches the Python on my own Linux machine)

pip3 install -r doc/requirements_cp38-1.5.0.txt

- Install RKNN-Toolkit2 (Python 3.8 for x86_64, matching the cp38 wheel)

pip3 install packages/rknn_toolkit2-1.5.0+1fa95b5c-cp38-cp38-linux_x86_64.whl

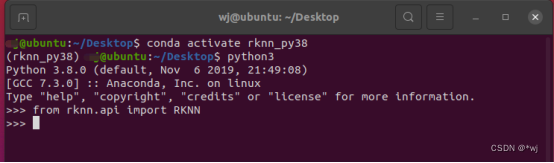

- Check whether RKNN-Toolkit2 was installed successfully. If the output shown here appears, the installation on the local Linux side succeeded.

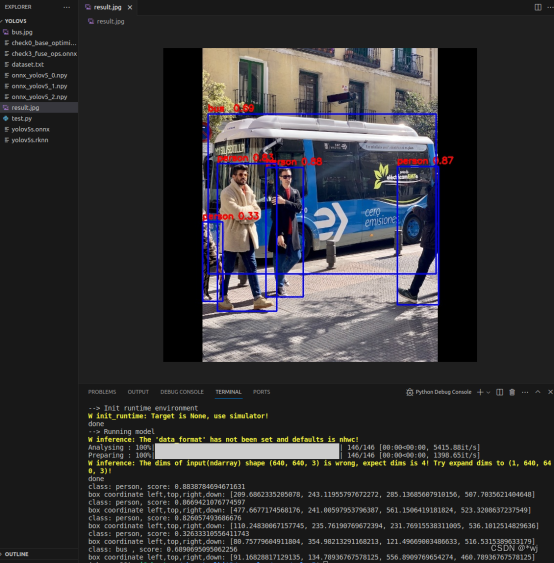

- You can then run the official detection example to test it: rknn-toolkit2/examples/onnx/yolov5
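Since most of the version problems come from a wheel that does not match the Python interpreter, a small check before running pip can help. The following is a minimal sketch, not part of RKNN-Toolkit2: the helper names `wheel_python_tag` and `matches_interpreter` are made up for illustration, and it only compares the Python tag (for example cp38) in the wheel file name with the running interpreter.

```python
import sys


def wheel_python_tag(filename):
    """Return the Python tag (e.g. 'cp38') from a wheel file name."""
    name = filename.rsplit("/", 1)[-1]  # drop any directory part
    if name.endswith(".whl"):
        name = name[:-4]
    # Wheel names end with: -{python tag}-{abi tag}-{platform tag}
    parts = name.rsplit("-", 3)
    if len(parts) != 4:
        raise ValueError(f"not a wheel-style file name: {filename!r}")
    return parts[1]


def matches_interpreter(filename, version=None):
    """True if the wheel's Python tag matches the (major, minor) version."""
    major, minor = (version or sys.version_info)[:2]
    return wheel_python_tag(filename) == f"cp{major}{minor}"


if __name__ == "__main__":
    wheel = "packages/rknn_toolkit2-1.5.0+1fa95b5c-cp38-cp38-linux_x86_64.whl"
    print(wheel_python_tag(wheel), matches_interpreter(wheel))
```

If `matches_interpreter` returns False, pick the requirements file and wheel built for your Python version, or create a virtual environment with the version the wheel expects.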

Board RK3568 environment installation

- Clone the source code to the board

git clone https://github.com/rockchip-linux/rknn-toolkit2

cd rknn-toolkit2

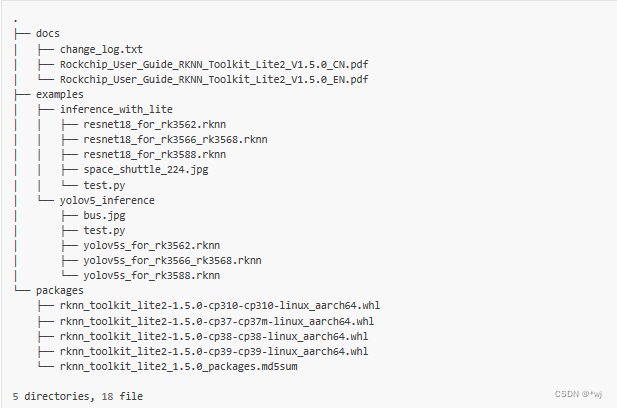

The file structure is as follows:

- Environment installation

sudo apt update

# Install Python tools, etc.

sudo apt-get install python3-dev python3-pip gcc

# Install related dependencies and packages

pip3 install wheel

sudo apt-get install -y python3-opencv

sudo apt-get install -y python3-numpy

sudo apt -y install python3-setuptools

- Toolkit Lite2 tool installation:

# Enter the rknn_toolkit_lite2 directory

cd rknn_toolkit_lite2/

# ubuntu22 python3.10

pip3 install packages/rknn_toolkit_lite2-1.5.0-cp310-cp310-linux_aarch64.whl

- Check that the installation succeeded (the import should return without an error)

python3

>>> from rknnlite.api import RKNNLite

>>>

- To run the official demo, go to the examples folder under rknn_toolkit_lite2 and run it from there.
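The import check above can also be scripted, which is handy when the same test has to be repeated on both the PC and the board. This is a minimal sketch using only the standard library; the helper name `is_installed` is hypothetical, and it only tests whether the top-level packages `rknn` (PC side) and `rknnlite` (board side) can be found, not whether the runtime actually works.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be found without importing it."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # 'rknn' is the PC-side toolkit package, 'rknnlite' the board-side one.
    for name in ("rknn", "rknnlite"):
        print(name, "installed" if is_installed(name) else "missing")
```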

Run my demo. Repository address: https://gitee.com/wangyoujie11/rk3568_-demo

Mnist_PC goes in the Linux environment on the local computer: activate your own Python virtual environment, then run python test.py.

Mnist_rk3568 goes in the Linux environment on the board: run python3 rk3568_test.py.
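For readers who want to see roughly what a board-side script does, here is a minimal sketch of one inference with RKNNLite. It is not the code from the demo repository: the function name `run_on_board` is made up, the input is assumed to be a NumPy array already preprocessed to the shape the model expects, and error handling is reduced to checking return codes.

```python
import os


def run_on_board(rknn_path, input_array):
    """Load a .rknn model with RKNNLite and run one inference."""
    if not os.path.isfile(rknn_path):
        raise FileNotFoundError(rknn_path)

    # Imported here so the file check above also works off the board.
    from rknnlite.api import RKNNLite

    rknn_lite = RKNNLite()
    try:
        if rknn_lite.load_rknn(rknn_path) != 0:
            raise RuntimeError("load_rknn failed")
        if rknn_lite.init_runtime() != 0:
            raise RuntimeError("init_runtime failed")
        # inference() takes a list of inputs and returns a list of outputs.
        return rknn_lite.inference(inputs=[input_array])
    finally:
        rknn_lite.release()
```

Releasing the runtime in a `finally` block means the NPU resources are freed even when loading or initialization fails.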

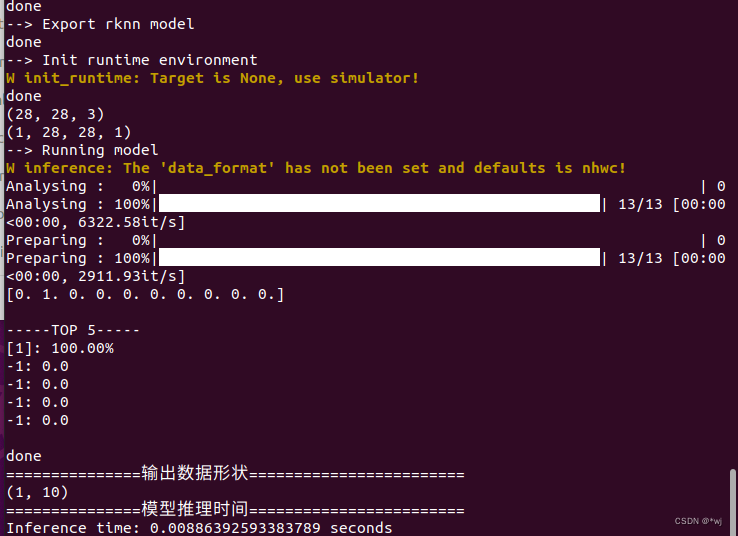

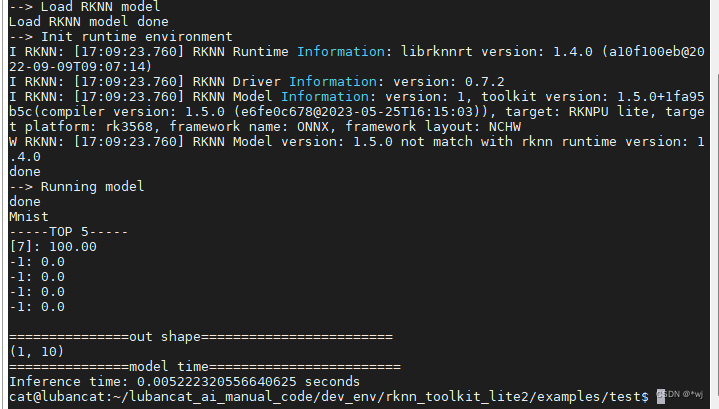

The running results are as follows:

PC side (1.png loaded in the program):

RK3568 board side (7.png loaded in the program):

---------------------------------------------Divider (updated irregularly)-----------------------------------------

Question one

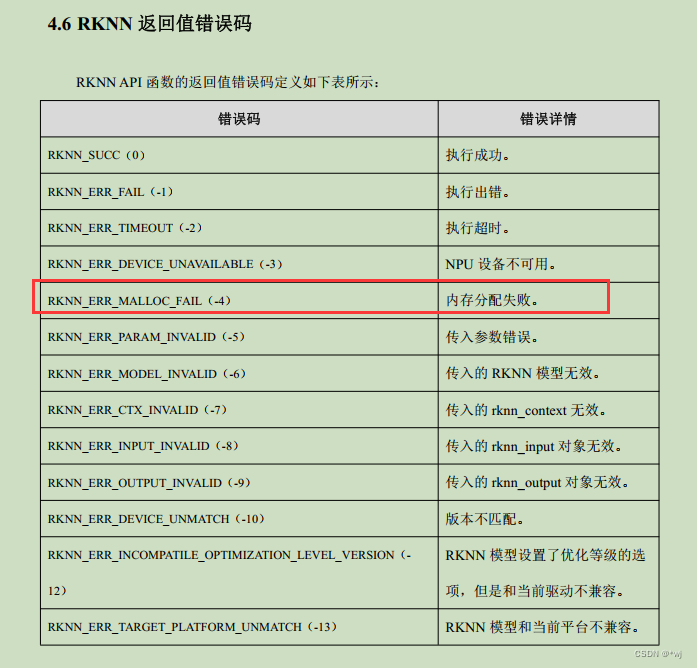

While using the RK3568 development board, running inference with a .rknn model deployed on the board side raised this error:

*Exception: RKNN init failed. error code: RKNN_ERR_MALLOC_FAIL*

I then checked the official manual, which explains this error code as: memory allocation failed.

But it gives no corresponding solution, so I could only debug it myself (a cup of tea, a pack of cigarettes, and a whole day spent hunting one bug)...

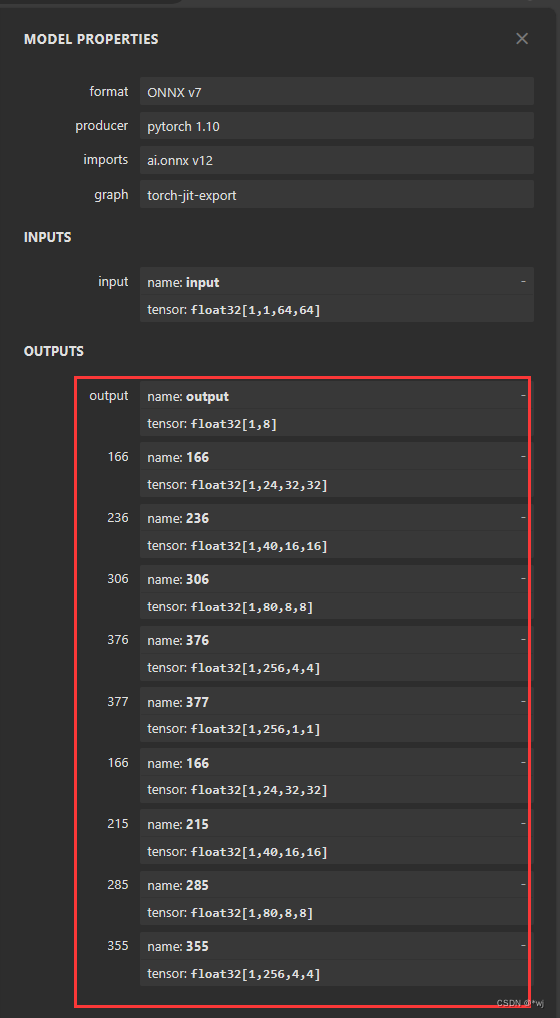

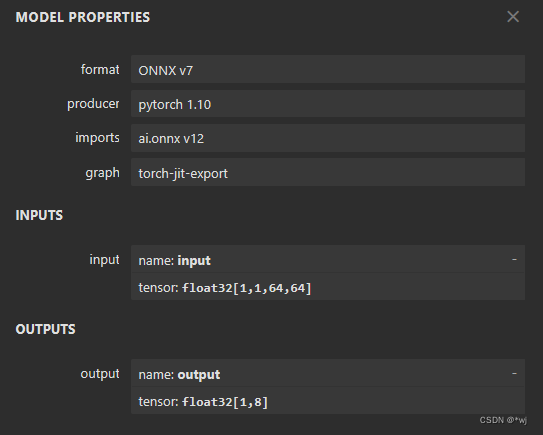

Later I found the problem. My .onnx model's outputs are as shown: there are multiple outputs. The model runs fine on local Linux, but because resources on the board are limited, memory allocation on the board fails. So the solution is to reduce the number of outputs of your own .onnx model. You can load your .onnx model at https://netron.app/ to inspect its inputs and outputs.

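# The original xxx.onnx file is placed in the onnx folder; create a new folder, newonnx, to hold the new .onnx model

# Version note: the .onnx model here was trained directly with PyTorch 1.6.0~1.10.1, and the onnx version is between 1.7.0 and 1.10.0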

import onnx

model = onnx.load('./onnx/xxx.onnx')

node = model.graph.output[1]

model.graph.output.remove(node)

onnx.save(model, './newonnx/xxx.onnx')

model = onnx.load('./newonnx/xxx.onnx')

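for i in range(1, 9):  # set the range according to how many output nodes you want to delete

    # after each removal the next output shifts into index 1
    node = model.graph.output[1]

    model.graph.output.remove(node)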

onnx.save(model, './newonnx/xxx.onnx')
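The code above removes outputs by position. A name-based variant can be easier to audit, since the names can be read off in Netron. The following is a minimal sketch under that assumption: `keep_outputs` and `trim_model` are hypothetical helper names, and like the code above it only edits the list of declared graph outputs; it does not prune the nodes that computed them.

```python
def keep_outputs(outputs, keep_names):
    """Remove every entry whose .name is not in keep_names (in place)."""
    keep = set(keep_names)
    for node in list(outputs):  # iterate over a copy while removing
        if node.name not in keep:
            outputs.remove(node)
    return [node.name for node in outputs]


def trim_model(src_path, dst_path, keep_names):
    """Load an ONNX file, drop unwanted graph outputs, and save the result."""
    import onnx  # imported lazily; requires the onnx package

    model = onnx.load(src_path)
    remaining = keep_outputs(model.graph.output, keep_names)
    onnx.save(model, dst_path)
    return remaining
```

For example, `trim_model('./onnx/xxx.onnx', './newonnx/xxx.onnx', ['output'])` would keep only the output named `output`; the name here is a placeholder for whatever your model actually uses.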

After trimming the .onnx model with the code above and getting the new .onnx model, you can call the corresponding rknn API on local Linux to convert the .onnx model into a .rknn model, and then use the .rknn model for inference on the board.
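The conversion step can be sketched as follows. This is a minimal example under stated assumptions, not the exact script from the demo: the function name `convert_onnx_to_rknn` is made up, quantization is turned off so no calibration dataset is needed, and preprocessing options such as mean and std values are left at their defaults, which may not suit your model.

```python
import os


def convert_onnx_to_rknn(onnx_path, rknn_path, target_platform="rk3568"):
    """Convert an ONNX model to a .rknn file with RKNN-Toolkit2 (PC side)."""
    if not os.path.isfile(onnx_path):
        raise FileNotFoundError(onnx_path)

    # Imported here so the file check above works without the toolkit.
    from rknn.api import RKNN

    rknn = RKNN()
    try:
        rknn.config(target_platform=target_platform)
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        # Quantization is skipped; enabling it needs a calibration dataset.
        if rknn.build(do_quantization=False) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(rknn_path) != 0:
            raise RuntimeError("export_rknn failed")
    finally:
        rknn.release()
    return rknn_path
```

Running `convert_onnx_to_rknn('./newonnx/xxx.onnx', './xxx.rknn')` in the PC-side virtual environment would produce the file to copy to the board.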