Readers often leave messages in the account backend asking:

Can the algorithms improved by the author be used in a paper?

The answer is: of course, and there is no need to cite them!

If my articles help you write a paper, I would be honored!

The cuckoo optimization algorithm is a classic optimization algorithm that many people still study and improve today. Today I bring you a cuckoo optimization algorithm improved by Xiaotao: CALCS (Cauchy-Adaptive-Levy-Cuckoo Search), a cuckoo search variant that combines Cauchy mutation with an adaptive Levy flight. The improved algorithm has the same time complexity as the original, so its running time does not rise sharply the way it does for some improved algorithms that incorporate opposition-based learning, greedy strategies, and so on.

This algorithm will also be included in the improved intelligent algorithm bundle. Copy the link below and open it in your browser:

https://mbd.pub/o/bread/mbd-ZJ2XmJ5w

Detailed explanation of the principle

Introduction to the basic cuckoo algorithm principle:

The basic cuckoo search algorithm (CS) searches for the optimal solution by simulating how cuckoos look for nests and lay eggs. The procedure is:

1) Set the population size, nest abandonment rate, problem boundary and maximum number of iterations.

2) Randomly generate the initial population within the problem domain and calculate the objective function value of each individual to find the current optimal value and optimal solution.

3) Check whether the iteration count has reached the maximum number of iterations. If so, exit the loop and output the optimal value and optimal solution; otherwise, proceed to step 4).

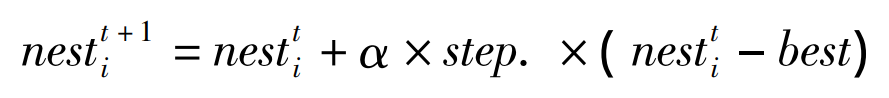

4) Update the bird's nest according to the following formula.

In the formula: $\mathrm{nest}_i^{t+1}$ and $\mathrm{nest}_i^{t}$ are the positions of nest $i$ in generations $t+1$ and $t$ respectively; $\alpha = 0.01R$ with $R \sim N(0,1)$; $\mathrm{best}$ is the current best nest position; $\mathrm{step}$ is the step size generated by the Levy flight.

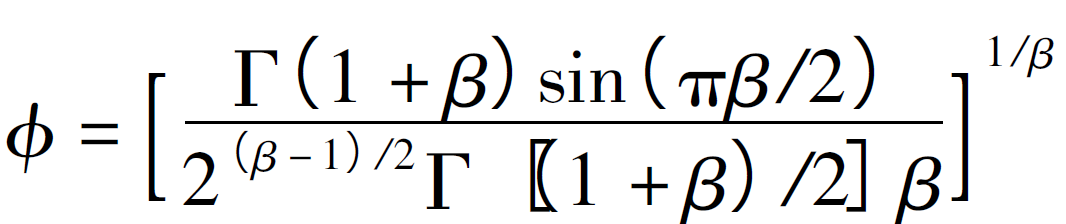

In the formula: β is set to 1.5; μ and ν are random numbers drawn from N(0,1); φ is calculated according to the following formula:

5) Calculate the objective function value of the new bird's nest. If it is superior to the previous one, replace the function value and the corresponding bird's nest, and record the optimal solution.

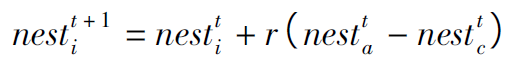

6) Randomly generate a number in [0,1] and compare it with the nest abandonment rate. If it is smaller than the nest abandonment rate, keep the nest; otherwise, generate a new nest according to the following formula.

In the formula: a and c are two distinct random integers (nest indices) drawn in the t-th iteration; r∈[0,1] is a random number.

7) Recalculate the objective function value of the new bird's nest. If it is superior to the previous one, replace the function value and the corresponding bird's nest, and record the optimal solution.

8) Compare the best function value of this iteration with the optimal value found so far. If it is smaller, replace the optimal value and optimal solution; the algorithm then returns to step 3) for the next iteration.
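Steps 2) and 4) to 8) above can be sketched as one iteration in MATLAB. This is only a minimal sketch that mirrors the CS listing later in this post; the objective `fobj`, the problem size and the bounds are hypothetical values chosen for illustration, not the author's test setup.

```matlab
% One iteration of basic CS on a hypothetical sphere objective.
fobj = @(x) sum(x.^2);             % hypothetical objective (assumption)
n = 10; dim = 3; lb = -5; ub = 5;  % hypothetical problem settings (assumption)
pa = 0.25;                         % nest abandonment rate, as in the CS listing
beta = 1.5;                        % Levy exponent, as stated in the text

% Step 2): random initial nests and their objective values
nest = lb + rand(n,dim)*(ub-lb);
fitness = zeros(n,1);
for i = 1:n
    fitness(i) = fobj(nest(i,:));
end
[fmin, idx] = min(fitness);
best = nest(idx,:);
f_start = fmin;                    % kept only to check that the best never worsens

% Step 4): Levy-flight update (Mantegna's algorithm)
phi = (gamma(1+beta)*sin(pi*beta/2) / ...
      (gamma((1+beta)/2)*beta*2^((beta-1)/2)))^(1/beta);
mu = randn(n,dim);                 % mu ~ N(0,1)
nu = randn(n,dim);                 % nu ~ N(0,1)
step = phi*mu ./ abs(nu).^(1/beta);
alpha = 0.01*randn(n,dim);         % alpha = 0.01*R, R ~ N(0,1)
cand = nest + alpha .* step .* (nest - repmat(best,n,1));
cand = min(max(cand, lb), ub);     % clamp to the problem boundary

% Step 5): greedy replacement
for i = 1:n
    f = fobj(cand(i,:));
    if f <= fitness(i)
        fitness(i) = f;
        nest(i,:) = cand(i,:);
    end
end

% Step 6): entries with rand > pa take a biased random walk, the rest are kept
K = rand(n,dim) > pa;
cand = nest + rand*(nest(randperm(n),:) - nest(randperm(n),:)).*K;
cand = min(max(cand, lb), ub);

% Step 7): greedy replacement again
for i = 1:n
    f = fobj(cand(i,:));
    if f <= fitness(i)
        fitness(i) = f;
        nest(i,:) = cand(i,:);
    end
end

% Step 8): update the global best
[fnew, idx] = min(fitness);
if fnew < fmin
    fmin = fnew;
    best = nest(idx,:);
end
```

Because both replacements are greedy, `fmin` after the iteration can never be larger than `f_start`. For β=1.5 the scale factor `phi` evaluates to about 0.6966.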

Introduction to the principle of improved cuckoo algorithm:

Improvement point 1: Building on the basic cuckoo algorithm, Tent chaotic mapping is used to initialize the population and increase its diversity.
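A Tent-map initialization could look like the sketch below. The post does not give the exact map, so the skewed form with break point `a = 0.7` is an assumption (the symmetric map with break point 0.5 tends to collapse to zero in floating-point arithmetic); the population size, dimension and bounds are likewise hypothetical.

```matlab
% Tent chaotic initialization (sketch under the assumptions stated above).
n = 30; dim = 5;            % hypothetical population size and dimension
lb = -100; ub = 100;        % hypothetical bounds
a = 0.7;                    % tent map break point (assumption)

z = zeros(n,dim);
z(1,:) = rand(1,dim);       % random starting point of each chaotic sequence
for k = 2:n
    prev = z(k-1,:);
    low = prev < a;                       % left branch of the tent map
    z(k,low)  = prev(low)/a;
    z(k,~low) = (1 - prev(~low))/(1 - a); % right branch of the tent map
end

nest = lb + z.*(ub - lb);   % map the chaotic values from [0,1] to [lb,ub]
```

Each column of `z` is one chaotic sequence, so consecutive nests are spread over the search range by the map instead of being drawn independently.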

Improvement point 2: Use adaptive step size Levy flight to improve the formula for updating the bird's nest.

To address the slow convergence and low optimization accuracy of the standard cuckoo search algorithm, the global search uses an adaptive Levy flight mechanism in which the step size factor decreases as the iterations proceed. The factor is larger in the early stage of optimization, which expands the search space and improves global search capability; as optimization continues, it shrinks, which improves local search performance. Specifically, the value 0.01 in the standard algorithm is replaced by α=0.5*exp(-t/tmax), where t is the current iteration and tmax is the maximum number of iterations. Many similar formulas can be found online; if you don't want to use this one, you can design your own.
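To get a feel for how quickly the adaptive factor decays, the short sketch below evaluates α=0.5*exp(-t/tmax) at a few iterations. The choice of `tmax = 1000` matches the experiment in this post; the sampled iterations are arbitrary.

```matlab
% Adaptive step size factor alpha = 0.5*exp(-t/tmax) at selected iterations.
tmax = 1000;                      % maximum number of iterations
t = [1 250 500 750 1000];         % arbitrary sample iterations
alpha = 0.5*exp(-t/tmax);         % decreases monotonically with t

for k = 1:numel(t)
    fprintf('t = %4d, alpha = %.4f\n', t(k), alpha(k));
end
```

The factor starts just below 0.5 and ends at 0.5*exp(-1), roughly 0.18, so it stays well above the fixed 0.01 of the standard algorithm for the whole run.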

Improvement point 3: Before the random walk, Cauchy mutation is used to update the nest positions. The Cauchy distribution resembles the standard normal distribution: it is a continuous probability distribution, but with a lower peak at the origin and longer tails that approach zero more slowly. It is therefore more likely than the previous random walk strategy to produce large perturbations. Perturbing the nest positions with Cauchy mutation expands the search range of the cuckoo algorithm and improves its ability to escape local optima.
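The post does not show the exact mutation formula, so the sketch below is only one common way to apply a Cauchy perturbation: draw standard Cauchy numbers through the inverse CDF and scale each coordinate by them. The mutation form `nest + nest.*c`, the example nests and the bounds are all assumptions for illustration.

```matlab
% Cauchy mutation of nest positions (sketch under the assumptions stated above).
nest = [1.0 -2.0 0.5; 0.3 0.8 -1.2];    % hypothetical nests (2 nests, 3 dimensions)
lb = -5; ub = 5;                        % hypothetical bounds

c = tan(pi*(rand(size(nest)) - 0.5));   % standard Cauchy samples via the inverse CDF
mutated = nest + nest.*c;               % assumed mutation form
mutated = min(max(mutated, lb), ub);    % clamp to the problem boundary
```

Because the Cauchy tails are heavy, some coordinates occasionally jump far from their current value, which is what helps the search leave a local optimum. In practice the mutated nest would be kept only if it improves the objective value.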

Results display

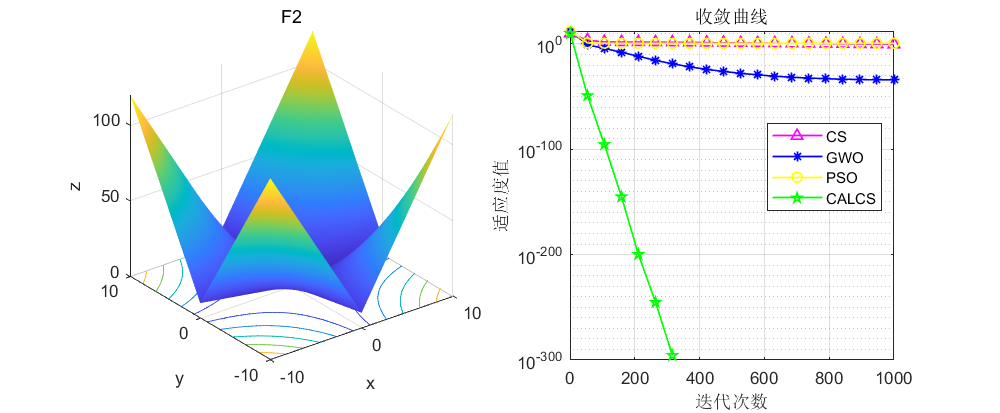

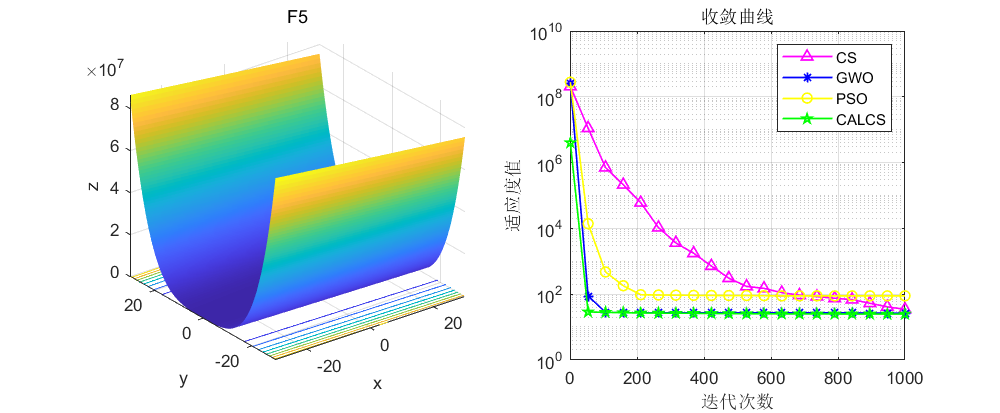

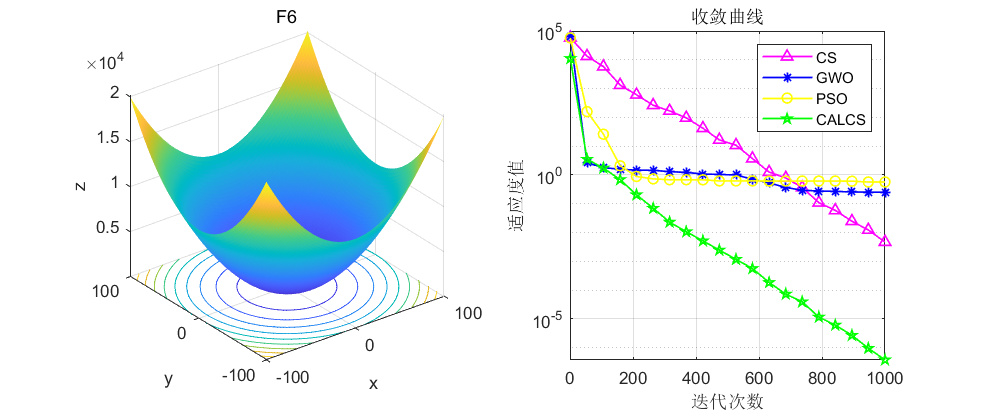

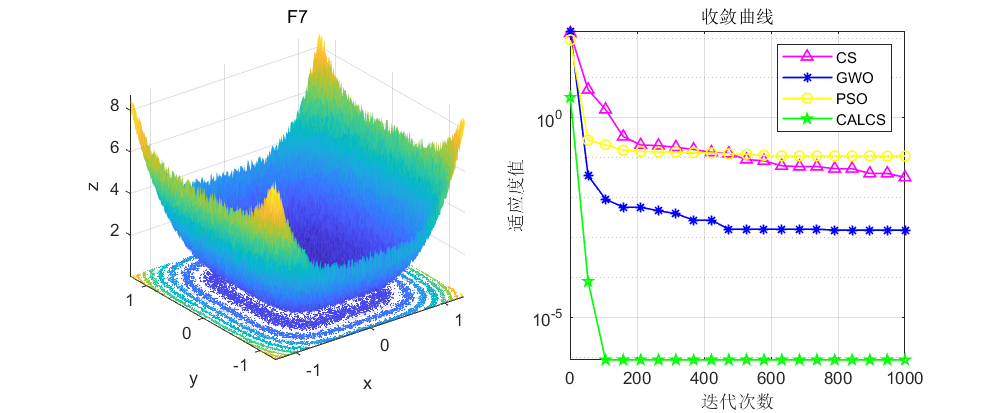

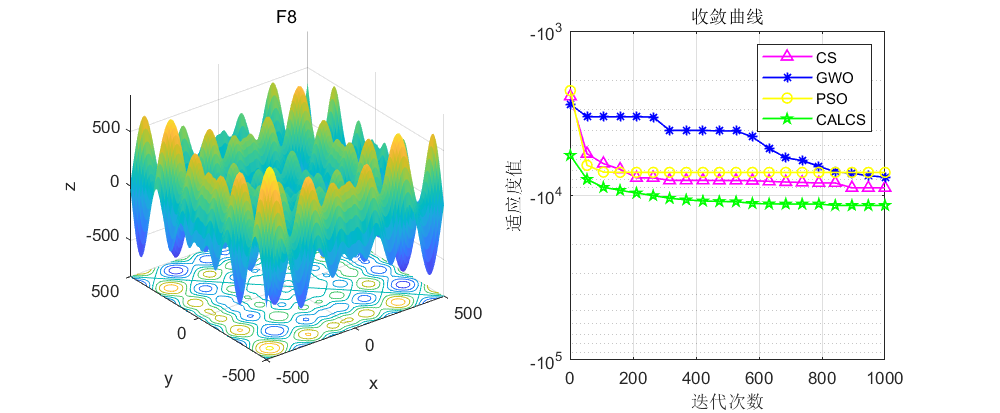

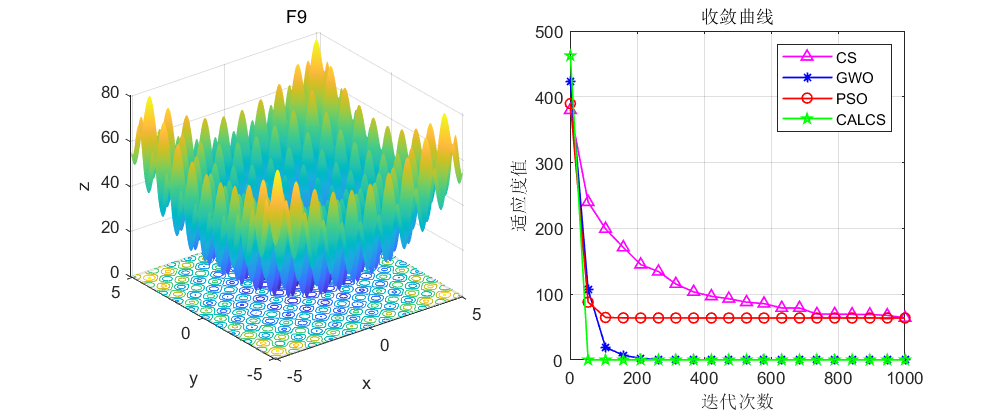

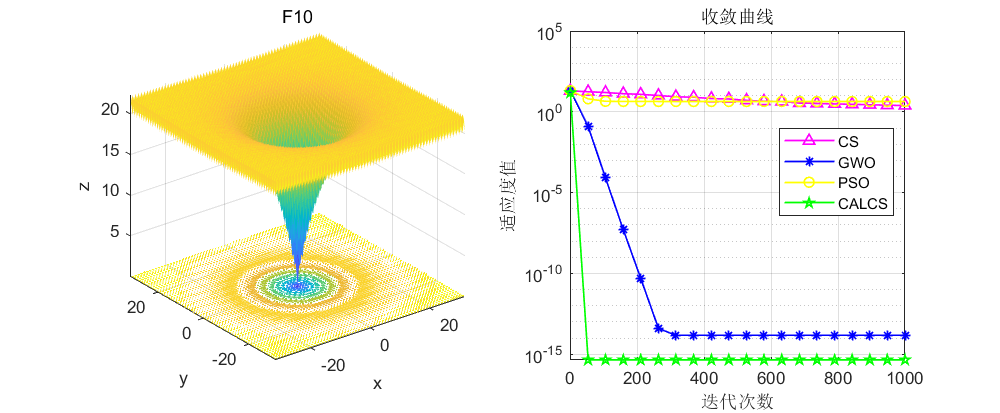

The figures above show the test results on the CEC2005 function set. CALCS is the improved cuckoo algorithm proposed in this article, CS is the original cuckoo optimization algorithm, GWO is the grey wolf optimization algorithm, and PSO is the particle swarm optimization algorithm.

Each algorithm runs for 1000 iterations with a population size of 30.

Result analysis: On both the unimodal and multimodal test functions, the cuckoo optimization algorithm that combines Cauchy mutation and adaptive Levy flight achieves good optimization results.

Code display

%%

clear

clc

close all

number='F1'; % select the benchmark function; replace as needed: F1~F23

[lower_bound,upper_bound,variables_no,fobj]=CEC2005(number); % [lb,ub,D,y]: lower bound, upper bound, dimension, objective function

pop_size=30; % population members

max_iter=1000; % maximum number of iterations

%% GWO

[fMin , bestX,GWO_convergence_curve ] =GWO(pop_size,max_iter,lower_bound,upper_bound,variables_no,fobj);

display(['The best optimal value of the objective function found by GWO for ' [num2str(number)],' is : ', num2str(fMin)]);

fprintf ('Best solution obtained by GWO: %s\n', num2str(bestX,'%e '));

%% PSO

[Best_pos,Best_score, PSO_convergence_curve ] = PSO(pop_size,max_iter,lower_bound,upper_bound,variables_no,fobj); % Call PSO

fprintf ('Best solution obtained by PSO: %s\n', num2str(Best_score,'%e '));

display(['The best optimal value of the objective function found by PSO for ' [num2str(number)],' is : ', num2str(Best_pos)]);

%% CS

[CSbestx,CS_Score,CS_convergence_curve]=CS(pop_size,max_iter,lower_bound,upper_bound,variables_no,fobj); % CS returns [best nest, best value, convergence curve]

display(['The best optimal value of the objective function found by CS for ' [num2str(number)],' is : ', num2str(CS_Score)]);

fprintf ('Best solution obtained by CS: %s\n', num2str(CSbestx,'%e '));

%% CALCS

[CALCSbestx,CALCS_Score,CALCS_convergence_curve]=CALCS(pop_size,max_iter,lower_bound,upper_bound,variables_no,fobj); % assuming CALCS returns its outputs in the same order as CS

display(['The best optimal value of the objective function found by CALCS for ' [num2str(number)],' is : ', num2str(CALCS_Score)]);

fprintf ('Best solution obtained by CALCS: %s\n', num2str(CALCSbestx,'%e '));

%% Figure

figure1 = figure('Color',[1 1 1]);

G1=subplot(1,2,1,'Parent',figure1);

func_plot(number)

title(number)

xlabel('x')

ylabel('y')

zlabel('z')

G2=subplot(1,2,2,'Parent',figure1);

CNT=20;

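k=round(linspace(1,max_iter,CNT)); % pick CNT evenly spaced sample points

% Note: if the convergence curve shows only a few sparse markers, too few points were sampled; increase the number gradually (100, 200, 300, ...)

% Conversely, if the markers on the curve are very dense, too many points were sampled; reduce the number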

iter=1:1:max_iter;

if ~strcmp(number,'F16')&&~strcmp(number,'F9')&&~strcmp(number,'F11') % these functions converge too quickly for semilogy, so they are drawn with plot instead

semilogy(iter(k),CS_convergence_curve(k),'m-^','linewidth',1);

hold on

semilogy(iter(k),GWO_convergence_curve(k),'b-*','linewidth',1);

hold on

semilogy(iter(k),PSO_convergence_curve(k),'r-o','linewidth',1);

hold on

semilogy(iter(k),CALCS_convergence_curve(k),'g-p','linewidth',1);

else

plot(iter(k),CS_convergence_curve(k),'m-^','linewidth',1);

hold on

plot(iter(k),GWO_convergence_curve(k),'b-*','linewidth',1);

hold on

plot(iter(k),PSO_convergence_curve(k),'r-o','linewidth',1);

hold on

plot(iter(k),CALCS_convergence_curve(k),'g-p','linewidth',1);

end

grid on;

title('Convergence curve')

xlabel('Iteration');

ylabel('Fitness value');

box on

legend('CS','GWO','PSO','CALCS')

set(gcf,'position',[300,300,800,330])

Basic cuckoo algorithm:

function [bestnest,fmin,lhy]=CS(n,N_IterTotal,lb,ub,nd,fobj)

pa=0.25; % Discovery rate of alien eggs/solutions

%% Simple bounds of the search domain

Lb=lb.*ones(1,nd); % Lower bounds

Ub=ub.*ones(1,nd); % Upper bounds

% Random initial solutions

nest=initialization(n,nd,Ub,Lb);

% Get the current best of the initial population

fitness=10^10*ones(n,1);

[fmin,bestnest,nest,fitness]=get_best_nest(nest,nest,fitness,fobj);

%% Starting iterations

for iter=1:N_IterTotal

% Generate new solutions (but keep the current best)

new_nest=get_cuckoos(nest,bestnest,Lb,Ub);

[fnew,best,nest,fitness]=get_best_nest(nest,new_nest,fitness,fobj);

% Discovery and randomization

new_nest=empty_nests(nest,Lb,Ub,pa) ;

% Evaluate this set of solutions

[fnew,best,nest,fitness]=get_best_nest(nest,new_nest,fitness,fobj);

% Find the best objective so far

if fnew<fmin

fmin=fnew;

bestnest=best;

end

lhy(iter) = fmin;

end %% End of iterations

%% --------------- All subfunctions are listed below ------------------

%% Get cuckoos by random walk

function nest=get_cuckoos(nest,best,Lb,Ub)

% Levy flights

n=size(nest,1);

% For details about Levy flights, please read Chapter 3 of the book:

% X. S. Yang, Nature-Inspired Optimization Algorithms, Elsevier, (2014).

beta=3/2;

sigma=(gamma(1+beta)*sin(pi*beta/2)/(gamma((1+beta)/2)*beta*2^((beta-1)/2)))^(1/beta);

for j=1:n

s=nest(j,:);

% This is a simple way of implementing Levy flights

% For standard random walks, use step=1;

%% Levy flights by Mantegna's algorithm

u=randn(size(s))*sigma;

v=randn(size(s));

step=u./abs(v).^(1/beta);

% In the next equation, the difference factor (s-best) means that

% when the solution is the best solution, it remains unchanged.

stepsize=0.01*step.*(s-best);

% Here the factor 0.01 comes from the fact that L/100 should be the

% typical step size for walks/flights where L is the problem scale;

% otherwise, Levy flights may become too aggressive/efficient,

% which makes new solutions (even) jump outside of the design domain

% (and thus wastes evaluations).

% Now the actual random walks or flights

s=s+stepsize.*randn(size(s));

% Apply simple bounds/limits

nest(j,:)=simplebounds(s,Lb,Ub);

end

%% Find the current best solution/nest among the population

function [fmin,best,nest,fitness]=get_best_nest(nest,newnest,fitness,fobj)

% Evaluating all new solutions

for j=1:size(nest,1)

fnew=fobj(newnest(j,:));

if fnew<=fitness(j)

fitness(j)=fnew;

nest(j,:)=newnest(j,:);

end

end

% Find the current best

[fmin,K]=min(fitness) ;

best=nest(K,:);

%% Replace some not-so-good nests by constructing new solutions/nests

function new_nest=empty_nests(nest,Lb,Ub,pa)

% A fraction of worse nests are discovered with a probability pa

n=size(nest,1);

% Discovered or not -- a status vector

K=rand(size(nest))>pa;

% Notes: In the real world, if a cuckoo's egg is very similar to

% a host's eggs, then this cuckoo's egg is less likely to be discovered.

% so the fitness should be related to the difference in solutions.

% Therefore, it is a good idea to do a random walk in a biased way

% with some random step sizes.

%% New solution by biased/selective random walks

stepsize=rand*(nest(randperm(n),:)-nest(randperm(n),:));

new_nest=nest+stepsize.*K;

for j=1:size(new_nest,1)

s=new_nest(j,:);

new_nest(j,:)=simplebounds(s,Lb,Ub);

end

% Application of simple bounds/constraints

function s=simplebounds(s,Lb,Ub)

% Apply the lower bound

ns_tmp=s;

I=ns_tmp<Lb;

ns_tmp(I)=Lb(I);

% Apply the upper bounds

J=ns_tmp>Ub;

ns_tmp(J)=Ub(J);

% Update this new move

s=ns_tmp;

%% You can replace the following objective function

%% by your own functions (also update the Lb and Ub)

To get the complete code, reply with the following keyword in the account backend. Keyword:

CALCS