This article is based on a study of the Istio 1.18.0 source code.

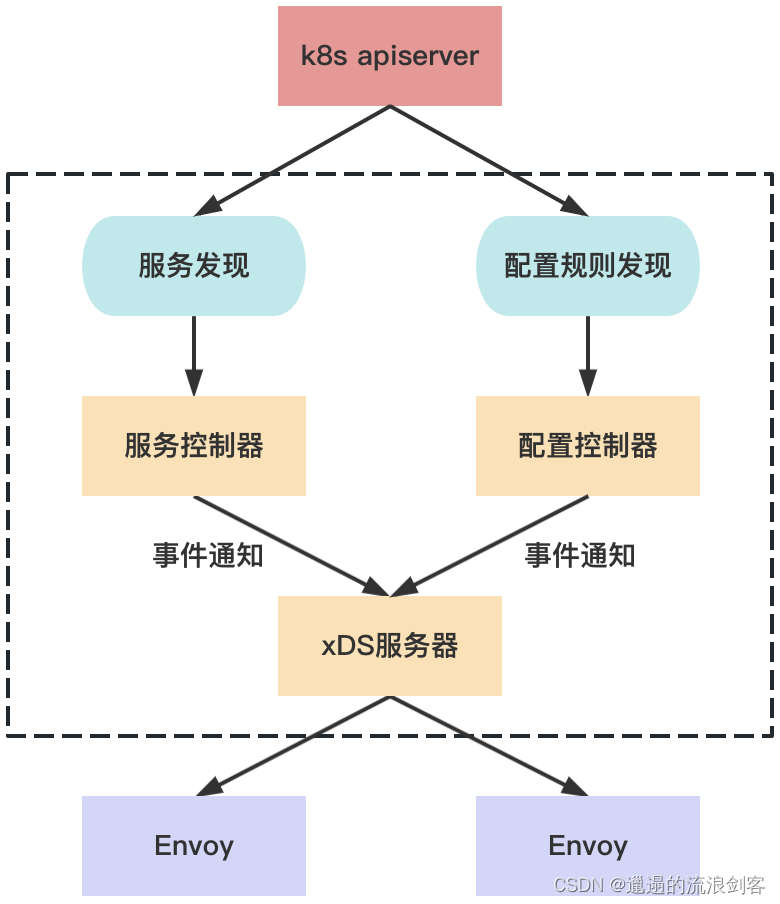

1. Working principle of Pilot-Discovery

Pilot-Discovery is the core of the Istio control plane and is responsible for traffic management in the service mesh and configuration delivery between the control plane and the data plane.

Pilot-Discovery obtains and aggregates service information from service registries (such as Kubernetes), obtains configuration rules from the Kubernetes API Server, converts the service information and configuration data into the standard data structures of the xDS interface, and delivers them to Envoy on the data plane over gRPC.
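To make the "obtain and aggregate" step concrete, here is a minimal Go sketch of merging services from several registries into one view. The `Registry`, `Service`, and `staticRegistry` types and the `aggregate` function are hypothetical illustrations, not Istio's aggregate controller, and the "first registry wins on duplicate hostnames" rule is an assumption made only for this sketch.

```go
package main

import (
	"fmt"
	"sort"
)

// Service is a hypothetical, heavily simplified service record.
type Service struct {
	Hostname string
	Registry string
}

// Registry is a hypothetical stand-in for a service registry such as Kubernetes.
type Registry interface {
	Name() string
	Services() []Service
}

// staticRegistry returns a fixed list of hostnames.
type staticRegistry struct {
	name  string
	hosts []string
}

func (r staticRegistry) Name() string { return r.name }

func (r staticRegistry) Services() []Service {
	out := make([]Service, 0, len(r.hosts))
	for _, h := range r.hosts {
		out = append(out, Service{Hostname: h, Registry: r.name})
	}
	return out
}

// aggregate merges the services of every registry into one sorted list.
// Assumption of this sketch: if two registries report the same hostname,
// the registry listed first wins.
func aggregate(regs []Registry) []Service {
	seen := map[string]bool{}
	var out []Service
	for _, r := range regs {
		for _, s := range r.Services() {
			if seen[s.Hostname] {
				continue
			}
			seen[s.Hostname] = true
			out = append(out, s)
		}
	}
	sort.Slice(out, func(i, j int) bool { return out[i].Hostname < out[j].Hostname })
	return out
}

func main() {
	regs := []Registry{
		staticRegistry{name: "Kubernetes", hosts: []string{"reviews.default.svc.cluster.local", "ratings.default.svc.cluster.local"}},
		staticRegistry{name: "External", hosts: []string{"api.example.com", "reviews.default.svc.cluster.local"}},
	}
	for _, s := range aggregate(regs) {
		fmt.Printf("%s (from %s)\n", s.Hostname, s.Registry)
	}
}
```

The aggregated list is what a later stage would translate into xDS resources; that translation is out of scope for this sketch.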

2. Pilot-Discovery code structure

The entry point of Pilot-Discovery is the main function in pilot/cmd/pilot-discovery/main.go, which creates the Pilot Server. The Pilot Server mainly contains three parts of logic:

- ConfigController: manages all kinds of configuration data, including user-created traffic management rules and policies

- ServiceController: obtains service discovery data from the Service Registry

- DiscoveryService: mainly includes the following logic:

  - Starts the gRPC Server and accepts connection requests from Envoy

  - Receives xDS requests from Envoy, obtains configuration and service information from ConfigController and ServiceController, generates response messages, and sends them to Envoy

  - Listens for configuration change messages from ConfigController and service change messages from ServiceController, and pushes the changed configuration and services to Envoy through the xDS interface
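The last DiscoveryService responsibility, fanning a change out to every connected Envoy, can be sketched as follows. `discoveryServer`, `connection`, `PushRequest`, `connect`, and `configUpdate` are hypothetical names for illustration; a real xDS stream is replaced by a slice that records what would have been sent.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// PushRequest is a hypothetical, simplified description of what changed.
type PushRequest struct {
	Full   bool
	Reason string
}

// connection stands in for one Envoy's xDS stream; pushes records what would
// have been sent on it.
type connection struct {
	proxyID string
	pushes  []PushRequest
}

// discoveryServer is a hypothetical sketch, not Istio's xds.DiscoveryServer.
type discoveryServer struct {
	mu    sync.Mutex
	conns map[string]*connection
}

func newDiscoveryServer() *discoveryServer {
	return &discoveryServer{conns: map[string]*connection{}}
}

// connect registers a proxy, as if it had just opened an xDS stream.
func (s *discoveryServer) connect(proxyID string) *connection {
	s.mu.Lock()
	defer s.mu.Unlock()
	c := &connection{proxyID: proxyID}
	s.conns[proxyID] = c
	return c
}

// configUpdate would be called from the config/service change callbacks and
// fans the change out to every connected proxy.
func (s *discoveryServer) configUpdate(req PushRequest) {
	s.mu.Lock()
	defer s.mu.Unlock()
	for _, c := range s.conns {
		c.pushes = append(c.pushes, req)
	}
}

func main() {
	s := newDiscoveryServer()
	s.connect("productpage-v1.default")
	s.connect("reviews-v2.default")
	s.configUpdate(PushRequest{Full: true, Reason: "config update"})

	// Print in a stable order; Go map iteration order is random.
	ids := make([]string, 0, len(s.conns))
	for id := range s.conns {
		ids = append(ids, id)
	}
	sort.Strings(ids)
	for _, id := range ids {
		fmt.Printf("%s received %d push(es)\n", id, len(s.conns[id].pushes))
	}
}
```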

3. Pilot-Discovery startup process

The code to create Pilot Server is as follows:

```go
// pilot/pkg/bootstrap/server.go

func NewServer(args *PilotArgs, initFuncs ...func(*Server)) (*Server, error) {
	e := model.NewEnvironment()
	e.DomainSuffix = args.RegistryOptions.KubeOptions.DomainSuffix
	e.SetLedger(buildLedger(args.RegistryOptions))

	ac := aggregate.NewController(aggregate.Options{
		MeshHolder: e,
	})
	e.ServiceDiscovery = ac

	s := &Server{
		clusterID: getClusterID(args),
		environment: e,
		fileWatcher: filewatcher.NewWatcher(),
		httpMux: http.NewServeMux(),
		monitoringMux: http.NewServeMux(),
		readinessProbes: make(map[string]readinessProbe),
		readinessFlags: &readinessFlags{},
		workloadTrustBundle: tb.NewTrustBundle(nil),
		server: server.New(),
		shutdownDuration: args.ShutdownDuration,
		internalStop: make(chan struct{}),
		istiodCertBundleWatcher: keycertbundle.NewWatcher(),
		webhookInfo: &webhookInfo{},
	}

	// Apply custom initialization functions.
	for _, fn := range initFuncs {
		fn(s)
	}
	// Initialize workload Trust Bundle before XDS Server
	e.TrustBundle = s.workloadTrustBundle
	// Initialize the DiscoveryServer
	s.XDSServer = xds.NewDiscoveryServer(e, args.PodName, s.clusterID, args.RegistryOptions.KubeOptions.ClusterAliases)

	prometheus.EnableHandlingTimeHistogram()

	// make sure we have a readiness probe before serving HTTP to avoid marking ready too soon
	s.initReadinessProbes()

	// Initialize the HTTP and gRPC servers and register the DiscoveryServer with the gRPC server
	s.initServers(args)
	if err := s.initIstiodAdminServer(args, s.webhookInfo.GetTemplates); err != nil {
		return nil, fmt.Errorf("error initializing debug server: %v", err)
	}
	if err := s.serveHTTP(); err != nil {
		return nil, fmt.Errorf("error serving http: %v", err)
	}

	// Apply the arguments to the configuration.
	if err := s.initKubeClient(args); err != nil {
		return nil, fmt.Errorf("error initializing kube client: %v", err)
	}

	// used for both initKubeRegistry and initClusterRegistries
	args.RegistryOptions.KubeOptions.EndpointMode = kubecontroller.DetectEndpointMode(s.kubeClient)

	s.initMeshConfiguration(args, s.fileWatcher)
	spiffe.SetTrustDomain(s.environment.Mesh().GetTrustDomain())

	s.initMeshNetworks(args, s.fileWatcher)
	s.initMeshHandlers()
	s.environment.Init()
	if err := s.environment.InitNetworksManager(s.XDSServer); err != nil {
		return nil, err
	}

	// Options based on the current 'defaults' in istio.
	caOpts := &caOptions{
		TrustDomain: s.environment.Mesh().TrustDomain,
		Namespace: args.Namespace,
		DiscoveryFilter: args.RegistryOptions.KubeOptions.GetFilter(),
		ExternalCAType: ra.CaExternalType(externalCaType),
		CertSignerDomain: features.CertSignerDomain,
	}

	if caOpts.ExternalCAType == ra.ExtCAK8s {
		// Older environment variable preserved for backward compatibility
		caOpts.ExternalCASigner = k8sSigner
	}
	// CA signing certificate must be created first if needed.
	if err := s.maybeCreateCA(caOpts); err != nil {
		return nil, err
	}

	// Initialize the configController and serviceController
	if err := s.initControllers(args); err != nil {
		return nil, err
	}

	s.XDSServer.InitGenerators(e, args.Namespace, s.internalDebugMux)

	// Initialize workloadTrustBundle after CA has been initialized
	if err := s.initWorkloadTrustBundle(args); err != nil {
		return nil, err
	}

	// Parse and validate Istiod Address.
	istiodHost, _, err := e.GetDiscoveryAddress()
	if err != nil {
		return nil, err
	}

	// Create Istiod certs and setup watches.
	if err := s.initIstiodCerts(args, string(istiodHost)); err != nil {
		return nil, err
	}

	// Secure gRPC Server must be initialized after CA is created as may use a Citadel generated cert.
	if err := s.initSecureDiscoveryService(args); err != nil {
		return nil, fmt.Errorf("error initializing secure gRPC Listener: %v", err)
	}

	// common https server for webhooks (e.g. injection, validation)
	if s.kubeClient != nil {
		s.initSecureWebhookServer(args)
		wh, err := s.initSidecarInjector(args)
		if err != nil {
			return nil, fmt.Errorf("error initializing sidecar injector: %v", err)
		}
		s.webhookInfo.mu.Lock()
		s.webhookInfo.wh = wh
		s.webhookInfo.mu.Unlock()
		if err := s.initConfigValidation(args); err != nil {
			return nil, fmt.Errorf("error initializing config validator: %v", err)
		}
	}

	// This should be called only after controllers are initialized.
	// Register event callback functions with the configController and serviceController
	s.initRegistryEventHandlers()

	// Set up the DiscoveryServer start function
	s.initDiscoveryService()

	// Notice that the order of authenticators matters, since at runtime
	// authenticators are activated sequentially and the first successful attempt
	// is used as the authentication result.
	authenticators := []security.Authenticator{
		&authenticate.ClientCertAuthenticator{},
	}
	if args.JwtRule != "" {
		jwtAuthn, err := initOIDC(args)
		if err != nil {
			return nil, fmt.Errorf("error initializing OIDC: %v", err)
		}
		if jwtAuthn == nil {
			return nil, fmt.Errorf("JWT authenticator is nil")
		}
		authenticators = append(authenticators, jwtAuthn)
	}
	// The k8s JWT authenticator requires the multicluster registry to be initialized,
	// so we build it later.
	if s.kubeClient != nil {
		authenticators = append(authenticators,
			kubeauth.NewKubeJWTAuthenticator(s.environment.Watcher, s.kubeClient.Kube(), s.clusterID, s.multiclusterController.GetRemoteKubeClient, features.JwtPolicy))
	}
	if len(features.TrustedGatewayCIDR) > 0 {
		authenticators = append(authenticators, &authenticate.XfccAuthenticator{})
	}
	if features.XDSAuth {
		s.XDSServer.Authenticators = authenticators
	}
	caOpts.Authenticators = authenticators

	// Start CA or RA server. This should be called after CA and Istiod certs have been created.
	s.startCA(caOpts)

	// TODO: don't run this if galley is started, one ctlz is enough
	if args.CtrlZOptions != nil {
		_, _ = ctrlz.Run(args.CtrlZOptions, nil)
	}

	// This must be last, otherwise we will not know which informers to register
	if s.kubeClient != nil {
		s.addStartFunc(func(stop <-chan struct{}) error {
			s.kubeClient.RunAndWait(stop)
			return nil
		})
	}

	return s, nil
}
```

The core logic of the NewServer() method is as follows:

- Initialize the DiscoveryServer

- Initialize the HTTP and gRPC Servers, and register the DiscoveryServer with the gRPC Server

- Initialize the ConfigController and ServiceController

- Register event callback functions with the ConfigController and ServiceController, so that the DiscoveryServer is notified when configuration or service information changes

- Set up the DiscoveryServer start function
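NewServer() mostly registers work rather than running it: components hand start functions to the server (for example via `addStartFunc`) and they are run later, together. The sketch below shows that register-then-start pattern in isolation. The `server` and `startFunc` types are hypothetical simplifications of `server.Instance`, and running the functions in registration order, stopping at the first error, is an assumption of this sketch.

```go
package main

import (
	"fmt"
	"sync"
)

// startFunc mirrors the shape of the function passed to addStartFunc in
// NewServer: it receives a stop channel and returns an error.
type startFunc func(stop <-chan struct{}) error

// server is a hypothetical, minimal task registry, not Istio's server.Instance.
type server struct {
	mu    sync.Mutex
	funcs []startFunc
}

// addStartFunc records a component's start task without running it.
func (s *server) addStartFunc(fn startFunc) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.funcs = append(s.funcs, fn)
}

// start runs every registered task in registration order and stops at the
// first error.
func (s *server) start(stop <-chan struct{}) error {
	s.mu.Lock()
	defer s.mu.Unlock()
	for i, fn := range s.funcs {
		if err := fn(stop); err != nil {
			return fmt.Errorf("start func %d: %w", i, err)
		}
	}
	return nil
}

func main() {
	var order []string
	s := &server{}
	s.addStartFunc(func(stop <-chan struct{}) error {
		order = append(order, "kube informers")
		return nil
	})
	s.addStartFunc(func(stop <-chan struct{}) error {
		order = append(order, "discovery service")
		return nil
	})

	stop := make(chan struct{})
	defer close(stop)
	if err := s.start(stop); err != nil {
		fmt.Println("error:", err)
	}
	fmt.Println(order)
}
```

Deferring the actual start lets NewServer finish wiring every component before anything begins consuming events.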

Pilot Server is defined as follows:

```go
// pilot/pkg/bootstrap/server.go

type Server struct {
	// discoveryServer
	XDSServer *xds.DiscoveryServer

	clusterID cluster.ID
	// Collection of APIs required by the Pilot environment
	environment *model.Environment

	// Handles the registry of the primary Kubernetes cluster
	kubeClient kubelib.Client

	// Handles the registries of multiple Kubernetes clusters
	multiclusterController *multicluster.Controller

	// Controller that handles all configuration rules in one place
	configController model.ConfigStoreController
	// Configuration rule caches
	ConfigStores []model.ConfigStoreController
	// Responsible for ServiceEntry service discovery
	serviceEntryController *serviceentry.Controller

	httpServer *http.Server // debug, monitoring and readiness Server.
	httpAddr string
	httpsServer *http.Server // webhooks HTTPS Server.

	grpcServer *grpc.Server
	grpcAddress string
	secureGrpcServer *grpc.Server
	secureGrpcAddress string

	// monitoringMux listens on monitoringAddr(:15014).
	// Currently runs prometheus monitoring and debug (if enabled).
	monitoringMux *http.ServeMux
	// internalDebugMux is a mux for *internal* calls to the debug interface. That is, authentication is disabled.
	internalDebugMux *http.ServeMux

	// httpMux listens on the httpAddr (8080).
	// If a Gateway is used in front and https is off it is also multiplexing
	// the rest of the features if their port is empty.
	// Currently runs readiness and debug (if enabled)
	httpMux *http.ServeMux

	// httpsMux listens on the httpsAddr(15017), handling webhooks
	// If the address is empty, the webhooks will be set on the default httpPort.
	httpsMux *http.ServeMux // webhooks

	// fileWatcher used to watch mesh config, networks and certificates.
	fileWatcher filewatcher.FileWatcher

	// certWatcher watches the certificates for changes and triggers a notification to Istiod.
	cacertsWatcher *fsnotify.Watcher
	dnsNames []string

	CA *ca.IstioCA
	RA ra.RegistrationAuthority

	// TrustAnchors for workload to workload mTLS
	workloadTrustBundle *tb.TrustBundle
	certMu sync.RWMutex
	istiodCert *tls.Certificate
	istiodCertBundleWatcher *keycertbundle.Watcher
	// All Pilot components register their start tasks with this object,
	// so that they can be started and managed together in the Start() method
	server server.Instance

	readinessProbes map[string]readinessProbe
	readinessFlags *readinessFlags

	// duration used for graceful shutdown.
	shutdownDuration time.Duration

	// internalStop is closed when the server is shutdown. This should be avoided as much as possible, in
	// favor of AddStartFunc. This is only required if we *must* start something outside of this process.
	// For example, everything depends on mesh config, so we use it there rather than trying to sequence everything
	// in AddStartFunc
	internalStop chan struct{}

	webhookInfo *webhookInfo

	statusReporter *distribution.Reporter
	statusManager *status.Manager
	// RWConfigStore is the configstore which allows updates, particularly for status.
	RWConfigStore model.ConfigStoreController
}
```

The Pilot-Discovery startup process is as follows:

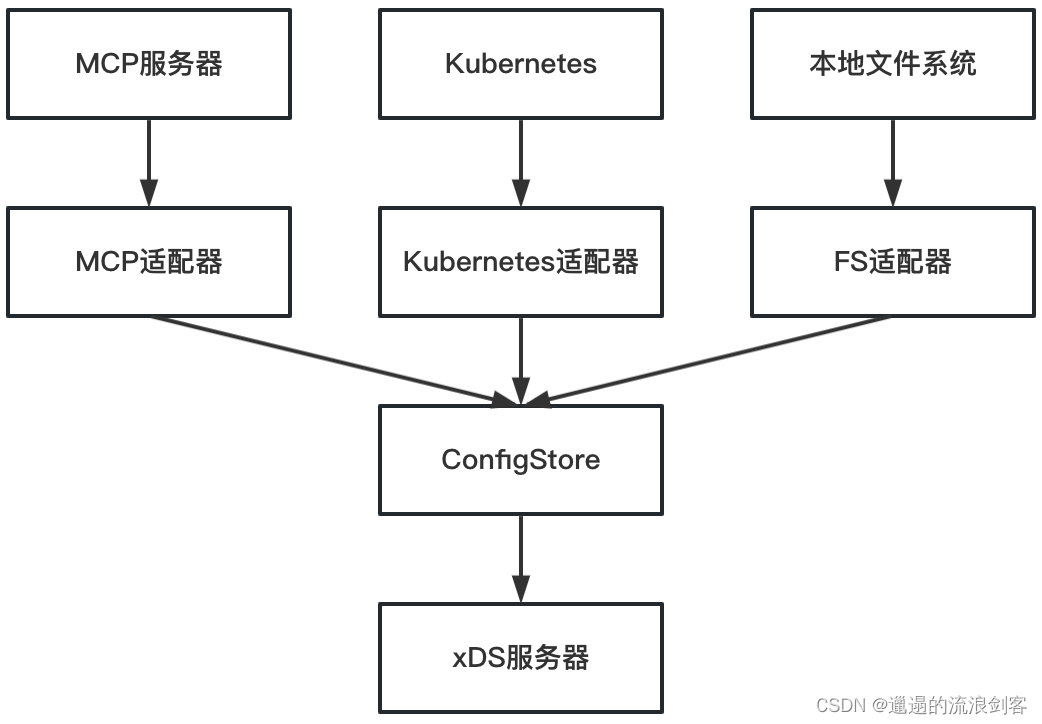

4. Configuration rule discovery: ConfigController

Pilot's configuration rules are network routing rules and network security rules, including resources such as VirtualService, DestinationRule, Gateway, PeerAuthentication, and RequestAuthentication. Three types of ConfigController are currently supported:

- MCP: the Mesh Configuration Protocol is a configuration transport protocol for the service mesh. It decouples Pilot from the underlying platform (Kubernetes, the file system, or another registry), so that Pilot does not need to be aware of platform differences and can focus on generating and distributing Envoy xDS configuration.

- Kubernetes: Kubernetes-based config discovery uses the List-Watch capability of Kubernetes Informers. In a Kubernetes cluster, Config exists in the form of custom resources. Pilot watches configuration rule resources on the Kubernetes API Server through the configuration controller (CRD Controller), maintains a cache of all resources, and triggers the event-handling callback functions.

- File: a file monitor periodically reads local configuration files, caches the configuration rules in memory, and maintains add, update, and delete events for them. When the cache changes, the in-memory controller is notified asynchronously to execute the event callback functions.
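The File controller's job of turning periodic re-reads into add, update, and delete events boils down to diffing two snapshots. This Go sketch shows only that diff step; the `diff` function, the `change` type, and the idea of representing each file-backed config object as a `"namespace/name"` key mapped to its serialized content are assumptions for illustration, not Istio's file controller.

```go
package main

import (
	"fmt"
	"sort"
)

// Event mirrors the add/update/delete events a config controller emits.
type Event string

const (
	EventAdd    Event = "add"
	EventUpdate Event = "update"
	EventDelete Event = "delete"
)

// change is one detected difference between two snapshots.
type change struct {
	Key   string // "namespace/name" of a config object
	Event Event
}

// diff compares the cached snapshot with a freshly read one. Values stand in
// for serialized config content; any difference counts as an update.
func diff(cached, fresh map[string]string) []change {
	var out []change
	for k, v := range fresh {
		old, ok := cached[k]
		switch {
		case !ok:
			out = append(out, change{k, EventAdd})
		case old != v:
			out = append(out, change{k, EventUpdate})
		}
	}
	for k := range cached {
		if _, ok := fresh[k]; !ok {
			out = append(out, change{k, EventDelete})
		}
	}
	// Sort for deterministic output; map iteration order is random in Go.
	sort.Slice(out, func(i, j int) bool { return out[i].Key < out[j].Key })
	return out
}

func main() {
	cached := map[string]string{"default/reviews": "v1", "default/ratings": "v1"}
	fresh := map[string]string{"default/reviews": "v2", "default/details": "v1"}
	for _, c := range diff(cached, fresh) {
		fmt.Println(c.Event, c.Key)
	}
}
```

Each resulting change would then be handed to the registered event callbacks, after which the fresh snapshot replaces the cache.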

1) Core interface of ConfigController

ConfigController implements the ConfigStoreController interface:

```go
// pilot/pkg/model/config.go

type ConfigStoreController interface {
	// Config cache interface
	ConfigStore

	// Register event handlers
	// RegisterEventHandler adds a handler to receive config update events for a
	// configuration type
	RegisterEventHandler(kind config.GroupVersionKind, handler EventHandler)

	// Run the controller
	// Run until a signal is received.
	// Run *should* block, so callers should typically call `go controller.Run(stop)`
	Run(stop <-chan struct{})

	// Whether the config cache has been synced
	// HasSynced returns true after initial cache synchronization is complete
	HasSynced() bool
}
```

ConfigStoreController embeds the ConfigStore interface. ConfigStore is the core resource-cache interface of the controller and provides create, read, update, and delete operations on Config resources:

```go
// pilot/pkg/model/config.go

type ConfigStore interface {
	// Schemas exposes the configuration type schema known by the config store.
	// The type schema defines the bidirectional mapping between configuration
	// types and the protobuf encoding schema.
	Schemas() collection.Schemas

	// Get retrieves a configuration element by a type and a key
	Get(typ config.GroupVersionKind, name, namespace string) *config.Config

	// List returns objects by type and namespace.
	// Use "" for the namespace to list across namespaces.
	List(typ config.GroupVersionKind, namespace string) []config.Config

	// Create adds a new configuration object to the store. If an object with the
	// same name and namespace for the type already exists, the operation fails
	// with no side effects.
	Create(config config.Config) (revision string, err error)

	// Update modifies an existing configuration object in the store. Update
	// requires that the object has been created. Resource version prevents
	// overriding a value that has been changed between prior _Get_ and _Put_
	// operation to achieve optimistic concurrency. This method returns a new
	// revision if the operation succeeds.
	Update(config config.Config) (newRevision string, err error)
	UpdateStatus(config config.Config) (newRevision string, err error)

	// Patch applies only the modifications made in the PatchFunc rather than doing a full replace. Useful to avoid
	// read-modify-write conflicts when there are many concurrent-writers to the same resource.
	Patch(orig config.Config, patchFn config.PatchFunc) (string, error)

	// Delete removes an object from the store by key
	// For k8s, resourceVersion must be fulfilled before a deletion is carried out.
	// If not possible, a 409 Conflict status will be returned.
	Delete(typ config.GroupVersionKind, name, namespace string, resourceVersion *string) error
}
```
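The Create and Update comments describe two guarantees: Create fails without side effects on a duplicate, and Update uses the resource version for optimistic concurrency. The following in-memory sketch shows both. `memStore`, the trimmed-down `Config`, the error values, and the integer-counter revisions are all hypothetical choices for illustration, not Pilot's own memory store.

```go
package main

import (
	"errors"
	"fmt"
	"strconv"
	"sync"
)

// Config is a hypothetical, trimmed-down config object.
type Config struct {
	Kind, Name, Namespace string
	ResourceVersion       string
	Spec                  string
}

var (
	errExists   = errors.New("already exists")
	errNotFound = errors.New("not found")
	errConflict = errors.New("resource version conflict")
)

type key struct{ kind, namespace, name string }

// memStore is a hypothetical sketch, not Pilot's memory config store.
type memStore struct {
	mu      sync.RWMutex
	next    int
	objects map[key]Config
}

func newMemStore() *memStore { return &memStore{objects: map[key]Config{}} }

// bump returns a new revision; callers must hold s.mu.
func (s *memStore) bump() string { s.next++; return strconv.Itoa(s.next) }

// Create fails with no side effects if the object already exists.
func (s *memStore) Create(c Config) (string, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	k := key{c.Kind, c.Namespace, c.Name}
	if _, ok := s.objects[k]; ok {
		return "", errExists
	}
	c.ResourceVersion = s.bump()
	s.objects[k] = c
	return c.ResourceVersion, nil
}

// Update succeeds only if the caller's ResourceVersion matches the stored one,
// i.e. nobody changed the object since the caller read it.
func (s *memStore) Update(c Config) (string, error) {
	s.mu.Lock()
	defer s.mu.Unlock()
	k := key{c.Kind, c.Namespace, c.Name}
	cur, ok := s.objects[k]
	if !ok {
		return "", errNotFound
	}
	if cur.ResourceVersion != c.ResourceVersion {
		return "", errConflict
	}
	c.ResourceVersion = s.bump()
	s.objects[k] = c
	return c.ResourceVersion, nil
}

// Get returns a copy of the stored object, or nil if it does not exist.
func (s *memStore) Get(kind, name, namespace string) *Config {
	s.mu.RLock()
	defer s.mu.RUnlock()
	c, ok := s.objects[key{kind, namespace, name}]
	if !ok {
		return nil
	}
	return &c
}

func main() {
	s := newMemStore()
	rv, _ := s.Create(Config{Kind: "VirtualService", Name: "reviews", Namespace: "default", Spec: "v1"})
	stale := *s.Get("VirtualService", "reviews", "default")

	fresh := stale
	fresh.Spec = "v2"
	if _, err := s.Update(fresh); err != nil {
		fmt.Println("unexpected:", err)
	}
	// A second writer still holds the old revision, so its update is rejected.
	stale.Spec = "v3"
	_, err := s.Update(stale)
	fmt.Println("created at", rv, "stale update error:", err)
}
```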

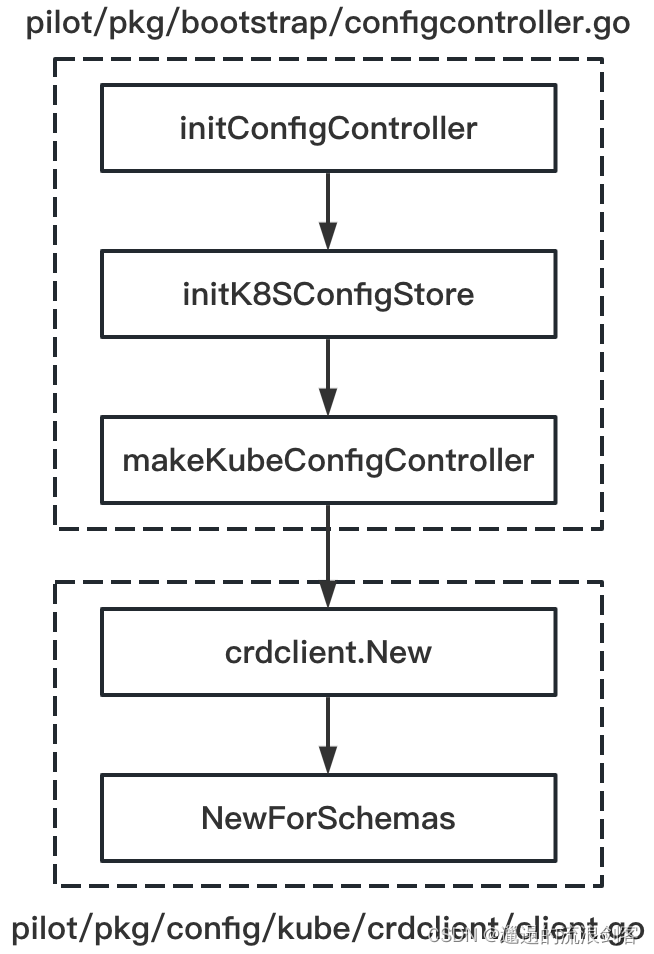

2) Initialization of ConfigController

The Kubernetes ConfigController is essentially a CRD Operator that watches all Istio API resources on the Kubernetes API Server. Its initialization process is as follows:

The code of the crdclient.New() method is as follows:

```go
// pilot/pkg/config/kube/crdclient/client.go

func New(client kube.Client, opts Option) (*Client, error) {
	schemas := collections.Pilot
	if features.EnableGatewayAPI {
		schemas = collections.PilotGatewayAPI()
	}
	return NewForSchemas(client, opts, schemas)
}
```

All Istio Config resource types are defined in collections.Pilot. The code is as follows:

```go
// pkg/config/schema/collections/collections.gen.go

// Pilot contains only collections used by Pilot.
Pilot = collection.NewSchemasBuilder().
	MustAdd(AuthorizationPolicy).
	MustAdd(DestinationRule).
	MustAdd(EnvoyFilter).
	MustAdd(Gateway).
	MustAdd(PeerAuthentication).
	MustAdd(ProxyConfig).
	MustAdd(RequestAuthentication).
	MustAdd(ServiceEntry).
	MustAdd(Sidecar).
	MustAdd(Telemetry).
	MustAdd(VirtualService).
	MustAdd(WasmPlugin).
	MustAdd(WorkloadEntry).
	MustAdd(WorkloadGroup).
	Build()
```

The crdclient.New() method calls NewForSchemas():

```go
// pilot/pkg/config/kube/crdclient/client.go

func NewForSchemas(client kube.Client, opts Option, schemas collection.Schemas) (*Client, error) {
	schemasByCRDName := map[string]resource.Schema{}
	for _, s := range schemas.All() {
		// From the spec: "Its name MUST be in the format <.spec.name>.<.spec.group>."
		name := fmt.Sprintf("%s.%s", s.Plural(), s.Group())
		schemasByCRDName[name] = s
	}

	// Instantiate the CRD client
	out := &Client{
		domainSuffix: opts.DomainSuffix,
		schemas: schemas,
		schemasByCRDName: schemasByCRDName,
		revision: opts.Revision,
		queue: queue.NewQueue(1 * time.Second),
		kinds: map[config.GroupVersionKind]*cacheHandler{},
		handlers: map[config.GroupVersionKind][]model.EventHandler{},
		client: client,
		// Create the crdWatcher, which watches for CRD creation
		crdWatcher: crdwatcher.NewController(client),
		logger: scope.WithLabels("controller", opts.Identifier),
		namespacesFilter: opts.NamespacesFilter,
		crdWatches: map[config.GroupVersionKind]*waiter{
			gvk.KubernetesGateway: newWaiter(),
			gvk.GatewayClass: newWaiter(),
		},
	}

	// Add a callback: when a CRD is created, call handleCRDAdd
	out.crdWatcher.AddCallBack(func(name string) {
		handleCRDAdd(out, name)
	})

	// Get all CRDs currently present in the cluster
	known, err := knownCRDs(client.Ext())
	if err != nil {
		return nil, err
	}

	// Iterate over all Istio config resource types
	for _, s := range schemas.All() {
		// From the spec: "Its name MUST be in the format <.spec.name>.<.spec.group>."
		name := fmt.Sprintf("%s.%s", s.Plural(), s.Group())
		if s.IsBuiltin() {
			handleCRDAdd(out, name)
		} else {
			// The CRD for this Istio config resource type already exists, so call handleCRDAdd
			if _, f := known[name]; f {
				handleCRDAdd(out, name)
			} else {
				out.logger.Warnf("Skipping CRD %v as it is not present", s.GroupVersionKind())
			}
		}
	}
	return out, nil
}
```
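The selection logic at the end of NewForSchemas() can be isolated as: build each CRD name as `<plural>.<group>`, then handle built-in types immediately and custom types only if their CRD is already installed, leaving the rest for the crdWatcher callback. The `schema` struct and `selectSchemas` function below are hypothetical simplifications of that decision, not Istio's `resource.Schema` API.

```go
package main

import (
	"fmt"
	"sort"
)

// schema is a hypothetical, trimmed-down resource schema.
type schema struct {
	Plural, Group string
	Builtin       bool
}

// crdName follows the CRD naming rule quoted in NewForSchemas: "<plural>.<group>".
func crdName(s schema) string { return fmt.Sprintf("%s.%s", s.Plural, s.Group) }

// selectSchemas splits schemas into those handled right away (built-in types,
// or custom types whose CRD is already installed) and those skipped until the
// CRD appears.
func selectSchemas(schemas []schema, known map[string]bool) (watch, skipped []string) {
	for _, s := range schemas {
		name := crdName(s)
		if s.Builtin || known[name] {
			watch = append(watch, name)
		} else {
			skipped = append(skipped, name)
		}
	}
	sort.Strings(watch)
	sort.Strings(skipped)
	return watch, skipped
}

func main() {
	schemas := []schema{
		{Plural: "virtualservices", Group: "networking.istio.io"},
		{Plural: "gateways", Group: "gateway.networking.k8s.io"},
		{Plural: "deployments", Group: "apps", Builtin: true},
	}
	known := map[string]bool{"virtualservices.networking.istio.io": true}
	watch, skipped := selectSchemas(schemas, known)
	fmt.Println("watch:", watch)
	fmt.Println("skipped (wait for crdWatcher):", skipped)
}
```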

The NewForSchemas() method instantiates the CRD Client, which is defined as follows:

```go
// pilot/pkg/config/kube/crdclient/client.go

type Client struct {
	// schemas defines the set of schemas used by this client.
	// Note: this must be a subset of the schemas defined in the codegen
	schemas collection.Schemas

	// domainSuffix for the config metadata
	domainSuffix string

	// revision for this control plane instance. We will only read configs that match this revision.
	revision string

	// kinds keeps track of all cache handlers for known types
	// (records the informer-backed controller for each resource type)
	kinds map[config.GroupVersionKind]*cacheHandler
	kindsMu sync.RWMutex
	// Event processing queue
	queue queue.Instance

	// handlers defines a list of event handlers per-type
	// (resource types and their event-handling callback functions)
	handlers map[config.GroupVersionKind][]model.EventHandler

	// CRD-related schemas, keyed by CRD name
	schemasByCRDName map[string]resource.Schema
	// Kubernetes client, including istioClient for operating on Istio API objects
	// and the Istio informers that watch change events on Istio API objects
	client kube.Client
	// Watches for CRD creation
	crdWatcher *crdwatcher.Controller
	logger *log.Scope

	// namespacesFilter is only used to initiate filtered informer.
	namespacesFilter func(obj interface{}) bool

	// crdWatches notifies consumers when a CRD is present
	crdWatches map[config.GroupVersionKind]*waiter
	stop <-chan struct{}
}
```

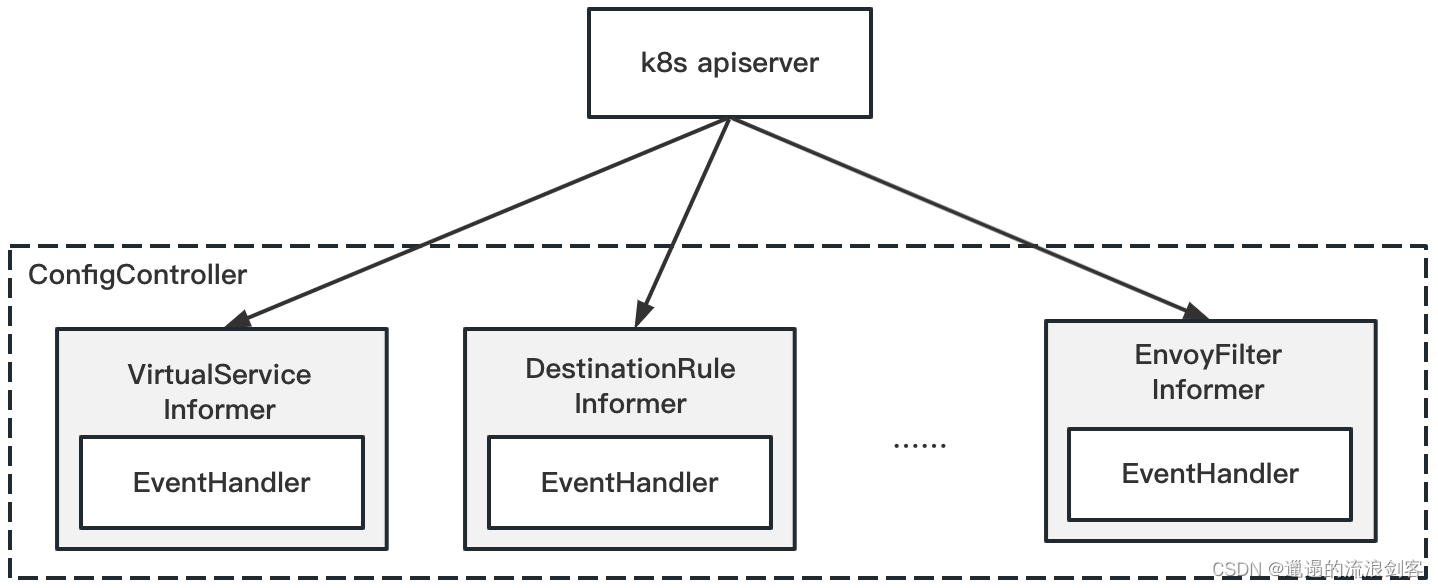

3) Working mechanism of ConfigController

The Kubernetes ConfigController creates an Informer for each Config resource type to watch all Config resources, and registers an EventHandler on each Informer.

In NewForSchemas(), the handleCRDAdd() method is called if the CRD corresponding to an Istio Config resource type already exists, or later, once crdWatcher observes that the CRD has been created:

```go
// pilot/pkg/config/kube/crdclient/client.go

func handleCRDAdd(cl *Client, name string) {
	cl.logger.Debugf("adding CRD %q", name)
	s, f := cl.schemasByCRDName[name]
	if !f {
		cl.logger.Debugf("added resource that we are not watching: %v", name)
		return
	}
	resourceGVK := s.GroupVersionKind()
	gvr := s.GroupVersionResource()

	cl.kindsMu.Lock()
	defer cl.kindsMu.Unlock()
	if _, f := cl.kinds[resourceGVK]; f {
		cl.logger.Debugf("added resource that already exists: %v", resourceGVK)
		return
	}
	var i informers.GenericInformer
	var ifactory starter
	var err error
	// Add the informer to a different sharedInformerFactory depending on the API group
	switch s.Group() {
	case gvk.KubernetesGateway.Group:
		ifactory = cl.client.GatewayAPIInformer()
		i, err = cl.client.GatewayAPIInformer().ForResource(gvr)
	case gvk.Pod.Group, gvk.Deployment.Group, gvk.MutatingWebhookConfiguration.Group:
		ifactory = cl.client.KubeInformer()
		i, err = cl.client.KubeInformer().ForResource(gvr)
	case gvk.CustomResourceDefinition.Group:
		ifactory = cl.client.ExtInformer()
		i, err = cl.client.ExtInformer().ForResource(gvr)
	default:
		ifactory = cl.client.IstioInformer()
		i, err = cl.client.IstioInformer().ForResource(gvr)
	}

	if err != nil {
		// Shouldn't happen
		cl.logger.Errorf("failed to create informer for %v: %v", resourceGVK, err)
		return
	}
	_ = i.Informer().SetTransform(kube.StripUnusedFields)

	// Call createCacheHandler to register event callbacks on the informer
	cl.kinds[resourceGVK] = createCacheHandler(cl, s, i)
	if w, f := cl.crdWatches[resourceGVK]; f {
		cl.logger.Infof("notifying watchers %v was created", resourceGVK)
		w.once.Do(func() {
			close(w.stop)
		})
	}
	// Start informer. In startup case, we will not start here as
	// we will start all factories once we are ready to initialize.
	// For dynamically added CRDs, we need to start immediately though
	if cl.stop != nil {
		// Start the informer
		ifactory.Start(cl.stop)
	}
}
```

The event callback functions of each Informer are registered through the createCacheHandler() method, whose code is as follows:

```go
// pilot/pkg/config/kube/crdclient/cache_handler.go

func createCacheHandler(cl *Client, schema resource.Schema, i informers.GenericInformer) *cacheHandler {
	scope.Debugf("registered CRD %v", schema.GroupVersionKind())
	h := &cacheHandler{
		client: cl,
		schema: schema,
		// Create the informer; the filter supports namespace-level isolation
		informer: kclient.NewUntyped(cl.client, i.Informer(), kclient.Filter{ObjectFilter: cl.namespacesFilter}),
	}

	kind := schema.Kind()
	// Add event callback functions
	h.informer.AddEventHandler(cache.ResourceEventHandlerFuncs{
		AddFunc: func(obj any) {
			incrementEvent(kind, "add")
			// Create a task object and push it onto the task queue
			cl.queue.Push(func() error {
				return h.onEvent(nil, obj, model.EventAdd)
			})
		},
		UpdateFunc: func(old, cur any) {
			incrementEvent(kind, "update")
			cl.queue.Push(func() error {
				return h.onEvent(old, cur, model.EventUpdate)
			})
		},
		DeleteFunc: func(obj any) {
			incrementEvent(kind, "delete")
			cl.queue.Push(func() error {
				return h.onEvent(nil, obj, model.EventDelete)
			})
		},
	})
	return h
}
```
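The informer callbacks above do no work themselves; they only push `func() error` closures onto `cl.queue`. A minimal FIFO queue with one worker goroutine is sketched below to show that hand-off. `taskQueue`, `Push`, `Close`, and `Run` are hypothetical names: this is not Istio's `queue.Instance`, it has no retry logic, and it makes no claim about what the `1 * time.Second` argument to `queue.NewQueue` does.

```go
package main

import (
	"fmt"
	"sync"
)

// task matches the shape pushed by the informer callbacks: func() error.
type task func() error

// taskQueue is a hypothetical FIFO queue drained by a single worker goroutine.
type taskQueue struct {
	mu     sync.Mutex
	cond   *sync.Cond
	tasks  []task
	closed bool
}

func newTaskQueue() *taskQueue {
	q := &taskQueue{}
	q.cond = sync.NewCond(&q.mu)
	return q
}

// Push appends a task and wakes the worker.
func (q *taskQueue) Push(t task) {
	q.mu.Lock()
	q.tasks = append(q.tasks, t)
	q.mu.Unlock()
	q.cond.Signal()
}

// Close lets Run return once the remaining tasks are processed.
func (q *taskQueue) Close() {
	q.mu.Lock()
	q.closed = true
	q.mu.Unlock()
	q.cond.Broadcast()
}

// Run blocks, executing tasks one at a time in FIFO order.
func (q *taskQueue) Run() {
	for {
		q.mu.Lock()
		for len(q.tasks) == 0 && !q.closed {
			q.cond.Wait()
		}
		if len(q.tasks) == 0 && q.closed {
			q.mu.Unlock()
			return
		}
		t := q.tasks[0]
		q.tasks = q.tasks[1:]
		q.mu.Unlock()
		// Run the task outside the lock so Push is never blocked by handlers.
		if err := t(); err != nil {
			fmt.Println("task failed:", err)
		}
	}
}

func main() {
	q := newTaskQueue()
	done := make(chan struct{})
	go func() { q.Run(); close(done) }()
	for _, ev := range []string{"add", "update", "delete"} {
		ev := ev
		q.Push(func() error { fmt.Println("handling", ev); return nil })
	}
	q.Close()
	<-done
}
```

Because a single worker drains the queue, events for all kinds are handled one at a time in arrival order, which keeps the handlers free of concurrent access to shared state.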

When Config resources are created, updated, or deleted in Kubernetes, the EventHandler creates task objects and pushes them onto the task queue, where they are then processed by the task-processing goroutine. The onEvent() method, which handles resource changes, is as follows:

```go
// pilot/pkg/config/kube/crdclient/cache_handler.go

func (h *cacheHandler) onEvent(old any, curr any, event model.Event) error {
	currItem := controllers.ExtractObject(curr)
	if currItem == nil {
		return nil
	}

	// Convert the object
	currConfig := TranslateObject(currItem, h.schema.GroupVersionKind(), h.client.domainSuffix)

	var oldConfig config.Config
	if old != nil {
		oldItem, ok := old.(runtime.Object)
		if !ok {
			log.Warnf("Old Object can not be converted to runtime Object %v, is type %T", old, old)
			return nil
		}
		oldConfig = TranslateObject(oldItem, h.schema.GroupVersionKind(), h.client.domainSuffix)
	}

	if h.client.objectInRevision(&currConfig) {
		// Execute the event-handling callback functions
		h.callHandlers(oldConfig, currConfig, event)
		return nil
	}

	// Check if the object was in our revision, but has been moved to a different revision. If so,
	// it has been effectively deleted from our revision, so process it as a delete event.
	if event == model.EventUpdate && old != nil && h.client.objectInRevision(&oldConfig) {
		log.Debugf("Object %s/%s has been moved to a different revision, deleting",
			currConfig.Namespace, currConfig.Name)
		// Execute the event-handling callback functions
		h.callHandlers(oldConfig, currConfig, model.EventDelete)
		return nil
	}

	log.Debugf("Skipping event %s for object %s/%s from different revision",
		event, currConfig.Namespace, currConfig.Name)
	return nil
}

func (h *cacheHandler) callHandlers(old config.Config, curr config.Config, event model.Event) {
	// TODO we may consider passing a pointer to handlers instead of the value. While spec is a pointer, the meta will be copied
	// Execute the event handlers registered for this resource type
	for _, f := range h.client.handlers[h.schema.GroupVersionKind()] {
		f(old, curr, event)
	}
}
```

In onEvent(), the object is first converted by TranslateObject(), and then the event-handling callback functions registered for that resource type are executed.
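Besides the conversion, onEvent() filters events by control-plane revision. That branch logic can be reduced to a pure function, sketched below. `dispatch` and its boolean parameters are hypothetical: they stand in for the results of `objectInRevision` on the old and new objects, and the function returns which event (if any) the handlers would see.

```go
package main

import "fmt"

// Event mirrors the event types passed to the handlers.
type Event string

const (
	EventAdd    Event = "add"
	EventUpdate Event = "update"
	EventDelete Event = "delete"
)

// dispatch reproduces the decision in onEvent: handle the event if the new
// object is in our revision; if an update moved it out of our revision, treat
// it as a delete; otherwise skip it. ok reports whether handlers run at all.
func dispatch(ev Event, hasOld, oldInRev, currInRev bool) (Event, bool) {
	if currInRev {
		return ev, true
	}
	if ev == EventUpdate && hasOld && oldInRev {
		return EventDelete, true
	}
	return "", false
}

func main() {
	cases := []struct {
		ev                          Event
		hasOld, oldInRev, currInRev bool
	}{
		{EventAdd, false, false, true},
		{EventUpdate, true, true, false},
		{EventAdd, false, false, false},
	}
	for _, c := range cases {
		got, ok := dispatch(c.ev, c.hasOld, c.oldInRev, c.currInRev)
		fmt.Printf("%-6s -> handled=%v as %q\n", c.ev, ok, got)
	}
}
```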

h.client.handlers is the collection of event-handling functions for each resource type. The handlers are registered through the ConfigController's RegisterEventHandler() method; the registration code is as follows:

```go
// pilot/pkg/bootstrap/server.go

func (s *Server) initRegistryEventHandlers() {
	...
	if s.configController != nil {
		configHandler := func(prev config.Config, curr config.Config, event model.Event) {
			defer func() {
				// Status reporting
				if event != model.EventDelete {
					s.statusReporter.AddInProgressResource(curr)
				} else {
					s.statusReporter.DeleteInProgressResource(curr)
				}
			}()
			log.Debugf("Handle event %s for configuration %s", event, curr.Key())
			// For update events, trigger push only if spec has changed.
			if event == model.EventUpdate && !needsPush(prev, curr) {
				log.Debugf("skipping push for %s as spec has not changed", prev.Key())
				return
			}
			// Trigger a full xDS push
			pushReq := &model.PushRequest{
				Full: true,
				ConfigsUpdated: sets.New(model.ConfigKey{Kind: kind.MustFromGVK(curr.GroupVersionKind), Name: curr.Name, Namespace: curr.Namespace}),
				Reason: []model.TriggerReason{model.ConfigUpdate},
			}
			s.XDSServer.ConfigUpdate(pushReq)
		}
		schemas := collections.Pilot.All()
		if features.EnableGatewayAPI {
			schemas = collections.PilotGatewayAPI().All()
		}
		for _, schema := range schemas {
			// This resource type was handled in external/servicediscovery.go, no need to rehandle here.
			// The following 3 types are handled by the serviceEntry controller, so no handler is registered for them here
			if schema.GroupVersionKind() == gvk.ServiceEntry {
				continue
			}
			if schema.GroupVersionKind() == gvk.WorkloadEntry {
				continue
			}
			if schema.GroupVersionKind() == gvk.WorkloadGroup {
				continue
			}

			// Register the event handler for all other API object types
			s.configController.RegisterEventHandler(schema.GroupVersionKind(), configHandler)
		}
		...
	}
```
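The registration above follows two rules: one shared handler is registered for every kind except ServiceEntry, WorkloadEntry, and WorkloadGroup, and an update only produces a full push when the spec changed. The Go sketch below reproduces both rules with hypothetical stand-ins (`Config`, `PushRequest`, `register`, `handler`). The plain `prev.Spec == curr.Spec` comparison is an assumption that stands in for `needsPush`, whose exact checks are not shown in this article.

```go
package main

import "fmt"

// Hypothetical, simplified stand-ins for config.Config and model.PushRequest.
type Config struct {
	Kind, Namespace, Name string
	Spec                  string
}

type PushRequest struct {
	Full           bool
	ConfigsUpdated []string
}

// skipped kinds are handled by the ServiceEntry controller instead.
var skipped = map[string]bool{"ServiceEntry": true, "WorkloadEntry": true, "WorkloadGroup": true}

type handler func(prev, curr Config, update bool)

// register wires one shared handler to every kind except the skipped ones.
func register(kinds []string, h handler) map[string][]handler {
	out := map[string][]handler{}
	for _, k := range kinds {
		if skipped[k] {
			continue
		}
		out[k] = append(out[k], h)
	}
	return out
}

func main() {
	var pushes []PushRequest
	configHandler := func(prev, curr Config, update bool) {
		// For updates, push only when the spec changed (stand-in for needsPush).
		if update && prev.Spec == curr.Spec {
			return
		}
		pushes = append(pushes, PushRequest{
			Full:           true,
			ConfigsUpdated: []string{curr.Kind + "/" + curr.Namespace + "/" + curr.Name},
		})
	}
	handlers := register([]string{"VirtualService", "ServiceEntry", "DestinationRule"}, configHandler)

	vs := Config{Kind: "VirtualService", Namespace: "default", Name: "reviews", Spec: "v1"}
	for _, h := range handlers["VirtualService"] {
		h(vs, vs, true) // metadata/status-only update: spec unchanged, no push
		changed := vs
		changed.Spec = "v2"
		h(vs, changed, true) // spec changed: full push
	}
	fmt.Println("kinds with handlers:", len(handlers), "pushes:", len(pushes))
}
```

Skipping unchanged specs keeps status-only writes (such as those from the status reporter) from triggering full xDS pushes to every proxy.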

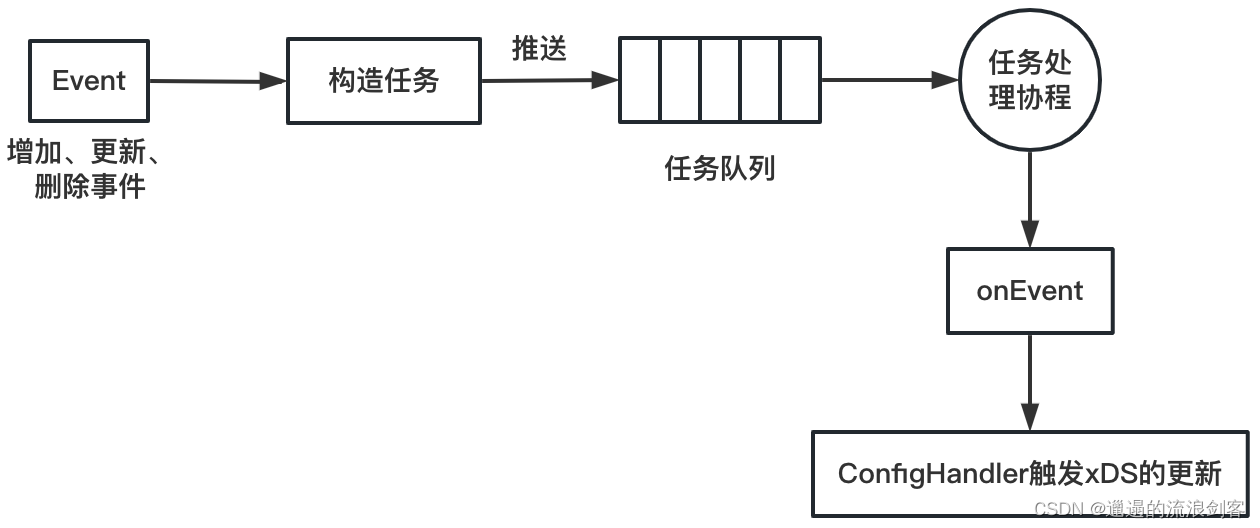

The complete Config event processing flow (see the figure above) is as follows:

- The EventHandler constructs a task, which is essentially a closure wrapping the onEvent function

- The EventHandler pushes the task onto the task queue

- The task-processing goroutine reads from the task queue in a blocking manner, executes each task (handling the event through onEvent), and triggers an xDS update through the config handler
