Have you ever wondered how a Site Reliability Engineering (SRE) team can successfully manage complex applications? In the Kubernetes ecosystem, the answer is very often Kubernetes Operators! In this article, we'll look at what they are and how they work.

The Kubernetes Operator concept was introduced by CoreOS engineers in 2016 as a Kubernetes-native way to build and run applications whose operation requires domain-specific knowledge. Because it works directly with the Kubernetes API, it provides a consistent way to automate application operations without human intervention. In other words, Operators are a way to package, run, and manage Kubernetes applications.

The Kubernetes Operator pattern follows one of the core principles of Kubernetes: control theory. In robotics and automation, control theory describes mechanisms that continuously regulate dynamic systems. In a cluster, this means matching workload demands to the available resources as quickly and accurately as possible. The goal is a control model with enough logic to keep the application or system stable. In the Kubernetes world, this job is handled by controllers.

A controller is a special piece of software that watches for changes in the cluster and takes corrective action in a loop. Kubernetes' original built-in controllers run inside kube-controller-manager, which can be considered the ancestor of all the operators built later.

What is a controller loop?

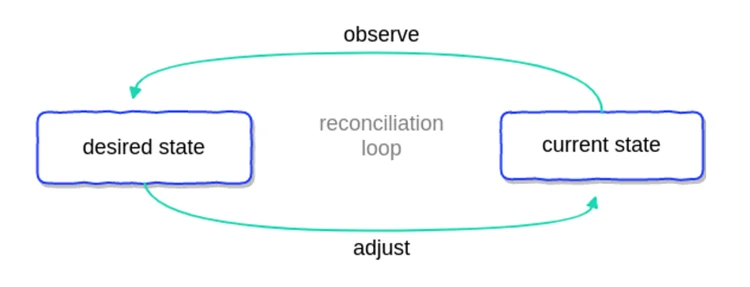

In short, controller loops are the basis of everything a controller does. Imagine a non-terminating process (called a reconciliation loop in Kubernetes) that runs over and over again, as shown in the figure above.

This process observes at least one Kubernetes object that contains information about the desired state. Objects such as…

- Deployments

- Services

- Secrets

- Ingress

- ConfigMaps

…each defined in a manifest written in JSON or YAML. The controller then makes continuous adjustments through the Kubernetes API, based on its built-in logic, until the current state matches the desired state.

This is how Kubernetes copes with the dynamic, constantly changing nature of cloud-native systems. Examples of adjustments made to reach the desired state include:

- Detecting that a node has failed and that a replacement node is needed.

- Checking whether a Pod needs to be replicated.

- Creating a new load balancer when necessary.
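To make the loop concrete, here is a minimal Python sketch of reconciliation: observe the current state, compare it with the desired state, and act. The `Cluster` class and the `reconcile` and `run_until_converged` functions are hypothetical stand-ins; a real controller reads and writes state through the Kubernetes API (for example with client-go in Go) rather than an in-memory list.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical in-memory stand-in for the state a controller reads via the API."""
    pods: list = field(default_factory=list)
    next_id: int = 0

    def create_pod(self, app: str) -> None:
        self.pods.append(f"{app}-{self.next_id}")
        self.next_id += 1

    def delete_pod(self) -> None:
        self.pods.pop()


def reconcile(desired_replicas: int, app: str, cluster: Cluster) -> str:
    """One pass of the loop: observe, diff against the desired state, act."""
    actual = len(cluster.pods)        # observe the current state
    if actual < desired_replicas:     # too few Pods: create one
        cluster.create_pod(app)
        return "created"
    if actual > desired_replicas:     # too many Pods: remove one
        cluster.delete_pod()
        return "deleted"
    return "in sync"                  # current state equals desired state


def run_until_converged(desired_replicas: int, app: str, cluster: Cluster,
                        max_iterations: int = 100) -> list:
    """Repeat reconcile() until it reports "in sync" (a real controller never stops)."""
    actions = []
    for _ in range(max_iterations):
        result = reconcile(desired_replicas, app, cluster)
        actions.append(result)
        if result == "in sync":
            break
    return actions
```

Starting from an empty `Cluster()`, `run_until_converged(3, "web", cluster)` creates three Pods and then reports "in sync". Removing one entry from `cluster.pods` mimics a failed node: the next pass notices the gap and creates a replacement. The loop stops only so that the example terminates; a real controller keeps watching and reconciling indefinitely.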

How do Kubernetes Operators work?

An Operator is an application-specific controller. It extends the Kubernetes API to create, configure, and manage complex applications on behalf of humans (operations engineers or site reliability engineers). Let's see what the Kubernetes documentation says.

"Operators are software extensions to Kubernetes that make use of custom resources to manage applications and their components. Operators follow Kubernetes principles, notably the control loop."

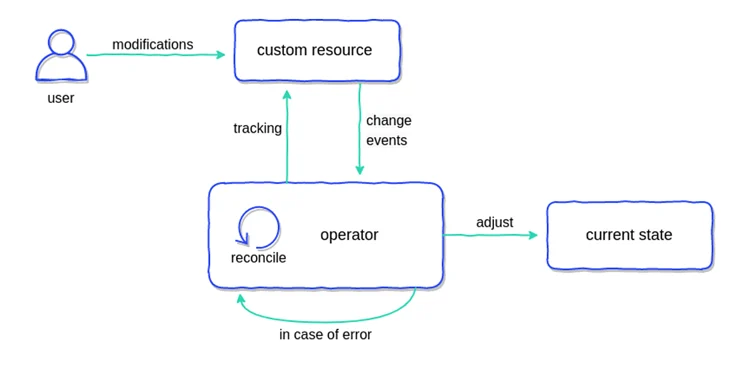

So far, you have seen that operators use controllers to observe Kubernetes objects. These controllers differ slightly in that they track custom objects, usually called custom resources (CRs). A CR is an extension of the Kubernetes API that provides a place to store and retrieve structured data: the desired state of an application. The overall principle is shown in the figure above.

Operators continuously track cluster events related to specific types of custom resources. The types of events that can be tracked on these custom resources are:

- Add

- Update

- Delete

When the operator receives such an event, it acts to bring the Kubernetes cluster or an external system to the desired state as part of the reconciliation loop in its custom controller.
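A common way to handle these events is to record the latest object state, enqueue only the object's key, and let the reconciliation step read the current state rather than the event itself. The Python sketch below illustrates that idea; `MiniOperator`, its in-memory `store`, and its deduplicating `queue` are hypothetical simplifications of the informer cache and work queue that real controller libraries provide. The event names match those reported by the Kubernetes watch API (`ADDED`, `MODIFIED`, `DELETED`).

```python
from collections import OrderedDict


class MiniOperator:
    """Hypothetical, simplified event handling for a custom resource type."""

    def __init__(self) -> None:
        self.store = {}             # latest known state of each CR, keyed by namespace/name
        self.queue = OrderedDict()  # FIFO of keys; re-adding an existing key is a no-op
        self.log = []

    def on_event(self, event_type: str, obj: dict) -> None:
        key = f"{obj['metadata']['namespace']}/{obj['metadata']['name']}"
        if event_type in ("ADDED", "MODIFIED"):
            self.store[key] = obj
        elif event_type == "DELETED":
            self.store.pop(key, None)
        else:
            return  # other watch event types are ignored in this sketch
        # Enqueue only the key, so several events for one object collapse into one reconcile.
        self.queue[key] = None

    def process_queue(self) -> None:
        while self.queue:
            key, _ = self.queue.popitem(last=False)
            self.reconcile(key)

    def reconcile(self, key: str) -> None:
        obj = self.store.get(key)  # always act on the latest state, not on the event payload
        if obj is None:
            self.log.append(f"cleanup {key}")  # CR is gone: tear down what it owned
        else:
            self.log.append(f"sync {key} plan={obj['spec']['plan']}")
```

If an `ADDED` event and a `MODIFIED` event for the same object arrive before the queue is processed, the operator reconciles once, using the newest spec. This design keeps reconciliation idempotent: it does not matter how many events triggered it.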

How to add custom resources

Custom resources extend the functionality of Kubernetes by adding new types of objects that are helpful to your application. Kubernetes provides two ways to add custom resources to the cluster:

- Through API aggregation, an advanced approach that requires you to build your own API server but gives you more control

- With Custom Resource Definitions (CRDs), a simpler approach that requires no programming and extends the existing Kubernetes API server.

Both options serve different users, who can trade flexibility against ease of use. The Kubernetes community has published a comparison to help you decide which approach is right for you, but CRDs are the most popular choice.

Custom resource definition

Custom resource definitions have been around for a while; the first stable (GA) version of the API was released with Kubernetes 1.16.0. The following listing provides an example:

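```yaml
apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # must be <plural>.<group>
  name: applications.stable.example.com
spec:
  group: stable.example.com
  scope: Namespaced
  names:
    plural: applications
    singular: application
    kind: Application
    shortNames:
      - app
  versions:
    - name: v1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                image:
                  type: string
                domain:
                  type: string
                plan:
                  type: string
```

This CRD allows you to create a CR of kind "Application" (we'll use it in the next section). The first two lines declare that the object is a CustomResourceDefinition served by the apiextensions.k8s.io/v1 API; the older apiextensions.k8s.io/v1beta1 version was removed in Kubernetes 1.22. In v1, every entry under versions must also carry an OpenAPI v3 schema describing the resource's fields.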

The metadata holds the name of the CRD, which must take the form `<plural>.<group>`. The most important part, however, is the spec field: it specifies the API group, the version(s) to serve, and the scope, either Namespaced or Cluster.

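Finally, the names field defines the plural, singular, and kind forms of the resource name, plus optional short names. Once the CRD is installed, the short name lets you run `kubectl get app` instead of typing the full resource name, and `kubectl get crds` lists all the CRDs in the cluster.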
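These naming rules have practical consequences: the API server rejects a CRD whose name is not `<plural>.<group>`, and once the CRD is accepted, the new resource is served under a predictable REST path. The Python sketch below checks a few of those rules and builds the path. `check_crd`, `crd_name`, and `resource_path` are hypothetical helpers, not part of any Kubernetes library, and the API server's real validation is far more thorough.

```python
from typing import Optional


def crd_name(group: str, plural: str) -> str:
    """A CRD's metadata.name must be <plural>.<group>."""
    return f"{plural}.{group}"


def resource_path(group: str, version: str, plural: str,
                  namespace: Optional[str] = None) -> str:
    """REST path the API server exposes for a custom resource collection."""
    if namespace is None:
        # Cluster-scoped resources, or a namespaced resource across all namespaces.
        return f"/apis/{group}/{version}/{plural}"
    return f"/apis/{group}/{version}/namespaces/{namespace}/{plural}"


def check_crd(crd: dict) -> list:
    """Return problems found in a (simplified) apiextensions.k8s.io/v1 CRD manifest."""
    problems = []
    spec = crd["spec"]
    expected = crd_name(spec["group"], spec["names"]["plural"])
    if crd["metadata"]["name"] != expected:
        problems.append(f"metadata.name should be {expected}")
    if spec["scope"] not in ("Namespaced", "Cluster"):
        problems.append("scope must be Namespaced or Cluster")
    storage_versions = [v for v in spec.get("versions", []) if v.get("storage")]
    if len(storage_versions) != 1:
        problems.append("exactly one version must set storage: true")
    return problems
```

For the Application CRD, `resource_path("stable.example.com", "v1", "applications", "default")` yields `/apis/stable.example.com/v1/namespaces/default/applications`, which is the endpoint `kubectl get app -n default` ultimately talks to.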

Custom resources

The CRD above allows you to create custom resources such as the following manifest:

```yaml
apiVersion: stable.example.com/v1
kind: Application
metadata:
  name: application-config
spec:
  image: container-registry-image:v1.0.0
  domain: teamx.yoursaas.io
  plan: premium
```

As you can see, the custom resource can hold all the information needed to run the application for a specific case. Our operator, or more precisely the operator's custom controller, watches this custom resource. Based on its built-in logic, the controller takes the actions needed to reach the desired state: it can create a Deployment, a Service, and the ConfigMap the application needs, run the application, and expose it through an Ingress on a specific domain. This is just one example; an operator can do whatever it is designed to do.
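The heart of such a controller is a function that turns the Application's spec into the objects it should own. The Python sketch below builds that desired state as plain dictionaries for the manifest above. The `PLAN_REPLICAS` mapping, the container port 8080, and the ConfigMap keys are hypothetical choices for illustration, since the article does not say what `plan` controls; the object kinds and field names follow the standard Kubernetes APIs.

```python
# Hypothetical mapping from the "plan" field to a replica count (illustrative only).
PLAN_REPLICAS = {"basic": 1, "premium": 3}
CONTAINER_PORT = 8080  # assumed port the application container listens on


def desired_children(app: dict) -> list:
    """Build the objects an Application CR should own (desired state only)."""
    name = app["metadata"]["name"]
    spec = app["spec"]
    labels = {"app": name}

    config_map = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": f"{name}-env"},
        "data": {"PLAN": spec["plan"], "DOMAIN": spec["domain"]},  # hypothetical keys
    }
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": PLAN_REPLICAS.get(spec["plan"], 1),  # unknown plans get 1 replica
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": "app",
                    "image": spec["image"],
                    "ports": [{"containerPort": CONTAINER_PORT}],
                    "envFrom": [{"configMapRef": {"name": f"{name}-env"}}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": labels,
                 "ports": [{"port": 80, "targetPort": CONTAINER_PORT}]},
    }
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {"rules": [{
            "host": spec["domain"],  # expose the app on the requested domain
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": name, "port": {"number": 80}}},
            }]},
        }]},
    }
    return [config_map, deployment, service, ingress]
```

A real operator would then create or update each of these objects through the API and typically set ownerReferences pointing at the Application, so that Kubernetes garbage-collects them when the custom resource is deleted.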

Operators can also be used to configure resources outside of Kubernetes. You can control the configuration of external routers or create databases in the cloud without leaving the Kubernetes platform.

Kubernetes Operators: Case Study

To fully understand Kubernetes Operators, let's take a look at the Prometheus Operator, one of the earliest and most popular Operators. It simplifies the deployment and configuration of Prometheus, Alertmanager and related monitoring components.

The core functionality of the Prometheus Operator is to watch the Kubernetes API server for changes to specific objects and ensure that the current Prometheus deployment matches them. The Operator acts on the following custom resource definitions (CRDs):

- Prometheus, which defines the desired Prometheus deployment.

- Alertmanager, which defines the desired Alertmanager deployment.

- ServiceMonitor, which declaratively specifies how groups of Kubernetes Services should be monitored. The Operator automatically generates Prometheus scrape configuration based on the current state of the objects in the API server.

- PodMonitor, which declaratively specifies how a set of Pods should be monitored. The Operator automatically generates Prometheus scrape configuration based on the current state of the objects in the API server.

- PrometheusRule, which defines a set of desired Prometheus alerting and/or recording rules. The Operator generates a rules file that Prometheus instances can use.

The Prometheus Operator automatically detects changes to any of the above objects from the Kubernetes API server and ensures that matching deployments and configurations remain in sync.
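To see why this stays in sync automatically, consider how a ServiceMonitor selects Services: its `selector.matchLabels` picks Services by label, and each entry in `endpoints` names a Service port to scrape. The Python sketch below derives a flat target list from those two inputs. It is a simplified illustration, not the Operator's actual output: the real Operator renders full Prometheus scrape configurations (service discovery plus relabeling rules) and honors more fields, such as `namespaceSelector`, that this sketch ignores. `scrape_targets` and its `job` naming scheme are hypothetical.

```python
def matches(selector: dict, labels: dict) -> bool:
    """matchLabels semantics: every key/value pair in the selector must be present."""
    return all(labels.get(k) == v for k, v in selector.get("matchLabels", {}).items())


def scrape_targets(service_monitor: dict, services: list) -> list:
    """Derive a (hypothetical) flat target list from a ServiceMonitor-like object."""
    spec = service_monitor["spec"]
    targets = []
    for svc in services:
        meta = svc["metadata"]
        if not matches(spec["selector"], meta.get("labels", {})):
            continue  # Service not selected by this ServiceMonitor
        for endpoint in spec["endpoints"]:
            targets.append({
                "job": f"{meta['namespace']}/{meta['name']}",  # hypothetical naming scheme
                "port": endpoint["port"],                      # name of a Service port
                "path": endpoint.get("path", "/metrics"),      # Prometheus' default metrics path
            })
    return targets
```

Because the result is a pure function of the ServiceMonitor and the current Services, recomputing it whenever either changes, for example when a new Service gains a matching label, is enough to keep the configuration in sync. That is the same reconciliation idea described throughout this article.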