C++11 semi-synchronous and semi-asynchronous thread pool

Introduction

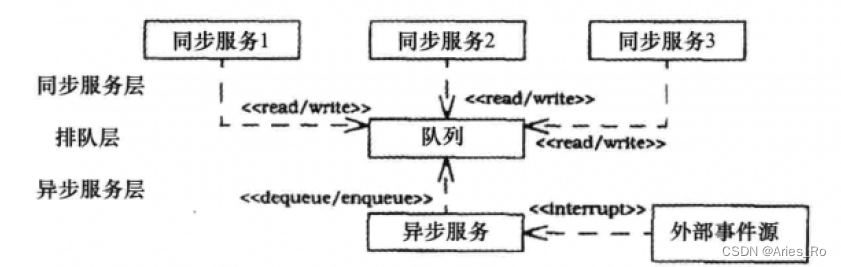

The semi-synchronous/semi-asynchronous (half-sync/half-async) thread pool is one of the more commonly used thread pool designs and is relatively simple to implement.

The synchronization layer consists of a synchronization service layer and a queuing layer: incoming tasks are placed in a queue, where they wait to be processed.

The asynchronous layer consists of multiple worker threads, which take tasks out of the synchronization layer's queue and process them concurrently.

Sync queue

The synchronization queue belongs to the synchronization layer. Its main job is to keep the data shared through the queue thread-safe. It also provides one interface for adding tasks and one for taking tasks.

This is implemented using C++11 locks, condition variables, rvalue references, std::move and std::forward.

The synchronization queue mainly includes three functions, Take, Add and Stop.

Take function

The implementation here overloads two Take functions to support obtaining multiple tasks at a time, or obtaining one task at a time.

// Take all queued tasks at once into a list, to reduce the time spent blocked on the mutex

void Take(std::list<T>& list)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notEmpty.wait(locker, [this] {return m_needStop || NotEmpty(); });

if (m_needStop)

{

return;

}

list = std::move(m_queue);

m_notFull.notify_one();

}

// Take a single task

void Take(T& t)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notEmpty.wait(locker, [this] {return m_needStop || NotEmpty(); });

if (m_needStop)

{

return;

}

t = m_queue.front();

m_queue.pop_front();

m_notFull.notify_one();

}
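The batch overload is cheap because it hands over the whole list in one step while the mutex is held. The sketch below isolates that step, without the waiting logic, under some assumptions: `DrainAll` is a hypothetical helper that is not part of SyncQueue, and it uses `swap` rather than move assignment. After `list = std::move(m_queue)` the standard only promises that `m_queue` is left in a valid but unspecified state (empty in practice), whereas `swap` with an empty list makes the emptiness explicit.

```cpp
#include <list>
#include <mutex>

// Hypothetical helper mirroring the batch Take, minus the condition variable.
std::list<int> DrainAll(std::list<int>& queue, std::mutex& mutex)
{
    std::list<int> batch;                        // starts empty
    std::lock_guard<std::mutex> locker(mutex);
    batch.swap(queue);                           // O(1) exchange; queue now holds the empty list
    return batch;
}
```

The lock is held only for the pointer exchange, so producers are blocked for a short, constant time no matter how many tasks are pending.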

Both overloads follow the same steps. First, a std::unique_lock acquires the mutex. The thread then waits on the condition variable m_notEmpty with a predicate made up of two conditions: the stop flag and the not-empty check. If neither condition holds, the condition variable releases the mutex and puts the thread into the waiting state until another thread wakes it with notify_one or notify_all. If either condition holds, execution continues: unless the queue has been stopped, the task (or tasks) are removed from the queue, and a thread that is waiting to add a task is woken up. When a waiting thread is woken by notify_one or notify_all, the condition variable first reacquires the mutex and then re-checks the predicate. If the predicate is satisfied, execution continues; otherwise the mutex is released again and the thread goes back to waiting.
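The wake-up-and-re-check behaviour can be spelled out as an explicit loop, which is what the predicate overload of `wait` does internally. The following is a minimal sketch, not the queue itself: `Mailbox`, `Send`, `Receive` and `RoundTrip` are hypothetical names introduced only for this illustration.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>

// Hypothetical one-slot mailbox, used only to illustrate the wait loop.
struct Mailbox
{
    std::mutex mutex;
    std::condition_variable ready;
    bool hasValue = false;
    int value = 0;
};

// Same effect as: box.ready.wait(locker, [&box] { return box.hasValue; });
int Receive(Mailbox& box)
{
    std::unique_lock<std::mutex> locker(box.mutex);
    while (!box.hasValue)           // re-check after every wakeup, spurious or not
    {
        box.ready.wait(locker);     // unlocks, sleeps, and re-locks before returning
    }
    assert(locker.owns_lock());     // the mutex is held again at this point
    box.hasValue = false;
    return box.value;
}

void Send(Mailbox& box, int v)
{
    {
        std::lock_guard<std::mutex> locker(box.mutex);
        box.value = v;
        box.hasValue = true;
    }
    box.ready.notify_one();
}

// Starts a sender thread and blocks until its value arrives.
int RoundTrip(int v)
{
    Mailbox box;
    std::thread sender([&box, v] { Send(box, v); });
    int received = Receive(box);
    sender.join();
    return received;
}
```

Because the condition is checked before the first sleep, `Receive` returns correctly even if `Send` has already run by the time it acquires the mutex.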

Add function

Add works much like Take. It first acquires the mutex and then checks whether the queue has been stopped or has room. If neither is the case, it releases the mutex and waits. Otherwise, unless the queue has been stopped, it inserts the new task into the queue and wakes up a thread that is waiting to take a task.

template<typename F>

void Add(F &&x)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notFull.wait(locker, [this] {return m_needStop || NotFull(); });

if (m_needStop)

return;

m_queue.emplace_back(std::forward<F>(x));

m_notEmpty.notify_one();

}
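Add takes a forwarding reference so that lvalues are copied into the queue and rvalues are moved into it. A small sketch of that behaviour, with the locking left out, is shown here. `Tracked`, `AddTo` and `ForwardingPreservesValueCategory` are hypothetical names for illustration only.

```cpp
#include <list>
#include <utility>

// Hypothetical element type that records how it was constructed.
struct Tracked
{
    bool copied = false;
    bool moved = false;
    Tracked() = default;
    Tracked(const Tracked&) : copied(true) {}
    Tracked(Tracked&&) noexcept : moved(true) {}
};

// Same shape as Add, minus the locking: F&& is a forwarding reference.
template<typename F>
void AddTo(std::list<Tracked>& queue, F&& x)
{
    queue.emplace_back(std::forward<F>(x));
}

// Returns true if the lvalue was copied and the rvalue was moved.
bool ForwardingPreservesValueCategory()
{
    std::list<Tracked> queue;
    Tracked item;
    AddTo(queue, item);         // F = Tracked&, so emplace_back copy-constructs
    AddTo(queue, Tracked{});    // F = Tracked, so emplace_back move-constructs
    return queue.front().copied && !queue.front().moved
        && queue.back().moved && !queue.back().copied;
}
```

For a task type such as std::function, moving avoids copying the stored callable, which is why the rvalue path is worth keeping.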

Stop function

The Stop function first acquires the mutex and sets the stop flag to true, then wakes up all waiting threads. The flag must be set while holding the mutex to ensure thread safety. Because m_needStop is part of every wait predicate, the predicate is now satisfied and each woken thread continues executing. Since Take and Add return immediately when m_needStop is true, all waiting threads exit one after another.

Also note that the notify_all() calls on m_notFull and m_notEmpty are made outside the scope of the lock_guard. They could also be placed inside it; making them outside is a small optimization. A thread woken by notify_one or notify_all must first reacquire the mutex before it can check its predicate. If the notification is sent while the lock_guard still holds the mutex, the woken thread has to wait until the lock_guard is destroyed and the mutex released (that is, until the end of the locked scope in Stop). If the notification is sent after the lock_guard has gone out of scope, the woken thread can acquire the mutex right away, which can perform better. For this reason, notify_one and notify_all do not need to be called under the lock.

void Stop()

{

{

std::lock_guard<std::mutex> locker(m_mutex);

m_needStop = true;

}

m_notFull.notify_all();

m_notEmpty.notify_all();

}
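To see the stop sequence in isolation, the sketch below reduces the queue to its stop flag. It is only an illustration under stated assumptions: `StopGate` and `StopReleasesAllWaiters` are hypothetical names, and a single condition variable stands in for m_notFull and m_notEmpty.

```cpp
#include <atomic>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical reduction of the queue to its stop flag alone.
class StopGate
{
public:
    // Blocks until Stop() has been called.
    void WaitForStop()
    {
        std::unique_lock<std::mutex> locker(m_mutex);
        m_cond.wait(locker, [this] { return m_needStop; });
    }

    void Stop()
    {
        {
            std::lock_guard<std::mutex> locker(m_mutex);
            m_needStop = true;   // written under the mutex, so no waiter can miss it
        }                        // mutex released here
        m_cond.notify_all();     // woken threads can lock the mutex immediately
    }

private:
    std::mutex m_mutex;
    std::condition_variable m_cond;
    bool m_needStop = false;
};

// Parks `count` threads on the gate, stops it, and returns how many were released.
int StopReleasesAllWaiters(int count)
{
    StopGate gate;
    std::atomic<int> released(0);
    std::vector<std::thread> waiters;
    for (int i = 0; i < count; ++i)
    {
        waiters.emplace_back([&gate, &released] {
            gate.WaitForStop();
            ++released;
        });
    }
    gate.Stop();
    for (auto& t : waiters)
    {
        t.join();
    }
    return released.load();
}
```

A thread that reaches `WaitForStop` only after `Stop` has run still returns at once, because the predicate is evaluated before the first sleep.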

SyncQueue complete code

"SyncQueue.h"

The complete code of the synchronization queue:

#pragma once

#include <iostream>

#include <list>

#include <mutex>

#include <condition_variable>

#include <thread>

using namespace std;

template<typename T>

class SyncQueue

{

public:

SyncQueue(int maxSize) :m_maxSize(maxSize), m_needStop(false)

{

}

void Put(const T &x)

{

Add(x);

}

void Put(T &&x)

{

Add(std::forward<T>(x));

}

// Take all queued tasks at once into a list, to reduce the time spent blocked on the mutex

void Take(std::list<T>& list)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notEmpty.wait(locker, [this] {return m_needStop || NotEmpty(); });

if (m_needStop)

{

return;

}

list = std::move(m_queue);

m_notFull.notify_one();

}

// Take a single task

void Take(T& t)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notEmpty.wait(locker, [this] {return m_needStop || NotEmpty(); });

if (m_needStop)

{

return;

}

t = m_queue.front();

m_queue.pop_front();

m_notFull.notify_one();

}

void Stop()

{

{

std::lock_guard<std::mutex> locker(m_mutex);

m_needStop = true;

}

m_notFull.notify_all();

m_notEmpty.notify_all();

}

bool Empty()

{

std::lock_guard<std::mutex> locker(m_mutex);

return m_queue.empty();

}

bool Full()

{

std::lock_guard<std::mutex> locker(m_mutex);

return m_queue.size() == m_maxSize;

}

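// Returns the number of queued tasks

int Count()

{

std::lock_guard<std::mutex> locker(m_mutex); // size() must not race with Add/Take

return static_cast<int>(m_queue.size());

}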

private:

bool NotFull() const

{

bool full = m_queue.size() >= m_maxSize;

if (full)

{

cout << "The buffer is full, waiting..." << endl;

}

return !full;

}

bool NotEmpty() const

{

bool empty = m_queue.empty();

if (empty)

{

cout << "The buffer is empty, waiting... async-layer thread ID: " << this_thread::get_id() << endl;

}

return !empty;

}

template<typename F>

void Add(F &&x)

{

std::unique_lock<std::mutex> locker(m_mutex);

m_notFull.wait(locker, [this] {return m_needStop || NotFull(); });

if (m_needStop)

return;

m_queue.emplace_back(std::forward<F>(x));

m_notEmpty.notify_one();

}

private:

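std::list<T> m_queue; // The buffer

std::mutex m_mutex; // The mutex

std::condition_variable m_notEmpty; // Condition variable for "not empty"

std::condition_variable m_notFull; // Condition variable for "not full"

int m_maxSize; // Maximum size of the synchronization queue

bool m_needStop; // The stop flag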

};

Thread Pool

"ThreadPool.h"

The thread pool ThreadPool has three main member variables. The first is the thread group. Its threads are created in advance, and the number of threads is passed in from outside; creating as many threads as there are CPU cores is generally recommended for the best efficiency. The threads in the group loop, taking tasks out of the synchronization queue and executing them. If the synchronization queue is empty, the threads wait for tasks to arrive.

The second is the synchronization queue, which not only synchronizes the threads but also caps the number of queued tasks. This upper limit is also set by the user.

The third member variable is used to stop the thread pool. To ensure thread safety, it is an atomic variable, atomic_bool. An example of using this semi-synchronous and semi-asynchronous thread pool is shown in the next section.
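One detail to keep in mind when sizing the thread group by core count: std::thread::hardware_concurrency() is only a hint and is allowed to return 0 when the value cannot be determined, in which case no worker threads would be created. A possible guard is sketched below; `ChooseThreadCount` and its default fallback of 2 are assumptions made for this example, not part of the original pool.

```cpp
#include <thread>

// Hypothetical helper: picks a worker count, falling back when no hint is available.
unsigned int ChooseThreadCount(unsigned int fallback = 2)
{
    unsigned int hint = std::thread::hardware_concurrency(); // may return 0
    return hint != 0 ? hint : fallback;
}
```

The result could then be passed to the ThreadPool constructor in place of the raw hardware_concurrency() value.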

#include<list>

#include<thread>

#include<functional>

#include<memory>

#include<atomic>

#include "SyncQueue.h"

const int MaxTaskCount = 100;

class ThreadPool

{

public:

using Task = std::function<void()>;

ThreadPool(int numThreads = std::thread::hardware_concurrency()) : m_queue(MaxTaskCount)

{

Start(numThreads);

}

~ThreadPool(void)

{

Stop();

}

void Stop()

{

// Ensure StopThreadGroup is called only once, even with multiple threads

std::call_once(m_flag, [this] {StopThreadGroup(); });

}

// Accepts rvalues, for example lambda expressions

void AddTask(Task&& task)

{

m_queue.Put(std::forward<Task>(task));

}

void AddTask(const Task& task)

{

m_queue.Put(task);

}

void Start(int numThreads)

{

m_running = true;

// Create the thread group

for (int i = 0; i < numThreads; ++i)

{

m_threadgroup.emplace_back(std::make_shared<std::thread>(&ThreadPool::RunInThread, this));

}

}

private:

void RunInThread()

{

while (m_running)

{

// Take the queued tasks and execute them one by one

std::list<Task> list;

m_queue.Take(list);

for (auto& task : list)

{

if (!m_running)

return;

task();

}

}

}

void StopThreadGroup()

{

m_queue.Stop(); // Wake up the threads blocked in the synchronization queue

m_running = false; // Set to false so the worker threads leave their loop and exit

for (auto thread : m_threadgroup)

{

if (thread)

thread->join();

}

m_threadgroup.clear();

}

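std::list<std::shared_ptr<std::thread>> m_threadgroup; // The thread group that processes tasks

SyncQueue<Task> m_queue; // The synchronization queue

std::atomic_bool m_running; // Whether the pool is still running

std::once_flag m_flag; // Guards the one-time call to StopThreadGroup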

};
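Stop wraps StopThreadGroup in std::call_once because it can be reached both from user code and from the destructor, and joining the same threads twice would be an error. The sketch below shows the guarantee on its own; `Stoppable` and `ConcurrentStopCount` are hypothetical stand-ins, and a counter increment replaces the real shutdown work.

```cpp
#include <atomic>
#include <mutex>
#include <thread>
#include <vector>

// Hypothetical stand-in for a pool whose shutdown must run exactly once.
class Stoppable
{
public:
    void Stop()
    {
        // Only the first caller runs the lambda; concurrent callers wait for it to finish.
        std::call_once(m_flag, [this] { ++m_shutdowns; });
    }

    int Shutdowns() const
    {
        return m_shutdowns.load();
    }

private:
    std::once_flag m_flag;
    std::atomic<int> m_shutdowns{0};
};

// Calls Stop() from `callers` threads and returns how often the shutdown ran.
int ConcurrentStopCount(int callers)
{
    Stoppable target;
    std::vector<std::thread> threads;
    for (int i = 0; i < callers; ++i)
    {
        threads.emplace_back([&target] { target.Stop(); });
    }
    for (auto& t : threads)
    {
        t.join();
    }
    return target.Shutdowns();
}
```

However many threads call `Stop`, the shutdown body runs once, which is the property the destructor relies on when the user has already stopped the pool.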

Main function test

#include <chrono>

#include <cstdio>

#include <iostream>

#include <thread>

#include "ThreadPool.h"

using namespace std;

void TestThdPool()

{

ThreadPool pool(2); // Create a thread pool with 2 threads

// Create a thread that adds 10 tasks (producer 1)

std::thread thd1([&pool] {

for (int i = 0; i < 10; i++)

{

auto thdId = this_thread::get_id();

pool.AddTask([thdId] { // Tasks can be added as lambda expressions, since AddTask accepts rvalues

cout << "Thread ID of sync-layer thread 1: " << thdId << endl;

});

}

});

// Create a thread that adds 20 tasks (producer 2)

std::thread thd2([&pool] {

for (int i = 0; i < 20; i++)

{

auto thdId = this_thread::get_id();

pool.AddTask([thdId] {

cout << "Thread ID of sync-layer thread 2: " << thdId << endl;

});

}

});

this_thread::sleep_for(std::chrono::seconds(2));

getchar();

pool.Stop();

thd1.join();

thd2.join();

}

int main()

{

TestThdPool();

return 0;

}

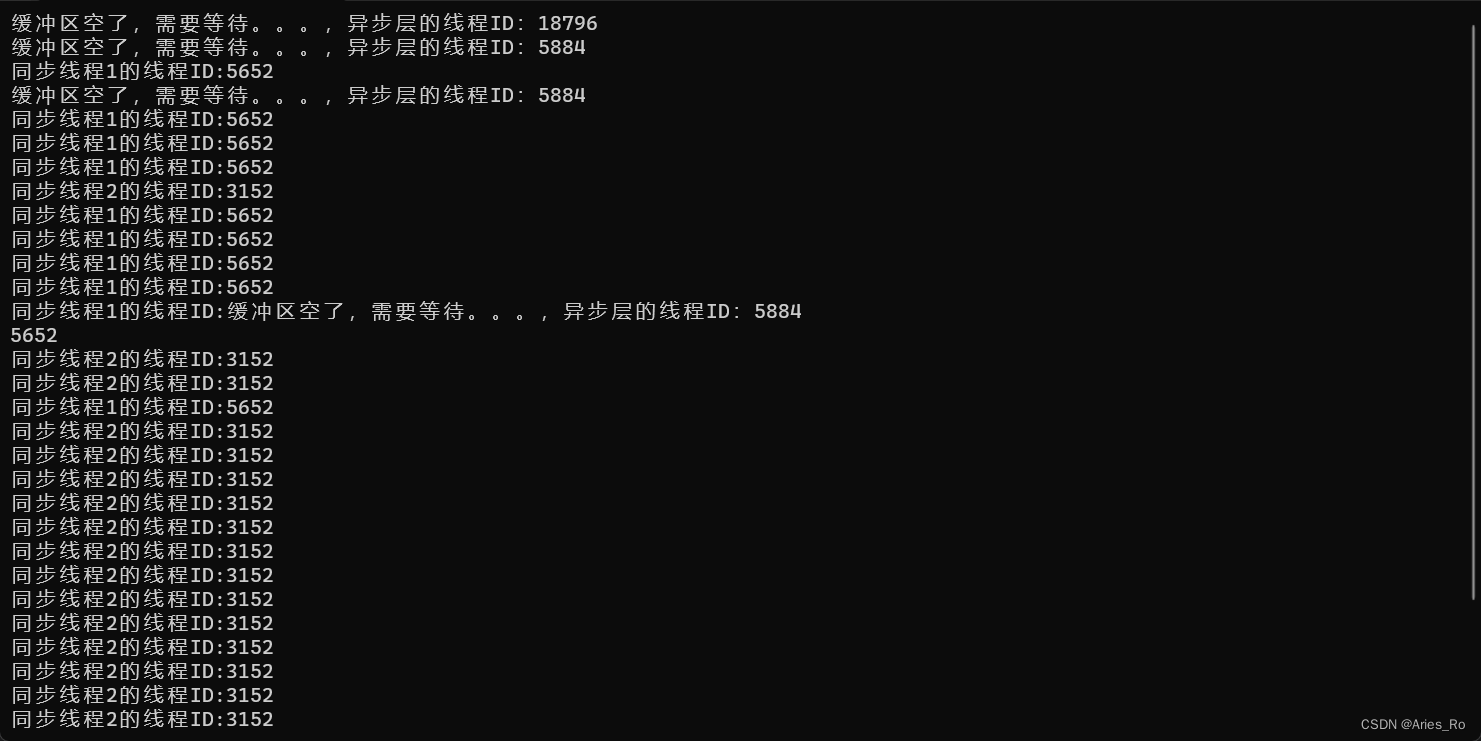

operation result: