Literature study: "Past, Present, and Future of Simultaneous Localization And Mapping: Towards the Robust-Perception Age"

This paper is a survey of SLAM technology. It covers the historical development of SLAM, the structure of current systems, map representations (metric and semantic), and new sensors and computational tools.

Paper link: https://arxiv.org/pdf/1606.05830v4.pdf

1 Introduction

Maps serve two purposes:

- Supporting other tasks, such as path planning, and providing an intuitive visualization for a human operator;

- Limiting the error committed when estimating the robot state.

SLAM is typically applied in scenarios where no prior map is available and one must be built. When no ad hoc localization infrastructure (such as artificial landmarks or GPS) is available, SLAM offers an attractive and practical way to build a map, which makes robot operation possible.

The paper divides the history of SLAM into three stages (the first of which was surveyed by Durrant-Whyte and Bailey):

- Classical age (1986-2004): introduced the main probabilistic formulations for SLAM, including approaches based on the extended Kalman filter, Rao-Blackwellised particle filters, and maximum likelihood estimation, and delineated the basic challenges of efficiency and robust data association.

- Algorithmic-analysis age (2004-2015): studied the fundamental properties of SLAM, including observability, convergence, and consistency. The key role of sparsity in efficient SLAM solvers was understood, and the main open-source SLAM libraries were developed.

- Robust-perception age (2016 onward): robust performance (fail-safe mechanisms, self-tuning capabilities), high-level understanding, resource awareness, and task-driven perception.

To the question "Do we need SLAM?", the authors give a three-part answer:

First, the SLAM research of the past decade itself produced visual-inertial odometry, which represents the current state of the art. More generally, SLAM has directly driven the study of multi-sensor fusion in more challenging setups (e.g., no GPS, low-quality sensors).

Second, SLAM recovers the true topology of the environment. Similar-looking scenes that correspond to different locations can deceive place recognition; SLAM provides a natural defense against such wrong data association and perceptual aliasing.

Third, many applications require a globally consistent map, whether implicitly or explicitly, and therefore need SLAM.

Whether SLAM can be considered mature depends on the combination of the following aspects:

- Robot: type of motion (dynamics, maximum speed), available sensors (resolution, sampling rate), available computational resources;

- Environment: planar or three-dimensional, presence of natural or artificial landmarks, amount of dynamic elements, amount of symmetry, risk of perceptual aliasing;

- Performance requirements: desired accuracy of the robot state estimate, accuracy and type of scene representation (landmark-based or dense), success rate (percentage of tests in which the accuracy bounds are met), estimation latency, maximum operation time, maximum size of the mapped area.

When the robot motion or the environment is very challenging (for example, the robot moves quickly or the scene is highly dynamic), SLAM algorithms are prone to failure. Likewise, SLAM algorithms often cannot meet strict performance requirements, such as high-rate estimation for fast closed-loop control.

2 Analysis of modern SLAM systems

A SLAM system consists of a front-end and a back-end. The front-end abstracts sensor data into models that are amenable to estimation, and the back-end performs inference on the abstracted data produced by the front-end.

In the back-end, SLAM is formulated as a maximum a posteriori (MAP) estimation problem, and factor graphs are commonly used to reason about the dependencies among variables.
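As a minimal sketch of this formulation, assume independent Gaussian measurement noise, in which case MAP estimation reduces to weighted least squares. The toy 1D pose graph below has three poses, a prior on the first pose, two odometry factors, and one direct relative measurement between the first and last pose. The function name, factor encoding, and numbers are illustrative assumptions and do not come from the paper.

```python
import numpy as np

def solve_pose_graph_1d(num_poses, factors):
    """Solve a linear 1D pose graph by weighted least squares.

    Each factor is (i, j, z, sigma): a measurement z of x_j - x_i with
    standard deviation sigma. Setting i to None encodes a prior on x_j.
    """
    rows, rhs = [], []
    for i, j, z, sigma in factors:
        row = np.zeros(num_poses)
        row[j] = 1.0
        if i is not None:
            row[i] = -1.0
        # Whitening: divide by sigma so every residual has unit variance.
        rows.append(row / sigma)
        rhs.append(z / sigma)
    A = np.vstack(rows)
    b = np.array(rhs)
    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x

factors = [
    (None, 0, 0.0, 0.01),  # prior anchoring x0 at 0
    (0, 1, 1.0, 0.1),      # odometry x1 - x0
    (1, 2, 1.0, 0.1),      # odometry x2 - x1
    (0, 2, 2.3, 0.1),      # relative measurement x2 - x0 (disagrees with odometry)
]
estimate = solve_pose_graph_1d(3, factors)
print(estimate)  # approximately [0.0, 1.1, 2.2]
```

The 0.3 disagreement between odometry and the direct measurement is spread evenly over the three relative factors, which is what one would expect when all of them carry the same noise level.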

Data association in the front-end has a short-term and a long-term component. Short-term data association links corresponding features in consecutive sensor measurements. Long-term data association is mainly loop closure detection, which links new measurements to older landmarks. The back-end feeds information back to the front-end to support loop closure detection and validation.
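Short-term data association can be illustrated with a small descriptor matcher. The paper does not prescribe a specific matcher; the sketch below assumes mutual nearest-neighbour matching with a distance threshold, and the function name, the `max_dist` parameter, and the toy 2D descriptors are all hypothetical.

```python
import numpy as np

def match_descriptors(desc_a, desc_b, max_dist=0.5):
    """Return index pairs (i, j) that are mutual nearest neighbours
    and closer than max_dist."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    best_b_for_a = dists.argmin(axis=1)
    best_a_for_b = dists.argmin(axis=0)
    matches = []
    for i, j in enumerate(best_b_for_a):
        # Keep the pair only if each is the other's nearest neighbour.
        if best_a_for_b[j] == i and dists[i, j] <= max_dist:
            matches.append((i, int(j)))
    return matches

frame_prev = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
frame_curr = np.array([[1.1, 0.9], [0.1, -0.1], [9.0, 9.0]])
matches = match_descriptors(frame_prev, frame_curr)
print(matches)  # [(0, 1), (1, 0)]
```

The third feature in each frame is the other's nearest neighbour, but the pair is rejected by the distance threshold. Rejecting doubtful pairs like this trades fewer measurements for fewer wrong associations.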

3 Long-term autonomy

3.1 Robustness

Algorithmic robustness: data association is a major source of algorithmic failure. Because of perceptual aliasing, data association can establish wrong measurement-state matches, which in turn produce wrong back-end estimates. Conversely, when data association incorrectly rejects valid sensor measurements, fewer measurements are available for estimation, which degrades estimation accuracy.

For environments with dynamic objects, the world can be assumed static in short-term, small-scale mapping. Over long time scales and in large environments, however, change is inevitable.

To handle dynamic environments, some mainstream approaches discard the dynamic parts of the scene, while other work includes dynamic objects as part of the model.

3.2 Scalability

In many applications, the robot's operating time and the explored area keep growing, while computation time and memory remain bounded by the resources of the robot.

To reduce the complexity of factor graph optimization, two families of methods are used:

- Sparsification methods

These reduce the number of nodes in the factor graph by marginalizing less informative nodes and factors. A related approach is continuous-time trajectory estimation, which reduces the number of parameters estimated over time; for example, interpolation schemes (cubic splines, batch interpolation) are used to represent the robot's trajectory and pose.

- Out-of-core and multi-robot methods

Parallel out-of-core algorithms for SLAM split the computational (and memory) load of factor graph optimization across multiple processors. The main idea is to divide the factor graph into subgraphs and to optimize the overall graph by alternating local optimization of each subgraph with a global refinement.

For mapping large-scale environments, multiple robots can perform SLAM together: the scene is divided into smaller areas, and each area is mapped by a different robot. There are two variants. In the centralized one, robots build submaps and transmit their local information to a central station. In the decentralized one, there is no central data fusion, and the agents use local communication to reach consensus on a shared map.
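The node marginalization mentioned under sparsification methods can be sketched for a Gaussian in information form, where removing variables amounts to a Schur complement. This is a generic linear-Gaussian illustration, not the specific algorithm of any work cited in the paper; the function name and the three-pose chain are assumptions made for the example.

```python
import numpy as np

def marginalize(info_matrix, info_vector, drop):
    """Marginalize out the variables indexed by `drop` from a Gaussian
    given in information form, using the Schur complement."""
    n = info_matrix.shape[0]
    keep = [k for k in range(n) if k not in drop]
    L_kk = info_matrix[np.ix_(keep, keep)]
    L_kd = info_matrix[np.ix_(keep, drop)]
    L_dd = info_matrix[np.ix_(drop, drop)]
    # Solve linear systems instead of forming the inverse of L_dd.
    reduced_matrix = L_kk - L_kd @ np.linalg.solve(L_dd, L_kd.T)
    reduced_vector = info_vector[keep] - L_kd @ np.linalg.solve(L_dd, info_vector[drop])
    return reduced_matrix, reduced_vector

# Chain x0 - x1 - x2: unit-information prior on x0 and two unit-information
# odometry factors give a tridiagonal (sparse) information matrix.
info = np.array([[2.0, -1.0, 0.0],
                 [-1.0, 2.0, -1.0],
                 [0.0, -1.0, 1.0]])
mean = np.array([0.0, 1.0, 2.0])
eta = info @ mean

reduced_matrix, reduced_vector = marginalize(info, eta, drop=[1])
print(reduced_matrix)  # [[ 1.5 -0.5] [-0.5  0.5]]
```

After x1 is removed, x0 and x2 become directly coupled even though they shared no factor before. This fill-in is the reason plain marginalization alone does not keep the graph sparse.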

Some open problems:

- How to store the map during long-term operation;

- How often to update the information in the map, and how to decide when information has become outdated and can be discarded;

- How robots should handle outliers. The robots do not share a common reference frame, which makes it hard to detect and reject false loop closures; in the decentralized setting, robots must detect outliers from partial and local information only;

- How to run existing SLAM algorithms on platforms with severely limited computational resources (for example, mobile phones, drones, or insect-sized robots).

4 Representation

4.1 Metric map model

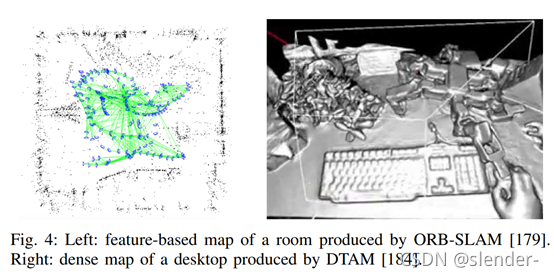

In the 2D case, geometric modeling is relatively easy, and there are two main paradigms: landmark-based (feature-based) maps and occupancy grid maps. 3D geometric modeling is more delicate, and the understanding of how to model 3D geometry efficiently is still in its infancy.

The picture on the left shows a sparse representation based on landmarks (features). The sensor measures some geometric aspect of each landmark and provides a descriptor, which is used to establish data association between measurements and the corresponding landmarks.

The picture on the right shows a low-level raw dense representation. Dense representations provide a high-resolution model of the 3D geometry; such models are better suited for obstacle avoidance, visualization, and rendering.

Feature-based methods are more mature and can produce accurate and robust SLAM systems with automatic relocalization and loop closing. However, such systems depend heavily on the availability of features in the environment and on detection and matching thresholds, and most feature detectors are optimized for speed rather than accuracy.

Direct methods work on raw pixel information, and dense direct methods exploit all the information in the image. In scenes with few features, defocus, or motion blur, these methods outperform feature-based methods. To run in real time, however, they require more computing power.
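As an illustration of the occupancy grid maps mentioned above for the 2D case, the sketch below keeps one log-odds value per cell and adds a fixed increment for every observation. The class name and the inverse sensor model probabilities (`p_hit`, `p_miss`) are assumptions chosen for the example; a real system would also trace sensor rays to decide which cells each measurement touches.

```python
import numpy as np

def logit(p):
    """Log-odds of a probability."""
    return np.log(p / (1.0 - p))

class OccupancyGrid:
    def __init__(self, height, width, p_hit=0.7, p_miss=0.4):
        # Log-odds 0 corresponds to an occupancy probability of 0.5 (unknown).
        self.log_odds = np.zeros((height, width))
        self.l_hit = logit(p_hit)
        self.l_miss = logit(p_miss)

    def update(self, cell, occupied):
        """Fuse one observation of `cell` (a (row, col) tuple)."""
        self.log_odds[cell] += self.l_hit if occupied else self.l_miss

    def probabilities(self):
        """Convert log-odds back to occupancy probabilities."""
        return 1.0 / (1.0 + np.exp(-self.log_odds))

grid = OccupancyGrid(3, 3)
for _ in range(3):
    grid.update((1, 1), occupied=True)   # three consistent hits
grid.update((0, 0), occupied=False)      # one miss
probs = grid.probabilities()
print(round(float(probs[1, 1]), 3))  # 0.927
```

Working in log-odds turns the Bayesian update into an addition, so repeated consistent observations push a cell steadily toward 0 or 1 while unobserved cells stay at 0.5.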

4.2 Semantic map model

Semantic mapping associates semantic concepts with the geometric entities in the robot's surroundings.

A semantic representation can be defined along different dimensions:

- Semantic level: the granularity of classification (e.g., room, corridor, door)

- Semantic organization: usage and properties (movable, static)

An open problem is the consistent fusion of semantic and metric information: how to consistently fuse semantic and metric information acquired at different points in time remains unresolved. Combining semantic classification and metric representation within the well-established factor graph formulation is a feasible way to build a joint semantic-metric inference framework.

Currently, robots can operate on metric representations but cannot truly exploit semantic concepts.

5 Active SLAM

The problem of controlling the motion of a robot to reduce the uncertainty of its map representation and localization is often called active SLAM.

6 New development directions

The development of new sensors and the application of new computing tools have become key drivers of SLAM.

- New and non-traditional SLAM sensors

Range cameras, light-field cameras, and event-based cameras:

Range cameras: Structured-light cameras work by triangulation, so their accuracy is limited by the baseline between the camera and the pattern projector. The accuracy of time-of-flight (ToF) cameras depends only on the time-of-flight measurement device, so they can provide higher ranging accuracy.

Light-field cameras: A light-field camera records both the intensity and the direction of the light rays. Because of manufacturing cost, commercially available light-field cameras still have relatively low resolution. They nevertheless offer several advantages over standard cameras, such as depth estimation, noise reduction, video stabilization, isolation of distractors, and specularity removal.

Event cameras: An event camera transmits only the local pixel-level changes caused by motion in the scene, which makes it suitable for high-speed motion and high-dynamic-range scenes. However, its output is a stream of asynchronous events, so traditional frame-based computer vision algorithms are not directly applicable.
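The dependence of structured-light accuracy on the camera-projector baseline, noted under range cameras above, can be made concrete with the standard triangulation relation z = f * b / d. The sketch assumes an idealized rectified pinhole geometry; the function names, focal length, baselines, and disparity noise are illustrative values, not specifications of any real sensor.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Triangulated depth z = f * b / d for a rectified camera-projector pair."""
    return focal_px * baseline_m / disparity_px

def depth_error(focal_px, baseline_m, depth_m, disparity_error_px):
    """First-order depth uncertainty: dz ~= z^2 / (f * b) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px

focal_px = 600.0           # assumed focal length in pixels
disparity_error_px = 0.1   # assumed disparity noise in pixels
depth_m = 2.0

err_short = depth_error(focal_px, 0.075, depth_m, disparity_error_px)
err_long = depth_error(focal_px, 0.150, depth_m, disparity_error_px)
print(f"baseline 7.5 cm: {err_short * 1000:.1f} mm")  # 8.9 mm
print(f"baseline 15 cm:  {err_long * 1000:.1f} mm")   # 4.4 mm
```

Under this model, doubling the baseline halves the depth error at a given range, while the error grows quadratically with distance.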

- Deep Learning

A deep neural network can be trained to regress the inter-frame pose between two images acquired by a moving robot directly from the original image pair, effectively replacing the standard geometry of visual odometry. It is also possible to localize the six degrees of freedom of a camera with regression forests and with deep convolutional neural networks, and to estimate the depth of a scene from a single view solely as a function of the input image.