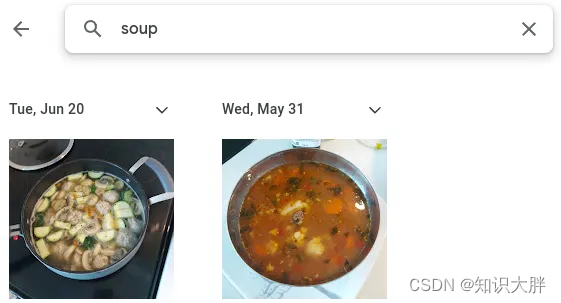

Apps like Google Photos let you search the images on your phone with text queries. Notably, the app doesn't require you to tag images by their content. For example, you can search for "cats" or "soup" in Google Photos and get relevant results, even though your images have no text descriptions.

How does the app do this? Such applications model the connection between a semantic description of a scene and the visual content of the scene itself. In this post, I will demonstrate how to write your own image search application in Python. This is useful if you want to quickly search the images on your local computer but don't want to upload them to a hosted service because of privacy concerns.

We will use a pretrained machine learning model called CLIP, which has already learned the joint text/image representation we need. We will also use Streamlit for the application's front end.

CLIP

Contrastive Language-Image Pre-training (CLIP) is a popular multimodal text/image model introduced in the paper by Radford et al. (2021). CLIP was trained on 400 million image-text pairs collected from the Internet, so it has learned the semantic content of a wide variety of scenes. For our application, we will use a pretrained CLIP model to match text search terms against a database of images.
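CLIP encodes text and images into the same embedding space, so matching a search term against images comes down to comparing vectors. The sketch below ranks images by cosine similarity to a query embedding. It is illustrative only: the 3-dimensional vectors are made-up stand-ins for real CLIP embeddings (which have several hundred dimensions), and the helper names `normalize` and `rank_images` are hypothetical, not part of CLIP or any library.

```python
import math


def normalize(vec):
    """Scale a vector to unit L2 length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def rank_images(text_embedding, image_embeddings, top_k=3):
    """Return (image name, cosine similarity) pairs, best match first."""
    query = normalize(text_embedding)
    scores = []
    for name, embedding in image_embeddings.items():
        unit = normalize(embedding)
        score = sum(q * e for q, e in zip(query, unit))
        scores.append((name, score))
    # Highest similarity first, then keep only the top_k matches.
    scores.sort(key=lambda pair: pair[1], reverse=True)
    return scores[:top_k]


# Toy vectors standing in for CLIP image embeddings (assumption, not real model output).
image_embeddings = {
    "cat.jpg": [0.9, 0.1, 0.0],
    "soup.jpg": [0.1, 0.9, 0.2],
    "beach.jpg": [0.0, 0.2, 0.9],
}
# Pretend this is CLIP's text embedding of the query "a photo of a cat".
text_embedding = [0.8, 0.2, 0.1]

for name, score in rank_images(text_embedding, image_embeddings, top_k=2):
    print(f"{name}: {score:.3f}")
```

Normalizing both sides first makes the score depend only on the direction of the vectors, not on their magnitude. In a real application, you would compute the image embeddings once and store them, so that each query only requires encoding the short text string.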

Streamlit

Streamlit is a popular Python framework for building machine learning and data applications. It handles most of the user-interface work, which lets us focus on the machine learning side.

Application development

The application consists of two scripts:

get_embeddings.py: In this script, we encode the images into embeddings using CLIP's image encoder.