Reference link: OpenVINS https://docs.openvins.com/index.html

1. Getting Started

Welcome to the OpenVIINS project! The following guide will help new users download the software and run it on our supported datasets. Additionally, we provide information on how to run your own sensors on our systems and provide guidance on how we calibrate them. If you see anything missing or need clarification, please feel free to ask questions.

High-level overview

At a high level, the system is built on several key algorithms. We have ov_core at its core, which contains many standard computer vision algorithms and utilities that anyone can use. Specifically, it stores the following large components:

- Sparse feature visual tracking (based on KLT and descriptors)

- Basic mathematical types used to represent states

- Initialization process

- Multi-sensor simulator that generates synthetic measurements

The ov_core library is used by the ov_msckf system that contains our filter-based estimators . Among them we have state,managers, type systems, prediction and update algorithms. We encourage users to review the specific documentation to learn more about what we offer. The ov_eval library has a bunch of evaluation methods and scripts that can be used to generate research results for publication.

1.5 Sensor Calibration—How to Calibrate Your Own Visual Inertial Sensor

Visual Inertial Sensor

Someone may ask why use visual inertial sensor? The main reason is because of the complementarity of the two different sensing methods. The camera provides high-density external measurements of the environment, while the IMU measures the internal self-motion of the sensor platform. The IMU is crucial to provide robustness to the estimator while also providing system scaling in the case of a monocular camera.

However, there are some challenges when utilizing IMUs in estimation. The IMU needs to estimate other bias terms, and high-precision calibration between the camera and IMU is required. Additionally, small errors in relative timestamps between sensors can degrade performance very quickly in dynamic trajectories. In this OpenVINS project, we address these issues by advocating online estimation of these extrinsic and temporal offset parameters between the camera and IMU.

Camera intrinsic parameter calibration (offline)

The first task is to calibrate the camera intrinsic parameters, such as focal length, camera center and distortion parameters. Our team frequently uses the Kalibr calibration toolbox for offline calibration of internal and external parameters by performing the following steps:

- Download and build the Kalibr toolbox.

- Print out the calibration plate to be used (we usually use Aprilgrid 6x6 0.8x0.8 meters ).

- Make sure sensor drivers are published to ROS with the correct timestamps.

- Sensor preparation.

● Limit motion blur by reducing exposure time;

● Publish at a low frame rate to allow for larger differences in the data set (2-5hz); ●

Make sure the calibration plate can be seen in the image;

● Make sure your sensor is in focus (can Use its kalibr_camera_focus or manually) - Record ROS packets and ensure that the calibration plate can be seen from different directions, distances and each part of the image plane. You can move the calibration plate and keep the camera still, or you can move the camera and keep the calibration plate still.

- Finally run the calibration.

● Use kalibr_calibrate_cameras with the specified topic ● Depending on the degree of distortion, use pinhole-equi

for fisheye , or pinhole-radtan if distortion is low - Check the final results and pay close attention to the final reprojection error plot, a good calibration has less than <0.2-0.5 pixel reprojection error.

IMU Intrinsic Calibration (Offline)

Another intrinsic calibration is to calculate the inertial sensor intrinsic noise characteristics, which is required for batch optimization to calibrate the camera to the IMU transform and any VINS estimator so that we can correctly probabilistically fuse the image and inertial readings . Unfortunately, there is no mature open source toolbox to find these values, instead try our kalibr_allan project, although it is not optimized. Specifically, we estimate the following noise parameters:

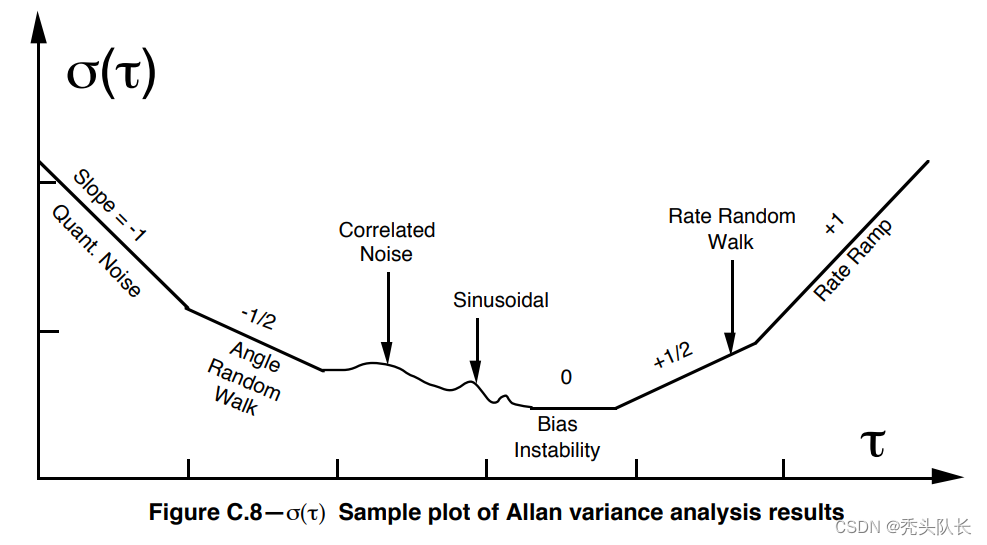

As shown in the figure above, if we calculate the Allen variance, we can see that on the left side of the figure τ = 1 \tau=1t=1 and fit this line with -1/2 slope to obtain the white noise of the sensor. Similarly, one can evaluate the right sideτ = 3 \tau=3t=3 and 1/2 slope of this line to obtain random walk noise. We have a package that can do this in matlab, but the actual verification and conversion to a C++ codebase is not yet complete. See ourkalibr_allangithub project for details on how to generate this plot and calculate the values for the sensor. Note that it may be necessary to inflate the calculated values by a factor of 10-20 to obtain usable sensor values.

Dynamic IMU Camera Calibration (Offline)

After obtaining the intrinsic parameters of the camera and IMU, we can now dynamically calibrate the transformation between the two sensors. For this purpose we again utilize the Kalibr calibration toolbox . For these collected datasets, it is important to minimize motion blur in the camera while ensuring that all axes of the IMU are excited. At least one translational motion as well as two directional changes are required to observe these calibration parameters (see our recent paper: Degenerate motion analysis of auxiliary INS using online spatial and temporal sensor calibration ). We recommend changing directions as much as possible to ensure convergence.

- Download and build the Kalibr toolbox.

- Print out the calibration plate to be used (we usually use Aprilgrid 6x6 0.8x0.8 meters ).

- Make sure sensor drivers are published to ROS with the correct timestamps.

- Sensor preparation.

● Limit motion blur by reducing exposure time;

● Publish at a high frame rate (20-30hz);

● Publish inertial readings at a reasonable rate (200-500hz); - Record ROS packets and make sure the calibration plate can be seen from different directions, distances, and mostly in the center of the image. You should move in a smooth, non-jerky motion with a trajectory that inspires as many directions and translations as possible simultaneously. A data set of 30-60 seconds is usually sufficient for calibration.

- Finally run the calibration.

● Use kalibr_calibrate_imu_camera

● Enter your static calibration file, which will contain the camera topic

● You need to create an imu.yaml file with the noise parameters.

● Depending on the number of frames in the dataset, this may take more than half a day. - Check the final result. You need to make sure that the splines fitted to the inertia readings are fitted correctly. Make sure your estimate does not deviate beyond your 3-sigma bounds. Otherwise, your trajectory is too dynamic, or your noise value is not good. Check your final rotation and translation using manual measurements.

2. IMU Propagation Derivations

2.1 IMU Measurements

We use a 6-axis inertial measurement unit (IMU) to propagate the inertial navigation system (INS), which provides the local rotation speed (angular rate) ω m \omega_mohmand local translational acceleration α m \alpha_mamMeasurement of:

ω m ( t ) = ω ( t ) + bg ( t ) + ng ( t ) \omega_m(t) = \omega(t) + b_g(t) + n_g(t)ohm(t)=ω ( t )+bg(t)+ng(t) α m ( t ) = α ( t ) + G I R ( t ) g G + b a ( t ) + n a ( t ) \alpha_m(t) = \alpha(t) + {_G^I}R(t)^G_g + b_a(t) + n_a(t) am(t)=a ( t )+GIR(t)gG+ba(t)+na( t )

whereω\omegaω andα \alphaα is the IMU local coordinate system{ I } \{I\}

True rotational velocity and translational acceleration in { I } , bg b_gbgJapanese ba b_abais the gyroscope and accelerometer bias, ng n_gngJapanese na n_anais white Gaussian noise, G g = [ 0 0 9.81 ] ⊤ {^G}g =\left[ \begin{array}{ccc} 0 & 0 & 9.81\\ \end{array} \right]^{\top }Gg=[009.81]⊤ is the global coordinate system{ G } \{G\}

Gravity expressed in { G } (note that gravity differs slightly at different locations on Earth), GIR {_G^I}RGIR is the rotation matrix from the global coordinate system to the IMU local coordinate system.

2.2 State Vector

We will INS at time ttThe state vector XI X_Iof tXI 定义为:

X I ( t ) = [ G I q ˉ ( t ) G p I ( t ) G v I ( t ) b g ( t ) b a ( t ) ] X_I(t) = \left[ \begin{array}{ccc} {_G^I} \bar q(t) \\ {^G} p_I(t) \\ {^G} v_I(t)\\ b_g (t) \\ b_a(t)\\ \end{array} \right] XI(t)=

GIqˉ(t)GpI(t)GvI(t)bg(t)ba(t)

Among them, the unit quaternion GI q ˉ {_G^I} \bar qGIqˉRepresents the global rotation of the IMU frame, G p I {^G}p_IGpIis the position of the IMU in the global frame, G v I {^G} v_IGvIis the speed of the IMU in the global frame. For the sake of clarity, we usually write the time as a subscript describing the state of the IMU at that time (for example, GI tq ˉ = GI q ˉ ( t ) {_G^{I_t}} \bar q = {_G^I} \bar q( t)GItqˉ=GIqˉ( t ) ). To define the IMU error state, the standard additive error definition is used for position, velocity and bias, whereas we use multiplicative errors with left quaternions⊗ \otimesQuaternion error state of ⊗ δ q ˉ \delta \bar qdqˉ:

G I q ˉ = δ q ˉ ⊗ G I q ˉ ^ {_G^I} \bar q = \delta \bar q \otimes {_G^I} \hat{\bar q} GIqˉ=dqˉ⊗GIqˉ^δ q ˉ = [ k ^ sin ( 1 2 θ ~ ) cos ( 1 2 θ ~ ) ] ≈ [ 1 2 ^ θ ~ 1 ] \delta \bar q = \begin{bmatrix} \hat ksin({1\over 2} \tilde \theta) \\ cos({1\over 2}\tilde \theta) \\ \end{bmatrix} \approx \begin{bmatrix} \that {1\over 2} \tilde \theta\\ 1\\\end{bmatrix}dqˉ=[k^sin(21i~)cos(21i~)]≈[21^i~1]

wherek ^ \hat kk^ is the axis of rotation,θ ~ \tilde \thetai~ is the rotation angle. For small rotations, the error angle vector is approximatelyθ ~ = θ ~ k ^ \tilde \theta = \tilde \theta \hat ki~=i~k^ , as the error vector about the three directional axes. Therefore, the total IMU error state is defined as the following 15x1 (not 16x1) vector

: b ~ a ( t ) ] \tilde X_I(t) = \left[ \begin{array}{ccc} {_G^I} \tilde \theta(t) \\ {^G} \tilde p_I(t) \ \{^G} \tilde v_I(t)\\ \tilde b_g (t) \\ \tilde b_a(t)\\ \end{array} \right]X~I(t)=

GIi~(t)Gp~I(t)Gv~I(t)b~g(t)b~a(t)

2.3 IMU Kinematics

The IMU state evolves over time as follows ( see Indirect Kalman Filtering for 3D Pose Estimation ).

GI q ˉ ˙ ( t ) = 1 2 [ − ω ( t ) × ω ( t ) − ω ⊤ ( t ) 0 ] GI tq ˉ {_G^I} \dot{ \bar q}(t) = {1 \over 2} \left[ \begin{array}{ccc} -\omega(t)\times & \omega(t)\\ -\omega^\top(t) & 0 \\ \end{array} \ right] {_G^{I_t}} \bar qGIqˉ˙(t)=21[− ω ( t ) ×- Oh⊤(t)ω ( t )0]GItqˉ = : 1 2 Ω ( ω ( t ) ) G I t q ˉ =:{1\over 2}\Omega(\omega (t)){_G^{I_t}} \bar q =:21Ω ( ω ( t ))GItqˉ G p ˙ I ( t ) = G v I ( t ) {^G} \dot p_I(t) = {^G}v_I(t) Gp˙I(t)=GvI(t) G v ˙ I ( t ) = G I t R ⊤ a ( t ) {^G} \dot v_I(t) = {_G^{I_t}} R^{\top}a(t) Gv˙I(t)=GItR⊤a(t) b ˙ g ( t ) = n ω g \dot b_g(t) = n_{\omega g} b˙g(t)=nω g b ˙ a ( t ) = n ω a \dot b_a(t) = n_{\omega a} b˙a(t)=nωa

We model the gyroscope and accelerometer biases as random walks, so their time derivatives are Gaussian white noise (the Gaussian in Gaussian white noise refers to the fact that the probability distribution is a normal function, and white noise refers to its second moment Uncorrelated, the first-order moment is a constant, which refers to the correlation of successive signals in time.). Note that the above kinematics are defined in terms of real acceleration and angular velocity.

3. First-Estimate Jacobian Estimators

3.1 EKF Linearized Error State System

When developing an Extended Kalman Filter (EKF), the nonlinear motion and measurement models need to be linearized around a certain linearization point. This linearization is one source of error (in addition to model error and measurement noise) that leads to inaccurate estimation errors . Let us consider the following linearized error state visual-inertial system:

X ~ k ∣ k − 1 = Φ ( k . k − 1 ) = \Phi_{(kk-1)} \tilde X_{k-1|k-1} + G_kw_kX~k∣k−1=Phi(k.k−1)X~k−1∣k−1+Gkwk Z ~ k = H k X ~ k ∣ k − 1 + n k \tilde Z_k = H_k\tilde X_{k|k-1}+n_k Z~k=HkX~k∣k−1+nk

where the state consists of the inertial navigation state and individual environmental features (note that we do not include biases to simplify the derivation):

[ \begin{array}{ccc} {_G^{I_k}} \bar q^{\top} & {^G} p^{\top}_{I_k} & {^G} v^{\top} _{I_k} & {^G} p^{\top}_{f} \\ \end{array} \right]^\topXk=[GIkqˉ⊤GpIk⊤GvIk⊤Gpf⊤]⊤Note

that we use the left quaternion error state (see Indirect Kalman Filter for 3D Pose Estimation for details). For simplicity, we assume the same transformation of camera and IMU frames. We can use thekthThe perspective projection camera model of k time steps computes the Jacobian measure of a given feature as follows:

H k = H proj , k H state , k H_k = H_{proj,k}H_{state,k}Hk=Hp ro j , kHstate,k = [ 1 I Z 0 _ I x ( I z ) 2 0 1 I z _ I y ( I z ) 2 ] [ ⌊ G I k R ( G p f − G p I k ) × ⌋ − G I k R 0 3 × 3 G I K R ] =\left[ \begin{array}{ccc} {1\over I_Z} & 0& {

{^{\_I}}x \over ({^I}z)^2} \\ 0 & {1\over {^I}z} & {

{^{\_I}y}\over ({^I}z)^2}\\ \end{array} \right]\left[ \begin{array}{ccc} \lfloor {^{I_k}_G}R({^G}p_f-{^G}p_{I_k})\times \rfloor& -{^{I_k}_G}R & 0_{3\times3} & {^{I_K}_G}R\\ \end{array} \right] =[IZ100And z1(and z)2_Ix(and z)2_Iy][⌊GIkR(Gpf−GpIk)×⌋−GIkR03×3GIKR] = H p r o j , k G I k R [ ⌊ ( G p f − G p I k ) × ⌋ G I k R ⊤ − I 3 × 3 0 3 × 3 I 3 × 3 ] =H_{proj,k} {^{I_k}_G}R \left[ \begin{array}{ccc} \lfloor ({^G}p_f-{^G}p_{I_k})\times \rfloor {^{I_k}_G}R^{\top}& -I_{3\times3} & 0_{3\times3} & I_{3\times3} \\ \end{array} \right] =Hp ro j , kGIkR[⌊(Gpf−GpIk)×⌋GIkR⊤−I3×303×3I3×3]

from time stepk − 1 k-1k−1 tokkThe state transition (or system Jacobian matrix) of k is given as (see IMU propagation derivationfor more details):

Φ ( k , k − 1 ) = [ I k − 1 I k R 0 3 × 3 0 3 × 3 0 3 × 3 − GI k − 1 R ⊤ ⌊ α ( k , k − 1 ) × ⌋ I 3 × 3 ( tk − tk − 1 I 3 × 3 0 3 × 3 ) − GI k − 1 R ⊤ ⌊ β ( k , k − 1 ) × ⌋ 0 3 × 3 I 3 × 3 0 3 × 3 0 3 × 3 0 3 × 3 0 3 × 3 I 3 × 3 ] \Phi_{(k,k-1)} =\left[ \begin{array}{ccc} {^{I_k}_{I_{k-1}}R} & 0_{3 \times 3} & 0_{3 \times 3} & 0_{3 \times 3} \\ -{^{I_{k-1}}_G}R^{\top}\lfloor \alpha(k,k-1)\times \rfloor & I_{3 \times 3} & (t_k- t_{k-1}I_{3 \times 3} & 0_{3 \times 3})\\ -{^{I_{k-1}}_G}R^{\top}\lfloor \beta(k, k-1)\times \rfloor & 0_{3 \times 3} & I_{3 \times 3} & 0_{3\times 3} \\ 0_{3\times 3} & 0_{3\times 3} & 0_{3\times 3} & I_{3\times 3} \\ \end{array} \right]Phi(k,k−1)=

Ik−1IkR−GIk−1R⊤⌊α(k,k−1)×⌋−GIk−1R⊤⌊β(k,k−1)×⌋03×303×3I3×303×303×303×3(tk−tk−1I3×3I3×303×303×303×3)03×3I3×3

α ( k , k − 1 ) = ∫ t k − 1 k ∫ t k − 1 s τ I k − 1 R ( α ( τ ) − b a − w a ) d τ d s \alpha(k,k-1)=\int^k_{t_{k-1}}\int^s_{t_{k-1}} {^{I_{k-1}}_\tau}R(\alpha(\tau)-b_a-w_a)d\tau ds a ( k ,k−1)=∫tk−1k∫tk−1stIk−1R ( α ( τ )−ba−wa) d τ d s β ( k , k − 1 ) = ∫ tk − 1 tk τ I k − 1 R ( α ( τ ) − ba − wa ) d τ \beta(k,k-1)=\int^ {t_k}_{t_{k-1}}{^{I_{k-1}}_\tau}R(\alpha(\tau)-b_a-w_a)d\taub ( k ,k−1)=∫tk−1tktIk−1R ( α ( τ )−ba−wa)dτ

份位,α ( τ ) \alpha(\tau)α ( τ ) is the timeτ \tauThe true acceleration of τ , I k − 1 I k R {^{I_k}_{I_{k-1}}R}Ik−1IkR is calculated using gyroscope angular velocity measurements, andG g ^GgG gis the gravity in the global reference frame. During propagation, these integrals need to be solved using analytical or numerical integration, and we are interested here in how the state evolves in order to check its observability.

3.2 Linearized system observability

The observability matrix of this linearized system is defined by the following formula:

O = [ H 0 Φ ( 0 , 0 ) H 1 Φ ( 1 , 0 ) H 2 Φ ( 2 , 0 ) ⋮ H k Φ ( k , 0 ) ] \mathcal{O} = \left[ \begin{array}{ccc} H_0\Phi_{(0,0)} \\ H_1\Phi_{(1,0)} \\ H_2 \Phi_{(2,0)}\\ \vdots\\ H_k\Phi_{(k,0)} \\ \end{array} \right]O=

H0Phi(0,0)H1Phi(1,0)H2Phi(2,0)⋮HkPhi(k,0)

Among them, H k H_kHkis the time step kkJacobian measurement at k , Φ ( k , 0 ) \Phi_{(k,0)}Phi(k,0)is from time step 0 00 tokkComposite state transition of k (system Jacobian matrix). For a given block row of this matrix, we have:

H k Φ ( k , 0 ) = H proj , k GI k R [ Γ 1 Γ 2 Γ 3 Γ 4 ] H_k\Phi_{(k,0)} = H_ {proj,k} {^{I_k}_G}R \left[ \begin{array}{ccc} \Gamma_1 & \Gamma_2 & \Gamma_3 & \Gamma_4\\ \end{array} \right]HkPhi(k,0)=Hp ro j , kGIkR[C1C2C3C4]

Γ 1 = ⌊ ( G p f − G p I k + G I 0 R ⊤ α ( k , 0 ) ) × ⌋ G I 0 R ⊤ \Gamma_1= \lfloor(^Gp_f-^Gp_{I_k}+^{I_0}_GR^{\top}\alpha(k,0))\times\rfloor^{I_0}_GR^{\top} C1=⌊(Gpf−GpIk+GI0R⊤α(k,0))×⌋GI0R⊤ Γ 2 = − I 3 × 3 \Gamma_2 = -I_{3 \times 3} C2=−I3×3 Γ 3 = − ( t k − t 0 ) I 3 × 3 \Gamma_3 = -(t_k-t_0)I_{3 \times 3} C3=−(tk−t0)I3×3 Γ 4 = I 3 × 3 \Gamma_4 = I_{3 \times 3} C4=I3×3

We now verify the following null space, which corresponds to global yaw with respect to gravity and global IMU and feature positions:

N = [ GI 0 RG g 0 3 × 3 − ⌊ G p I 0 ⌋ G g I 3 × 3 − ⌊ G v I 0 ⌋ G g 0 3 × 3 − ⌊ G pf ⌋ G g I 3 × 3 ] \mathcal{N} = \left[ \begin{array}{ccc} ^{I_0}_GR{ {^G}

g } & 0_{3\times 3} \\ -\lfloor^Gp_{I_0}\rfloor ^Gg & I_{3\times 3} \\ -\lfloor^Gv_{I_0}\rfloor ^Gg & 0_{3\ times 3} \\ -\lfloor^Gp_{f}\rfloor ^Gg & I_{3\times 3} \\ \end{array} \right]N=

GI0RGg−⌊GpI0⌋Gg−⌊GvI0⌋Gg−⌊Gpf⌋Gg03×3I3×303×3I3×3

VerificationH k Φ ( k , 0 ) N vio = 0 H_k\Phi_{(k,0)} \mathcal{N}_{vio}=0HkPhi(k,0)Nvio=0 This is not difficult. Therefore, this is the null space of the system, which clearly shows that the visual-inertial system has four unobservable directions (global yaw and position).

3.3 First Estimate Jacobians

The main idea of the First-Estimate Jacobains (FEJ) method is to ensure that state transitions and Jacobian matrices are evaluated at the correct linearization points so that the above observability analysis holds. For those interested in the technical details, check out: 8 and 13 . Let's first consider a small thought experiment on how a standard Kalman filter computes its state transition matrix. from time step 0 00 to1 11 , which will use the current estimate moving forward from state zero. At the next time step after it updates the state with measurements from other sensors, it will use the updated values to calculate the state transition to evolve the state to time step 2. This results in a mismatch in the "continuity" of the state transition matrix, which when multiplied sequentially should represent the transition from time0 00 to time2 22 evolution.

Φ ( k + 1 , k − 1 ) ( xk + 1 ∣ k , xk − 1 ∣ k − 1 ) ≠ Φ ( k + 1 , k ) ( xk + 1 ∣ k , xk ∣ k ) Φ ( k , k − 1 ) ( xk ∣ k − 1 , xk − 1 ∣ k − 1 ) \Phi_{(k+1,k-1)}(x_{k+1|k},x_{k-1|k-1 }) \neq \Phi_{(k+1,k)}(x_{k+1|k},x_{k|k}) \Phi_{(k,k-1)}(x_{k|k- 1},x_{k-1|k-1})Phi(k+1,k−1)(xk+1∣k,xk−1∣k−1)=Phi(k+1,k)(xk+1∣k,xk∣k) F(k,k−1)(xk∣k−1,xk−1∣k−1)

As shown above, we want to calculate from allk − 1 k-1k−k − 1 k-1at time 1k−Measured value of 1 to current propagation timek + 1 k+1k+All kkat 1The state transition matrix of the measured values of k . The right-hand side of the above equation is how one typically does this in a Kalman filter framework. Φ ( k , k − 1 ) ( xk ∣ k − 1 , xk − 1 ∣ k − 1 ) \Phi_{(k,k-1)}(x_{k|k-1},x_{k-1| k-1})Phi(k,k−1)(xk∣k−1,xk−1∣k−1) corresponds to fromk − 1 k-1k−1 update time propagated tokkk time. Then, typicallykkthIt is updated k times, and then propagated from this updated state to the latest time (i.e., the state transition matrix). This is obviously related to the calculation from timek − 1 k-1k−1 tok + 1 k+1k+The state transition at time 1 is different because the second state transition is evaluated at a different linearization point! To solve this problem, we can change the linearization point at which we evaluate these:

Φ ( k + 1 , k − 1 ) ( ( xk + 1 ∣ k , xk ∣ k − 1 ) Φ ( k , k − 1 ) ( xk ∣ k − 1 , xk − 1 ∣ k − 1 ) \Phi_{(k+1,k-1)}( x_{k+1|k},x_{k-1|k-1}) = \Phi_{(k+1,k)}(x_{k+1|k},x_{k|k-1} ) \Phi_{(k,k-1)}(x_{k|k-1},x_{k-1|k-1})Phi(k+1,k−1)(xk+1∣k,xk−1∣k−1)=Phi(k+1,k)(xk+1∣k,xk∣k−1) F(k,k−1)(xk∣k−1,xk−1∣k−1)

We also need to make sure that our measured Jacobian matches the linearization point of the state transition matrix. Therefore, they also need to be inxk ∣ k − 1 x_{k|k-1}xk∣k−1The linearization point is evaluated instead of the usual xk ∣ k x_{k|k}xk∣klinearization point. This approach is called FEJ because we will evaluate the Jacobian matrix at the same linearization point to ensure that the null space remains valid.