【Knowledge Points】

Data reading and preprocessing / 2 √ Code: two normalization methods; cropping, rotation, and flipping; a complete center-cropping example

Normalization (two methods):

【Data augmentation】

About torchvision.transforms and Compose

from torchvision import transforms -> imports the transforms toolbox, which defines many transform classes

About Compose:

Function description: torchvision.transforms is the image preprocessing package in PyTorch. Compose is generally used to chain multiple preprocessing steps together. The available transforms are listed in the torchvision documentation.

The Python Imaging Library (PIL) is a third-party image processing library for Python, today distributed as the Pillow fork. Because it is powerful and has a large user base, it is widely treated as Python's de facto standard image processing library.

Use of transforms.Compose(): torchvision is the computer vision library that accompanies the PyTorch deep learning framework and is mainly used to build computer vision models. torchvision.transforms provides the common image transformations.

The composition of torchvision is as follows:

torchvision.datasets: functions for loading data and interfaces to commonly used datasets;

torchvision.models: commonly used model architectures (including pre-trained models) such as AlexNet, VGG, and ResNet;

torchvision.transforms: common image transformations, such as cropping and rotation;

torchvision.utils: other useful utilities.

Some common operations are listed below.

class torchvision.transforms.CenterCrop(size): crops the given PIL.Image at its center to the given size. size can be a tuple (target_height, target_width) or an integer, in which case the crop is a square.

class torchvision.transforms.RandomCrop(size, padding=0): crops the image at a randomly chosen position. size can be a tuple or an integer.

class torchvision.transforms.RandomHorizontalFlip: flips the given PIL.Image horizontally with probability 0.5, that is, the image is flipped half of the time and left unchanged the other half.

class torchvision.transforms.RandomSizedCrop(size, interpolation=2): first crops a random region of the given PIL.Image, then resizes the crop to the given size. RandomSizedCrop is the older name of RandomResizedCrop.

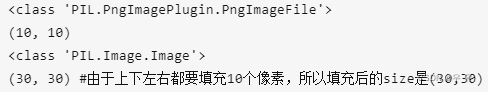

class torchvision.transforms.Pad(padding, fill=0): pads all edges of the given PIL.Image with the given fill value.

padding: how many pixels to add on each side

fill: the value to fill the new pixels with
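The crop operations listed above differ only in where the crop window is placed. The sketch below works on a nested list instead of a PIL image so that the arithmetic is visible; the helper names `center_crop_box`, `random_crop_box`, and `crop` are made up for illustration and are not torchvision functions, and the rounding rule is an assumption about how a centered window is usually computed.

```python
import random

def center_crop_box(img_h, img_w, crop_h, crop_w):
    """Return (top, left, height, width) of a window centered in the image."""
    top = int(round((img_h - crop_h) / 2.0))
    left = int(round((img_w - crop_w) / 2.0))
    return top, left, crop_h, crop_w

def random_crop_box(img_h, img_w, crop_h, crop_w, rng=random):
    """Return (top, left, height, width) of a window at a random position."""
    top = rng.randint(0, img_h - crop_h)    # randint is inclusive on both ends
    left = rng.randint(0, img_w - crop_w)
    return top, left, crop_h, crop_w

def crop(pixels, box):
    """Cut the window described by box out of a 2D list of pixels."""
    top, left, h, w = box
    return [row[left:left + w] for row in pixels[top:top + h]]

# A 4x6 "image" whose pixel value encodes its (row, column) position.
image = [[10 * r + c for c in range(6)] for r in range(4)]

center = crop(image, center_crop_box(4, 6, 2, 2))
print(center)  # → [[12, 13], [22, 23]]

# A random 2x3 window; the content changes with the seed, the shape does not.
rand = crop(image, random_crop_box(4, 6, 2, 3, random.Random(0)))
print(len(rand), len(rand[0]))  # → 2 3
```

The center crop always returns the same window, which is why it suits validation, while the random crop returns a different window on each call and therefore acts as augmentation.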

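Example 1:

    from torchvision import transforms
    from PIL import Image

    # Pad 10 pixels on every side, filling the new pixels with 0 (black)
    pad = transforms.Pad(padding=10, fill=0)

    img = Image.open('test.jpg')
    print(type(img))
    print(img.size)          # (width, height) of the original image

    padded_img = pad(img)
    print(type(padded_img))
    print(padded_img.size)   # each dimension grows by 2 * 10 pixels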
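The size change caused by padding can be checked without an image file. This is a minimal sketch on a nested list; `pad_image` is a hypothetical helper written for these notes, not part of torchvision or PIL.

```python
def pad_image(pixels, padding, fill=0):
    """Add `padding` pixels of value `fill` on every side of a 2D list."""
    width = len(pixels[0]) + 2 * padding
    border = [[fill] * width for _ in range(padding)]
    body = [[fill] * padding + list(row) + [fill] * padding for row in pixels]
    # Copy the border rows for the bottom so top and bottom do not share lists.
    return border + body + [row[:] for row in border]

image = [[1, 2, 3],
         [4, 5, 6]]            # height 2, width 3

padded = pad_image(image, padding=1, fill=0)
for row in padded:
    print(row)
print(len(padded[0]), len(padded))  # width, height → 5 4
```

With padding=10, as in the example above, both the width and the height would grow by 20 pixels.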

The main job of the Compose() class is to chain multiple image transformations: it iterates over the transforms in its list and applies them in order. The argument to Compose is a list whose elements are the transform operations you want to perform.

A concrete example follows, then the same steps run one at a time in Jupyter (with the image produced at each step):
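The "traverse the list and apply each transform" behaviour can be sketched in a few lines. `SimpleCompose` below is a hypothetical stand-in written for illustration, not the torchvision source, and the toy transforms operate on a list of numbers rather than an image.

```python
class SimpleCompose:
    """Illustrative stand-in for transforms.Compose: apply callables in order."""

    def __init__(self, transforms):
        self.transforms = list(transforms)

    def __call__(self, x):
        # Feed the output of each transform into the next one.
        for t in self.transforms:
            x = t(x)
        return x

# Toy "transforms" on a list of numbers instead of an image.
def scale_to_unit(values):
    return [v / 255 for v in values]

def shift(values):
    return [v - 0.5 for v in values]

pipeline = SimpleCompose([scale_to_unit, shift])
print(pipeline([0, 255]))  # → [-0.5, 0.5]
```

Order matters: swapping the two steps would subtract 0.5 before scaling and give a different result, which is why ToTensor must come before Normalize in a real pipeline.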

    transforms.Compose([
        transforms.RandomResizedCrop(224),
        transforms.RandomHorizontalFlip(),
        transforms.ToTensor(),
        transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
    ])

transforms.RandomResizedCrop(224) crops a random region of the given image with a random size and aspect ratio, then resizes the crop to the specified size (that is, crop at random first, then scale every crop to the same size). The default is scale=(0.08, 1.0). The idea behind this operation is that even a part of an object should still be recognized as an object of that class.

transforms.RandomHorizontalFlip() flips the given PIL image horizontally with the given probability (default 0.5).

transforms.ToTensor() converts the given image to a Tensor; for an 8-bit image the values are scaled to the range [0.0, 1.0].

transforms.Normalize() normalizes each channel using the given mean and standard deviation.
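Normalize computes (value - mean) / std separately for each channel. The sketch below reproduces that arithmetic in plain Python for a single RGB pixel, using the two mean/std settings that appear in these notes: the commonly cited ImageNet statistics from the Compose example, and mean = std = 0.5 from the step-by-step code. The helper names are hypothetical, and the division by 255 assumes an 8-bit input image.

```python
def to_unit_range(pixel):
    """Scale an 8-bit value from [0, 255] to [0.0, 1.0], as ToTensor does."""
    return pixel / 255.0

def normalize(pixel_rgb, mean, std):
    """Per-channel normalization: (value - mean) / std."""
    return [(to_unit_range(p) - m) / s for p, m, s in zip(pixel_rgb, mean, std)]

black, white = [0, 0, 0], [255, 255, 255]

# mean = std = 0.5 maps the range [0, 1] onto [-1, 1].
half = [0.5, 0.5, 0.5]
print(normalize(black, half, half))  # → [-1.0, -1.0, -1.0]
print(normalize(white, half, half))  # → [1.0, 1.0, 1.0]

# ImageNet statistics give a different range for each channel.
imagenet_mean = [0.485, 0.456, 0.406]
imagenet_std = [0.229, 0.224, 0.225]
print(normalize(white, imagenet_mean, imagenet_std))
```

The ImageNet values are usually chosen when the model was pre-trained on ImageNet, so that new inputs follow the same distribution the weights were trained on.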

    import matplotlib.pyplot as plt
    from PIL import Image
    from torchvision import transforms

    # Operation 1: RandomResizedCrop
    img = Image.open("./demo.jpg")
    print("Original size:", img.size)
    data1 = transforms.RandomResizedCrop(224)(img)
    print("Size after random crop:", data1.size)
    data2 = transforms.RandomResizedCrop(224)(img)
    data3 = transforms.RandomResizedCrop(224)(img)

    plt.subplot(2, 2, 1), plt.imshow(img), plt.title("Original")
    plt.subplot(2, 2, 2), plt.imshow(data1), plt.title("Transformed 1")
    plt.subplot(2, 2, 3), plt.imshow(data2), plt.title("Transformed 2")
    plt.subplot(2, 2, 4), plt.imshow(data3), plt.title("Transformed 3")
    plt.show()

    # Operation 2: RandomHorizontalFlip
    img = Image.open("./demo.jpg")
    img1 = transforms.RandomHorizontalFlip()(img)
    img2 = transforms.RandomHorizontalFlip()(img)
    img3 = transforms.RandomHorizontalFlip()(img)

    plt.subplot(2, 2, 1), plt.imshow(img), plt.title("Original")
    plt.subplot(2, 2, 2), plt.imshow(img1), plt.title("Transformed 1")
    plt.subplot(2, 2, 3), plt.imshow(img2), plt.title("Transformed 2")
    plt.subplot(2, 2, 4), plt.imshow(img3), plt.title("Transformed 3")
    plt.show()

    # Operations 3 and 4: ToTensor, then Normalize
    img = Image.open("./demo.jpg")
    img = transforms.ToTensor()(img)
    img = transforms.Normalize(mean=[0.5, 0.5, 0.5], std=[0.5, 0.5, 0.5])(img)
    print(img)

plt.subplot(m, n, p) lays out a grid of m rows and n columns and selects the p-th subplot, that is, plt.subplot(rows, columns, index).


Some thoughts on segmentation: When doing medical image processing based on deep learning, most of the work is concentrated in data preprocessing: (1) understanding the formats and characteristics of medical images in depth; (2) designing suitable preprocessing operations to enhance the target features; (3) converting the raw data into a format suitable as input to deep learning models. These three points are also the three hardest parts of moving from natural image processing to medical image processing.

The choice of model makes comparatively little difference to deep-learning-based medical image segmentation. In other words, a network that performs well on natural image segmentation can be transferred directly to medical images (and vice versa), although how well it works can only be judged after training. Well-known examples include FCN, SegNet, and U-Net; U-Net in particular was originally published at MICCAI. Of course, to design and train a model efficiently, you still need to tailor the network to the characteristics of the data (such as the size range of the objects to be segmented, or whether the data is 2D or 3D). AutoDL techniques achieved outstanding results in 2019 (see Neural Architecture Search: A Survey), so hand-designing network architectures will probably be a difficult route to new results in the future.

Medical imaging datasets:

Challenges - Grand Challenge (grand-challenge.org)

Introduction - Grand Challenge (grand-challenge.org)

【Code】

To read:

Flexible loading of partial weights in PyTorch

Reading data from a custom dataset in PyTorch: describes how to divide your own dataset into a training set and a validation set, and how to read the data and pack it into batches through a custom dataset.

Run through your first PyTorch neural network step by step

How to train on your own image dataset with PyTorch

Build your own dataset using Dataset and DataLoader in PyTorch

Read a CSV data file, wrap it in a dataset, and batch the data
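As a reminder of what the train/validation split and batching in the articles above amount to, here is a minimal sketch in plain Python. It is a generic illustration, not code from any of the listed articles: the file names and the helpers `split_train_val` and `batches` are hypothetical, and in a real project the batching would normally be handled by PyTorch's Dataset and DataLoader.

```python
import random

def split_train_val(items, val_ratio=0.2, seed=0):
    """Shuffle with a fixed seed, then split into (train, val) lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_val = int(len(items) * val_ratio)
    return items[n_val:], items[:n_val]

def batches(items, batch_size):
    """Yield consecutive batches; the last one may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

paths = [f"img_{i:03d}.png" for i in range(10)]   # hypothetical file names
train, val = split_train_val(paths, val_ratio=0.2)
print(len(train), len(val))  # → 8 2

for batch in batches(train, batch_size=3):
    print(batch)
```

Fixing the seed keeps the split reproducible, so the validation set stays the same between runs.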

Personal records:

Split Pipeline