This article uses Paddle as an example; the PyTorch workflow is similar.

Five years ago, I called this technique for keeping parameters from growing too large "regularization"; now everyone calls it weight decay, haha.

I'm a bit behind the times. I assumed weight decay meant multiplying the weights by a factor such as 0.99999 at every iteration and letting them shrink (I figured that would make them decay far too fast, so surely that wasn't it).

In fact, it is the same thing as regularization.

Both add an L1 or L2 norm penalty on the parameters to the loss, and the sum is then optimized as the loss function. For L2 the penalty is:

$$\text{loss} \mathrel{+}= 0.5 \cdot \text{coeff} \cdot \text{reduce\_sum}(\text{square}(x))$$

where $\text{coeff}$ is the weight decay coefficient, also called the regularization coefficient.
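For plain SGD the two pictures actually coincide. The gradient of 0.5 * coeff * sum(x^2) with respect to x is coeff * x, so one SGD step with learning rate lr becomes x <- x - lr * (g + coeff * x) = (1 - lr * coeff) * x - lr * g: shrink the weights by the factor (1 - lr * coeff), then take the ordinary step on the loss gradient g. The plain-Python sketch below checks this numerically with made-up numbers (no Paddle involved; the function names are hypothetical). The equivalence is specific to vanilla SGD; with momentum or adaptive optimizers such as Adam, adding the penalty to the gradient and shrinking the weights directly generally give different updates.

```python
def sgd_step_with_l2(w, g, lr, coeff):
    """Weight decay as an L2 penalty: its gradient coeff * w is added to g."""
    return [wi - lr * (gi + coeff * wi) for wi, gi in zip(w, g)]


def sgd_step_with_shrink(w, g, lr, coeff):
    """Weight decay as shrinking: scale w by (1 - lr * coeff), then step on g."""
    return [(1 - lr * coeff) * wi - lr * gi for wi, gi in zip(w, g)]


w = [0.8, -1.5, 0.3]   # made-up weights
g = [0.2, 0.1, -0.4]   # made-up loss gradient (penalty not included)
lr, coeff = 0.1, 0.1

a = sgd_step_with_l2(w, g, lr, coeff)
b = sgd_step_with_shrink(w, g, lr, coeff)
print(a)
print(b)
print("shrink factor per step:", 1 - lr * coeff)  # about 0.99 for these values
```

With lr = 0.1 and coeff = 0.1 the per-step factor is about 0.99, which is why a tiny coefficient still has a noticeable effect over many iterations.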

Let's run a small experiment with the `L2Decay` API:

```python
import paddle
from paddle.regularizer import L2Decay

paddle.seed(1107)

# Bias-free linear layer; its weight has shape [in_features, out_features] = [3, 4]
linear = paddle.nn.Linear(3, 4, bias_attr=False)
old_linear_weight = linear.weight.detach().clone()  # snapshot before the update
# print(old_linear_weight.mean())

inp = paddle.rand(shape=[2, 3], dtype="float32")
out = linear(inp)

coeff = 0.1
loss = paddle.mean(out)
# loss += 0.5 * coeff * (linear.weight ** 2).sum()  # manual penalty, disabled here

# Let the optimizer apply the L2 penalty (weight decay) with coefficient coeff
momentum = paddle.optimizer.Momentum(
    learning_rate=0.1,
    parameters=linear.parameters(),
    weight_decay=L2Decay(coeff)
)

loss.backward()
momentum.step()

delta_weight = linear.weight - old_linear_weight
# print(linear.weight.mean())
# print( - delta_weight / linear.weight.grad )  # learning rate
print(delta_weight)

momentum.clear_grad()
```

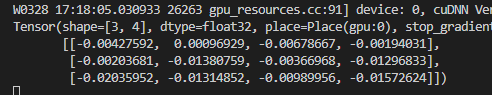

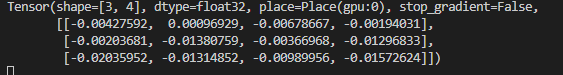

Print the results:
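Where do these numbers come from? Paddle's (non-Nesterov) `Momentum` updates velocity = momentum * velocity + gradient and param = param - learning_rate * velocity, and with `L2Decay(coeff)` the gradient it uses is the loss gradient plus coeff * param. On the very first step the velocity starts at zero, so delta_weight = -learning_rate * (grad + coeff * weight). For loss = mean(inp @ W) with inp of shape [2, 3] and W of shape [3, 4], every entry in row i of grad equals the sum of column i of inp divided by the 8 averaged outputs. The plain-Python sketch below computes this by hand; the inputs and weights are made-up stand-ins, not the values produced by `paddle.seed(1107)`, so it reproduces the formula rather than the exact printout, and the helper name is hypothetical.

```python
def first_momentum_step_delta(x, W, lr, coeff):
    """First-step weight change for loss = mean(x @ W) under Momentum + L2 decay.

    Assumes the velocity starts at zero, so delta = -lr * (grad + coeff * W).
    x: batch x in_features (list of rows); W: in_features x out_features.
    """
    batch, in_f, out_f = len(x), len(W), len(W[0])
    n_out = batch * out_f  # number of elements averaged by mean()
    delta = []
    for i in range(in_f):
        col_sum = sum(x[b][i] for b in range(batch))
        grad = col_sum / n_out  # d mean(x @ W) / d W[i][j], same for every j
        delta.append([-lr * (grad + coeff * W[i][j]) for j in range(out_f)])
    return delta


# Made-up stand-ins for inp (shape [2, 3]) and linear.weight (shape [3, 4])
x = [[0.2, 0.4, 0.6],
     [0.8, 0.0, 0.2]]
W = [[0.1, -0.2, 0.3, 0.0],
     [0.5, 0.4, -0.1, 0.2],
     [-0.3, 0.0, 0.1, 0.6]]

d = first_momentum_step_delta(x, W, lr=0.1, coeff=0.1)
for row in d:
    print(row)
```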

Next, skip the `L2Decay` API and add the penalty on the weight's squared L2 norm to the loss by hand:

```python
import paddle
from paddle.regularizer import L2Decay

paddle.seed(1107)

# Same layer, same seed, same input as before
linear = paddle.nn.Linear(3, 4, bias_attr=False)
old_linear_weight = linear.weight.detach().clone()  # snapshot before the update
# print(old_linear_weight.mean())

inp = paddle.rand(shape=[2, 3], dtype="float32")
out = linear(inp)

coeff = 0.1
loss = paddle.mean(out)
# Add the L2 penalty to the loss manually instead of using L2Decay
loss += 0.5 * coeff * (linear.weight ** 2).sum()

momentum = paddle.optimizer.Momentum(
    learning_rate=0.1,
    parameters=linear.parameters(),
    # weight_decay=L2Decay(coeff)
)

loss.backward()
momentum.step()

delta_weight = linear.weight - old_linear_weight
# print(linear.weight.mean())
# print( - delta_weight / linear.weight.grad )  # learning rate
print(delta_weight)

momentum.clear_grad()
```
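The two scripts should print the same `delta_weight` up to floating-point rounding: backpropagating through `0.5 * coeff * (linear.weight ** 2).sum()` adds coeff * weight to each gradient entry, which is the same term `L2Decay(coeff)` adds inside the optimizer. The plain-Python sketch below checks that derivative with central finite differences on made-up weights; it does not use Paddle, and the helper names are hypothetical.

```python
def penalty(w, coeff):
    """The hand-written penalty from the script: 0.5 * coeff * sum(w ** 2)."""
    return 0.5 * coeff * sum(v * v for v in w)


def numeric_grad(f, w, eps=1e-6):
    """Central finite differences: (f(w + eps*e_i) - f(w - eps*e_i)) / (2*eps)."""
    grads = []
    for i in range(len(w)):
        plus = list(w)
        plus[i] += eps
        minus = list(w)
        minus[i] -= eps
        grads.append((f(plus) - f(minus)) / (2 * eps))
    return grads


coeff = 0.1
w = [0.3, -1.2, 2.5, 0.0]  # made-up flattened weights
numeric = numeric_grad(lambda v: penalty(v, coeff), w)
analytic = [coeff * v for v in w]  # the term L2Decay(coeff) adds to each gradient entry
for n, a in zip(numeric, analytic):
    print(f"{n:.8f}  {a:.8f}")
```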

API reference address:

In Paddle, if a regularizer is set for a trainable parameter through its `ParamAttr`, the regularization configured in the `optimizer` is ignored for that parameter:

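```python
import paddle
from paddle import ParamAttr
from paddle.nn import Conv2D
from paddle.regularizer import L2Decay

my_conv2d = Conv2D(
    in_channels=10,
    out_channels=10,
    kernel_size=1,
    stride=1,
    padding=0,
    weight_attr=ParamAttr(regularizer=L2Decay(coeff=0.01)),  # <----- this takes priority
    bias_attr=False)

# The optimizer-level regularizer below is ignored for my_conv2d.weight,
# because that parameter already has its own regularizer in ParamAttr
sgd = paddle.optimizer.SGD(
    learning_rate=0.1,
    parameters=my_conv2d.parameters(),
    weight_decay=L2Decay(coeff=0.1))
```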
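The priority rule can be modeled in a few lines. This is a hypothetical plain-Python sketch of the behavior described above, not Paddle's implementation: each parameter either carries its own L2 coefficient (standing in for `ParamAttr(regularizer=L2Decay(...))`) or `None`, and the optimizer-level coefficient (standing in for the optimizer's `weight_decay`) is used only for parameters that have none.

```python
from typing import Dict, Optional


def effective_l2_coeffs(param_coeffs: Dict[str, Optional[float]],
                        optimizer_coeff: Optional[float]) -> Dict[str, float]:
    """Per-parameter L2 coefficient actually applied (hypothetical model).

    A coefficient attached to the parameter itself wins; otherwise fall back
    to the optimizer's coefficient; with neither, no decay is applied (0.0).
    """
    result = {}
    for name, own in param_coeffs.items():
        if own is not None:
            result[name] = own
        elif optimizer_coeff is not None:
            result[name] = optimizer_coeff
        else:
            result[name] = 0.0
    return result


# Hypothetical setup: the conv weight has its own L2Decay(0.01), another
# parameter has none, and the optimizer is given L2Decay(0.1).
coeffs = effective_l2_coeffs({"conv.weight": 0.01, "fc.weight": None},
                             optimizer_coeff=0.1)
print(coeffs)
```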