practice03:

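1. Problem description:

Classify the gray and white regions of a plane using a neural network with only one hidden layer. In the code below, the first class is the interior of the square |x| < 1, |y| < 1, and the second class is everything else, including the boundary.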

2. Complete code:

# Solution and verification code may be provided; generate the data yourself

import torch
import torch.nn as nn
import torch.nn.functional as F
from torch import tensor
from torch.nn import Parameter

class Act_abs(nn.Module):
    def __init__(self):
        super(Act_abs, self).__init__()

    def forward(self, x):
        x[abs(x) >= 1] = 1
        x[abs(x) < 1] = 0
        return x

class Act_v(nn.Module):
    def __init__(self):
        super(Act_v, self).__init__()

    def forward(self, x):
        x[x < 1] = 0
        x[x >= 1] = 1
        return x

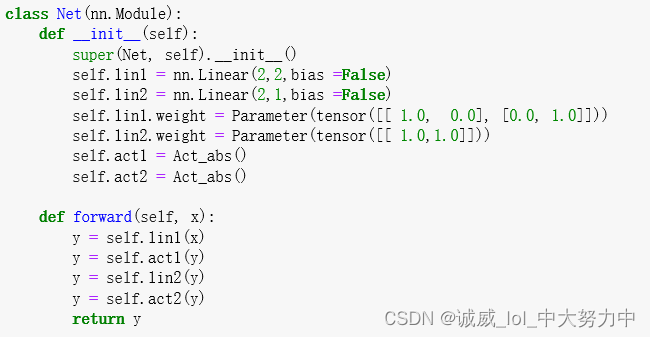

class Net(nn.Module):
    def __init__(self):
        super(Net, self).__init__()
        self.lin1 = nn.Linear(2, 2, bias=False)
        self.lin2 = nn.Linear(2, 1, bias=False)
        self.lin1.weight = Parameter(tensor([[1.0, 0.0], [0.0, 1.0]]))
        self.lin2.weight = Parameter(tensor([[1.0, 1.0]]))
        self.act1 = Act_abs()
        self.act2 = Act_abs()

    def forward(self, x):
        y = self.lin1(x)
        y = self.act1(y)
        y = self.lin2(y)
        y = self.act2(y)
        return y

if __name__ == '__main__':
    net = Net()

    # Class 1
    dotIn = [tensor([0.0, 0.0]), tensor([0.2, 0.2]), tensor([-0.2, -0.2]), tensor([0.2, -0.2]), tensor([-0.2, 0.2])]
    # Boundary points (class 2)
    dotEdge = [tensor([1.0, 1.0]), tensor([-1.0, 1.0]), tensor([1.0, -1.0]), tensor([-1.0, -1.0]), tensor([1.0, 0.2]), tensor([-1.0, 0.2]), tensor([1.0, -0.2]), tensor([-1.0, -0.2])]
    # Class 2
    dotOut = [tensor([1.5, 0.2]), tensor([-1.5, 0.2]), tensor([1.5, -0.2]), tensor([-1.5, -0.2])]

    print('----------Interior points--------------')
    for x in dotIn:
        pre = net(x)
        if pre[0] == 0.0:
            print('Class 1')
        else:
            print('Class 2')

    print('----------Boundary points--------------')
    for x in dotEdge:
        pre = net(x)
        if pre[0] == 0.0:
            print('Class 1')
        else:
            print('Class 2')

    print('----------Exterior points--------------')
    for x in dotOut:
        pre = net(x)
        if pre[0] == 0.0:
            print('Class 1')
        else:
            print('Class 2')

    print('-----------------Generating random data------------------')
    acc = 0
    rand_num = 10**4
    for i in range(rand_num):
        X = (torch.rand(2) - 0.5) * 4  # uniform in [-2, 2)
        # Boundary points count as class 2, matching the >= threshold in Act_abs
        if abs(X[0]) >= 1 or abs(X[1]) >= 1:
            y = tensor([1])
        else:
            y = tensor([0])
        pre = net(X)
        if pre == y:
            acc += 1
    print('Recognition accuracy Acc: ' + str((acc / rand_num) * 100) + '%')

3. Code analysis process:

(1)

This defines an Act_abs class that inherits from nn.Module. Its forward method serves as the activation function: it takes a tensor x and returns 1 wherever the absolute value of x is greater than or equal to 1, and 0 otherwise.
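One detail worth noting is that the index assignments in forward overwrite the tensor that is passed in. The sketch below contrasts that behavior with a non-mutating variant. The names `act_abs_inplace` and `act_abs_pure` are hypothetical helpers introduced only for this illustration; they are not part of the original code.

```python
import torch

def act_abs_inplace(x):
    # Same statements as Act_abs.forward: modifies x itself.
    x[abs(x) >= 1] = 1
    x[abs(x) < 1] = 0
    return x

def act_abs_pure(x):
    # Assumed alternative: build a new 0/1 tensor and leave x untouched.
    return (x.abs() >= 1).to(x.dtype)

a = torch.tensor([-1.5, 0.5, 1.0])
b = a.clone()

out_inplace = act_abs_inplace(a)  # a is overwritten with 0/1 values
out_pure = act_abs_pure(b)        # b keeps its original values

print(out_inplace, a)
print(out_pure, b)
```

In Net this does not matter much, because the activation receives the fresh output of a linear layer rather than the caller's input tensor.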

(2)

This defines an Act_v class, which also inherits from nn.Module and is basically the same as the class above. The only difference is that it compares the signed value of x rather than its absolute value: the output is 1 only where x itself is greater than or equal to 1. Net does not use this class.
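The difference between the two activations only shows up for negative inputs. A minimal sketch, using non-mutating stand-ins (`act_abs` and `act_v` are names assumed here for illustration, not the original classes):

```python
import torch

def act_abs(x):
    # 1 where |x| >= 1, else 0 (stand-in for Act_abs)
    return (x.abs() >= 1).to(x.dtype)

def act_v(x):
    # 1 where x >= 1, else 0 (stand-in for Act_v)
    return (x >= 1).to(x.dtype)

x = torch.tensor([-2.0, -1.0, 0.5, 1.0, 2.0])
abs_out = act_abs(x)
v_out = act_v(x)

print(abs_out.tolist())  # [1.0, 1.0, 0.0, 1.0, 1.0]
print(v_out.tolist())    # [0.0, 0.0, 0.0, 1.0, 1.0]
```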

(3)

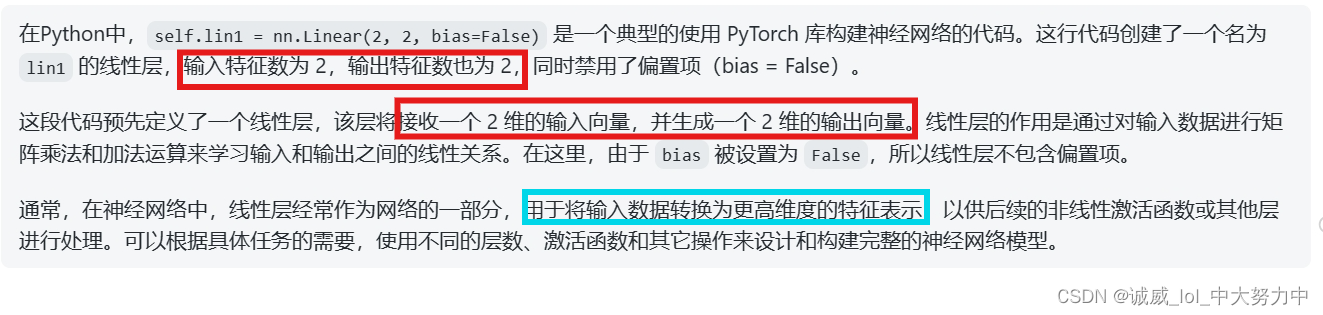

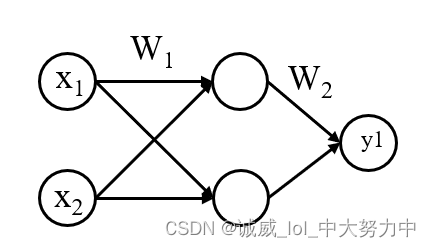

This defines the structure of the neural network:

A Linear layer converts an input vector into an output vector by multiplying it with a weight matrix (the bias is disabled here).

Two linear layers are defined, and their weights are then set by hand: lin1 gets the 2×2 identity matrix and lin2 gets [[1.0, 1.0]].

Two identical Act_abs activation layers are used, one after each linear layer.

The final neural network structure is as follows: input (2 values) → lin1 → act1 → lin2 → act2 → output (1 value).
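The same forward pass can be written out with explicit matrix products. This is a sketch under the assumption that a non-mutating threshold (`step_abs`, a hypothetical helper) stands in for Act_abs; `W1` and `W2` copy the weights assigned to lin1 and lin2.

```python
import torch

W1 = torch.tensor([[1.0, 0.0], [0.0, 1.0]])  # weight of lin1 (identity)
W2 = torch.tensor([[1.0, 1.0]])              # weight of lin2 (sums both hidden units)

def step_abs(t):
    # 1 where |t| >= 1, else 0
    return (t.abs() >= 1).to(t.dtype)

def forward(x):
    h = step_abs(x @ W1.T)     # a bias-free nn.Linear computes x @ W.T
    return step_abs(h @ W2.T)

# Check that a bias-free nn.Linear really is x @ W.T
lin = torch.nn.Linear(2, 2, bias=False)
probe = torch.tensor([0.3, -1.2])
same = torch.allclose(lin(probe), probe @ lin.weight.T)

inside = forward(torch.tensor([0.2, -0.2]))
edge = forward(torch.tensor([-1.0, -1.0]))
outside = forward(torch.tensor([1.5, 0.2]))
print(same, inside.item(), edge.item(), outside.item())
```

Since lin1 is the identity, the hidden layer simply thresholds each coordinate, and lin2 adds the two results before the final threshold.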

Because this is a binary classification problem, the activation function behaves like a boolean function that outputs either 0 or 1. The absolute value is used because the square spans all four quadrants, so the sign of each coordinate has to be ignored when judging whether a point is inside or outside the square.
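To make the quadrant argument concrete, the plain-Python sketch below applies both kinds of threshold to the four mirrored exterior points from dotOut. `hidden_abs` and `hidden_signed` are hypothetical helper names that mimic what the hidden layer would output with Act_abs and with Act_v, respectively.

```python
def hidden_abs(x, y):
    # Hidden-layer output with an absolute-value threshold
    return (int(abs(x) >= 1), int(abs(y) >= 1))

def hidden_signed(x, y):
    # Hidden-layer output with a signed threshold
    return (int(x >= 1), int(y >= 1))

# The same exterior point mirrored into all four quadrants
mirrored = [(1.5, 0.2), (-1.5, 0.2), (1.5, -0.2), (-1.5, -0.2)]

abs_codes = [hidden_abs(x, y) for x, y in mirrored]
signed_codes = [hidden_signed(x, y) for x, y in mirrored]

print(abs_codes)     # [(1, 0), (1, 0), (1, 0), (1, 0)]
print(signed_codes)  # [(1, 0), (0, 0), (1, 0), (0, 0)]
```

With the signed threshold, the points with x = -1.5 look identical to interior points, which is why the absolute value is needed.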

Alright. I finally realized that I had been a fool.

In fact, there is no such thing as train and test here, and there is no gradient descent or anything like that either, because the parameters are never adjusted at all.
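One way to see why gradient descent would have nothing to work with anyway: a hard 0/1 threshold is flat almost everywhere. The sketch below assumes the threshold is written as a comparison followed by a cast (not the in-place indexing used in Act_abs); in that form the output is not even attached to the autograd graph. `W1` here is an illustrative copy of the lin1 weight.

```python
import torch

W1 = torch.tensor([[1.0, 0.0], [0.0, 1.0]], requires_grad=True)
x = torch.tensor([1.5, 0.2])

pre_activation = x @ W1.T                       # still differentiable w.r.t. W1
out = (pre_activation.abs() >= 1).float()       # hard threshold as a comparison

print(pre_activation.requires_grad)  # True
print(out.requires_grad)             # False
print(out.grad_fn)                   # None
```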

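If you look carefully at this structure, you will find that it is equivalent to the following in C++:

if (abs(x) >= 1 || abs(y) >= 1) return 1; else return 0;

The output layer sums the two hidden units and thresholds the sum at 1, so a single coordinate with absolute value of at least 1 is enough to give 1. So I feel like this exercise was playing a trick on me.

Maybe it is just meant as practice for imitating a "real" deep learning model in the future.

Anyway, it's easy here.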
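As a sanity check on that equivalence, tracing the two threshold layers by hand gives a logical OR of the two coordinate tests. The plain-Python sketch below compares both forms on a small grid; `two_layer` and `one_condition` are hypothetical names used only for this check.

```python
def two_layer(x, y):
    # Hidden layer: identity weights followed by the |.| >= 1 threshold
    h1 = 1 if abs(x) >= 1 else 0
    h2 = 1 if abs(y) >= 1 else 0
    # Output layer: weights [1, 1] followed by the same threshold
    s = 1 * h1 + 1 * h2
    return 1 if abs(s) >= 1 else 0

def one_condition(x, y):
    # The whole network collapsed into a single condition
    return 1 if abs(x) >= 1 or abs(y) >= 1 else 0

grid = [i / 4 for i in range(-8, 9)]  # -2.0, -1.75, ..., 2.0
mismatches = [(x, y) for x in grid for y in grid
              if two_layer(x, y) != one_condition(x, y)]

print(len(grid) ** 2, "points checked,", len(mismatches), "mismatches")
```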