1. Overview

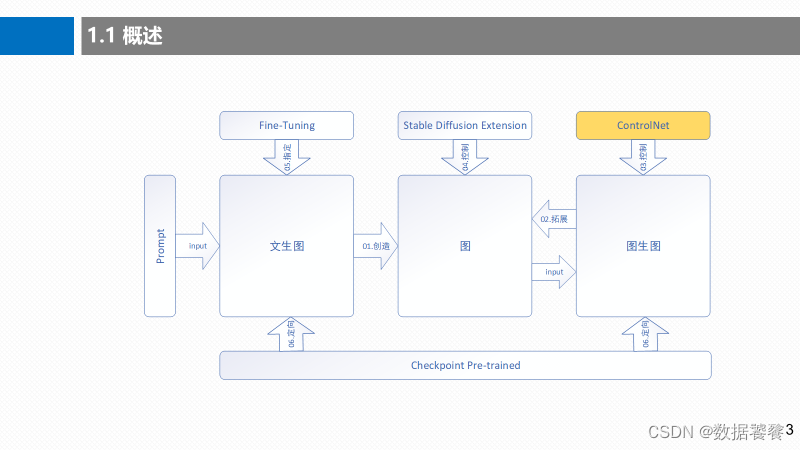

This is Section 02 of "Stable Diffusion ControlNet v1.1 Precise Image Control", the advanced capability chapter that forms the fourth part of the series "Stable Diffusion from Entry-level to Enterprise-level Practical Practice". It shows how to use the Stable Diffusion ControlNet Openpose model to control image generation precisely. The previous section covered "Stable Diffusion ControlNet Inpaint Model Precision Control". The position of this section within the overall Stable Diffusion ecosystem is highlighted in yellow in the figure above.

2. Definition

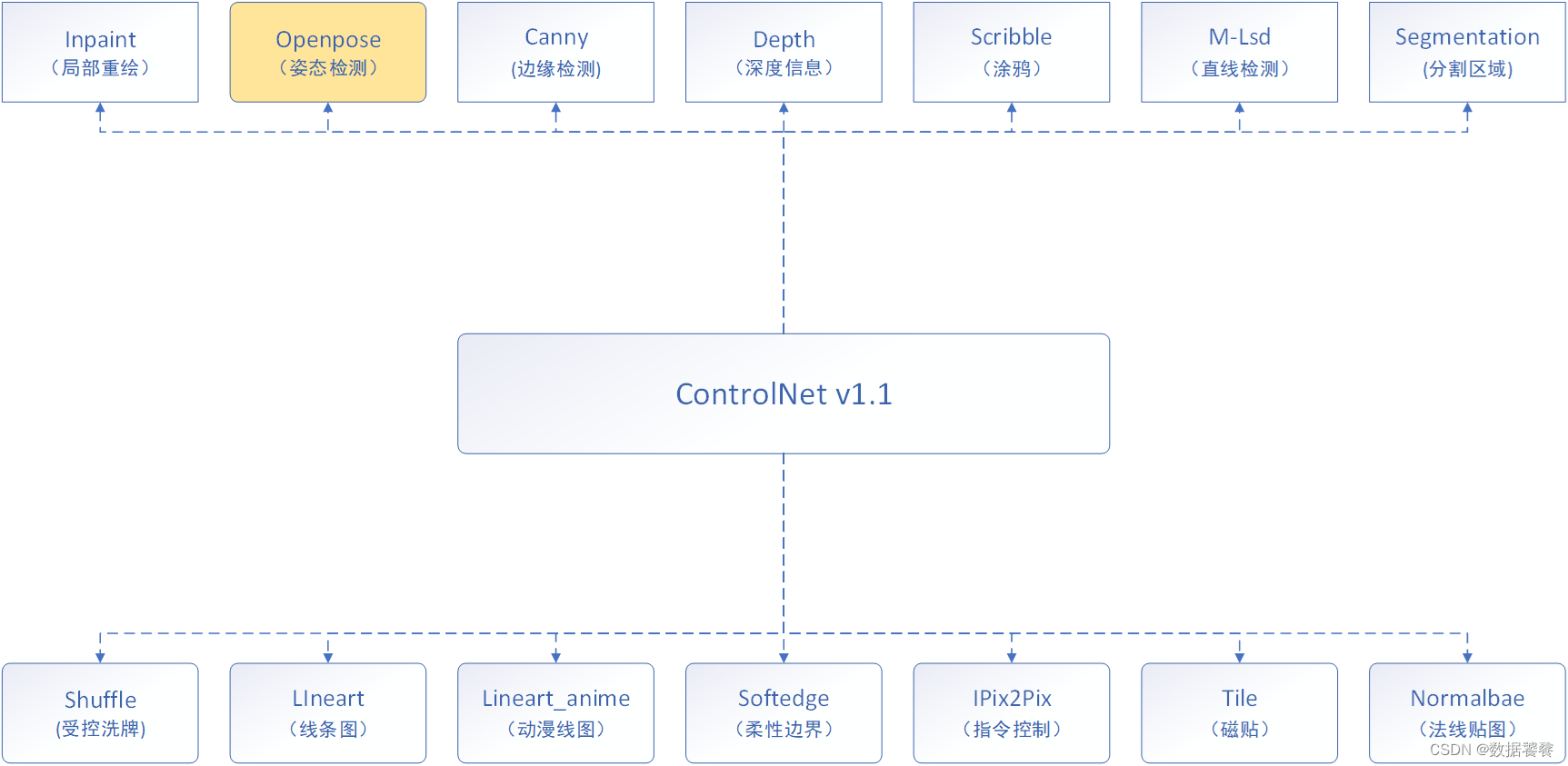

ControlNet v1.1 provides 14 models in total, each suited to a particular application scenario. The models are listed in the figure above.

This article introduces the ControlNet Openpose model. It brings Openpose pose estimation into the Stable Diffusion image generation process, so that images are generated to match a given human pose.

Openpose is a deep-learning tool for human pose estimation. It detects people in images or videos, locates their body keypoints, and outputs each person's pose as a skeleton diagram.
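To make the idea of keypoints and a skeleton more concrete, here is a minimal sketch of how such a detection result could be represented. The `Keypoint` type, the `LIMBS` list, the joint names, the confidence threshold, and the sample detections are all hypothetical and chosen for illustration; they are not Openpose's actual output format.

```python
from typing import NamedTuple


class Keypoint(NamedTuple):
    """One detected body joint in pixel coordinates."""
    name: str
    x: float
    y: float
    score: float  # detector confidence in [0, 1]


# Illustrative subset of limbs (pairs of joint names). This list is an
# assumption for the example; a real detector defines a longer one.
LIMBS = [
    ("neck", "right_shoulder"),
    ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
    ("neck", "left_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
]


def skeleton_segments(keypoints, min_score=0.3):
    """Return the limbs whose two end joints were both detected confidently."""
    confident = {k.name: k for k in keypoints if k.score >= min_score}
    segments = []
    for start, end in LIMBS:
        if start in confident and end in confident:
            a, b = confident[start], confident[end]
            segments.append(((a.x, a.y), (b.x, b.y)))
    return segments


# Hypothetical detections for one person; the left wrist is occluded,
# so its confidence score is low.
detections = [
    Keypoint("neck", 100, 50, 0.95),
    Keypoint("right_shoulder", 80, 55, 0.90),
    Keypoint("right_elbow", 70, 90, 0.85),
    Keypoint("right_wrist", 65, 120, 0.80),
    Keypoint("left_shoulder", 120, 55, 0.92),
    Keypoint("left_elbow", 130, 90, 0.88),
    Keypoint("left_wrist", 135, 120, 0.10),
]

segments = skeleton_segments(detections)
print(len(segments))  # 5: every limb except left_elbow -> left_wrist
```

Dropping low-confidence joints is one possible design choice: it keeps an occluded limb from being drawn in an arbitrary position, at the cost of an incomplete skeleton.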

3. Workflow

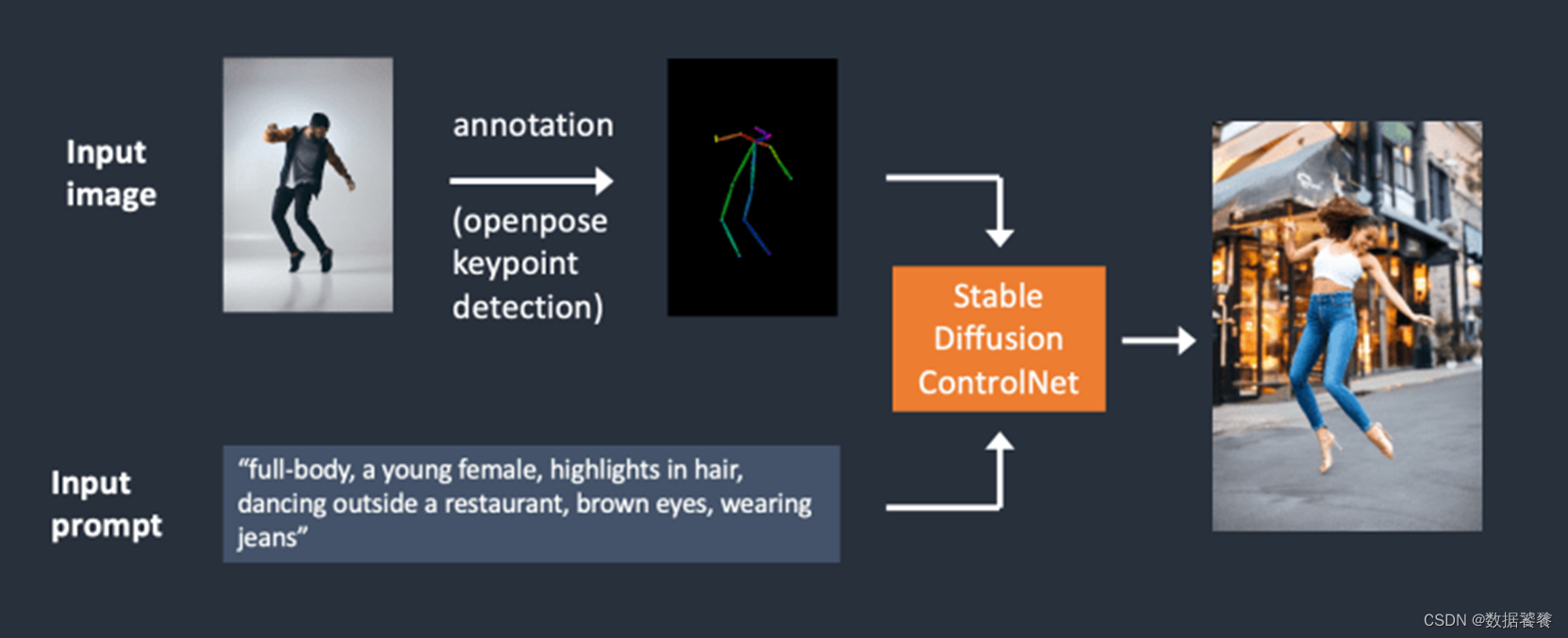

The workflow for ControlNet Openpose is shown in the figure above. It consists of the following steps:

- Run Openpose on the input image of a person to detect the pose and extract the keypoints of the body skeleton.

- Feed the extracted skeleton keypoints into Stable Diffusion as a conditioning input.

- Stable Diffusion combines the keypoints with the text description to generate a new image in which the person has the corresponding pose.
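In ControlNet, the pose condition is supplied as a rendered skeleton image that accompanies the text prompt. The sketch below illustrates only that rendering step in a heavily simplified form. It is not ControlNet's own drawing code: the helper `draw_control_map` and the example segments are hypothetical, and the result is a binary grid rather than a colored image.

```python
import math


def draw_control_map(segments, width, height):
    """Rasterize limb segments into a height x width grid (1 = skeleton, 0 = background)."""
    grid = [[0] * width for _ in range(height)]
    for (x0, y0), (x1, y1) in segments:
        # Sample at least one point per pixel along the longer axis
        # so that the drawn line has no gaps.
        steps = max(1, math.ceil(max(abs(x1 - x0), abs(y1 - y0))))
        for i in range(steps + 1):
            t = i / steps
            x = round(x0 + t * (x1 - x0))
            y = round(y0 + t * (y1 - y0))
            if 0 <= x < width and 0 <= y < height:
                grid[y][x] = 1
    return grid


# Hypothetical limb segments: an upper arm and a forearm forming a right angle.
example_segments = [((1, 1), (6, 1)), ((6, 1), (6, 4))]
control_map = draw_control_map(example_segments, width=8, height=6)

# Print the map as text: '#' marks skeleton pixels, '.' marks background.
for row in control_map:
    print("".join("#" if value else "." for value in row))
```

The control map has the same width and height as the image to be generated, so every skeleton pixel tells the model where a limb should appear.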

4. Creative achievements

With ControlNet Openpose, pose detection makes it possible to control the generated image precisely. The effect is shown in the following figure:

The generated person keeps the same pose as the person in the source image.

5. Creative process

5.1 Working steps

The whole process can be divided into four steps, as shown in the figure below:

- Environment deployment: start the ControlNet Openpose WebUI service.

- Model download: download the models used by the ControlNet Openpose WebUI.

- Practical operation: select the input, configure the parameters, and run.

- Running the demo: view the generated images.

5.2 Environment deployment

An integrated, all-in-one package tends to hide the underlying implementation, so we deploy the native ControlNet v1.1 framework rather than an integrated visual-interface environment. The ControlNet Openpose service program is shown in the figure below. We only need to start it:

5.3 Model download

ControlNet v1.1 Openpose relies on two main pre-trained models, as shown in the figure below.
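Before starting the service, it can be useful to confirm that the downloaded files are where the program expects them. The following is a minimal sketch of such a check. The file names in `REQUIRED_FILES` are an assumption based on the naming used by the public ControlNet v1.1 release, and the `models` directory is hypothetical; both should be replaced with the files and location from your own setup.

```python
from pathlib import Path

# Assumed file names, following the naming used by the public ControlNet v1.1
# release; replace them with the files from your own download listing.
REQUIRED_FILES = (
    "control_v11p_sd15_openpose.pth",
    "control_v11p_sd15_openpose.yaml",
)


def missing_model_files(models_dir, required=REQUIRED_FILES):
    """Return the required file names that are absent from models_dir."""
    folder = Path(models_dir)
    return [name for name in required if not (folder / name).is_file()]


if __name__ == "__main__":
    missing = missing_model_files("models")  # hypothetical directory name
    if missing:
        print("Missing model files:", ", ".join(missing))
    else:
        print("All model files are present.")
```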

5.4 Practical operation

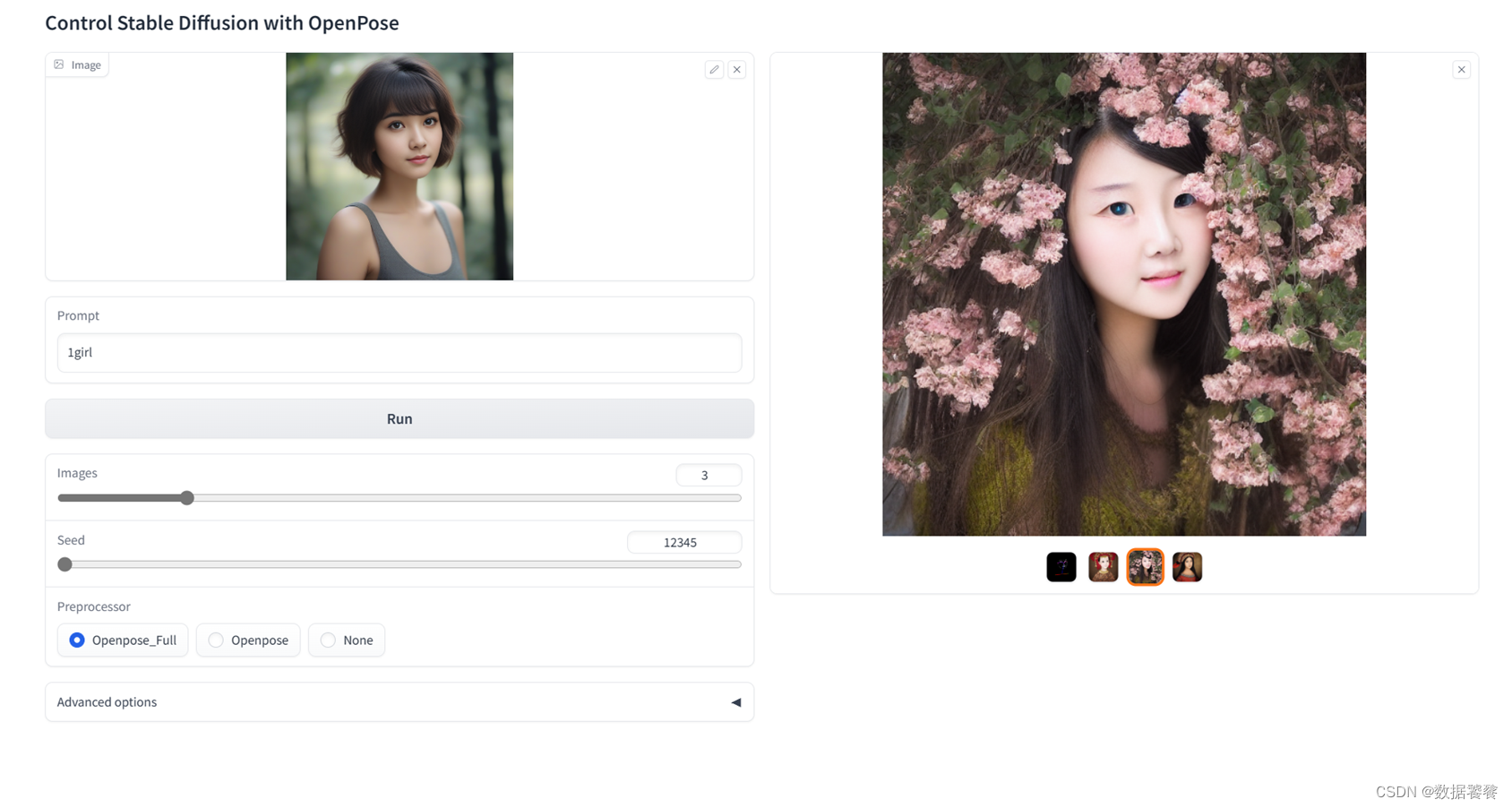

Because the interface is graphical, the operations are largely self-explanatory. We skip the step-by-step details and go straight to the configuration interface, shown in the figure above.

5.5 Running the demo

6. Summary

This section, Section 02 of the advanced capability chapter "Stable Diffusion ControlNet v1.1 Precise Image Control" in the fourth part of the series "Stable Diffusion from Entry-level to Enterprise-level Practical Practice", showed how to use the Stable Diffusion ControlNet Openpose model to control image generation precisely. The previous section covered "Stable Diffusion ControlNet Inpaint Model Precision Control". The next section shows how to use "Stable Diffusion ControlNet Canny edge detection to accurately control image generation".