The Transformer architecture introduced in the paper "Attention Is All You Need" has shone in applications such as ChatGPT, and the Hugging Face transformers library built around it has become very popular. It is widely used in NLP and, according to the official website, it also supports other tasks such as image classification and object detection.

Following the official website, two simple examples are given below: one for text processing and one for object detection.

The transformers library provides a pipeline function that quickly applies a model to an input. In the words of the official documentation:

"To immediately use a model on a given input (text, image, audio, ...), we provide the pipeline API."

Before running the examples, we need to install the transformers library. Version 4.26.1 is installed on this machine.
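Before installing, it can help to check whether a copy of the library is already present and which version it is. The following is a minimal sketch using only the Python standard library; the helper name `installed_version` is made up for illustration, and the comparison against 4.26.1 simply mirrors the version used in this article.

```python
from importlib import metadata


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


version = installed_version("transformers")
if version is None:
    print("transformers is not installed")
elif version != "4.26.1":
    # Other versions may well work; 4.26.1 is only what this article used.
    print(f"transformers {version} is installed; this article used 4.26.1")
else:
    print("transformers 4.26.1 is installed")
```

If the reported version differs, the examples will probably still run, but the default models and the exact output may not match.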

pip install transformers==4.26.1

The first example is text processing: using transformers to quickly classify text as positive or negative. As shown below, we pass in a piece of text and the pipeline returns its judgment:

from transformers import pipeline

classifier = pipeline('sentiment-analysis')

res = classifier('we are happy to indroduce pipeline to the transformers repository.')

print(res)

Running this code produces the following result:

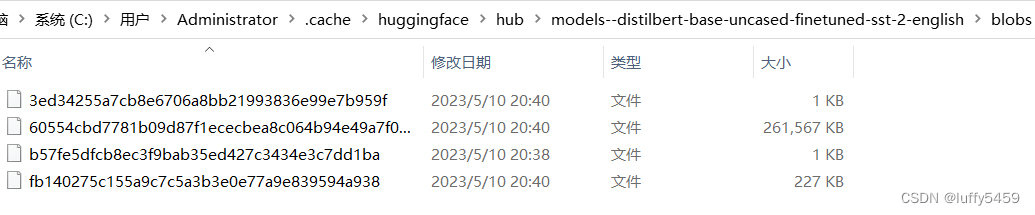

The first time this example runs, it downloads some model files. By default, the distilbert-base-uncased-finetuned-sst-2-english model is downloaded and stored in the .cache\huggingface\hub\models--distilbert-base-uncased-finetuned-sst-2-english directory under the local user directory, as shown below:

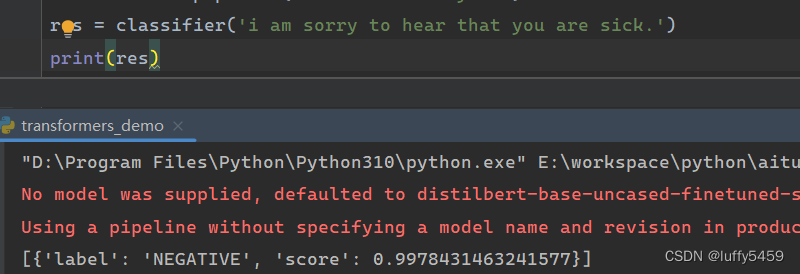

Try another sentence: i am sorry to hear that you are sick.

This time the text is classified as negative, which is in line with expectations.

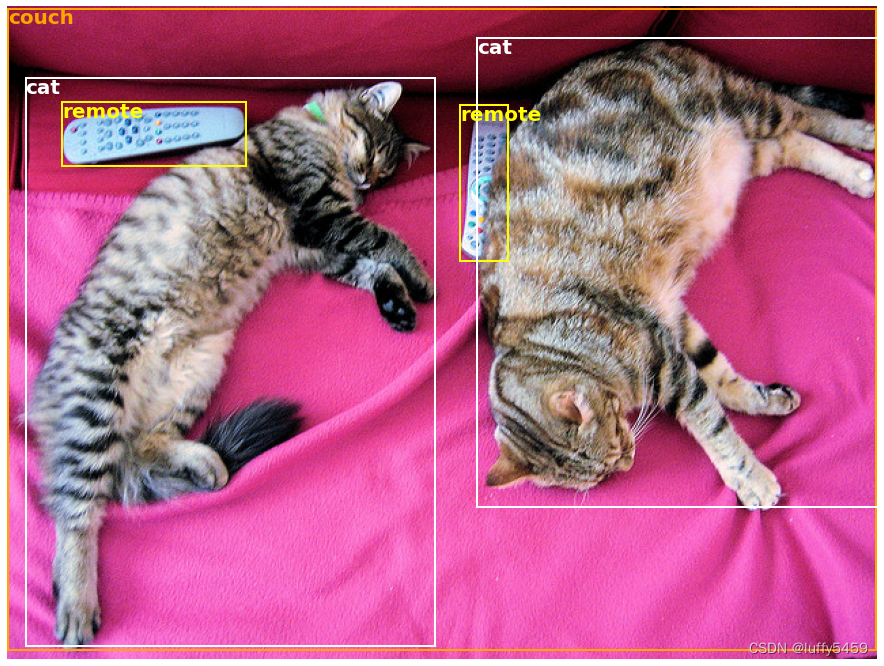
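The classifier returns a list with one dictionary per input, each holding a `label` and a `score`. As a rough sketch of how such a result might be consumed in a program, the helper below turns it into short readable strings. The function name `describe`, the 0.9 threshold, and the sample scores are all assumptions made for illustration; the scores are not the values from the screenshots.

```python
def describe(results, threshold=0.9):
    """Turn pipeline-style results into short human-readable strings."""
    lines = []
    for item in results:
        label = item["label"].lower()
        # Flag predictions whose score falls below the chosen threshold.
        confidence = "confident" if item["score"] >= threshold else "uncertain"
        lines.append(f"{label} ({confidence}, score={item['score']:.4f})")
    return lines


# Illustrative values only, shaped like the output of the sentiment pipeline.
sample = [
    {"label": "POSITIVE", "score": 0.9997},
    {"label": "NEGATIVE", "score": 0.62},
]

for line in describe(sample):
    print(line)
```

With a real pipeline, `describe(classifier("some text"))` should work the same way, since the pipeline output has this list-of-dictionaries shape.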

The second example is object detection. First prepare an image, then use an object detection model to identify the objects in it.

import requests

from PIL import Image

from transformers import pipeline

# Download an image with cute cats

url = "https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/coco_sample.png"

image_data = requests.get(url, stream=True).raw

image = Image.open(image_data)

# Allocate a pipeline for object detection

object_detector = pipeline('object-detection')

res = object_detector(image)

print(res)

The image looks like this:

The printed result is as follows:

[{'score': 0.9982201457023621, 'label': 'remote', 'box': {'xmin': 40, 'ymin': 70, 'xmax': 175, 'ymax': 117}},

{'score': 0.9960021376609802, 'label': 'remote', 'box': {'xmin': 333, 'ymin': 72, 'xmax': 368, 'ymax': 187}},

{'score': 0.9954745173454285, 'label': 'couch', 'box': {'xmin': 0, 'ymin': 1, 'xmax': 639, 'ymax': 473}},

{'score': 0.9988006353378296, 'label': 'cat', 'box': {'xmin': 13, 'ymin': 52, 'xmax': 314, 'ymax': 470}},

{'score': 0.9986783862113953, 'label': 'cat', 'box': {'xmin': 345, 'ymin': 23, 'xmax': 640, 'ymax': 368}}]
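The printed list is ordinary Python data, so it can be post-processed without touching the model again. The sketch below copies the result shown above and counts the detections per label, then finds the detection with the largest bounding box. The helper names `box_area` and `summarize` and the 0.9 score threshold are assumptions chosen for illustration, not part of the transformers API.

```python
from collections import Counter

# Detection results copied from the printed output above.
detections = [
    {"score": 0.9982201457023621, "label": "remote",
     "box": {"xmin": 40, "ymin": 70, "xmax": 175, "ymax": 117}},
    {"score": 0.9960021376609802, "label": "remote",
     "box": {"xmin": 333, "ymin": 72, "xmax": 368, "ymax": 187}},
    {"score": 0.9954745173454285, "label": "couch",
     "box": {"xmin": 0, "ymin": 1, "xmax": 639, "ymax": 473}},
    {"score": 0.9988006353378296, "label": "cat",
     "box": {"xmin": 13, "ymin": 52, "xmax": 314, "ymax": 470}},
    {"score": 0.9986783862113953, "label": "cat",
     "box": {"xmin": 345, "ymin": 23, "xmax": 640, "ymax": 368}},
]


def box_area(box):
    """Area of a bounding box in square pixels."""
    return (box["xmax"] - box["xmin"]) * (box["ymax"] - box["ymin"])


def summarize(dets, min_score=0.9):
    """Count labels above a score threshold and find the largest kept box."""
    kept = [d for d in dets if d["score"] >= min_score]
    counts = Counter(d["label"] for d in kept)
    # default=None avoids an error when nothing passes the threshold.
    largest = max(kept, key=lambda d: box_area(d["box"]), default=None)
    return counts, largest


counts, largest = summarize(detections)
print(dict(counts))
print(largest["label"], box_area(largest["box"]))
```

Raising `min_score` is a simple way to drop low-confidence detections; in this result every score is above 0.99, so nothing is filtered out.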

The couch, the two remote controls, and the two cats in the image were each detected, just like the picture below:

As in the first example, running this example downloads the facebook/detr-resnet-50 model files, which are stored in the .cache\huggingface\hub\models--facebook--detr-resnet-50 directory under the user directory. A file named resnet50_a1_0-14fe96d1.pth is also downloaded and placed in the .cache\torch\hub\checkpoints directory.
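The two cache directory names follow the same pattern: the prefix `models--` followed by the model id, with any `/` replaced by `--`. The sketch below builds the expected path for both models and reports whether it exists on the current machine. It assumes the default cache location under the user's home directory (environment variables such as `HF_HOME` can move it), and the helper name `default_cache_dir` is hypothetical.

```python
from pathlib import Path


def default_cache_dir(model_id, home=None):
    """Expected hub cache folder for a model id, assuming the default location."""
    base = Path(home) if home is not None else Path.home()
    # "facebook/detr-resnet-50" becomes "models--facebook--detr-resnet-50".
    folder = "models--" + model_id.replace("/", "--")
    return base / ".cache" / "huggingface" / "hub" / folder


for model_id in (
    "distilbert-base-uncased-finetuned-sst-2-english",
    "facebook/detr-resnet-50",
):
    path = default_cache_dir(model_id)
    status = "exists" if path.exists() else "not downloaded yet"
    print(f"{path} ({status})")
```

Deleting one of these folders should simply cause the corresponding model to be downloaded again on the next run.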