In this paper, we study platoon control of UAVs using camera information only. To this end, we employ real-time object detection based on the deep learning model YOLO. The YOLO object detector continuously estimates the relative position of the drone ahead, and each follower drone is controlled from this estimate by a PD (proportional-derivative) feedback controller to maintain the platoon. Our indoor experiments with three drones demonstrate the effectiveness of the system.

Original article: Real-time control of UAV queue based on YOLO object detection

01 Introduction

Platooning is a control method for cooperative driving of multiple vehicles [1], and it is a basic technology in automated highway systems [2]. In particular, truck platooning has been actively studied for decades [3]. One advantage of truck platooning is increased transportation capacity through driverless following, which also reduces labor costs. In a truck platoon, each following truck needs to detect the relative position and velocity of the truck in front using sensors such as lidar and cameras, and to communicate with the other trucks.

Drone platoons are important for practical applications such as forest fire monitoring [4], road search [5] and transportation [6], though these assume that GPS is available. However, indoor platoons, where GPS is not available, are also critical for tunnel inspections [7], bridge inspections [8], and warehousing and delivery [9], among others. Inspired by these applications, we consider an indoor drone platooning system that uses only camera images, without GPS, lidar, or motion capture systems.

Some theoretical studies on UAV platoon control have been published [10-12], while experiments on UAV platoon control in real environments have not been reported. In this paper, we carry out a drone platoon experiment using small DJI Tello drones.

For real-time object detection, we employ a deep learning model called YOLO [13]. According to the result of object detection, the relative 3D position of the UAV in front is estimated, from which the following UAV is controlled by PD (proportional derivative) feedback control. For PD parameter tuning, we use the transfer function-based system identification technique [14] to identify the dynamics of the combination of UAV and YOLO object detector, and then tune the PD parameters by computer simulation. We then conduct drone experiments in an indoor environment. We use three drones; one is the leader drone and the others are follower drones. We present experimental results in an indoor environment to illustrate the effectiveness of the proposed system.

02 YOLO real-time target detection

For real-time object detection using a camera attached to a drone, we employ the YOLO deep learning model [13]. With YOLO, we obtain in real time the predicted class of an object and the bounding box that locates it. In particular, YOLO returns the center, width and height of the bounding box, which can be used to estimate the 3D position of the drone in front. There are multiple versions of YOLO [15, 16]. We use YOLOv5s [16], which has the smallest model size and achieves fast detection, so it is well suited to our drone platoon application.

We use the YOLOv5s model pretrained by Ultralytics [17] and retrain it using 700 drone images of size 320×320. We split the dataset into 540 images for training and 160 for validation. We train (i.e., learn the neural network parameters via stochastic gradient descent) for 500 iterations with a mini-batch size of 128. Figure 1 shows the convergence of the loss functions, namely box regression loss, class loss, and object loss, as well as precision, recall, and average precision. These plots show that accurate object detection is achieved by 500 iterations.

In addition, Figure 2 shows some results of object detection by the trained YOLO model. We can observe that the trained YOLO model successfully detects the drone with accurate bounding boxes.

We note that the size of the trained YOLO model is 13.6 MB, which is very small, and that it detects drone targets accurately and quickly. In fact, object detection takes about 30 milliseconds, which means we can use the sampling time Ts = 1/15 s ≈ 66.7 milliseconds and process 15 image frames per second for control.

03 PD control based on YOLO target detector

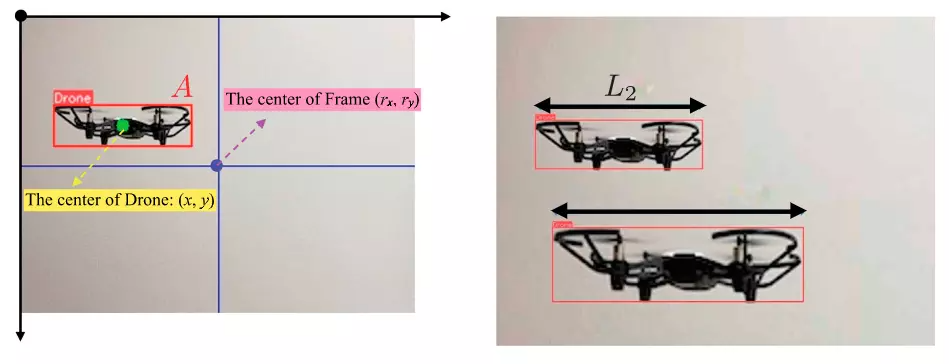

We use YOLO as a sensor for the relative position of the drone in front. Figure 3 (left) shows a result of YOLO object detection. The drone's field of view (FOV) is 82.6 degrees. We set the origin at the upper left corner of the image frame, with the x-axis and y-axis as shown in Figure 3. Let (x, y) be the center of the bounding box of the drone in front, (rx, ry) the center of the image frame, and A the area of the estimated bounding box. In our example, we fix rx=160 and ry=120. We use the area A of the bounding box to estimate the distance to the drone in front, and we calibrate the system so that A = 1800 corresponds to a distance of 60 cm.
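The measurement step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the source gives only one calibration point (A = 1800 at 60 cm), so the inverse-square relation between bounding-box area and distance is an assumption based on a pinhole camera model. The function names, the sign convention of the errors, and the use of 60 cm as the distance reference are also hypothetical.

```python
import math

# Values taken from the text.
RX, RY = 160, 120             # frame center (rx, ry) in pixels
A_CAL, D_CAL = 1800.0, 60.0   # calibration: area 1800 px^2 <-> 60 cm


def estimate_distance_cm(area_px: float) -> float:
    """Estimate the distance to the drone in front from bounding-box area.

    ASSUMPTION: pinhole camera, so apparent area scales as 1 / distance^2.
    The source only states the single calibration point above.
    """
    if area_px <= 0:
        raise ValueError("bounding-box area must be positive")
    return D_CAL * math.sqrt(A_CAL / area_px)


def tracking_errors(x: float, y: float, area_px: float,
                    d_ref_cm: float = D_CAL):
    """Return (ex, ey, ed): pixel errors in x and y, distance error in cm.

    ASSUMPTION: errors are reference minus measurement, and the distance
    reference defaults to the calibration distance (hypothetical choice).
    """
    ex = RX - x
    ey = RY - y
    ed = d_ref_cm - estimate_distance_cm(area_px)
    return ex, ey, ed
```

Under this assumed model, a bounding box with a quarter of the calibration area (A = 450) maps to twice the calibration distance.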

When there are two or more drones in front, multiple drones may be detected, as shown in Figure 3 (right). In this case, we measure the lengths of the bounding boxes, say L1 and L2, and if L1>L2, as in Figure 3, we select the bounding box with length L1 as the drone to follow. We then identify the dynamics of the UAV's input/output system, which includes the YOLO object detector and the Wi-Fi wireless communication (see Figure 4).
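The selection rule for multiple detections can be sketched as below. The `Box` type and the function names are hypothetical, and the source does not say which side of the box is its "length", so taking the longer side is an assumption.

```python
from typing import NamedTuple, Optional, Sequence


class Box(NamedTuple):
    """Bounding box as returned by a detector: center, width, height (pixels)."""
    cx: float
    cy: float
    w: float
    h: float


def box_length(box: Box) -> float:
    # ASSUMPTION: "length" is taken to be the longer side of the box.
    return max(box.w, box.h)


def select_front_drone(boxes: Sequence[Box]) -> Optional[Box]:
    """Pick the box with the largest length, i.e. the presumably nearest drone.

    Returns None when nothing was detected in the frame.
    """
    if not boxes:
        return None
    return max(boxes, key=box_length)
```

The larger box is chosen because a nearer drone appears larger in the image, so the follower tracks its immediate predecessor rather than a drone further ahead.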

We note that the latency of wireless communication varies over time, which can lead to unstable transitions as described in Section 4. To model the system, we assume second-order transfer functions in the x, y, and distance directions, and compute their coefficients from input/output data using MATLAB's System Identification Toolbox. The obtained transfer functions (see Figure 4) are as follows:

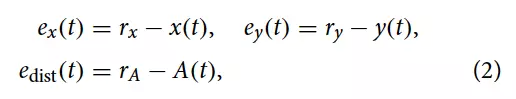

These systems include the dynamics of the YOLO detector and the time delay between the drone and the computer that performs the YOLO detection and generates the control signal. This delay can be time-varying since we use Wi-Fi. We also note that each transfer function has a pole near zero, so we can regard it as a system containing an integrator. For this reason, we use PD (proportional-derivative) control without the I (integral) term. At each time t ≥ 0, we measure the tracking errors in the x, y, and distance directions, and we apply feedback control so that these errors converge to zero.

We control the system with sampling time Ts and discretize the transfer functions using the step-invariant transform (also known as zero-order hold discretization) [18]. The PD controller is then implemented as a discrete-time transfer function with proportional and derivative gains. We sought these gains by computer simulation using the model obtained in (1). Table 1 presents the obtained parameters. It is easy to check that with these PD feedback controllers, the dynamical system described in (1) is asymptotically stable under an ideal sampler and zero-order hold with sampling time Ts = 1/15 s. Figure 5 shows the simulation results using the obtained PD controllers. For the x and y directions the response time is about 2 seconds, while for the distance direction it is about 3 seconds.
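The tuning-by-simulation step can be illustrated with a small sketch. The identified transfer functions and the gains of Table 1 are not reproduced in this text, so the plant G(s) = gain / (s (s + pole)) (second order with an integrator), its parameter values, the PD gains, and the backward-difference form of the derivative are all assumptions chosen for illustration. Only the sampling time Ts = 1/15 s and the zero-order hold discretization come from the source.

```python
import math

TS = 1.0 / 15.0  # sampling time from the text (15 frames per second)


def zoh_discretize(gain: float, pole: float, ts: float):
    """Exact zero-order hold discretization of G(s) = gain / (s (s + pole)).

    State is (position, velocity). HYPOTHETICAL plant, not the identified one.
    """
    decay = math.exp(-pole * ts)
    phi = (1.0 - decay) / pole
    a_d = ((1.0, phi), (0.0, decay))
    b_d = (gain * (ts - phi) / pole, gain * phi)
    return a_d, b_d


def simulate_pd(kp: float, kd: float, gain: float = 2.0, pole: float = 2.0,
                ts: float = TS, steps: int = 150, reference: float = 1.0):
    """Simulate a unit-step response of the sampled PD loop; returns outputs."""
    a_d, b_d = zoh_discretize(gain, pole, ts)
    pos, vel = 0.0, 0.0
    prev_error = reference - pos  # avoids a derivative kick at the first sample
    outputs = []
    for _ in range(steps):
        error = reference - pos
        # ASSUMPTION: backward-difference derivative, u = Kp*e + Kd*(e - e_prev)/Ts
        u = kp * error + kd * (error - prev_error) / ts
        prev_error = error
        pos, vel = (a_d[0][0] * pos + a_d[0][1] * vel + b_d[0] * u,
                    a_d[1][0] * pos + a_d[1][1] * vel + b_d[1] * u)
        outputs.append(pos)
    return outputs
```

Calling `simulate_pd(kp=2.0, kd=0.5)` simulates 10 seconds (150 samples) of the step response. Because the assumed plant contains an integrator, the output settles at the reference without an integral term, which mirrors the argument for using PD rather than PID control.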

04 Experiment

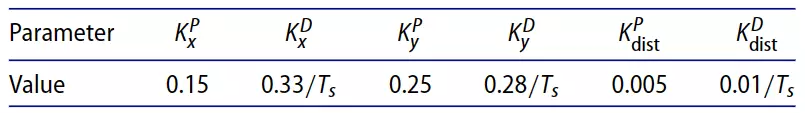

In this section, we present experimental results on a platoon of drones using YOLO object detection in an indoor environment. We used three DJI Tello drones, one of which is the leader drone and the others are follower drones. The leader drone is manually controlled by a person with a smartphone, and the two follower drones are independently controlled by two computers, PC1 and PC2, over Wi-Fi, as shown in Figure 6. PC1 and PC2 perform object detection via YOLO and generate control signals via the PD controllers, which are then independently sent to the follower drones.

We measure the global 3D position of each drone using another YOLO model applied to recorded video from a camera fixed in the room. Figure 7 shows the positions of the three drones under control in the x and y directions. Figure 8 shows the positions of the drones under control in the distance direction. Movies for these results can be found on the webpage indicated in the figure captions.

Table 2 shows the mean absolute errors in the x, y, and distance directions between the i-th and j-th UAVs.

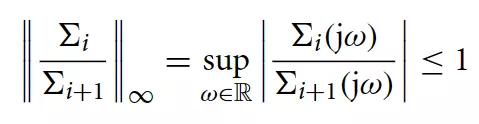
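As a reminder of how a mean absolute error between two UAV trajectories can be computed, here is a minimal sketch. The function name, the `desired_gap` parameter, and the assumption that both trajectories are sampled at the same time instants are hypothetical. The source does not spell out the exact definition used for Table 2.

```python
from typing import Sequence


def mean_absolute_error(pos_i: Sequence[float], pos_j: Sequence[float],
                        desired_gap: float = 0.0) -> float:
    """Mean of |p_i[k] - p_j[k] - desired_gap| over all samples k.

    ASSUMPTION: both sequences hold one coordinate (x, y, or distance) of
    UAV i and UAV j at the same sampling instants.
    """
    if len(pos_i) != len(pos_j) or not pos_i:
        raise ValueError("trajectories must be non-empty and of equal length")
    total = sum(abs(a - b - desired_gap) for a, b in zip(pos_i, pos_j))
    return total / len(pos_i)
```

For the x and y directions the desired gap would be zero, while for the distance direction it would be the target spacing between consecutive drones.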

We note that the gap between the simulated and experimental results can be attributed to time-varying communication delays and wind perturbations. From these results, we can see that each follower UAV moves in unison with the UAV in front and that a UAV platoon is realized, since the errors in Table 2 are all bounded and do not diverge. We also observed that the second follower drone showed a larger error than the first follower drone. This phenomenon can be explained by string instability [19, 20], which leads to larger and larger errors as the platoon gets longer. To guarantee string stability for longer platoons, we need to reduce the $H^\infty$ norm (or gain) of the input/output system shown in Figure 4. That is, the following inequality should be satisfied:

$$\|G_i\|_\infty \le 1 \quad \text{for any } i \in \{1, \dots, N\},$$

where $G_i$ is the transfer function of the input/output system of the $i$-th follower UAV, and $N$ is the number of follower UAVs. For this, we need to adopt $H^\infty$ control design [21].
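A norm condition of this kind can be checked numerically by sweeping the frequency response. The sketch below approximates the peak gain of a continuous-time transfer function on a frequency grid. The two example transfer functions are hypothetical closed loops (a PD loop and a P-only loop around an assumed plant 2 / (s (s + 2))), not the systems identified in the paper, and a grid search only approximates the true supremum.

```python
from typing import Sequence


def peak_gain(num: Sequence[float], den: Sequence[float],
              w_max: float = 50.0, n: int = 5000) -> float:
    """Approximate sup over w in [0, w_max] of |G(jw)| for G = num / den.

    Coefficients are given highest power of s first.
    """
    def polyval(coeffs, s):
        result = 0j
        for c in coeffs:          # Horner's rule
            result = result * s + c
        return result

    peak = 0.0
    for k in range(n + 1):
        s = 1j * (w_max * k / n)
        peak = max(peak, abs(polyval(num, s) / polyval(den, s)))
    return peak


# HYPOTHETICAL closed loops around the assumed plant 2 / (s (s + 2)):
# PD gains (Kp=2, Kd=0.5) give T(s) = (s + 4) / (s^2 + 3 s + 4).
pd_peak = peak_gain([1.0, 4.0], [1.0, 3.0, 4.0])
# P-only gain (Kp=2) gives T(s) = 4 / (s^2 + 2 s + 4).
p_peak = peak_gain([4.0], [1.0, 2.0, 4.0])
```

In this illustrative case the PD loop has a peak gain of one, attained at zero frequency, while the P-only loop has a resonant peak above one, so errors would be amplified from one follower to the next.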

05 Conclusion

In this paper, we demonstrated the design of a drone platoon based on real-time deep learning object detection. The YOLO model is suitable for real-time detection, and the PD control parameters are tuned by simulation. Experimental results demonstrate the effectiveness of the proposed system. Future work includes designing multi-input control in three dimensions by employing linear quadratic (LQ) optimal control, $H^\infty$ control, or model predictive control.
