MJDK is Meituan's JDK distribution built on OpenJDK. This article describes how MJDK speeds up compression/decompression by 5-10 times while remaining compatible with the java.util.zip.* API and the standard compression formats. We hope this experience is useful to other engineers.

1 Introduction

Data compression technology [1] is very widely used in computing (network transmission, file transfer, databases, operating systems, and so on) because it effectively reduces data storage and transmission costs. By principle, mainstream compression techniques fall into lossless compression [2] and lossy compression [3]. The compression tools we use most often at work, zip and gzip, as well as the compression library zlib, are all applications of lossless compression. In Java applications, compression libraries are used for, among other things: compressing/decompressing request bodies when handling HTTP requests, compressing/decompressing large message bodies (e.g. > 1 MB) in message queue services, and compressing large fields before writing them to a database and decompressing them after reading. They are common in business scenarios involving large-scale data transmission/storage, such as monitoring and advertising.

Meituan's Basic R&D Platform has developed a gzip compression tool and a zlib [4] compression library optimized with Intel's ISA-L library (the latter is known as mzlib [5]). The optimization improves compression speed by 10 times and decompression speed by 2 times, and it has long been used stably in scenarios such as image distribution and image processing. Unfortunately, because of how the JDK [6] is designed to call the compression library at the native level, the company's Java 8 services could not use the optimized mzlib library or benefit from its faster compression/decompression. To bring mzlib's performance advantages to the business, in the latest version of MJDK we adapted and integrated the mzlib library and optimized the java.util.zip.* native class library in the JDK, speeding up in-memory data compression by 5-10 times while keeping the compression formats compatible. This article introduces the technical principles behind this feature, and we hope the experience offers some inspiration or help.

2 Data Compression Technology

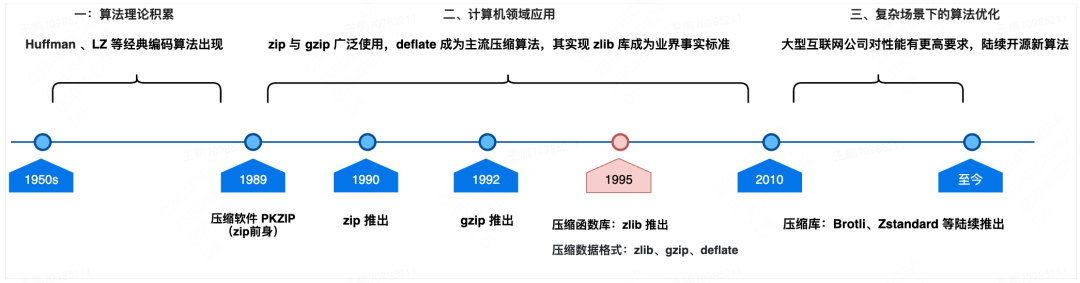

The development of data compression technology in the computer field can be roughly divided into the following three stages:

The key milestones are as follows:

- From the 1950s to the 1980s, Shannon founded information theory, which laid the theoretical foundation for data compression. Many classic algorithms appeared during this period, such as Huffman coding and the LZ family of codes.
- In 1989, Phil Katz released the file archiver PKZIP (the predecessor of zip) and published the full technical specifications of the zip archive format and the deflate compression algorithm (a combination of LZ77 and Huffman coding).
- In 1990, the Info-ZIP group wrote portable, free, open-source implementations of zip and unzip based on the publicly documented deflate algorithm, greatly expanding the use of the .zip format.
- In 1992, the Info-ZIP group released the file compression tool gzip (GNU zip), based on zip's deflate code, to replace the Unix compress utility, which was entangled in patent disputes. gzip is usually combined with the archiver tar to produce compressed archives with the .tar.gz extension.
- In 1995, Info-ZIP members Jean-loup Gailly and Mark Adler released the compression library zlib, based on the deflate implementation in the gzip source code, so that other scenarios (such as PNG image compression) could obtain general-purpose compression/decompression by calling library functions. In 1996, the three data compression formats DEFLATE, ZLIB, and GZIP were published as RFC 1951, RFC 1950, and RFC 1952 respectively. DEFLATE is the raw compressed data stream format, while ZLIB and GZIP wrap it with their own headers and checksum logic. With the widespread adoption of the zip and gzip tools and the zlib library, deflate has since become the de facto standard compression format of the Internet era.
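To make the relationship between the three formats concrete, here is a small sketch of our own (not part of the original work; the class name `FormatHeaders` and the sample input are arbitrary). It compresses the same bytes as raw DEFLATE (`Deflater` with `nowrap = true`), as ZLIB (`Deflater` with `nowrap = false`), and as GZIP (`GZIPOutputStream`), then prints the sizes and the leading bytes of each.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.Deflater;
import java.util.zip.GZIPOutputStream;

class FormatHeaders {

    // nowrap = true emits raw DEFLATE (RFC 1951);
    // nowrap = false wraps the same data in the ZLIB format (RFC 1950).
    static byte[] deflate(byte[] input, boolean nowrap) {
        Deflater deflater = new Deflater(Deflater.DEFAULT_COMPRESSION, nowrap);
        try {
            deflater.setInput(input);
            deflater.finish();
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[1024];
            while (!deflater.finished()) {
                int n = deflater.deflate(buf);
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            deflater.end(); // release the native zlib stream
        }
    }

    // Compress into the GZIP format (RFC 1952).
    static byte[] gzip(byte[] input) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(input);
        }
        return out.toByteArray();
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "hello deflate, hello zlib, hello gzip".getBytes(StandardCharsets.UTF_8);
        byte[] raw = deflate(data, true);
        byte[] zlib = deflate(data, false);
        byte[] gz = gzip(data);
        System.out.printf("raw=%d zlib=%d gzip=%d bytes%n", raw.length, zlib.length, gz.length);
        System.out.printf("zlib header: %02x %02x%n", zlib[0], zlib[1]); // 78 9c
        System.out.printf("gzip magic:  %02x %02x%n", gz[0], gz[1]);     // 1f 8b
    }
}
```

At the default level, the ZLIB output begins with `78 9c` and is exactly 6 bytes longer than the raw DEFLATE output (a 2-byte header plus a 4-byte Adler-32 trailer), while GZIP adds a 10-byte header starting with the magic bytes `1f 8b` and an 8-byte trailer holding the CRC-32 and the input size.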

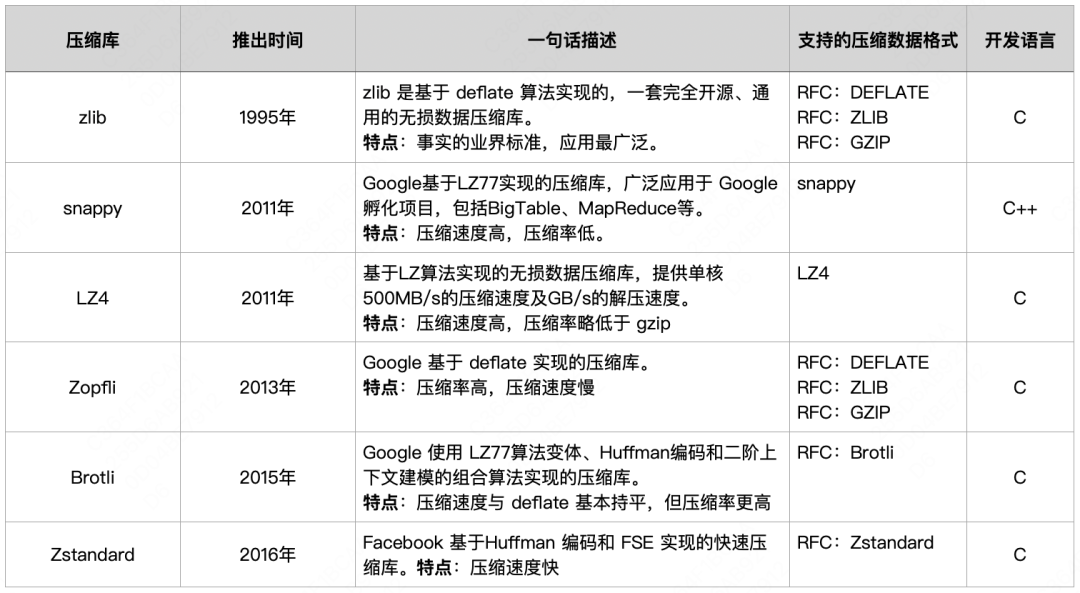

After 2010, major Internet companies successively open-sourced new compression algorithms and implementations, such as LZFSE (Apple), Brotli (Google), and Zstandard (Facebook), which improve compression speed and compression ratio to varying degrees. Common compression libraries are listed below (note: because their compression algorithms and formats differ, these libraries are not interchangeable; data must be decompressed with the same algorithm that compressed it):

3 Application and optimization ideas of compression technology in Java

Having covered the basics of compression technology, this chapter explains the technical principle by which the MJDK8_mzlib version achieves a 5-fold increase in compression speed. It has two parts: the first explains the underlying principle of the compression/decompression API in the native JDK; the second shares MJDK's optimization approach.

3.1 Implementation principle of compression/decompression API in Java language

In Java, data compression/decompression can be done with the JDK's native compression class library (java.util.zip.*) or with the compression libraries provided by third-party Jar packages. In both cases the underlying mechanism is JNI (Java Native Interface) [7], which calls shared library functions shipped with the JDK source code or with the third-party Jar package. A detailed comparison follows:

The following code illustrates how the two differ in usage.

(1) JDK native compression library (zlib compression library)

zip file compression/decompression code demo (Java)

```java
import java.io.*;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class ZipUtil {

    // Compress: store a single file in a .zip archive as one entry
    public void compress(File file, File zipFile) {
        byte[] buffer = new byte[1024];
        try (InputStream input = new FileInputStream(file);
             ZipOutputStream zipOut = new ZipOutputStream(new FileOutputStream(zipFile))) {
            zipOut.putNextEntry(new ZipEntry(file.getName()));
            int length;
            while ((length = input.read(buffer)) != -1) {
                zipOut.write(buffer, 0, length);
            }
            zipOut.closeEntry();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Decompress: extract the first entry of a .zip archive into outFile
    public void uncompress(File file, File outFile) {
        byte[] buffer = new byte[1024];
        // Create the parent directory before opening the output stream
        File parent = outFile.getAbsoluteFile().getParentFile();
        if (parent != null && !parent.exists()) {
            parent.mkdirs();
        }
        try (ZipInputStream input = new ZipInputStream(new FileInputStream(file));
             OutputStream output = new FileOutputStream(outFile)) {
            // read() returns -1 until an entry is positioned with getNextEntry()
            if (input.getNextEntry() != null) {
                int length;
                while ((length = input.read(buffer)) != -1) {
                    output.write(buffer, 0, length);
                }
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

gzip file compression/decompression code demo (Java)

```java
import java.io.*;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

public class GZipUtil {

    // Compress a file into the GZIP format
    public void compress(File file, File outFile) {
        byte[] buffer = new byte[1024];
        try (InputStream input = new FileInputStream(file);
             GZIPOutputStream gzip = new GZIPOutputStream(new FileOutputStream(outFile))) {
            int length;
            while ((length = input.read(buffer)) != -1) {
                gzip.write(buffer, 0, length);
            }
            gzip.finish(); // writes the GZIP trailer; close() would also do this
        } catch (IOException e) {
            e.printStackTrace();
        }
    }

    // Decompress a GZIP file
    public void uncompress(File file, File outFile) {
        byte[] buffer = new byte[1024];
        try (GZIPInputStream ungzip = new GZIPInputStream(new FileInputStream(file));
             OutputStream out = new FileOutputStream(outFile)) {
            int n;
            while ((n = ungzip.read(buffer)) != -1) {
                out.write(buffer, 0, n);
            }
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

(2) Third-party compression library (using Google's Snappy library as an example; other third-party libraries work in essentially the same way). Usage takes two steps.

Step 1: Add the dependency to the pom file

```xml
<dependency>
    <groupId>org.xerial.snappy</groupId>
    <artifactId>snappy-java</artifactId>
    <version>1.1.8.4</version>
</dependency>
```

Step 2: Call the interface to perform compression/decompression

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import org.xerial.snappy.Snappy;

public class SnappyDemo {

    public static void main(String[] args) {
        String input = "Hello snappy-java! Snappy-java is a JNI-based wrapper of "
                + "Snappy, a fast compresser/decompresser.";
        try {
            // Snappy uses its own format, not DEFLATE/ZLIB/GZIP
            byte[] compressed = Snappy.compress(input.getBytes(StandardCharsets.UTF_8));
            byte[] uncompressed = Snappy.uncompress(compressed);
            String result = new String(uncompressed, StandardCharsets.UTF_8);
            System.out.println(result);
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

To sum up, the JDK's default compression library is zlib. Businesses can adopt other compression algorithms through third-party Jar packages, but because the compressed data formats of algorithms such as Snappy differ from the DEFLATE, ZLIB, and GZIP formats supported by zlib, mixing them causes compatibility problems.
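A minimal sketch of that incompatibility, using only JDK classes so it runs without extra dependencies: bytes in one format are handed to a decoder that expects another. The class and method names are our own. A Snappy-compressed buffer would normally be rejected by `GZIPInputStream` in the same way, since it does not start with the GZIP magic bytes; here a ZLIB stream and a GZIP stream stand in for it, which also shows that even the deflate-based formats are not interchangeable.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.zip.DataFormatException;
import java.util.zip.DeflaterOutputStream;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;
import java.util.zip.Inflater;
import java.util.zip.ZipException;

class FormatMismatch {

    static byte[] gzip(byte[] data) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) { gz.write(data); }
        return out.toByteArray();
    }

    // DeflaterOutputStream with its default Deflater produces the ZLIB format.
    static byte[] zlib(byte[] data) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (DeflaterOutputStream z = new DeflaterOutputStream(out)) { z.write(data); }
        return out.toByteArray();
    }

    // True if GZIPInputStream accepts and fully reads the buffer.
    static boolean readableAsGzip(byte[] data) {
        try (GZIPInputStream in = new GZIPInputStream(new ByteArrayInputStream(data))) {
            byte[] buf = new byte[1024];
            while (in.read(buf) != -1) { /* drain */ }
            return true;
        } catch (ZipException e) {
            return false; // e.g. "Not in GZIP format"
        } catch (IOException e) {
            throw new RuntimeException(e);
        }
    }

    // True if a ZLIB-mode Inflater accepts and fully decodes the buffer.
    static boolean readableAsZlib(byte[] data) {
        Inflater inflater = new Inflater(); // expects the ZLIB header/trailer
        try {
            inflater.setInput(data);
            byte[] buf = new byte[1024];
            while (!inflater.finished()) {
                if (inflater.inflate(buf) == 0
                        && (inflater.needsInput() || inflater.needsDictionary())) {
                    return false; // truncated stream or dictionary required
                }
            }
            return true;
        } catch (DataFormatException e) {
            return false; // e.g. "incorrect header check"
        } finally {
            inflater.end();
        }
    }

    public static void main(String[] args) throws IOException {
        byte[] data = "same payload, different container".getBytes(StandardCharsets.UTF_8);
        System.out.println(readableAsGzip(gzip(data))); // true
        System.out.println(readableAsGzip(zlib(data))); // false
        System.out.println(readableAsZlib(zlib(data))); // true
        System.out.println(readableAsZlib(gzip(data))); // false
    }
}
```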

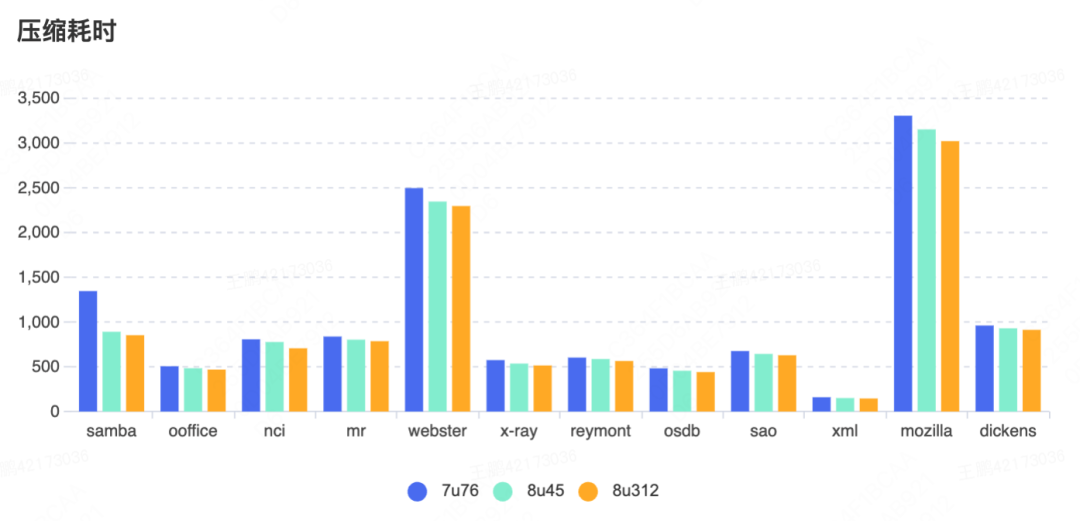

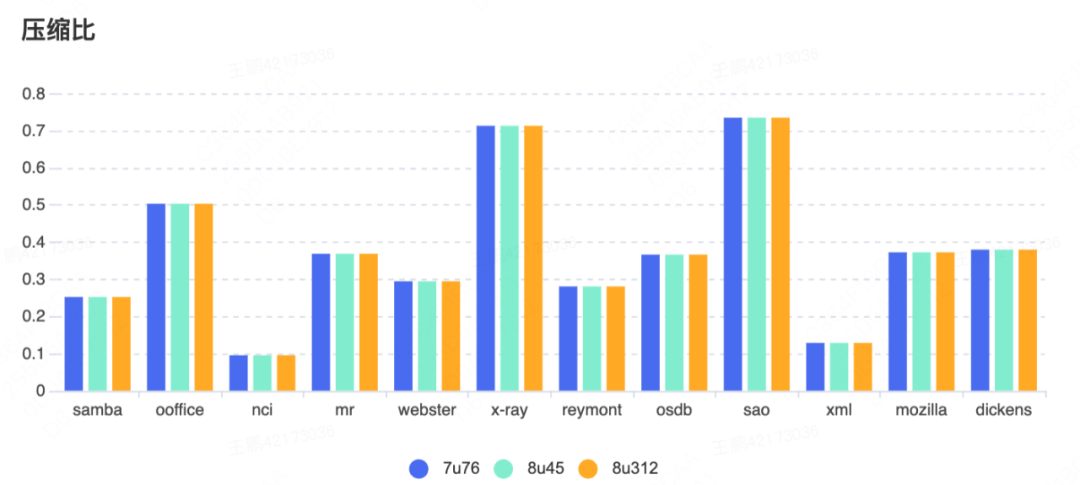

In addition, the zlib library itself (first released in 1995) evolves very slowly, because it is so widely used that stability takes priority and no commercial organization maintains it. We used the Silesia corpus to test the built-in compression class libraries of OpenJDK 7u76 (released in 2014), 8u45 (released in 2015), and 8u312 (released in 2021). As the charts show, none of the three shows a noticeable improvement in compression time or compression ratio, which makes it hard to meet the ever-growing compression performance requirements of business scenarios. We therefore chose to integrate an optimized zlib into MJDK, keeping the native interfaces compatible while improving compression performance.

The Silesia corpus is a benchmark set for evaluating compression methods; it provides files covering the typical data types in use today. File sizes range from 6 MB to 51 MB, and the formats include text, executables, HTML, images, databases, and binary data. The test data categories are as follows:

3.2 MJDK optimization scheme

As Section 3.1 shows, the compression/decompression capability of Java's native java.util.zip.* class library is ultimately implemented by calling the zlib library, so improving the JDK's compression performance reduces to optimizing the zlib library that the JDK uses.
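For reference, `Deflater` and `Inflater` are the lowest-level entry points in `java.util.zip`; in OpenJDK their methods are implemented natively on top of zlib's deflate/inflate routines, which is why replacing the zlib underneath can change performance without touching Java code. The round trip below is a minimal sketch; the class name, the compression level, and the 8 KB buffer size are arbitrary choices of ours.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.zip.DataFormatException;
import java.util.zip.Deflater;
import java.util.zip.Inflater;

class DeflaterRoundTrip {

    // Compress into the ZLIB format at the given level
    // (Deflater.BEST_SPEED = 1 ... Deflater.BEST_COMPRESSION = 9).
    static byte[] compress(byte[] input, int level) {
        Deflater deflater = new Deflater(level);
        try {
            deflater.setInput(input);
            deflater.finish(); // no more input will follow
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[8192];
            while (!deflater.finished()) {
                int n = deflater.deflate(buf);
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            deflater.end(); // release the native zlib stream immediately
        }
    }

    // Decompress a complete ZLIB stream.
    static byte[] decompress(byte[] input) throws DataFormatException {
        Inflater inflater = new Inflater();
        try {
            inflater.setInput(input);
            ByteArrayOutputStream out = new ByteArrayOutputStream();
            byte[] buf = new byte[8192];
            while (!inflater.finished()) {
                int n = inflater.inflate(buf);
                if (n == 0 && (inflater.needsInput() || inflater.needsDictionary())) {
                    throw new DataFormatException("truncated stream or preset dictionary required");
                }
                out.write(buf, 0, n);
            }
            return out.toByteArray();
        } finally {
            inflater.end();
        }
    }

    public static void main(String[] args) throws DataFormatException {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < 1000; i++) {
            sb.append("log line ").append(i % 10).append('\n');
        }
        byte[] data = sb.toString().getBytes(StandardCharsets.UTF_8);
        byte[] packed = compress(data, Deflater.BEST_SPEED);
        byte[] unpacked = decompress(packed);
        System.out.printf("%d -> %d bytes, round trip ok: %b%n",
                data.length, packed.length, Arrays.equals(data, unpacked));
    }
}
```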

3.2.1 Optimization ideas

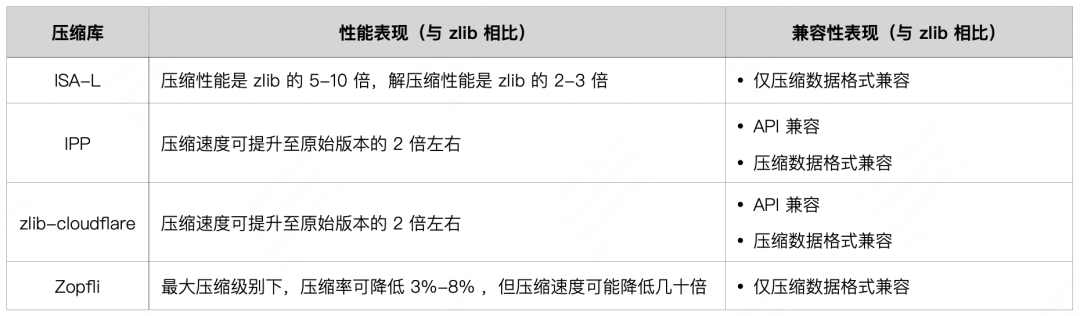

Besides native zlib, other compression libraries that implement the deflate algorithm include Intel ISA-L, Intel IPP, and Zopfli, while zlib-cloudflare is a project that optimizes the zlib source code directly. They compare with zlib as follows:

Weighing these options, we chose Intel's open-source ISA-L (which uses Intel SSE/AVX/AVX2/AVX-512 extended instructions to process multiple data streams in parallel, improving the performance of the underlying functions) as the basis for transforming and optimizing zlib.

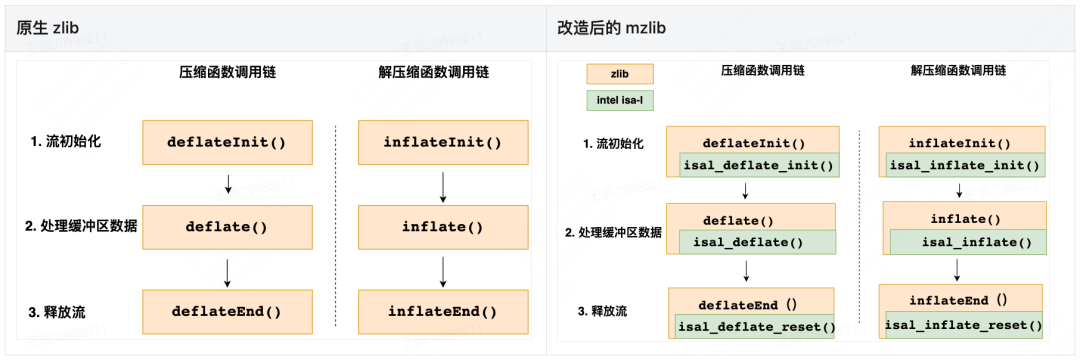

1. zlib transformation process (focusing on API compatibility)

The optimized mzlib library has been running stably in production for more than 3 years and has improved compression speed by more than 5 times, effectively solving the high compression/decompression latency that, as mentioned above, the Basic R&D Platform faced in scenarios such as image building and image processing.

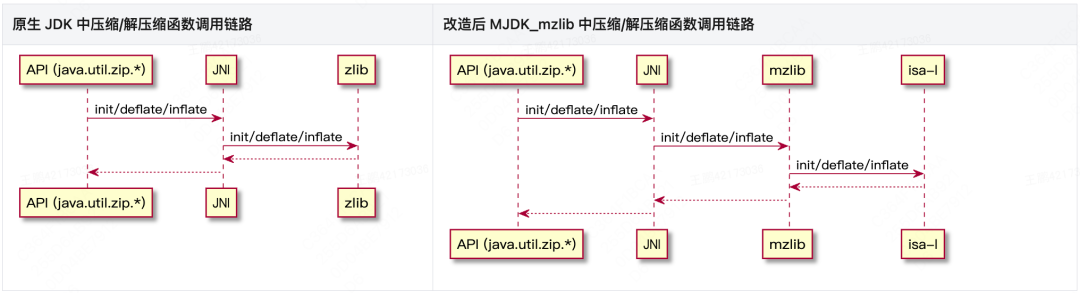

2. Changes at the JDK level

3.2.2 Optimization effect

Test instruction

-

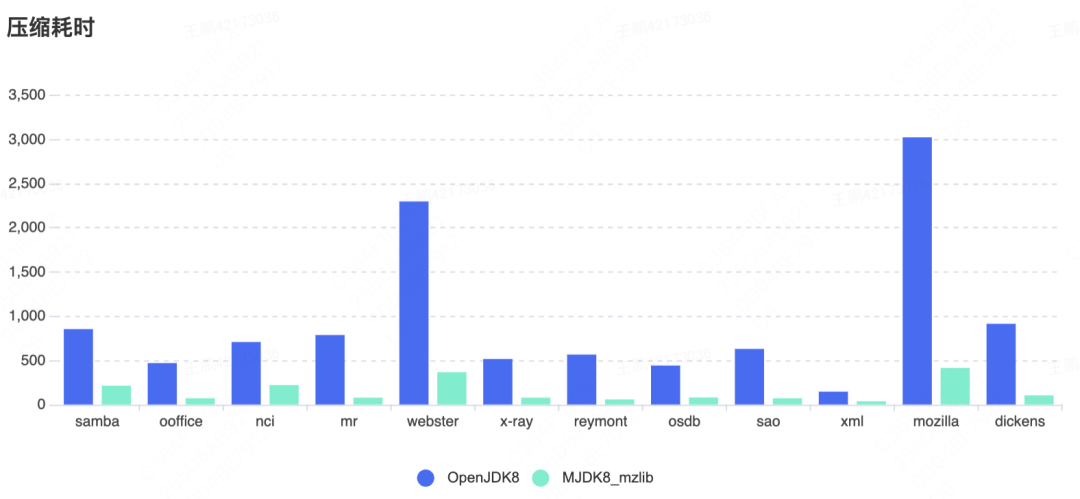

Test set: Silesia corpus

-

Test content: GZip compressed/decompressed files, Zip compressed/decompressed files
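A test of this kind can be approximated with a small harness run unchanged on each JDK being compared. The sketch below is our own, not the harness used for the results here; it assumes single-threaded GZIP compression of whole files held in memory, a few warm-up rounds, and wall-clock timing via `System.nanoTime`, and it defines the compression ratio as original size divided by compressed size. The class name `GzipBench` and the round counts are hypothetical; for rigorous numbers, a framework such as JMH is preferable.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.zip.GZIPOutputStream;

class GzipBench {

    static byte[] gzip(byte[] data) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream(data.length / 2 + 64);
        try (GZIPOutputStream gz = new GZIPOutputStream(out, 64 * 1024)) {
            gz.write(data);
        }
        return out.toByteArray();
    }

    // Compression ratio as original size / compressed size (higher is better).
    static double ratio(byte[] original, byte[] compressed) {
        return (double) original.length / compressed.length;
    }

    // Average wall-clock milliseconds per gzip call, after warm-up rounds
    // that give the JIT a chance to compile the hot path.
    static double averageMillis(byte[] data, int warmup, int rounds) throws IOException {
        for (int i = 0; i < warmup; i++) gzip(data);
        long start = System.nanoTime();
        for (int i = 0; i < rounds; i++) gzip(data);
        return (System.nanoTime() - start) / 1e6 / rounds;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java GzipBench <file>...  (e.g. the files of the Silesia corpus)
        for (String name : args) {
            byte[] data = Files.readAllBytes(Paths.get(name));
            double ms = averageMillis(data, 3, 10);
            double r = ratio(data, gzip(data));
            System.out.printf("%s: %.1f ms, ratio %.2f (java.version=%s)%n",
                    name, ms, r, System.getProperty("java.version"));
        }
    }
}
```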

test conclusion

-

Compatibility test (passed): The Zip and Gzip compression/decompression interfaces of the modified Java class library can be used normally, and the compression/decompression operation verification passed by intersecting with the interfaces in the native JDK.

-

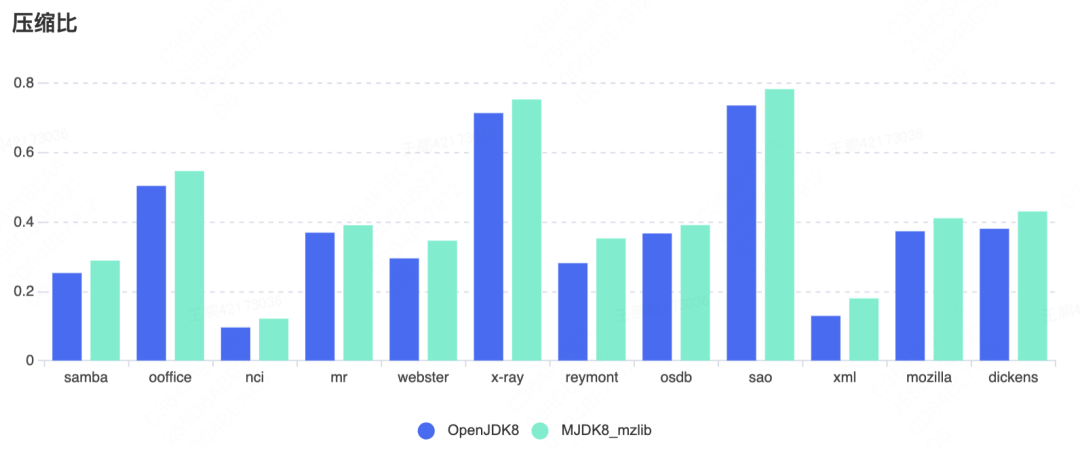

Performance test (pass): Under the same benchmark update version, the data compression time of MJDK8_mzlib is 5-10 times lower than that of OpenJDK8, and the compression ratio does not fluctuate greatly (increased by about 3%).
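The cross-verification described above can be scripted as two steps on different JDKs: compress a file on one JDK, then decompress it on the other and compare the result byte for byte with the original. The sketch below is our illustration only; the class name `CrossCheck` and its `compress`/`verify` command-line modes are hypothetical.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Arrays;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

class CrossCheck {

    static void copy(InputStream in, OutputStream out) throws IOException {
        byte[] buf = new byte[8192];
        int n;
        while ((n = in.read(buf)) != -1) {
            out.write(buf, 0, n);
        }
    }

    // Compress src into a GZIP file at dst.
    static void compress(Path src, Path dst) throws IOException {
        try (InputStream in = Files.newInputStream(src);
             OutputStream out = new GZIPOutputStream(Files.newOutputStream(dst))) {
            copy(in, out);
        }
    }

    // True if decompressing gz reproduces the original file exactly.
    static boolean verify(Path original, Path gz) throws IOException {
        ByteArrayOutputStream actual = new ByteArrayOutputStream();
        try (InputStream in = new GZIPInputStream(Files.newInputStream(gz))) {
            copy(in, actual);
        }
        return Arrays.equals(Files.readAllBytes(original), actual.toByteArray());
    }

    public static void main(String[] args) throws IOException {
        // Step 1 on JDK A:  java CrossCheck compress <file> <file.gz>
        // Step 2 on JDK B:  java CrossCheck verify   <file> <file.gz>
        if (args.length == 3 && args[0].equals("compress")) {
            compress(Paths.get(args[1]), Paths.get(args[2]));
        } else if (args.length == 3 && args[0].equals("verify")) {
            System.out.println(verify(Paths.get(args[1]), Paths.get(args[2])) ? "OK" : "MISMATCH");
        } else {
            System.err.println("usage: CrossCheck compress|verify <file> <file.gz>");
        }
    }
}
```

Running the two steps in both directions (MJDK compressing for OpenJDK, and vice versa) covers both halves of the compatibility claim.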

Meituan's internal document collaboration service now uses this MJDK version to compress and store users' collaborative editing records (> 6 MB). The feature has been verified to run stably in production, with compression performance improved by more than 5 times.

4 Authors

Yanmei, from the Meituan Basic R&D Platform.

5 References

Notes

[1] Data compression technology: reduces the redundancy of source data through suitable algorithms without losing useful information, thereby improving the efficiency of data storage, transmission, and processing.

[2] Lossless compression: compresses data by exploiting its statistical redundancy. Common lossless coding methods include Huffman coding, arithmetic coding, and LZ (dictionary) coding. Statistical redundancy typically allows compression ratios of only about 2:1 to 5:1, so lossless compression generally achieves lower compression ratios than lossy compression. It is widely used for text, programs, and other data that must be stored exactly.

[3] Lossy compression: exploits the insensitivity of human vision and hearing to certain frequency components of images and sounds, allowing some information to be discarded during compression in exchange for a higher compression ratio. It is widely used for voice, image, and video data.

[4] zlib: a fully open-source, general-purpose lossless data compression library based on the deflate algorithm, and the most widely used compression library. It is used extensively in network transmission, operating systems, image processing, and other fields, for example:

- Linux kernel: uses zlib for network protocol compression and file system compression, and to decompress its own kernel image at boot.
- libpng: the library implementing the PNG image format, which specifies DEFLATE as the stream compression method for bitmap data.
- HTTP: zlib is used to compress/decompress HTTP message bodies (e.g. with the gzip and deflate content encodings).
- OpenSSH and OpenSSL: use zlib to compress data in encrypted network transmission.
- Version control systems such as Subversion, Git, and CVS: use zlib to compress communication traffic with remote repositories.
- Package managers such as dpkg and RPM: use zlib to decompress RPM and other packages.

[5] mzlib: Meituan's optimized zlib compression library, built on Intel's ISA-L library.

[6] JDK: Java Development Kit, a free software development kit released by Sun for Java developers. It is one of the core components of Java development and includes the Java compiler, the Java virtual machine, the Java class library, and other development tools and resources.

[7] JNI (Java Native Interface): a native programming interface that allows Java code running in the Java virtual machine to interoperate with applications and libraries written in other languages such as C, C++, and assembly.
