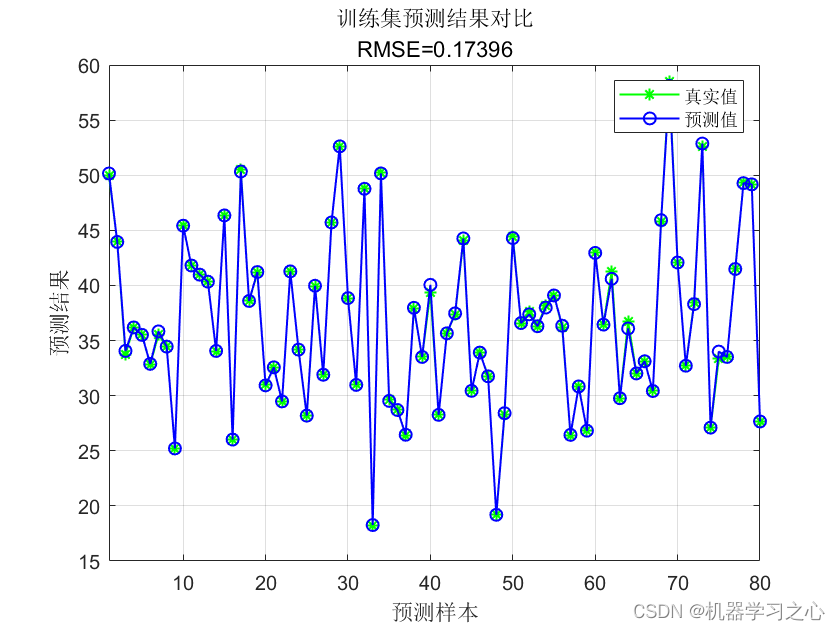

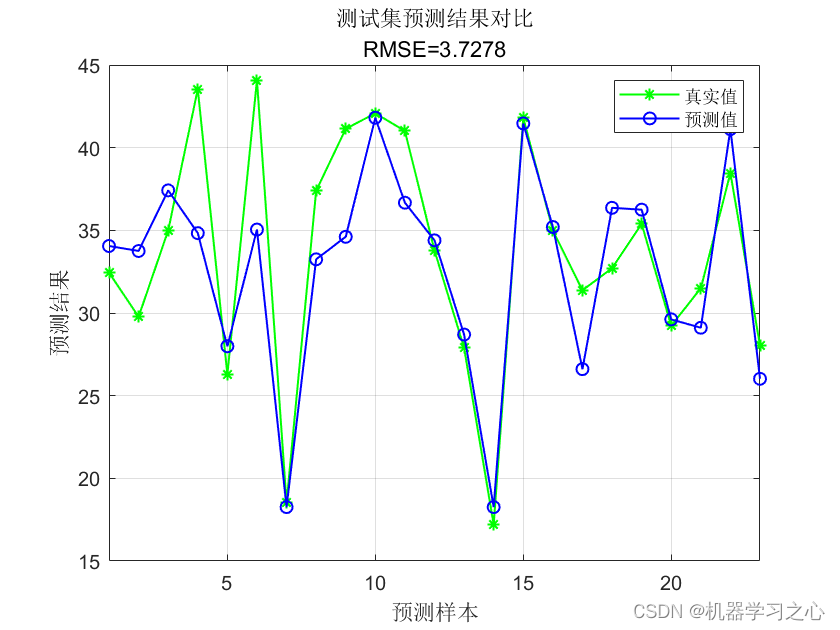

Regression prediction | MATLAB implementation of SSA-ELM: an extreme learning machine optimized by the sparrow search algorithm for multi-input single-output regression prediction (multiple metrics, multiple plots)

Results overview

Basic introduction

This post implements SSA-ELM in MATLAB: an extreme learning machine optimized by the sparrow search algorithm for multi-input single-output regression prediction. The model takes multiple input features and predicts a single output variable, and it is evaluated with several metrics (RMSE, R2, MAE and MBE) and several plots. The data is stored in an Excel file, so it is easy to replace with your own. The code requires MATLAB 2018 or later.

The sparrow search algorithm (SSA) is a heuristic optimization algorithm modeled on the foraging behavior of sparrows in nature. The extreme learning machine (ELM) is a machine learning algorithm for classification and regression problems. SSA-ELM uses the former to tune the latter.

First, you need to define the objective function you want to optimize. In an ELM, a loss function is usually used to measure the difference between the predicted result and the true result. You can use this loss function as the objective function and let SSA minimize it.

Next, choose the optimization variables. An ELM has parameters that need to be tuned, such as the number of neurons in the hidden layer and the connection weights between the input layer and the hidden layer. You can treat these parameters as optimization variables and adjust their values throughout the search to find the best combination.

The core of SSA is its simulation of sparrow foraging, which combines exploration and exploitation. The search strategy should explore globally while still converging to a good solution as quickly as possible. For example, randomness can be introduced to increase the diversity of the search, or a heuristic can be used to guide the search direction.

Finally, the optimizer itself needs to be evaluated and tuned. Its performance can be assessed by comparing it with other optimization algorithms or by running it on different test functions. Based on the results, adjust the parameters or strategies of the algorithm to improve its performance.
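To make the description above more concrete, here is a minimal sketch of how the pieces could fit together. It is not the author's code: it is a simplified SSA variant run on synthetic data, it tunes only the input weights and hidden biases while keeping the number of hidden neurons fixed, and every name and setting in it (`elm_fitness`, `pop`, `max_iter`, `ST`, the bounds, the swarm proportions) is an assumption chosen for illustration. The fitness of a candidate is the training RMSE of the ELM it encodes. Because it uses a local function at the end of a script, it needs MATLAB R2016b or later.

```matlab
% Simplified SSA-ELM sketch on synthetic data. All settings below are assumptions.
rng(0);                                   % reproducible random numbers
X = rand(7, 80);                          % 7 features x 80 samples (one sample per column)
T = sum(X, 1) + 0.05 * randn(1, 80);      % synthetic single output
L = 10;                                   % number of hidden neurons (assumed, kept fixed)
dim = L * (size(X, 1) + 1);               % input weights plus hidden biases
pop = 20;  max_iter = 30;                 % swarm size and iteration count (assumed)
lb = -1;   ub = 1;                        % search bounds (assumed)
n_prod  = round(0.2 * pop);               % producers: lead the search
n_aware = round(0.1 * pop);               % sparrows that sense danger
ST = 0.8;                                 % safety threshold

% Random initial swarm and its fitness
pos = lb + (ub - lb) * rand(pop, dim);
fit = zeros(pop, 1);
for i = 1 : pop
    fit(i) = elm_fitness(pos(i, :), X, T, L);
end
[best_fit, idx] = min(fit);
best_pos = pos(idx, :);
curve = zeros(1, max_iter);               % best fitness after each iteration

for t = 1 : max_iter
    [fit, order] = sort(fit);             % ascending, so row 1 is the best sparrow
    pos = pos(order, :);
    worst_pos = pos(end, :);
    new_pos = pos;

    % Producers: shrink their position when safe, take a random jump when alarmed
    alarm = rand;
    for i = 1 : n_prod
        if alarm < ST
            new_pos(i, :) = pos(i, :) * exp(-i / (rand * max_iter + eps));
        else
            new_pos(i, :) = pos(i, :) + randn * ones(1, dim);
        end
    end
    lead = new_pos(1, :);                 % simplification: follow the top-ranked producer

    % Scroungers: the worse half flies elsewhere, the rest move near the leader
    for i = n_prod + 1 : pop
        if i > pop / 2
            new_pos(i, :) = randn * exp((worst_pos - pos(i, :)) / i^2);
        else
            A = sign(rand(1, dim) - 0.5);             % random +1/-1 entries
            new_pos(i, :) = lead + (abs(pos(i, :) - lead) * A') / dim;
        end
    end

    % Danger-aware sparrows: move toward the best, or away from the worst
    for k = randperm(pop, n_aware)
        if fit(k) > best_fit
            new_pos(k, :) = best_pos + randn * abs(pos(k, :) - best_pos);
        else
            new_pos(k, :) = pos(k, :) + (2 * rand - 1) * abs(pos(k, :) - worst_pos) / (fit(k) - fit(end) + eps);
        end
    end

    % Clip to the bounds and keep a move only if it improves that sparrow
    new_pos = min(max(new_pos, lb), ub);
    for i = 1 : pop
        f_new = elm_fitness(new_pos(i, :), X, T, L);
        if f_new < fit(i)
            pos(i, :) = new_pos(i, :);
            fit(i) = f_new;
        end
    end
    [best_fit, idx] = min(fit);
    best_pos = pos(idx, :);
    curve(t) = best_fit;
end
fprintf('Best training RMSE found: %.4f\n', best_fit);

function rmse = elm_fitness(x, X, T, L)
    % Decode a candidate into input weights and biases, then fit the ELM output layer
    n  = size(X, 1);
    IW = reshape(x(1 : L * n), L, n);                 % L x n input weights
    B  = reshape(x(L * n + 1 : end), L, 1);           % L x 1 hidden biases
    H  = 1 ./ (1 + exp(-(IW * X + repmat(B, 1, size(X, 2)))));  % sigmoid hidden output
    beta = pinv(H') * T';                             % least-squares output weights
    rmse = sqrt(mean((H' * beta - T').^2));           % training RMSE is the fitness
end
```

Since a move is accepted only when it lowers a sparrow's own fitness, `curve` never increases, which makes it convenient for a convergence plot. Using the training RMSE as the fitness is one possible choice; a validation error would be a reasonable alternative if overfitting is a concern.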

Programming

- To obtain the complete source code and data, send the author a private message with the text: SSA-ELM sparrow search algorithm optimization extreme learning machine multi-input single-output regression prediction (multiple indicators, multiple graphs).

%% Clear the environment

warning off % suppress warning messages

close all % close any open figure windows

clear % clear workspace variables

clc % clear the command window

%% Import data

res = xlsread('data.xlsx');

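%% Split into training and test sets

temp = randperm(103); % shuffled sample indices; assumes data.xlsx has 103 rows

P_train = res(temp(1: 80), 1: 7)'; % training inputs: columns 1-7, one sample per column

T_train = res(temp(1: 80), 8)'; % training targets: column 8

M = size(P_train, 2); % number of training samples

P_test = res(temp(81: end), 1: 7)'; % test inputs: the remaining samples

T_test = res(temp(81: end), 8)'; % test targets

N = size(P_test, 2); % number of test samples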
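The split hard-codes 103 samples, 80 training rows and 7 feature columns. If data.xlsx is swapped for another dataset, one option is to derive these sizes from the data instead. The following is a minimal sketch under the assumption that the last column holds the target; `num_samples`, `num_features`, `train_ratio` and `num_train` are hypothetical names, and the random matrix only stands in for the Excel data.

```matlab
% Size-agnostic split; assumes the target is the last column of res.
rng(0);                                   % reproducible shuffle
res = rand(103, 8);                       % placeholder for xlsread('data.xlsx')

num_samples  = size(res, 1);              % rows are samples
num_features = size(res, 2) - 1;          % every column except the last is a feature
train_ratio  = 0.8;                       % hypothetical split ratio
num_train    = round(train_ratio * num_samples);

temp = randperm(num_samples);             % shuffled sample indices

P_train = res(temp(1 : num_train), 1 : num_features)';
T_train = res(temp(1 : num_train), end)';
M = size(P_train, 2);                     % number of training samples

P_test = res(temp(num_train + 1 : end), 1 : num_features)';
T_test = res(temp(num_train + 1 : end), end)';
N = size(P_test, 2);                      % number of test samples
```

Note that with 103 rows a ratio of 0.8 gives 82 training samples rather than the 80 used in the listing, so results would not match the original split exactly.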

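%% Normalize the data

[p_train, ps_input] = mapminmax(P_train, 0, 1); % scale each input feature to [0, 1]

p_test = mapminmax('apply', P_test, ps_input); % reuse the training-set scaling

[t_train, ps_output] = mapminmax(T_train, 0, 1); % scale the target to [0, 1]

t_test = mapminmax('apply', T_test, ps_output); % reuse the training-set scaling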
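For readers unfamiliar with `mapminmax`, the row-wise min-max scaling it performs can be written out by hand. The sketch below uses toy data and hypothetical helper names (`scale`, `unscale`); it assumes no feature row is constant, a case this simple formula does not handle because it would divide by zero.

```matlab
% Manual min-max scaling on toy data; assumes no feature row is constant.
P_train = [2 4 6; 10 20 40];              % 2 features x 3 training samples
P_test  = [8; 25];                        % 1 test sample
ymin = 0;  ymax = 1;                      % target range, as in mapminmax(P, 0, 1)

xmin = min(P_train, [], 2);               % per-feature minimum from the training set
xmax = max(P_train, [], 2);               % per-feature maximum from the training set

% Forward and inverse maps, both built from training-set statistics only
scale = @(P) (ymax - ymin) * (P - repmat(xmin, 1, size(P, 2))) ...
        ./ repmat(xmax - xmin, 1, size(P, 2)) + ymin;
unscale = @(p) (p - ymin) .* repmat(xmax - xmin, 1, size(p, 2)) ...
        / (ymax - ymin) + repmat(xmin, 1, size(p, 2));

p_train = scale(P_train);                 % rows: [0 0.5 1] and [0 1/3 1]
p_test  = scale(P_test);                  % [1.5; 0.5], feature 1 falls outside [0, 1]
P_back  = unscale(p_train);               % recovers P_train up to rounding
```

The test sample shows why the scaling parameters are taken from the training set only: unseen data may land outside [0, 1], and that is expected rather than an error.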

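%% Simulation test

% Note: net is the trained model. The SSA-ELM training step that creates it is not included in this excerpt.

t_sim1 = sim(net, p_train); % predictions on the training set

t_sim2 = sim(net, p_test); % predictions on the test set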

%% Denormalize the data

T_sim1 = mapminmax('reverse', t_sim1, ps_output);

T_sim2 = mapminmax('reverse', t_sim2, ps_output);

%% Root mean square error (RMSE)

error1 = sqrt(sum((T_sim1 - T_train).^2) ./ M);

error2 = sqrt(sum((T_sim2 - T_test ).^2) ./ N);

%% Compute evaluation metrics

% Coefficient of determination (R2)

R1 = 1 - norm(T_train - T_sim1)^2 / norm(T_train - mean(T_train))^2;

R2 = 1 - norm(T_test - T_sim2)^2 / norm(T_test - mean(T_test ))^2;

disp(['R2 on the training set: ', num2str(R1)])

disp(['R2 on the test set: ', num2str(R2)])

% Mean absolute error (MAE)

mae1 = sum(abs(T_sim1 - T_train)) ./ M ;

mae2 = sum(abs(T_sim2 - T_test )) ./ N ;

disp(['MAE on the training set: ', num2str(mae1)])

disp(['MAE on the test set: ', num2str(mae2)])

% Mean bias error (MBE)

mbe1 = sum(T_sim1 - T_train) ./ M ;

mbe2 = sum(T_sim2 - T_test ) ./ N ;

disp(['MBE on the training set: ', num2str(mbe1)])

disp(['MBE on the test set: ', num2str(mbe2)])
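To see how the four metrics behave, it can help to apply the same formulas to a small hand-checkable example. The values below are made up for illustration and the variable names are hypothetical.

```matlab
% Toy check of the RMSE, R2, MAE and MBE formulas used in the listing.
T = [1 2 3 4];                            % true values (made up)
Y = [1.1 1.9 3.2 3.8];                    % predictions (made up)
n = numel(T);

rmse = sqrt(sum((Y - T).^2) / n);                     % root mean square error
r2   = 1 - norm(T - Y)^2 / norm(T - mean(T))^2;       % coefficient of determination
mae  = sum(abs(Y - T)) / n;                           % mean absolute error
mbe  = sum(Y - T) / n;                                % mean bias error (signed)

fprintf('RMSE = %.4f, R2 = %.4f, MAE = %.4f, MBE = %.4f\n', rmse, r2, mae, mbe);
```

Here the errors are +0.1, -0.1, +0.2 and -0.2, so the MAE is about 0.15 and the RMSE about 0.158, while the MBE is approximately 0 because positive and negative errors cancel. MBE therefore measures systematic over- or under-prediction, not the size of the errors, and R2 comes out at about 0.98.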
