Random Forest in OpenCV is a powerful machine learning algorithm designed to solve classification and regression problems. Random forests make predictions using multiple decision trees, each consisting of randomly selected samples and features. In classification problems, random forests determine the final class by voting; in regression problems, random forests obtain the final prediction by averaging the predictions of all decision trees.

The basic idea of random forest

Random forest is a supervised learning algorithm. The "forest" it builds is a collection of decision trees, usually integrated using the Bagging algorithm . Random forest first uses the trained classifier set to classify new samples, and then counts the results of all decision trees by majority voting or averaging the output. Since each decision tree in the forest is independent, it can be understood as a research "expert" in a certain aspect, so it can obtain better performance than a single decision tree by voting and averaging.

Bagging algorithm

Since random forests usually use the Bagging algorithm to integrate decision trees, it is necessary to understand the workflow and principles of the Bagging algorithm. The classification accuracy of some classifiers is sometimes only slightly better than random guessing, such classifiers are called weak classifiers. In order to improve the performance of the classifier, the method of ensemble learning (Ensemble Learning) is usually used to combine several weak classifiers to generate a strong classifier.

Bagging algorithm and Boosting algorithm are basic algorithms in the field of integrated learning.

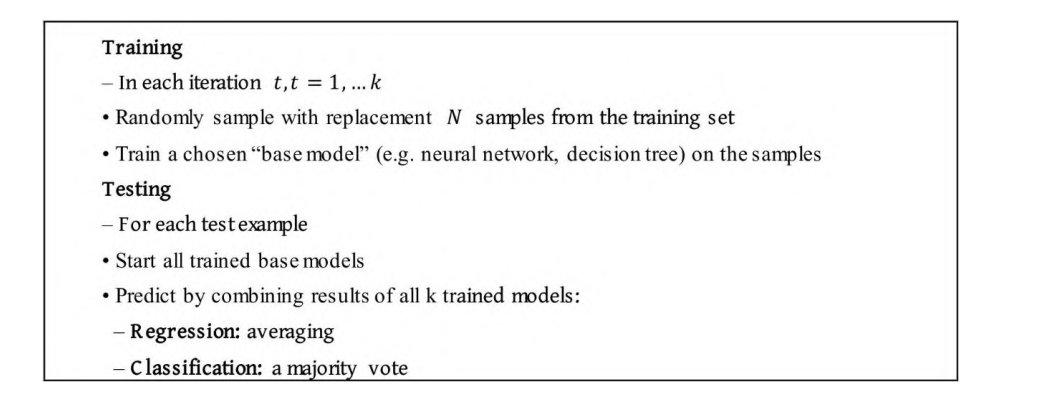

The flow of the Bagging algorithm is as follows

It can be seen that the process of the Bagging algorithm is divided into two stages: training and testing.

Training phase : Use the Bootstrapping sampling method to randomly select N training samples from the original training set, then put the N training samples back into the original training set, and perform k rounds of extraction to obtain k training subsets. Using these k training subsets, train k basic models (the basic model can be a decision tree or a neural network, etc.).

Test phase : For each test sample, all trained basic models are used to make predictions; then the results of all k basic models are combined to make predictions. If it is a regression problem, the prediction average of the k basic models is used as the final prediction result; if it is a classification problem, the classification results of the k basic models are voted on, and the category with the most votes is the final classification result.

Application Scenario

The random forest algorithm is an ensemble learning method that is mainly used to solve classification and regression problems. Application scenarios include:

- Commodity recommendation system : Based on the user's historical behavior, purchase records and other data, it can predict the products that the user may like, so as to make accurate recommendations.

- Medical diagnosis : Based on the patient's basic information, symptoms and other data, it can predict whether the patient is suffering from a certain disease, and give a diagnosis and treatment plan.

- Financial risk assessment : By analyzing the customer's personal credit record, income and other data, predict the customer's default probability, and help the bank formulate a personalized credit plan.

- Stock prediction : By analyzing historical stock prices, financial data and other information, predict future stock price trends.

- Image recognition : It is possible to classify images, such as classifying animal images, and detecting whether there are cats, dogs and other animals in the image.

- Natural language processing : Text classification can be performed, such as classifying news, judging whether an article belongs to international news, sports news, etc.

The following is an example of implementing a random forest classifier with OpenCV. The specific steps are as follows:

- import the necessary libraries

import numpy as np

import cv2

- Prepare training data and labels

features = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], np.float32)

labels = np.array([0, 1, 1, 0], np.float32)

- Initialize the random forest classifier

rf = cv2.ml.RTrees_create()

- Set training parameters

params = cv2.ml.RTrees_Params()

params.max_depth = 2

params.min_sample_count = 1

params.calc_var_importance = True

- train random forest

rf.train(cv2.ml.TrainData_create(features, cv2.ml.ROW_SAMPLE, labels), cv2.ml.ROW_SAMPLE, params=params)

- predict

pred = rf.predict(np.array([[0, 0]], np.float32))

print(pred)

This will output the predicted label.

Full code:

import numpy as np

import cv2

# 生成示例数据

data = np.random.randint(0, 100, (100, 2)).astype(np.float32)

responses = (data[:, 0] + data[:, 1] > 100).astype(np.float32)

# 创建并训练随机森林分类器

rf = cv2.ml.RTrees_create()

# 设置终止条件(最大迭代次数,最大迭代次数,最小变化值)

rf.setTermCriteria((cv2.TERM_CRITERIA_MAX_ITER, 100, 0.01))

# 设置随机森林的最大深度

rf.setMaxDepth(10)

# 设置每个叶子节点的最小样本数量

rf.setMinSampleCount(2)

# 设置回归精度(对分类问题不适用)

rf.setRegressionAccuracy(0)

# 设置是否使用代理(对分类问题不适用)

rf.setUseSurrogates(False)

# 设置是否计算变量重要性

rf.setCalculateVarImportance(True)

# 训练随机森林分类器

rf.train(data, cv2.ml.ROW_SAMPLE, responses)

# 测试分类器

test_data = np.array([[30, 70], [70, 30]], dtype=np.float32)

_, results = rf.predict(test_data)

print("Predictions:", results.ravel())

Official document address

, click to jump

https://docs.opencv.org/2.4/modules/ml/doc/decision_trees.html#cvdtreeparams