Generative AI is undoubtedly one of the technologies that most captures the imagination in the current era.

Capital, entrepreneurs, and ordinary people are all pouring into generative AI: the "100-model war" started overnight, financing has reached new highs, and new consumer product concepts keep emerging. According to a Bloomberg Intelligence report, the generative AI market was worth only 40 billion US dollars in 2022 and is expected to exceed 1.3 trillion US dollars by 2032, a compound annual growth rate of 42% over the next 10 years.
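As a quick sanity check on these figures, the sketch below compounds the 2022 market size at the quoted growth rate. The function name is our own, and the inputs are only the rounded numbers cited above.

```python
def compound(start_value: float, annual_rate: float, years: int) -> float:
    """Value of `start_value` after growing at `annual_rate` for `years` years."""
    return start_value * (1 + annual_rate) ** years


# Rounded figures quoted above: 40 billion USD in 2022, 42% a year, 10 years.
projected_2032 = compound(40e9, 0.42, 10)
print(f"{projected_2032 / 1e12:.2f} trillion USD")
```

The result lands slightly above 1.3 trillion US dollars, so the three quoted numbers are consistent with one another.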

The surface looks lively, but are the adoption and commercialization of generative AI technology really as strong as we imagine?

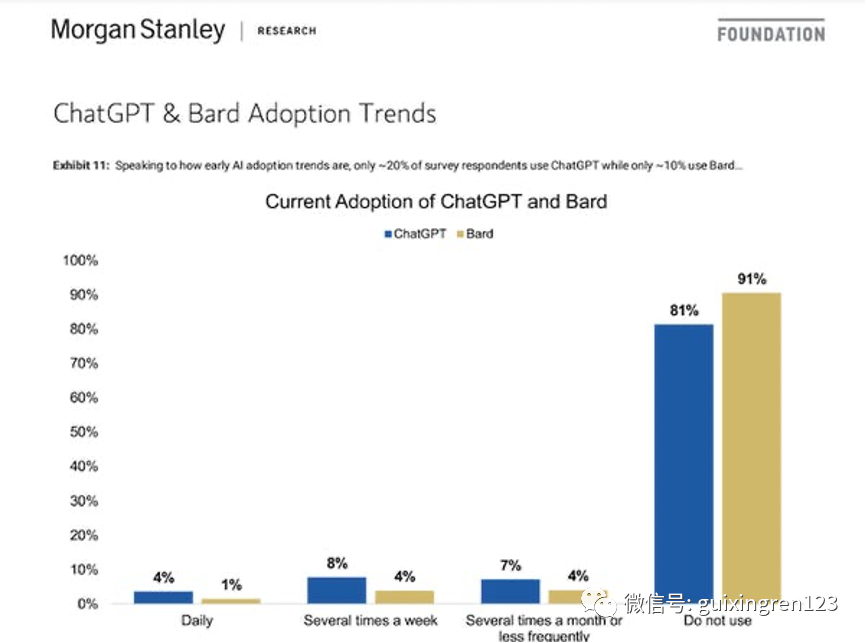

After a period of explosive growth, visits to almost all generative AI chat products have declined to varying degrees since June. A recent user survey shows that roughly 80% to 90% of respondents said they do not plan to use ChatGPT, Bard, or other chat tools in the next six months. From the consumer's perspective, generative AI products seem to be regarded more as a trendy toy than as a tool for continuous use.

On the enterprise side, this phenomenon is even more obvious. Once people switch to work mode, generative AI tools rarely appear in their workflows, and many large companies have even banned or restricted them.

For the commercialization of a relatively mature technology, more than six months is not a short time. Yet the excitement about generative AI still seems to center on large models and product concepts, and the thriving ecosystem and transformative impact on the economy and society that people expect have not yet arrived.

So, what is holding back its development?

Difficulties in putting generative AI into practice: how to break the "wall" between the base model and developers?

Nobody wants to miss out on the generative AI wave. However, the current ultra-high barriers to entry for generative AI keep most players out.

In the past few years, model training through "deep learning + large computing power" has been the mainstream technical route to artificial intelligence. However, the commercialization of large models must first come back to cost accounting.

First of all, large models demand enormous computing power and are giant "gold-swallowing beasts". Training a GPT-3.5 model is estimated to cost between 3 million and 4.6 million US dollars, and training some larger language models costs as much as 12 million US dollars. Self-developed large models are a "bottomless pit" that start-ups without strong financial backing cannot afford.

In addition, a general-purpose model cannot solve every problem, and what it can do for an enterprise out of the box is limited. Large models are trained on public Internet data, and many products are relatively isolated: they do not form a coherent end-to-end workflow and cannot be customized. This means developers need to do a lot of custom tuning with private data, so the threshold for development and training is extremely high.

Due to the huge investment in the early stage, even after a large model is commercialized, it often takes a long time to become profitable. Therefore, for generative AI to be truly effective across industries, an affordable, efficient, low-threshold solution is urgently needed so that more people can take part in its development.

So how can the gap from base model to end application be bridged? A cloud platform that provides one-stop, professionally managed AI hosting services may be the best solution at present.

The cloud platform has sufficient and flexible computing resources, so small and medium-sized enterprises can meet their own development needs without purchasing and maintaining expensive hardware. Through APIs and SDKs, users can easily call third-party resources and packaged services on the cloud platform, connect their applications and services to it seamlessly, and simplify the development process as far as possible.

In addition, the cloud platform can also help solve data privacy and security issues. In the past few months, many large companies, including Apple, Samsung, TSMC, and Bank of America, have issued policies prohibiting employees from using ChatGPT and have begun to develop their own large models. For small and medium-sized enterprises that cannot develop their own, choosing a cloud platform that provides security measures such as data encryption, identity verification, and compliance tools is a good low-cost option.

Faced with the current wave of generative AI, do cloud platforms already have the capability to support large model development and provide full-process services for generative AI?

At the just-concluded Amazon Cloud Technology New York Summit, we saw a complete cloud-based generative AI solution.

Amazon Cloud Technology Creates a New Paradigm of Inclusive Generative AI

This time, Amazon Cloud Technology continued its consistently "pragmatic" style: it targeted the pain points of putting generative AI applications into practice and launched a series of new features and services. From hardware to software, and from development to application, it is trying to create the most complete and most capable generative AI service platform.

Amazon Bedrock Service: Build a "fast track" for generative AI development

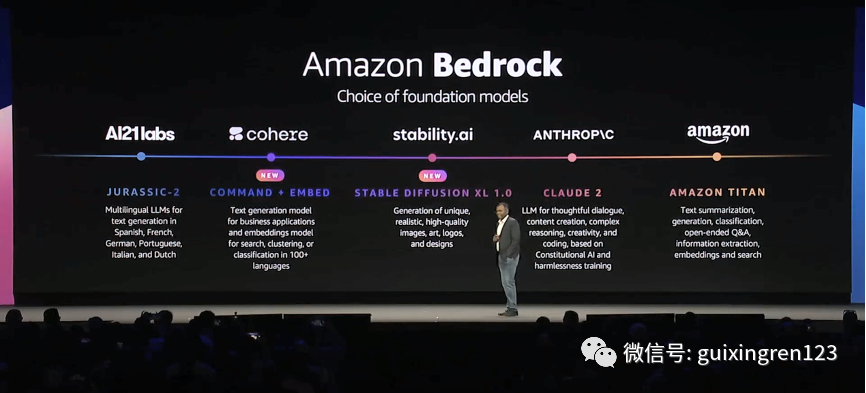

In response to the high cost of base model training and the complexity of environment deployment on the development side, Amazon Cloud Technology first announced the Amazon Bedrock service in April this year. Through this scalable, reliable, and secure managed service, users can call an API to access base models from different vendors and use them to build generative AI applications.

At that time, in addition to Amazon's own Titan models, the first third-party base models included AI21 Labs' Jurassic-2, Anthropic's Claude, and Stability AI's Stable Diffusion. At the New York Summit, Amazon announced that it had added Cohere, one of the largest unicorns in generative AI, as a provider, along with Anthropic's latest language model Claude 2 and Stability AI's latest text-to-image model suite, Stable Diffusion XL 1.0.

Amazon Cloud Technology believes that, in the future, no single model will rule everything. By continuously integrating the industry's leading base models, Amazon Bedrock will let users conveniently call the model best suited to their needs.
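To make "calling a base model through an API" concrete, here is a minimal sketch of what such a call can look like from Python. It assumes the `bedrock-runtime` client in boto3 and its `invoke_model` operation, the model ID `anthropic.claude-v2`, and Anthropic's text-completion request format (`prompt`, `max_tokens_to_sample`); none of these details come from the announcement itself, and the helper names are our own.

```python
import json


def build_claude_request(question: str, max_tokens: int = 300) -> str:
    """Build a JSON request body in the text-completion format assumed for Claude."""
    return json.dumps({
        "prompt": f"\n\nHuman: {question}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def ask_model(client, model_id: str, question: str) -> str:
    """Send one question to a hosted model and return its text reply.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.invoke_model(
        modelId=model_id,
        contentType="application/json",
        accept="application/json",
        body=build_claude_request(question),
    )
    # The response body is a stream containing JSON; "completion" holds the text.
    return json.loads(response["body"].read())["completion"]


# Hypothetical usage (requires AWS credentials and model access):
# import boto3
# client = boto3.client("bedrock-runtime", region_name="us-east-1")
# print(ask_model(client, "anthropic.claude-v2", "Summarize our return policy."))
```

In this sketch, choosing a different model mostly means changing `model_id`, although each model family expects its own request body, so `build_claude_request` would need a counterpart for other vendors.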

But once the base model is available, a thorny problem remains: how can these models be used for customized application development? The cloud platform also needs to address learning from private data, system integration and debugging, and automatic task execution.

Take an example we often encounter in daily life: returning or exchanging an e-commerce purchase. You bought a pair of shoes on an e-commerce platform, are not satisfied, and want to ask customer service to change the color. If the customer service agent is a general chatbot such as ChatGPT, how will it answer? "Sorry, my training data only goes up to September 2021, and I have no information about this pair of shoes."

To make the large model really work, the first step is to “feed” it, in advance, all the company’s internal information related to the shoes, including the shoe’s models and colors, the platform’s return policy, inventory information, and so on, so that the model can give accurate answers. Besides providing information, the AI also needs to carry out all the operations involved in the exchange in the background, in an orderly and secure way, while it chats.

This used to be a huge undertaking for developers, but now, Amazon has made it easy with a new service called Amazon Bedrock Agents.

The new Amazon Bedrock Agents service builds on the base model and packages dialogue definition, retrieval and analysis of information external to the model, API calls, task execution, and more into a fully managed service, so that it can return timely, targeted results.

In this way, developers do not need to spend a lot of money developing their own base models from scratch, nor a lot of time and manpower on custom deployment and tuning of models, so they can focus on building and operating AI applications. Small and medium-sized developers without deep capital or technical resources can also join the wave of generative AI.
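The shoe-exchange scenario can be sketched as a toy agent loop: consult company policy, check live inventory, then execute the action. This is not the Amazon Bedrock Agents API; `ShopBackend`, `handle_exchange`, and all the data are hypothetical stand-ins, used only to show the kind of orchestration such a managed service takes over.

```python
from dataclasses import dataclass, field


@dataclass
class ShopBackend:
    """Hypothetical stand-in for a company's private data and internal APIs."""
    inventory: dict = field(default_factory=lambda: {
        ("runner-01", "black"): 3,
        ("runner-01", "white"): 0,
    })
    return_window_days: int = 30
    exchanges: list = field(default_factory=list)

    def in_stock(self, sku: str, color: str) -> bool:
        return self.inventory.get((sku, color), 0) > 0

    def create_exchange(self, order_id: str, sku: str, color: str) -> None:
        # Reserve one unit and record the exchange request.
        self.inventory[(sku, color)] -= 1
        self.exchanges.append((order_id, sku, color))


def handle_exchange(backend, order_id, sku, new_color, days_since_purchase):
    """Answer a color-exchange request using private data, then act on it."""
    # Step 1: ground the answer in the company's own return policy.
    if days_since_purchase > backend.return_window_days:
        return (f"Sorry, order {order_id} is outside the "
                f"{backend.return_window_days}-day exchange window.")
    # Step 2: check live inventory instead of relying on training data.
    if not backend.in_stock(sku, new_color):
        return f"Sorry, {sku} in {new_color} is out of stock."
    # Step 3: carry out the exchange in the background.
    backend.create_exchange(order_id, sku, new_color)
    return f"Done: order {order_id} will be exchanged for {sku} in {new_color}."


backend = ShopBackend()
print(handle_exchange(backend, "A100", "runner-01", "black", 5))
```

In a real deployment, a language model would interpret the customer's message and decide which of these steps to invoke; here the steps are hard-coded to keep the sketch short.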

"Vector data + hardware computing power" as a dual escort: forging the strongest brain + strongest base for application development

The custom development of the model requires not only professional hosting services such as Amazon Bedrock, but also other related capabilities such as computing, storage, and security to ensure the continuous availability and iterative upgrade of the model.

Undoubtedly, data is the foundation on which artificial intelligence emerges and develops. To learn and understand the complexity of human language, generative AI needs a large amount of training data, and this data usually exists in the form of "vectors", that is, natural language converted into numbers that computers can understand and process.

So, what is vector data, and why is it critical to the development of generative AI?

Suppose you are using a music recommendation app. We can quantify each song along three features, such as rhythm, lyrics, and melody. For example, the first song is (120, 60, 80) and the second is (100, 80, 70). When you tell the system that you like the rhythm of the first song, it looks up that song's rhythm value, "120", searches the database for other vectors similar to it, and then recommends songs with similar characteristics to you.
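The music example can be sketched in a few lines of Python. The first two vectors are the ones from the text; `song_3` and `song_4` are invented so that the search has something to rank, and the sketch compares whole vectors by Euclidean distance, which is only one of several possible similarity measures.

```python
import math

# (rhythm, lyrics, melody) scores; song_3 and song_4 are made-up examples.
songs = {
    "song_1": (120, 60, 80),
    "song_2": (100, 80, 70),
    "song_3": (118, 62, 78),
    "song_4": (60, 90, 40),
}


def most_similar(target: str, catalog: dict, k: int = 1) -> list:
    """Return the names of the k songs closest to `target` by Euclidean distance."""
    scored = [
        (name, math.dist(catalog[target], vector))
        for name, vector in catalog.items()
        if name != target
    ]
    scored.sort(key=lambda pair: pair[1])  # smallest distance first
    return [name for name, _ in scored[:k]]


print(most_similar("song_1", songs, k=2))
```

A vector database performs the same kind of nearest-neighbor search, but over millions or billions of high-dimensional vectors and with index structures that avoid comparing against every entry.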

Of course, data is not limited to three dimensions; an item can be described along many more. In natural language processing, "word vectors" produced by word embedding techniques usually have hundreds of dimensions, while in image processing, image vectors built from pixel values may have thousands to millions of dimensions. The "vectorized" data is stored in a vector database so that the most relevant or similar data can be retrieved efficiently in a high-dimensional space.

However, vectorizing and storing data is not an easy task, and it often takes a lot of manpower and time. In response to this problem, Amazon Cloud Technology has launched a vector engine for Amazon OpenSearch Serverless. The vector engine supports simple API calls and can be used to store and query billions of embeddings (the result of mapping high-dimensional data into a lower-dimensional space). Amazon Cloud Technology also stated that, in the future, all of its databases will have vector capabilities and will become the developer's "most powerful brain" at the AI data level.
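As a rough illustration of what "simple API calls" for storing and querying embeddings might involve, the sketch below builds the two request bodies in the style of the OpenSearch k-NN plugin: an index mapping with a `knn_vector` field, and a `knn` query. We assume the vector engine accepts this general shape; the field names `embedding` and `title` and the helper functions are our own.

```python
def knn_index_body(field_name: str, dimension: int) -> dict:
    """Index definition with one vector field (OpenSearch k-NN style, assumed)."""
    return {
        "settings": {"index.knn": True},
        "mappings": {
            "properties": {
                field_name: {"type": "knn_vector", "dimension": dimension},
                "title": {"type": "text"},
            }
        },
    }


def knn_query_body(field_name: str, vector: list, k: int) -> dict:
    """Query asking for the k stored vectors nearest to `vector`."""
    return {
        "size": k,
        "query": {"knn": {field_name: {"vector": vector, "k": k}}},
    }


index_body = knn_index_body("embedding", dimension=3)
query_body = knn_query_body("embedding", [120, 60, 80], k=2)

# Hypothetical usage with an OpenSearch client object named `client`:
# client.indices.create(index="songs", body=index_body)
# client.search(index="songs", body=query_body)
```

The bodies are plain dictionaries, so they can be inspected or logged before being sent to any endpoint.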

In addition to the support of the vector engine, in terms of computing power, Amazon Cloud Technology has also been committed to building low-cost, low-latency cloud infrastructure.

Amazon Cloud Technology and NVIDIA have cooperated for more than 12 years to provide large-scale, low-cost GPU solutions for applications such as artificial intelligence, machine learning, graphics, games, and high-performance computing, and Amazon Cloud Technology has extensive experience in delivering GPU-based instances. This time, it demonstrated the latest P5 instances, powered by NVIDIA H100 Tensor Core GPUs, which offer lower latency and efficient horizontal scaling.

The P5 instances will be the first GPU instances to take advantage of Amazon Cloud Technology's second-generation Amazon Elastic Fabric Adapter (EFA) networking technology. Compared with the previous generation, P5 instances can shorten training time by up to a factor of 6, from days to hours. This performance improvement will help customers reduce training costs by up to 40%. With the second-generation Amazon EFA, users can scale their P5 instances to more than 20,000 NVIDIA H100 GPUs, providing the supercomputing power required by customers of all sizes, from start-ups to large enterprises.
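To put the "up to" figures in perspective, the short calculation below applies them to a hypothetical training job. The 72-hour, 100,000-dollar baseline is invented for illustration, and the figure of 8 GPUs per P5 instance is our assumption about the instance size rather than something stated above.

```python
import math

GPUS_PER_P5_INSTANCE = 8  # assumption: one P5 instance carries 8 H100 GPUs


def best_case(baseline_hours, baseline_cost, speedup=6.0, cost_saving=0.40):
    """Apply the quoted best-case speedup and cost saving to a baseline job."""
    return baseline_hours / speedup, baseline_cost * (1 - cost_saving)


def instances_needed(gpu_count: int) -> int:
    """Number of instances required to reach a given GPU count."""
    return math.ceil(gpu_count / GPUS_PER_P5_INSTANCE)


# Hypothetical baseline: a 3-day job costing 100,000 US dollars.
hours, cost = best_case(baseline_hours=72, baseline_cost=100_000)
print(f"best case: {hours:.0f} hours, {cost:,.0f} US dollars")
print(f"instances for 20,000 GPUs: {instances_needed(20_000)}")
```

Since both figures are upper bounds, real workloads should expect smaller gains.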

Lower the threshold of generative AI and maximize the empowerment of users with products

In addition to tools and platforms for generative AI development, enterprises also need ready-to-use generative AI products in their daily operations to help improve work and management efficiency. To this end, Amazon Cloud Technology has successively launched products that can be used directly in work scenarios. These products serve not only developers but also the large number of non-technical staff in an enterprise.

For example, in the field of code development, since Amazon Cloud Technology first launched the AI programming assistant Amazon CodeWhisperer in June last year, it has become a daily must-have tool for many developers.

Trained on billions of lines of open source code, Amazon CodeWhisperer can generate code suggestions in real time based on code comments and existing code, and can also scan for security vulnerabilities. It currently supports 15 programming languages, including Python, Java, and JavaScript, and integrated development environments including VS Code, IntelliJ IDEA, JupyterLab, and Amazon SageMaker Studio.

In order to further improve development efficiency, at the New York Summit, Amazon Cloud Technology officially announced that Amazon Glue Studio Notebooks can also support Amazon CodeWhisperer. With Amazon Glue Studio Notebooks, developers can write specific tasks in natural language, and then Amazon CodeWhisperer can recommend one or more code snippets that can accomplish this task directly in Notebooks for developers to use and edit directly.
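The workflow looks roughly like the snippet below: the developer writes a plain-language comment, and the assistant proposes code to fulfil it. The function here was written by hand for illustration and is not actual Amazon CodeWhisperer output.

```python
import csv
import io

# Task written by the developer as a plain-language comment:
# "Convert a list of dictionaries to a CSV string with a header row."


def dicts_to_csv(rows: list) -> str:
    """The kind of suggestion an assistant might offer for the comment above."""
    if not rows:
        return ""
    buffer = io.StringIO()
    # Use the keys of the first record as the column order.
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(dicts_to_csv([{"sku": "runner-01", "color": "black"}]))
```

The developer stays in control: a suggestion like this can be accepted as is, edited, or rejected.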

Languages and environments supported by Amazon CodeWhisperer (image source: the official website of Amazon Cloud Technology)

For non-development work scenarios, Amazon Cloud Technology combines Amazon Bedrock's large language model capabilities with Amazon QuickSight Q, which supports natural language question answering, to provide users with new business intelligence services based on generative AI.

For example, if you are a financial analyst, you can give commands in natural language, just like chatting with ChatGPT. Amazon QuickSight Q can then find key financial information or create a visualization of a company's finances in a few seconds, and it can also summarize trends and make recommendations for you.

Similar out-of-the-box products include Amazon Entity Resolution, which helps companies break internal information silos and accelerate data-driven decision-making, and Amazon HealthScribe, which helps medical software suppliers easily build clinical applications based on generative AI, etc. All walks of life are expanding the usage scenarios of generative AI products.

Unleash the "cloud power" in the AI era

The development of generative AI requires the cloud, and more importantly, a large number of cloud-based tools and services.

After large models, the next stage of generative AI technology will definitely move toward diversity and personalization. We will see not only general productivity tools but also AI products targeting specific scenarios. In this process, the cloud platform will play an increasingly critical role.

On the one hand, the cloud platform will greatly lower the threshold for AI application development. With the platform's computing power and base models behind them, developers barely need to worry about hardware and infrastructure, so they can spend more time and energy on business and operations. On the other hand, the cloud platform can keep improving the efficiency of developing and operating AI applications. Users can develop and manage applications by calling APIs directly and share them safely and conveniently within teams or organizations.

With the help of the cloud platform, generative AI will no longer be just a "money-burning game" that only giants can play; more ordinary players will also be able to take a seat at the table.

As one of the industry leaders in cloud services, Amazon Cloud Technology provides more than 200 services covering computing, storage, databases, networking, developer tools, security, analytics, the Internet of Things, and enterprise applications, with facilities spanning the globe. At the same time, Amazon Cloud Technology is also a leader in artificial intelligence and machine learning. Over the years, it has continuously provided and updated a series of end-to-end AI-related services, allowing developers to develop and deploy generative AI applications flexibly, conveniently, and at low cost.

This time, Amazon Cloud Technology released a "family bucket", a full suite of generative AI tools for the development and application of AI.

The importance of generative AI lies not in how powerful the model is but, more importantly, in how it can evolve from a base model into specific applications in various fields, thereby empowering the development of the entire economy and society.

Now, Amazon Cloud Technology is becoming that bridge.