face recognition

I. Overview of Pose Estimation

1. Overview

In many applications we need to know how the head is oriented relative to the camera. In a virtual reality application, for example, the head pose determines which view of the scene to render. In a driver assistance system, an in-vehicle camera watching the driver's face can use head pose estimation to check whether the driver is paying attention to the road. Head gestures can also control hands-free apps and games; shaking your head from side to side, for instance, might mean "no."

2. Pose estimation

In computer vision, the pose of an object refers to its relative orientation and position with respect to the camera. You can change the pose by moving the object relative to the camera or by moving the camera relative to the object.

In computer vision terminology, the pose estimation problem is often called the Perspective-n-Point problem, or PnP. The goal is to find the pose of an object given a calibrated camera, the positions of n 3D points on the object, and the corresponding 2D projections of those points in the image.

3. Representing camera motion mathematically

A 3D rigid body has only two types of motion relative to the camera.

Translation: Moving the camera from its current 3D position (X, Y, Z) to a new 3D position (X', Y', Z') is called translation. Translation has 3 degrees of freedom: you can move in the X, Y, or Z direction. A translation is represented by a vector $\mathbf{t}$ equal to (X' - X, Y' - Y, Z' - Z).

Rotation: You can also rotate around the X, Y, and Z axes, so rotation also has three degrees of freedom. There are many ways to represent a rotation: Euler angles (roll, pitch, and yaw), a 3×3 rotation matrix, or a direction of rotation (i.e., an axis) and an angle.

Therefore, estimating the pose of a 3D object means finding 6 numbers - three for translation and three for rotation.
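To make the "six numbers" concrete, here is a minimal sketch that converts an axis-angle rotation vector into a 3×3 rotation matrix with Rodrigues' formula. The function name `rodrigues` and the sample pose values are illustrative assumptions; OpenCV offers the same conversion through `cv2.Rodrigues`.

```python
import math

def rodrigues(rvec):
    """Convert an axis-angle rotation vector (3 numbers) into a 3x3 rotation matrix."""
    rx, ry, rz = rvec
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)  # rotation angle in radians
    if theta < 1e-12:
        # No rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

# A pose is six numbers: three for rotation, three for translation (hypothetical values).
rvec = (0.0, 0.0, math.pi / 2)  # 90 degrees about the Z axis
tvec = (10.0, 0.0, 500.0)
R = rodrigues(rvec)
for row in R:
    print([round(value, 6) for value in row])
```

The nine entries of the matrix are fully determined by the three numbers in `rvec`, which is why rotation contributes only three degrees of freedom.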

4. What is needed for pose estimation

To calculate the 3D pose of an object in an image, you need the following information:

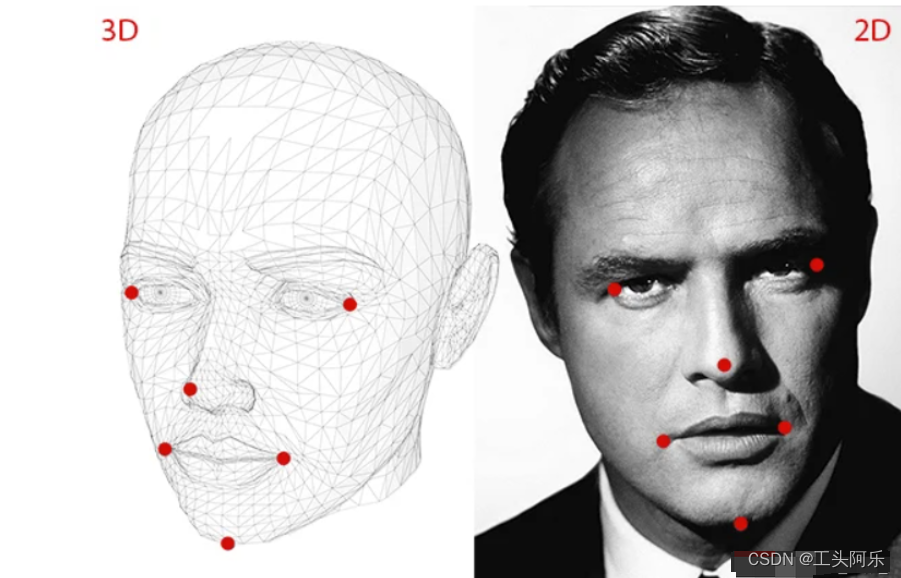

(1) 2D coordinates of several points

You need the 2D (x,y) positions of several points in the image. For faces, you can choose the corners of the eyes, the tip of the nose, the corners of the mouth, etc. Dlib's facial feature detector gives us many points to choose from. For this tutorial, we will use the tip of the nose, the chin, the left corner of the left eye, the right corner of the right eye, the left corner of the mouth, and the right corner of the mouth.

(2) 3D positions of the same points

You also need the 3D positions of the 2D feature points. You might think that you need a 3D model of the person in the photo to get the 3D locations. Ideally yes, but in practice you don't: a generic 3D model is sufficient. Where do you get a 3D model of a head? Well, you don't really need a full 3D model. All you need is the 3D positions of a few points in some arbitrary frame of reference. In this tutorial we will use the following 3D points.

Nose tip: (0.0, 0.0, 0.0)

Chin: (0.0, -330.0, -65.0)

Left corner of the left eye: (-225.0, 170.0, -135.0)

Right corner of the right eye: (225.0, 170.0, -135.0)

Left corner of the mouth: (-150.0, -150.0, -125.0)

Right corner of the mouth: (150.0, -150.0, -125.0)

The above points lie in some arbitrary reference frame/coordinate system. This is called world coordinates (also called model coordinates in the OpenCV documentation).

Four coordinate systems involved in image processing:

(3) Intrinsic parameters of the camera

As mentioned earlier, this problem assumes a calibrated camera. In other words, you need to know the focal length of the camera, the optical center in the image, and the radial distortion parameters, which normally means calibrating your camera. However, just as a generic 3D model can stand in for an exact one, approximate intrinsics are often good enough: approximate the optical center by the center of the image, approximate the focal length by the image width in pixels, and assume that there is no radial distortion.
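The approximation described above can be sketched as a small helper. The function name `approximate_camera_matrix` and the image size are assumptions made for illustration; the resulting matrix has the same layout as the camera matrix built in the full example later in this article.

```python
def approximate_camera_matrix(image_width, image_height):
    """Rough intrinsics: focal length ~ image width (pixels), optical center ~ image center."""
    focal_length = float(image_width)
    cx, cy = image_width / 2.0, image_height / 2.0
    return [
        [focal_length, 0.0, cx],
        [0.0, focal_length, cy],
        [0.0, 0.0, 1.0],
    ]

K = approximate_camera_matrix(1200, 675)  # hypothetical image size
dist_coeffs = [0.0, 0.0, 0.0, 0.0]        # assume no lens distortion
for row in K:
    print(row)
```

If accuracy matters, these values should be replaced by the output of a proper camera calibration.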

5. Pose Estimation Algorithm

There are several pose estimation algorithms. The first known algorithm dates back to 1841. Here is a brief introduction.

There are three coordinate systems in play. The 3D coordinates of the facial features listed above are in world coordinates. If we know the rotation and translation (i.e., the pose), we can convert a 3D point in world coordinates into a 3D point in camera coordinates. Using the camera's intrinsic parameters (focal length, optical center, etc.), a 3D point in camera coordinates can then be projected onto the image plane (i.e., into the image coordinate system).

In the image above, O is the center of the camera, and the plane shown in the image is the image plane. We find the equation for the projection of 3D points onto the image plane.

For more detail on the projection from 3D to 2D, see the link below.

https://skydance.blog.csdn.net/article/details/124991406

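Suppose we know the location (U, V, W) of a 3D point P in world coordinates. If we also know the rotation $\mathbf{R}$ (a 3×3 matrix) and the translation $\mathbf{t}$ (a 3×1 vector) of the world coordinates with respect to the camera coordinates, we can calculate the location (X, Y, Z) of P in the camera coordinate system using the following equation.

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \mathbf{R} \begin{bmatrix} U \\ V \\ W \end{bmatrix} + \mathbf{t}$$

In expanded form, the above equation looks like this

$$\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} = \begin{bmatrix} r_{00} & r_{01} & r_{02} & t_x \\ r_{10} & r_{11} & r_{12} & t_y \\ r_{20} & r_{21} & r_{22} & t_z \end{bmatrix} \begin{bmatrix} U \\ V \\ W \\ 1 \end{bmatrix}$$

If we knew a sufficient number of point correspondences (i.e., pairs of (X, Y, Z) and (U, V, W)), the above would be a linear system of equations in which the $r_{ij}$ and $(t_x, t_y, t_z)$ are the unknowns, and it could be solved with standard linear algebra.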
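As a small illustration of the world-to-camera step, (X, Y, Z) = R (U, V, W) + t, the sketch below applies a rotation and translation to two of the model points. The pose values and the helper name `world_to_camera` are assumptions chosen only to make the arithmetic easy to follow.

```python
def world_to_camera(R, t, point_world):
    """Apply (X, Y, Z) = R * (U, V, W) + t using plain lists."""
    return tuple(
        sum(R[i][j] * point_world[j] for j in range(3)) + t[i]
        for i in range(3)
    )

# Hypothetical pose: model rotated 180 degrees about the Y axis,
# placed 1000 model units in front of the camera.
R = [[-1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0],
     [0.0, 0.0, -1.0]]
t = (0.0, 0.0, 1000.0)

nose_tip = (0.0, 0.0, 0.0)
chin = (0.0, -330.0, -65.0)
print(world_to_camera(R, t, nose_tip))  # the model origin lands exactly at t
print(world_to_camera(R, t, chin))
```

Because the nose tip is the origin of the model frame, its camera coordinates are simply the translation vector.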

We know many points on the 3D model (i.e., (U, V, W)), but we do not know (X, Y, Z). We only know the location of each point in 2D (i.e., (x, y)). In the absence of radial distortion, the image coordinates (x, y) of the point p are given by

$$\begin{bmatrix} x \\ y \\ 1 \end{bmatrix} = s \begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}$$

where $f_x$ and $f_y$ are the focal lengths in the x and y directions, and $(c_x, c_y)$ is the optical center. Things get slightly more complicated when radial distortion is involved, so I'll leave that out for now.

What about the s in the equation? It is an unknown scale factor. It appears in the equation because a single image tells us nothing about depth. If you join any 3D point P to the center O of the camera, the point p where that ray intersects the image plane is the image of P. Note that all points along the ray joining the camera center and P produce the same image point. In other words, the equation above determines (X, Y, Z) only up to the scale s.
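The scale ambiguity is easy to check numerically. The sketch below projects a camera-frame point with a pinhole model and no distortion, then projects a second point twice as far along the same ray. The intrinsics and point values are hypothetical, and `project` is an illustrative helper rather than an OpenCV function.

```python
def project(K, point_camera):
    """Project a camera-frame point (X, Y, Z) to pixel coordinates (x, y)."""
    X, Y, Z = point_camera
    fx, fy = K[0][0], K[1][1]
    cx, cy = K[0][2], K[1][2]
    # Dividing by Z is where the unknown scale drops out (s = 1 / Z).
    return (fx * X / Z + cx, fy * Y / Z + cy)

K = [[800.0, 0.0, 320.0],
     [0.0, 800.0, 240.0],
     [0.0, 0.0, 1.0]]  # hypothetical intrinsics

P = (50.0, -25.0, 1000.0)
P_far = tuple(2.0 * value for value in P)  # another point on the same ray through O

print(project(K, P))
print(project(K, P_far))  # same pixel: depth cannot be recovered from one image point
```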

Equations of the above form can be solved, with a bit of algebraic manipulation, by a method called the Direct Linear Transform (DLT). You can use DLT whenever you encounter a problem in which the equation is almost linear but is off by an unknown scale.

6. Levenberg-Marquardt optimization

The DLT solution mentioned above is not very accurate, for two reasons. First, rotation has three degrees of freedom, but the matrix representation used in the DLT solution has 9 numbers, and nothing in the DLT solution forces the estimated 3×3 matrix to be a rotation matrix. Second, and more importantly, the DLT solution does not minimize the correct objective function. Ideally, we would like to minimize the reprojection error described below.

If we knew the correct pose ($\mathbf{R}$ and $\mathbf{t}$), we could predict where each 3D facial point lands in the image by projecting it onto the image plane. In other words, given $\mathbf{R}$ and $\mathbf{t}$, we can find the image point p for every 3D point P.

We also know the 2D facial landmarks (found with Dlib or by clicking manually). We can look at the distances between the projected 3D points and these 2D landmarks. When the estimated pose is perfect, the 3D points projected onto the image plane line up almost perfectly with the 2D landmarks. When the estimated pose is off, the misalignment is measured by the reprojection error: the sum of squared distances between the projected 3D points and the 2D facial landmarks.
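The reprojection error defined above takes only a few lines to compute. In this sketch the landmark coordinates are the nose tip and chin from the example image used later in this article, while the projected coordinates are made-up values for illustration.

```python
def reprojection_error(projected_points, landmark_points):
    """Sum of squared pixel distances between projected 3D points and detected 2D landmarks."""
    return sum(
        (px - lx) ** 2 + (py - ly) ** 2
        for (px, py), (lx, ly) in zip(projected_points, landmark_points)
    )

landmarks = [(359.0, 391.0), (399.0, 561.0)]  # nose tip and chin in the example image
projected = [(360.0, 389.0), (402.0, 565.0)]  # hypothetical projections for some pose guess
print(reprojection_error(projected, landmarks))
```

A perfect pose estimate would drive this value toward zero, limited only by landmark noise and the mismatch between the generic 3D model and the real face.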

As mentioned earlier, an approximate estimate of the pose ($\mathbf{R}$ and $\mathbf{t}$) can be found using the DLT solution. A naive way to improve on it is to randomly perturb the pose ($\mathbf{R}$ and $\mathbf{t}$) slightly and check whether the reprojection error decreases. If it does, we accept the new estimate, and we keep perturbing and checking over and over again. This procedure works, but it is very slow. It turns out that there are principled ways to change $\mathbf{R}$ and $\mathbf{t}$ iteratively so that the reprojection error decreases; Levenberg-Marquardt optimization is one such method.
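The naive "perturb and keep if better" idea can be sketched as follows. This is not Levenberg-Marquardt and not what OpenCV does internally; it only illustrates the slow baseline described above. The intrinsics, the synthetic ground-truth pose, the starting guess, and the perturbation sizes are all assumptions.

```python
import math
import random

def rodrigues(rvec):
    """Axis-angle vector -> 3x3 rotation matrix (Rodrigues' formula)."""
    theta = math.sqrt(sum(v * v for v in rvec))
    if theta < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (v / theta for v in rvec)
    c, s, v = math.cos(theta), math.sin(theta), 1.0 - math.cos(theta)
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def project(pose, model_points, focal, center):
    """Project model points with pose = [rx, ry, rz, tx, ty, tz]; None if a point is behind the camera."""
    R, t = rodrigues(pose[:3]), pose[3:]
    projected = []
    for point in model_points:
        X, Y, Z = (sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))
        if Z <= 1e-9:
            return None
        projected.append((focal * X / Z + center[0], focal * Y / Z + center[1]))
    return projected

def reprojection_error(pose, model_points, image_points, focal, center):
    """Sum of squared pixel distances; infinite if the pose puts a point behind the camera."""
    projected = project(pose, model_points, focal, center)
    if projected is None:
        return float("inf")
    return sum((px - ix) ** 2 + (py - iy) ** 2
               for (px, py), (ix, iy) in zip(projected, image_points))

def refine_by_random_search(pose, model_points, image_points, focal, center,
                            iterations=2000, seed=0):
    """Randomly nudge the pose and keep a candidate only if the error decreases."""
    rng = random.Random(seed)
    best = list(pose)
    best_error = reprojection_error(best, model_points, image_points, focal, center)
    for _ in range(iterations):
        # Small nudge: radians for the rotation part, model units for the translation part.
        candidate = [value + rng.gauss(0.0, 0.01 if i < 3 else 5.0)
                     for i, value in enumerate(best)]
        error = reprojection_error(candidate, model_points, image_points, focal, center)
        if error < best_error:
            best, best_error = candidate, error
    return best, best_error

model_points = [(0.0, 0.0, 0.0), (0.0, -330.0, -65.0), (-225.0, 170.0, -135.0),
                (225.0, 170.0, -135.0), (-150.0, -150.0, -125.0), (150.0, -150.0, -125.0)]
focal, center = 1200.0, (600.0, 337.5)              # hypothetical intrinsics
true_pose = [0.1, -0.2, 0.05, 20.0, -10.0, 1500.0]  # synthetic ground truth
image_points = project(true_pose, model_points, focal, center)

start_pose = [0.0, 0.0, 0.0, 0.0, 0.0, 1400.0]      # stand-in for a rough DLT estimate
start_error = reprojection_error(start_pose, model_points, image_points, focal, center)
refined_pose, refined_error = refine_by_random_search(
    start_pose, model_points, image_points, focal, center)
print(start_error, refined_error)
```

Each accepted step costs a full reprojection of all points, and most random candidates are rejected, which is why gradient-based methods are preferred in practice.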

II. The solvePnP function

1. Function prototype

As the OpenCV documentation shows, there is a family of solvePnP functions for pose estimation; only solvePnP itself is covered here.

This function returns the rotation and translation vectors that transform a 3D point expressed in the object coordinate frame into the camera coordinate frame. Several methods are available:

P3P methods (SOLVEPNP_P3P, SOLVEPNP_AP3P): 4 input points are required to return a unique solution.

SOLVEPNP_IPPE: the number of input points must be >= 4 and the object points must be coplanar.

SOLVEPNP_IPPE_SQUARE: a special case suited to marker pose estimation. The number of input points must be exactly 4, and the object points must be defined in a specific order.

For all other flags, the number of input points must be >= 4, and the object points can be in any configuration.
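The point-count rules above can be summarised in a small checker. This is a hypothetical helper, not part of OpenCV: it takes the flag name as a string, encodes the rules as listed here plus the two 3-point exceptions visible in the assertion at the top of the OpenCV source quoted later in this article, and does not check coplanarity or point ordering.

```python
def point_count_ok(method, n_points, use_extrinsic_guess=False):
    """Return True if n_points satisfies the documented requirement for the given flag name."""
    if method in ("SOLVEPNP_P3P", "SOLVEPNP_AP3P", "SOLVEPNP_IPPE_SQUARE"):
        return n_points == 4
    if method == "SOLVEPNP_SQPNP":
        return n_points >= 3
    if method == "SOLVEPNP_ITERATIVE" and use_extrinsic_guess:
        return n_points >= 3
    return n_points >= 4

print(point_count_ok("SOLVEPNP_ITERATIVE", 6))        # the six facial points used in this article
print(point_count_ok("SOLVEPNP_P3P", 6))
print(point_count_ok("SOLVEPNP_ITERATIVE", 3, True))
```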

bool cv::solvePnP (InputArray objectPoints, InputArray imagePoints, InputArray cameraMatrix, InputArray distCoeffs, OutputArray rvec, OutputArray tvec, bool useExtrinsicGuess=false, int flags=SOLVEPNP_ITERATIVE)

2. Detailed explanation of parameters

objectPoints: Array of object points in the object coordinate space, Nx3 1-channel or 1xN/Nx1 3-channel, where N is the number of points. A vector<Point3d> can also be passed here.

imagePoints: Array of corresponding image points, Nx2 1-channel or 1xN/Nx1 2-channel, where N is the number of points. A vector<Point2d> can also be passed here.

cameraMatrix: Input camera intrinsic matrix.

distCoeffs: Input vector of distortion coefficients (k1, k2, p1, p2[, k3[, k4, k5, k6[, s1, s2, s3, s4[, τx, τy]]]]) of 4, 5, 8, 12 or 14 elements. If the vector is NULL/empty, zero distortion coefficients are assumed.

rvec: Output rotation vector (see Rodrigues) that, together with tvec, brings points from the model coordinate system to the camera coordinate system.

tvec: Output translation vector.

useExtrinsicGuess: Parameter used for SOLVEPNP_ITERATIVE. If true (1), the function uses the supplied rvec and tvec values as initial approximations of the rotation and translation vectors, respectively, and refines them further.

flags: Method used to solve the PnP problem; see calib3d_solvePnP_flags.

3. OpenCV source code

1. Source path

opencv\modules\calib3d\src\solvepnp.cpp

    int solvePnPGeneric( InputArray _opoints, InputArray _ipoints,
                         InputArray _cameraMatrix, InputArray _distCoeffs,
                         OutputArrayOfArrays _rvecs, OutputArrayOfArrays _tvecs,
                         bool useExtrinsicGuess, SolvePnPMethod flags,
                         InputArray _rvec, InputArray _tvec,
                         OutputArray reprojectionError) {
        CV_INSTRUMENT_REGION();

        Mat opoints = _opoints.getMat(), ipoints = _ipoints.getMat();
        int npoints = std::max(opoints.checkVector(3, CV_32F), opoints.checkVector(3, CV_64F));
        CV_Assert( ( (npoints >= 4) || (npoints == 3 && flags == SOLVEPNP_ITERATIVE && useExtrinsicGuess)
                    || (npoints >= 3 && flags == SOLVEPNP_SQPNP) )
                  && npoints == std::max(ipoints.checkVector(2, CV_32F), ipoints.checkVector(2, CV_64F)) );

        opoints = opoints.reshape(3, npoints);
        ipoints = ipoints.reshape(2, npoints);

        if( flags != SOLVEPNP_ITERATIVE )
            useExtrinsicGuess = false;

        if (useExtrinsicGuess)
            CV_Assert( !_rvec.empty() && !_tvec.empty() );

        if( useExtrinsicGuess )
        {
            int rtype = _rvec.type(), ttype = _tvec.type();
            Size rsize = _rvec.size(), tsize = _tvec.size();
            CV_Assert( (rtype == CV_32FC1 || rtype == CV_64FC1) &&
                       (ttype == CV_32FC1 || ttype == CV_64FC1) );
            CV_Assert( (rsize == Size(1, 3) || rsize == Size(3, 1)) &&
                       (tsize == Size(1, 3) || tsize == Size(3, 1)) );
        }

        Mat cameraMatrix0 = _cameraMatrix.getMat();
        Mat distCoeffs0 = _distCoeffs.getMat();
        Mat cameraMatrix = Mat_<double>(cameraMatrix0);
        Mat distCoeffs = Mat_<double>(distCoeffs0);

        vector<Mat> vec_rvecs, vec_tvecs;
        if (flags == SOLVEPNP_EPNP || flags == SOLVEPNP_DLS || flags == SOLVEPNP_UPNP)
        {
            if (flags == SOLVEPNP_DLS)
            {
                CV_LOG_DEBUG(NULL, "Broken implementation for SOLVEPNP_DLS. Fallback to EPnP.");
            }
            else if (flags == SOLVEPNP_UPNP)
            {
                CV_LOG_DEBUG(NULL, "Broken implementation for SOLVEPNP_UPNP. Fallback to EPnP.");
            }

            Mat undistortedPoints;
            undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);
            epnp PnP(cameraMatrix, opoints, undistortedPoints);

            Mat rvec, tvec, R;
            PnP.compute_pose(R, tvec);
            Rodrigues(R, rvec);

            vec_rvecs.push_back(rvec);
            vec_tvecs.push_back(tvec);
        }
        else if (flags == SOLVEPNP_P3P || flags == SOLVEPNP_AP3P)
        {
            vector<Mat> rvecs, tvecs;
            solveP3P(opoints, ipoints, _cameraMatrix, _distCoeffs, rvecs, tvecs, flags);
            vec_rvecs.insert(vec_rvecs.end(), rvecs.begin(), rvecs.end());
            vec_tvecs.insert(vec_tvecs.end(), tvecs.begin(), tvecs.end());
        }
        else if (flags == SOLVEPNP_ITERATIVE)
        {
            Mat rvec, tvec;
            if (useExtrinsicGuess)
            {
                rvec = _rvec.getMat();
                tvec = _tvec.getMat();
            }
            else
            {
                rvec.create(3, 1, CV_64FC1);
                tvec.create(3, 1, CV_64FC1);
            }

            CvMat c_objectPoints = cvMat(opoints), c_imagePoints = cvMat(ipoints);
            CvMat c_cameraMatrix = cvMat(cameraMatrix), c_distCoeffs = cvMat(distCoeffs);
            CvMat c_rvec = cvMat(rvec), c_tvec = cvMat(tvec);
            cvFindExtrinsicCameraParams2(&c_objectPoints, &c_imagePoints, &c_cameraMatrix,
                                         (c_distCoeffs.rows && c_distCoeffs.cols) ? &c_distCoeffs : 0,
                                         &c_rvec, &c_tvec, useExtrinsicGuess );

            vec_rvecs.push_back(rvec);
            vec_tvecs.push_back(tvec);
        }
        else if (flags == SOLVEPNP_IPPE)
        {
            CV_DbgAssert(isPlanarObjectPoints(opoints, 1e-3));
            Mat undistortedPoints;
            undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

            IPPE::PoseSolver poseSolver;
            Mat rvec1, tvec1, rvec2, tvec2;
            float reprojErr1, reprojErr2;
            try
            {
                poseSolver.solveGeneric(opoints, undistortedPoints, rvec1, tvec1, reprojErr1, rvec2, tvec2, reprojErr2);

                if (reprojErr1 < reprojErr2)
                {
                    vec_rvecs.push_back(rvec1);
                    vec_tvecs.push_back(tvec1);

                    vec_rvecs.push_back(rvec2);
                    vec_tvecs.push_back(tvec2);
                }
                else
                {
                    vec_rvecs.push_back(rvec2);
                    vec_tvecs.push_back(tvec2);

                    vec_rvecs.push_back(rvec1);
                    vec_tvecs.push_back(tvec1);
                }
            }
            catch (...) {
            }
        }
        else if (flags == SOLVEPNP_IPPE_SQUARE)
        {
            CV_Assert(npoints == 4);

    #if defined _DEBUG || defined CV_STATIC_ANALYSIS
            double Xs[4][3];
            if (opoints.depth() == CV_32F)
            {
                for (int i = 0; i < 4; i++)
                {
                    for (int j = 0; j < 3; j++)
                    {
                        Xs[i][j] = opoints.ptr<Vec3f>(0)[i](j);
                    }
                }
            }
            else
            {
                for (int i = 0; i < 4; i++)
                {
                    for (int j = 0; j < 3; j++)
                    {
                        Xs[i][j] = opoints.ptr<Vec3d>(0)[i](j);
                    }
                }
            }

            const double equalThreshold = 1e-9;
            //Z must be zero
            for (int i = 0; i < 4; i++)
            {
                CV_DbgCheck(Xs[i][2], approxEqual(Xs[i][2], 0, equalThreshold), "Z object point coordinate must be zero!");
            }
            //Y0 == Y1 && Y2 == Y3
            CV_DbgCheck(Xs[0][1], approxEqual(Xs[0][1], Xs[1][1], equalThreshold), "Object points must be: Y0 == Y1!");
            CV_DbgCheck(Xs[2][1], approxEqual(Xs[2][1], Xs[3][1], equalThreshold), "Object points must be: Y2 == Y3!");
            //X0 == X3 && X1 == X2
            CV_DbgCheck(Xs[0][0], approxEqual(Xs[0][0], Xs[3][0], equalThreshold), "Object points must be: X0 == X3!");
            CV_DbgCheck(Xs[1][0], approxEqual(Xs[1][0], Xs[2][0], equalThreshold), "Object points must be: X1 == X2!");
            //X1 == Y1 && X3 == Y3
            CV_DbgCheck(Xs[1][0], approxEqual(Xs[1][0], Xs[1][1], equalThreshold), "Object points must be: X1 == Y1!");
            CV_DbgCheck(Xs[3][0], approxEqual(Xs[3][0], Xs[3][1], equalThreshold), "Object points must be: X3 == Y3!");
    #endif

            Mat undistortedPoints;
            undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

            IPPE::PoseSolver poseSolver;
            Mat rvec1, tvec1, rvec2, tvec2;
            float reprojErr1, reprojErr2;
            try
            {
                poseSolver.solveSquare(opoints, undistortedPoints, rvec1, tvec1, reprojErr1, rvec2, tvec2, reprojErr2);

                if (reprojErr1 < reprojErr2)
                {
                    vec_rvecs.push_back(rvec1);
                    vec_tvecs.push_back(tvec1);

                    vec_rvecs.push_back(rvec2);
                    vec_tvecs.push_back(tvec2);
                }
                else
                {
                    vec_rvecs.push_back(rvec2);
                    vec_tvecs.push_back(tvec2);

                    vec_rvecs.push_back(rvec1);
                    vec_tvecs.push_back(tvec1);
                }
            } catch (...) {
            }
        }
        else if (flags == SOLVEPNP_SQPNP)
        {
            Mat undistortedPoints;
            undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

            sqpnp::PoseSolver solver;
            solver.solve(opoints, undistortedPoints, vec_rvecs, vec_tvecs);
        }
        /*else if (flags == SOLVEPNP_DLS)
        {
            Mat undistortedPoints;
            undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

            dls PnP(opoints, undistortedPoints);

            Mat rvec, tvec, R;
            bool result = PnP.compute_pose(R, tvec);
            if (result)
            {
                Rodrigues(R, rvec);
                vec_rvecs.push_back(rvec);
                vec_tvecs.push_back(tvec);
            }
        }
        else if (flags == SOLVEPNP_UPNP)
        {
            upnp PnP(cameraMatrix, opoints, ipoints);

            Mat rvec, tvec, R;
            PnP.compute_pose(R, tvec);
            Rodrigues(R, rvec);
            vec_rvecs.push_back(rvec);
            vec_tvecs.push_back(tvec);
        }*/
        else
            CV_Error(CV_StsBadArg, "The flags argument must be one of SOLVEPNP_ITERATIVE, SOLVEPNP_P3P, "
                "SOLVEPNP_EPNP, SOLVEPNP_DLS, SOLVEPNP_UPNP, SOLVEPNP_AP3P, SOLVEPNP_IPPE, SOLVEPNP_IPPE_SQUARE or SOLVEPNP_SQPNP");

        CV_Assert(vec_rvecs.size() == vec_tvecs.size());

        int solutions = static_cast<int>(vec_rvecs.size());

        int depthRot = _rvecs.fixedType() ? _rvecs.depth() : CV_64F;
        int depthTrans = _tvecs.fixedType() ? _tvecs.depth() : CV_64F;
        _rvecs.create(solutions, 1, CV_MAKETYPE(depthRot, _rvecs.fixedType() && _rvecs.kind() == _InputArray::STD_VECTOR ? 3 : 1));
        _tvecs.create(solutions, 1, CV_MAKETYPE(depthTrans, _tvecs.fixedType() && _tvecs.kind() == _InputArray::STD_VECTOR ? 3 : 1));

        for (int i = 0; i < solutions; i++)
        {
            Mat rvec0, tvec0;
            if (depthRot == CV_64F)
                rvec0 = vec_rvecs[i];
            else
                vec_rvecs[i].convertTo(rvec0, depthRot);

            if (depthTrans == CV_64F)
                tvec0 = vec_tvecs[i];
            else
                vec_tvecs[i].convertTo(tvec0, depthTrans);

            if (_rvecs.fixedType() && _rvecs.kind() == _InputArray::STD_VECTOR)
            {
                Mat rref = _rvecs.getMat_();

                if (_rvecs.depth() == CV_32F)
                    rref.at<Vec3f>(0,i) = Vec3f(rvec0.at<float>(0,0), rvec0.at<float>(1,0), rvec0.at<float>(2,0));
                else
                    rref.at<Vec3d>(0,i) = Vec3d(rvec0.at<double>(0,0), rvec0.at<double>(1,0), rvec0.at<double>(2,0));
            }
            else
            {
                _rvecs.getMatRef(i) = rvec0;
            }

            if (_tvecs.fixedType() && _tvecs.kind() == _InputArray::STD_VECTOR)
            {
                Mat tref = _tvecs.getMat_();

                if (_tvecs.depth() == CV_32F)
                    tref.at<Vec3f>(0,i) = Vec3f(tvec0.at<float>(0,0), tvec0.at<float>(1,0), tvec0.at<float>(2,0));
                else
                    tref.at<Vec3d>(0,i) = Vec3d(tvec0.at<double>(0,0), tvec0.at<double>(1,0), tvec0.at<double>(2,0));
            }
            else
            {
                _tvecs.getMatRef(i) = tvec0;
            }
        }

        if (reprojectionError.needed())
        {
            int type = (reprojectionError.fixedType() || !reprojectionError.empty())
                    ? reprojectionError.type()
                    : (max(_ipoints.depth(), _opoints.depth()) == CV_64F ? CV_64F : CV_32F);

            reprojectionError.create(solutions, 1, type);
            CV_CheckType(reprojectionError.type(), type == CV_32FC1 || type == CV_64FC1,
                         "Type of reprojectionError must be CV_32FC1 or CV_64FC1!");

            Mat objectPoints, imagePoints;
            if (opoints.depth() == CV_32F)
            {
                opoints.convertTo(objectPoints, CV_64F);
            }
            else
            {
                objectPoints = opoints;
            }
            if (ipoints.depth() == CV_32F)
            {
                ipoints.convertTo(imagePoints, CV_64F);
            }
            else
            {
                imagePoints = ipoints;
            }

            for (size_t i = 0; i < vec_rvecs.size(); i++)
            {
                vector<Point2d> projectedPoints;
                projectPoints(objectPoints, vec_rvecs[i], vec_tvecs[i], cameraMatrix, distCoeffs, projectedPoints);
                double rmse = norm(Mat(projectedPoints, false), imagePoints, NORM_L2) / sqrt(2*projectedPoints.size());

                Mat err = reprojectionError.getMat();
                if (type == CV_32F)
                {
                    err.at<float>(static_cast<int>(i)) = static_cast<float>(rmse);
                }
                else
                {
                    err.at<double>(static_cast<int>(i)) = rmse;
                }
            }
        }

        return solutions;
    }
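The last part of the function reports a per-solution reprojection error as a root-mean-square value: the L2 norm of all coordinate differences divided by sqrt(2N) for N points. A minimal pure-Python sketch of that formula is shown below; the helper name `reprojection_rmse` and the sample points are assumptions for illustration.

```python
import math

def reprojection_rmse(projected_points, image_points):
    """RMS error over all 2N coordinates, mirroring norm(..., NORM_L2) / sqrt(2 * N)."""
    n = len(projected_points)
    squared_sum = sum(
        (px - ix) ** 2 + (py - iy) ** 2
        for (px, py), (ix, iy) in zip(projected_points, image_points)
    )
    return math.sqrt(squared_sum) / math.sqrt(2 * n)

image_points = [(359.0, 391.0), (399.0, 561.0)]
projected = [(360.0, 392.0), (400.0, 562.0)]  # every coordinate is off by exactly one pixel
print(reprojection_rmse(projected, image_points))
```

Unlike the plain sum of squared distances, this value is normalised by the number of coordinates, so it can be compared across solutions with different point counts.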

4. Example

The positions of the facial feature points are hard-coded here. You can use dlib to locate the facial feature points instead and then update image_points accordingly; see the link below.

https://skydance.blog.csdn.net/article/details/107896225

    #include <iostream>
    #include <vector>
    #include <opencv2/opencv.hpp>

    using namespace std;
    using namespace cv;

    int main(int argc, char **argv)
    {
        // Read input image
        cv::Mat im = cv::imread("headPose.jpg");
        if (im.empty())
        {
            cerr << "Could not read headPose.jpg" << endl;
            return 1;
        }

        // 2D image points. If you change the image, you need to change this vector
        std::vector<cv::Point2d> image_points;
        image_points.push_back( cv::Point2d(359, 391) );    // Nose tip
        image_points.push_back( cv::Point2d(399, 561) );    // Chin
        image_points.push_back( cv::Point2d(337, 297) );    // Left eye left corner
        image_points.push_back( cv::Point2d(513, 301) );    // Right eye right corner
        image_points.push_back( cv::Point2d(345, 465) );    // Left mouth corner
        image_points.push_back( cv::Point2d(453, 469) );    // Right mouth corner

        // 3D model points, in the same order as image_points
        std::vector<cv::Point3d> model_points;
        model_points.push_back(cv::Point3d(0.0, 0.0, 0.0));          // Nose tip
        model_points.push_back(cv::Point3d(0.0, -330.0, -65.0));     // Chin
        model_points.push_back(cv::Point3d(-225.0, 170.0, -135.0));  // Left eye left corner
        model_points.push_back(cv::Point3d(225.0, 170.0, -135.0));   // Right eye right corner
        model_points.push_back(cv::Point3d(-150.0, -150.0, -125.0)); // Left mouth corner
        model_points.push_back(cv::Point3d(150.0, -150.0, -125.0));  // Right mouth corner

        // Camera internals
        double focal_length = im.cols; // Approximate focal length by the image width
        cv::Point2d center = cv::Point2d(im.cols / 2.0, im.rows / 2.0);
        cv::Mat camera_matrix = (cv::Mat_<double>(3,3) << focal_length, 0, center.x,
                                                          0, focal_length, center.y,
                                                          0, 0, 1);
        cv::Mat dist_coeffs = cv::Mat::zeros(4, 1, cv::DataType<double>::type); // Assuming no lens distortion

        cout << "Camera Matrix " << endl << camera_matrix << endl;

        // Output rotation and translation
        cv::Mat rotation_vector; // Rotation in axis-angle form
        cv::Mat translation_vector;

        // Solve for pose
        cv::solvePnP(model_points, image_points, camera_matrix, dist_coeffs, rotation_vector, translation_vector);

        // Project a 3D point (0, 0, 1000.0) onto the image plane.
        // We use this to draw a line sticking out of the nose
        vector<Point3d> nose_end_point3D;
        vector<Point2d> nose_end_point2D;
        nose_end_point3D.push_back(Point3d(0, 0, 1000.0));

        projectPoints(nose_end_point3D, rotation_vector, translation_vector, camera_matrix, dist_coeffs, nose_end_point2D);

        // Mark the 2D landmarks
        for (size_t i = 0; i < image_points.size(); i++)
        {
            circle(im, image_points[i], 3, Scalar(0,0,255), -1);
        }

        cv::line(im, image_points[0], nose_end_point2D[0], cv::Scalar(255,0,0), 2);

        cout << "Rotation Vector " << endl << rotation_vector << endl;
        cout << "Translation Vector" << endl << translation_vector << endl;
        cout << nose_end_point2D << endl;

        // Display image.
        cv::imshow("Output", im);
        cv::waitKey(0);

        return 0;
    }

Python version

    #!/usr/bin/env python3

    import cv2
    import numpy as np

    # Read image
    im = cv2.imread("headPose.jpg")
    if im is None:
        raise SystemExit("Could not read headPose.jpg")
    size = im.shape

    # 2D image points. If you change the image, you need to change this array
    image_points = np.array([
        (359, 391),     # Nose tip
        (399, 561),     # Chin
        (337, 297),     # Left eye left corner
        (513, 301),     # Right eye right corner
        (345, 465),     # Left mouth corner
        (453, 469)      # Right mouth corner
    ], dtype="double")

    # 3D model points, in the same order as image_points
    model_points = np.array([
        (0.0, 0.0, 0.0),             # Nose tip
        (0.0, -330.0, -65.0),        # Chin
        (-225.0, 170.0, -135.0),     # Left eye left corner
        (225.0, 170.0, -135.0),      # Right eye right corner
        (-150.0, -150.0, -125.0),    # Left mouth corner
        (150.0, -150.0, -125.0)      # Right mouth corner
    ], dtype="double")

    # Camera internals
    focal_length = size[1]                   # Approximate focal length by the image width
    center = (size[1] / 2, size[0] / 2)
    camera_matrix = np.array(
        [[focal_length, 0, center[0]],
         [0, focal_length, center[1]],
         [0, 0, 1]], dtype="double"
    )

    print("Camera Matrix :\n {0}".format(camera_matrix))

    dist_coeffs = np.zeros((4, 1))  # Assuming no lens distortion
    (success, rotation_vector, translation_vector) = cv2.solvePnP(
        model_points, image_points, camera_matrix, dist_coeffs,
        flags=cv2.SOLVEPNP_ITERATIVE)

    print("Rotation Vector:\n {0}".format(rotation_vector))
    print("Translation Vector:\n {0}".format(translation_vector))

    # Project a 3D point (0, 0, 1000.0) onto the image plane.
    # We use this to draw a line sticking out of the nose
    (nose_end_point2D, jacobian) = cv2.projectPoints(
        np.array([(0.0, 0.0, 1000.0)]), rotation_vector, translation_vector,
        camera_matrix, dist_coeffs)

    # Mark the 2D landmarks
    for p in image_points:
        cv2.circle(im, (int(p[0]), int(p[1])), 3, (0, 0, 255), -1)

    p1 = (int(image_points[0][0]), int(image_points[0][1]))
    p2 = (int(nose_end_point2D[0][0][0]), int(nose_end_point2D[0][0][1]))

    cv2.line(im, p1, p2, (255, 0, 0), 2)

    # Display image
    cv2.imshow("Output", im)
    cv2.waitKey(0)
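To replace the hard-coded image_points with detected landmarks, the six points have to be picked out of the detector's output in the same order as model_points. The sketch below assumes dlib's commonly used 68-point landmark layout with 0-based indices; the index table and the helper name `select_image_points` are assumptions and should be checked against the landmark model you actually use.

```python
# Assumed indices into a 68-point facial landmark layout (0-based).
LANDMARK_INDICES = {
    "nose_tip": 30,
    "chin": 8,
    "left_eye_left_corner": 36,
    "right_eye_right_corner": 45,
    "left_mouth_corner": 48,
    "right_mouth_corner": 54,
}

# Order must match model_points in the examples above.
POINT_ORDER = [
    "nose_tip", "chin", "left_eye_left_corner",
    "right_eye_right_corner", "left_mouth_corner", "right_mouth_corner",
]

def select_image_points(landmarks):
    """Pick the six (x, y) points used for pose estimation from 68 detected landmarks."""
    if len(landmarks) != 68:
        raise ValueError("expected 68 landmarks, got {0}".format(len(landmarks)))
    return [
        (float(landmarks[LANDMARK_INDICES[name]][0]),
         float(landmarks[LANDMARK_INDICES[name]][1]))
        for name in POINT_ORDER
    ]

# Fake landmarks for demonstration only: landmark i sits at (i, 2 * i).
fake_landmarks = [(i, 2 * i) for i in range(68)]
selected = select_image_points(fake_landmarks)
print(selected)
```

The resulting list can be converted with `np.array(selected, dtype="double")` and passed to `cv2.solvePnP` in place of the hard-coded array.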
