Author | Mingming Ruyue, CSDN Blog Expert

Editor in charge | Xia Meng

Published by | CSDN (ID: CSDNnews)

Foreword

Once there was a batch of powerful AI models right in front of me, and I did not cherish them. Only when I saw others control them with ease and put them to great use did I regret it. If heaven gave me one more chance, I would study the experience and techniques diligently and become one of the first people to master driving AI models!

The arrival of ChatGPT shook every industry, and new large models have sprung up one after another at home and abroad. People who are quick to spot opportunities have begun applying AI to their studies and work, and some have even started making money with it.

There are now many AI models on the market: ChatGPT, Claude, Bard, and others abroad, and domestic ones such as Wenxin Yiyan, Tongyi Qianwen, and the Xunfei Xinghuo large model. At this stage, what people lack is not AI tools but experience using them. Without that experience, many people try a large model a few times, find that the answers fall short of their expectations, and give up disappointed, which is a pity. Different models do have different capabilities, but even the same model can produce very different results in different hands. The key is whether you have good prompt-writing skills and good solutions to the common problems that come up when using large models and integrating them into a business.

Although we have entered the AI era and can interact with models in natural language, the bar for writing prompts is still fairly high. AI tools are at a relatively early stage of development: many problems have not been fully solved, and many features are still immature. In my view, the problems most people run into with large models today fall into two main categories:

First, prompts are not written well enough, so the answers are unsatisfactory;

Second, people lack experience using large models and integrating them into a business, so they do not know how to solve many common problems.

If you have not mastered prompt writing, you may run into the following frustrations:

The model's answers are always brief, hollow, and mechanical. What should I do?

The model never outputs its answers in the format I want. What should I do?

The model's answers always fall short of what I want. What should I do?

If you lack skill in using large models, you may run into the following frustrations:

I have optimized my prompt through many versions, but the answers are still unsatisfactory. What should I do?

I want to use ChatGPT at work, but I am worried about data leakage. What should I do?

After many rounds of conversation, the AI seems to have forgotten its task. What should I do?

I ask the AI questions but worry that it is "telling lies". What should I do?

Typing similar prompts over and over is tedious. What should I do?

Paid models limit the number of uses (GPT-4, for example). How can I get the most out of them?

If you lack experience integrating large models into a business, you may run into the following problems:

Treating the large model as omnipotent and trying to implement every feature with it, only to get half the result for double the effort.

Rushing to launch before the model has been properly tuned, leading to poor results and lost users.

Building manual annotations takes too much time.

There are not enough algorithm engineers, so developers train models themselves, and many of their optimizations fall short.

If you have run into any of these problems, this article will help. Below I focus on how to get the answers you want through precise prompt-writing techniques, and how to solve the common problems that arise when using large models and integrating them into a business.

Experience

2.1 Prompt-writing experience

Many people try a large model a few times, fail to get the answers they want, and give up in disappointment. In most cases the cause is a poorly written prompt. The Internet is full of prompt tutorials, but they tend to be either unsystematic or overly complicated. Below, in a down-to-earth way, I cover what makes a good prompt and how to write prompts that work better.

2.1.1 Standards and principles for prompts

In my view, a rough and simple criterion is whether the people around you can easily understand the prompt. If someone has to ask you several follow-up questions before they really understand what you mean, it is not a good prompt.

A good prompt should be clear, specific, focused, and sufficiently detailed. Give the model the key information it needs to answer the question, and tell it clearly and specifically what to do.

2.1.2 A prompt formula

For relatively simple, common tasks, which models are usually good at, it is generally enough to follow the principles above and write the prompt directly.

Example 1:

Please suggest 5 attractive titles based on XXX.

Example 2:

Please help me find the typos in the following paragraph: XXX.

Example 3:

Please give me sample code that implements the strategy design pattern in Java.

For relatively complex or specialized tasks, you can follow this formula: set a role + ask a question + set a goal + give examples + add background + add requirements. It often produces better answers. In practice, not every element is required; combine them flexibly as the situation demands.

Example:

I want you to act as my tour guide (set a role). I plan to travel from Qingdao to Hangzhou with a budget of 10,000 yuan, for 2 people and 3 days. Please give me a travel plan (ask a question and set a goal). Don't make the itinerary too tight. I don't want to visit Internet-famous check-in spots; I would rather see sights with cultural heritage. When recommending a sight, please include its admission price, and don't send us to restaurants that are too high-end (additional requirements).
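The formula is mechanical enough that a small helper can assemble it. The sketch below is a hypothetical `build_prompt` function (not from any tool mentioned in this article); it skips any element you leave empty, which matches the advice that not every element is required. The order it uses (role, background, question, goal, examples, requirements) is an assumption chosen to read naturally, as in the tour guide example.

```python
def build_prompt(role="", question="", goal="", examples=None,
                 background="", requirements=None):
    """Assemble a prompt from the formula's elements; empty elements are skipped."""
    parts = []
    if role:
        parts.append(f"I want you to act as {role}.")
    if background:
        parts.append(background)
    if question:
        parts.append(question)
    if goal:
        parts.append(goal)
    if examples:
        parts.append("Examples:\n" + "\n".join(f"- {e}" for e in examples))
    if requirements:
        # Numbered requirements are easier for the model to follow one by one
        numbered = (f"{i}. {r}" for i, r in enumerate(requirements, start=1))
        parts.append("Requirements:\n" + "\n".join(numbered))
    return "\n\n".join(parts)


prompt = build_prompt(
    role="my tour guide",
    background="I plan to travel from Qingdao to Hangzhou: budget 10,000 yuan, 2 people, 3 days.",
    question="Please give me a travel plan.",
    requirements=[
        "Don't make the itinerary too tight.",
        "Prefer sights with cultural heritage over Internet-famous check-in spots.",
        "Include the admission price of each sight.",
        "Avoid restaurants that are too high-end.",
    ],
)
print(prompt)
```

Keeping the elements as separate arguments makes it easy to reuse the same role and requirements while swapping in a new question.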

2.1.3 Prompt techniques

There are many prompt techniques. Below are some very useful ones I have tried in practice. For more advanced techniques, search online for further study.

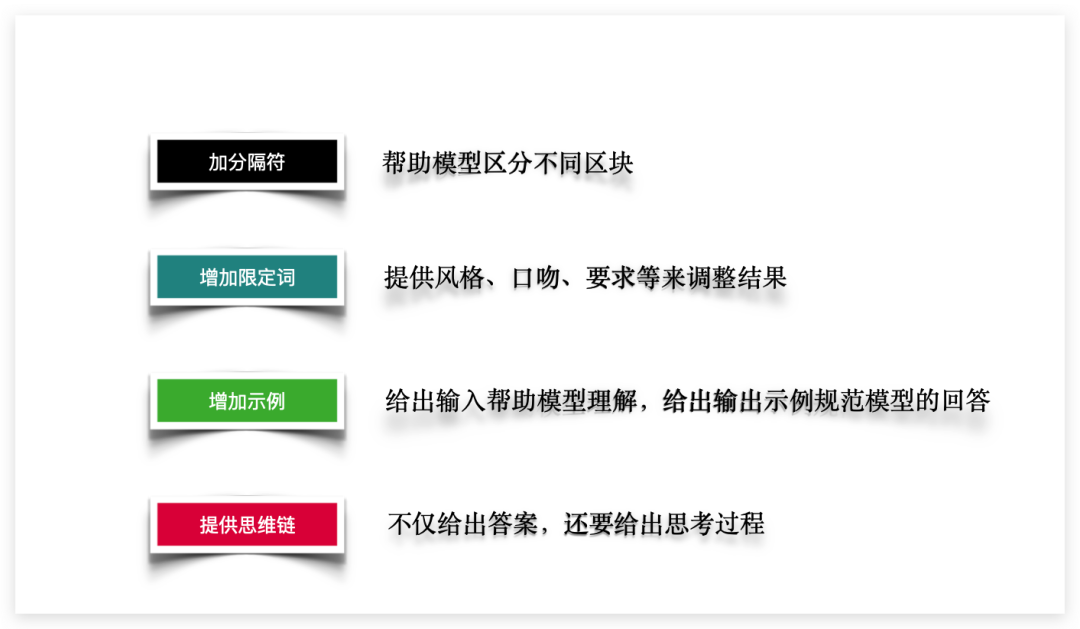

Use delimiters to help the model tell blocks apart

If a prompt contains several parts, delimiters separate them more clearly, for example, three backticks between the instruction and the text to be processed. The example below uses triple quotes and ### as delimiters.

Example:

Follow the steps below:

1. Summarize the text delimited by triple quotes in one sentence.

2. Translate the summary into English.

3. Count the occurrences of each letter in the English translation.

4. Output the result in the format of the text delimited by ###.

"""{text}"""

###{"a":1,"b":2}###
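If you send this kind of prompt from a script, the delimiters are easy to generate consistently. A minimal sketch follows; `delimited_prompt` and `STEPS` are hypothetical names. It also replaces any triple quotes inside the user's text so the text cannot close its own block early, an extra precaution that is not part of the original example.

```python
import json

STEPS = [
    "Summarize the text delimited by triple quotes in one sentence.",
    "Translate the summary into English.",
    "Count the occurrences of each letter in the English translation.",
    "Output the result in the format of the text delimited by ###.",
]


def delimited_prompt(text, example_output):
    """Numbered instructions first, then each data block wrapped in its own delimiter."""
    steps = "\n".join(f"{i}. {s}" for i, s in enumerate(STEPS, start=1))
    # Stop the text from ending the triple-quoted block prematurely
    safe_text = text.replace('"""', "'''")
    # Compact JSON, matching the ###{"a":1,"b":2}### format sample
    fmt = json.dumps(example_output, separators=(",", ":"))
    return f'Follow the steps below:\n{steps}\n\n"""{safe_text}"""\n\n###{fmt}###'


print(delimited_prompt("Large models are changing how we work.", {"a": 1, "b": 2}))
```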

Constrain the model's output with qualifiers

If the model's output style does not meet your requirements, you can steer it toward your intent by setting the tone, describing the target audience, and so on. If the model produces bad cases, you can intervene with strong modal words such as "must", "must not", "never", "not allowed", and "should". A friend of mine complained that AI-written content had a "machine flavor"; after he optimized his prompts by setting the tone and adding requirements, he was very satisfied with the results.

Example 1:

You are a well-known children's author. In a friendly tone, please write a story for kindergarten children that shows the importance of family affection.

Requirements:

1. The story must involve at least two animals.

2. The story should be imaginative.

3. The story must be positive, with no bloody or violent content.

4. ...

Explanation: The prompt gives the model a tone, a target audience, and specific requirements, which makes it easier to get a story you are satisfied with. Because of the "must not" constraint, the model deliberately avoids such content when writing the story.

Example 2:

Please use PlantUML syntax to generate a sequence diagram for me.

The participants are A, B, and C. The sequence is as follows: XXX

Explanation: If you do not specify the participants, the ones the model extracts may differ from what you have in mind. Giving the participants to the model directly in the prompt makes it easier to get a sequence diagram you are satisfied with.

Example 3:

Follow the steps below:

1. Summarize the text delimited by triple quotes in one sentence.

2. Translate the summary into English.

3. Count the occurrences of each letter in the English translation.

4. Output the result in the format of the text delimited by ###.

5. Do not output the intermediate steps; output only the final result in the format of the text delimited by ### (be sure not to output the leading and trailing # delimiters).

"""{text}"""

###{"a":1,"b":2}###

Explanation: Without the instruction "do not output the leading and trailing # delimiters", some models wrap the result in ### on both ends; adding this constraint solves the problem neatly.
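Even with the constraint, it can be worth parsing the reply defensively when a program consumes it. The sketch below uses hypothetical helpers: `parse_model_output` tolerates stray `#` wrappers around the JSON, and `letter_counts` computes the expected counts locally, which is one cheap way to spot-check the model's counting on a sentence you already know.

```python
import json
from collections import Counter


def parse_model_output(raw):
    """Strip any leading/trailing # the model added, then parse the JSON."""
    return json.loads(raw.strip().strip("#").strip())


def letter_counts(english):
    """Count letters case-insensitively, ignoring spaces and punctuation."""
    return dict(Counter(c for c in english.lower() if c.isalpha()))


reply = '###{"a":2,"b":1}###'  # a reply that ignored the "no delimiters" instruction
print(parse_model_output(reply) == letter_counts("Ab a!"))
```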

Provide reference examples so the model better understands your intent

Including some examples in the prompt helps the model understand your intent and give answers that better match your requirements.

Example 1:

Please act as a title optimization assistant. I will send you a topic; choose the most suitable of the following principles and give 5 candidate titles.

Good article titles follow three principles:

(1) The rule of numbers. For example, "5 tips for writing articles", "10 things I learned after 5 years on the job", "3 ways to make your resume look great".

(2) State a conclusion or value. For example, "The necessity and methods of refactoring", "Thoughts on software complexity", "Engineers need product thinking too".

(3) Spark curiosity. For example, "The shortest learning path for DDD", "So design patterns can be used like this", "99% of programmers misunderstand string mutability", "The most important skill for programmers isn't writing code".

Topic: XXX

Example 2:

Please write a regular expression for me. Matching rule: digits or underscores followed by #some.com, and the string must not start with an underscore.

Correct examples: 123#some.com, 1_23#some.com

Incorrect examples: _123#some.com, 12ac#some.com
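For reference, here is one regular expression that satisfies the rule as stated, checked against the examples. It is a sketch under the assumption that "#some.com" is a literal suffix and that the part before it must be non-empty; the name `is_valid` is hypothetical.

```python
import re

# First character must be a digit (so no leading underscore);
# the rest of the prefix may be digits or underscores.
PATTERN = re.compile(r"[0-9][0-9_]*#some\.com")


def is_valid(s):
    # fullmatch anchors both ends, so "12ac#some.com" is rejected
    return PATTERN.fullmatch(s) is not None


for s in ["123#some.com", "1_23#some.com", "_123#some.com", "12ac#some.com"]:
    print(s, is_valid(s))
```

Giving the model both correct and incorrect examples, as above, also makes it easy to verify whatever regex it returns in exactly this way.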

Example 3:

You are an editor at a well-known Internet forum. Please proofread a professional blog post for me: point out terminology errors, awkward sentences, and the like, and suggest revisions.

Use the output format in the section delimited by triple quotes:

"""

No. 1

Original: Code review is very important in the software development process, which can help programmers find problems in advance.

Reason: The term used for "code review" is nonstandard and should be replaced with the standard term.

Revision: Code review is very important in the software development process and can help programmers find problems early.

No. 2

Original: Create a new project with the command dune init project my_compiler.

Reason: The sentence does not read smoothly. Add "to" to link the action and its purpose.

Revision: Use the command dune init project my_compiler to create a new project.

"""

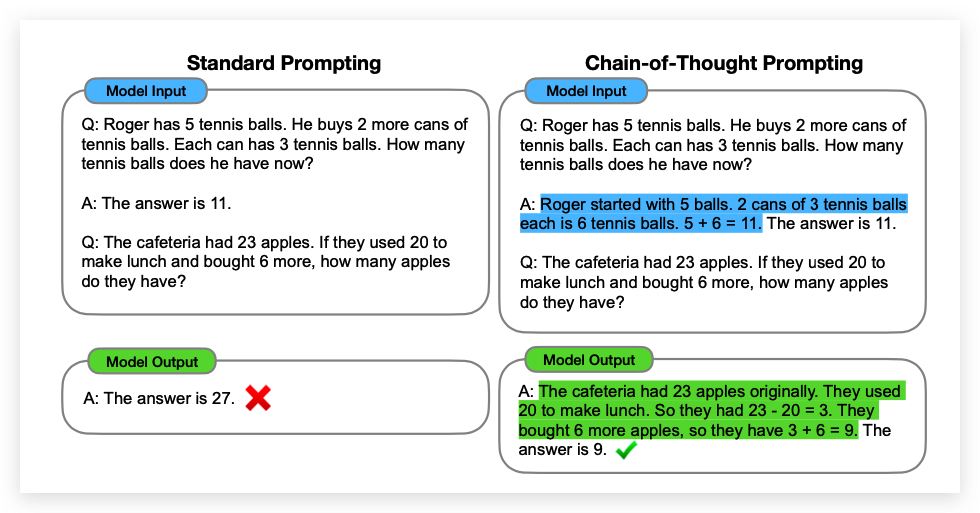

Chain-of-Thought (CoT)

When people solve a complex problem, they usually break it into intermediate steps, solve them one by one, and finally arrive at the answer. Chain-of-thought prompting borrows this human approach: each example in the prompt consists of an input question, a chain of reasoning, and an output conclusion. Letting the model learn this reasoning process improves the accuracy of large models on complex reasoning tasks.

(Image source: "Chain-of-Thought Prompting Elicits Reasoning in Large Language Models" paper)
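A few-shot chain-of-thought prompt can be built as plain text: a worked exemplar (question, reasoning, conclusion) followed by the new question. The sketch below uses the well-known tennis-ball exemplar from the cited paper; `cot_prompt` and `extract_answer` are hypothetical helpers, and the "The answer is N" convention only works because the exemplar models that phrasing.

```python
import re

# One worked exemplar: question -> chain of reasoning -> conclusion
COT_EXEMPLAR = (
    "Q: Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
    "Each can has 3 tennis balls. How many tennis balls does he have now?\n"
    "A: Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
    "5 + 6 = 11. The answer is 11."
)


def cot_prompt(question):
    """Exemplar first, then the new question with an open 'A:' for the model."""
    return f"{COT_EXEMPLAR}\n\nQ: {question}\nA:"


def extract_answer(completion):
    """Pull the final number out of 'The answer is N.'; None if absent."""
    m = re.search(r"The answer is\s+(-?\d+)", completion)
    return int(m.group(1)) if m else None


print(cot_prompt("A shelf holds 4 boxes with 6 books each. 5 books are removed. How many remain?"))
```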

2.2 Common problems and solutions during model use

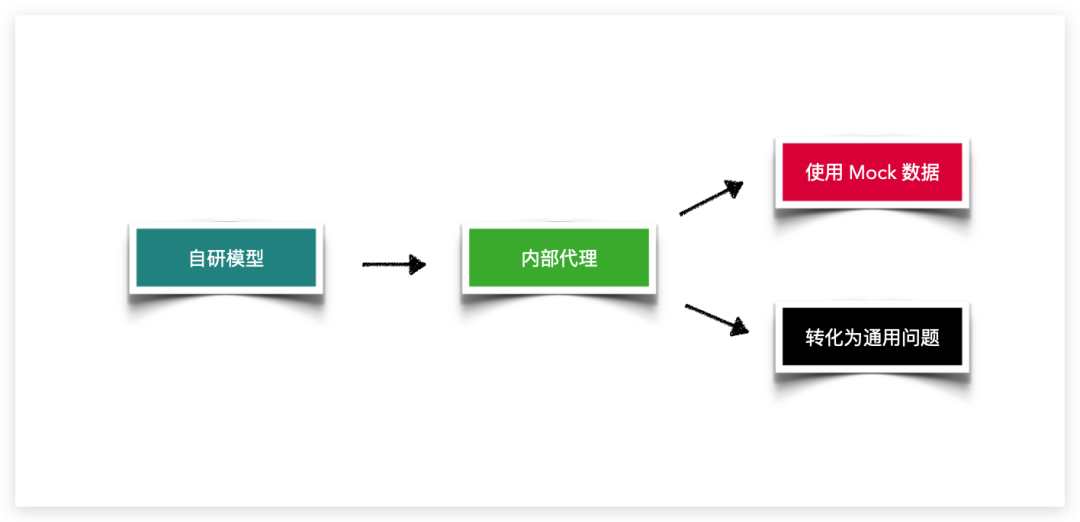

2.2.1 Wanting to use an external large model but worrying about business data leakage

Many people want to use AI tools at work but worry about leaking business data.

If you work at a large company, consider the compliant model your company has developed in-house, or foreign AI models accessed through a company proxy (with security filtering). If you want to try foreign large models such as ChatGPT and Bard directly, you can desensitize the data first, construct mock data, or rephrase your problem as a general question, and then bring the reliable solution or code you get back into the company.
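Desensitizing data before pasting it into an external model can be partly automated. The sketch below is a minimal regex-based masker; the three rules (emails, 11-digit mainland China mobile numbers, IPv4 addresses) are illustrative assumptions, not a complete policy, and a real team would tailor them to its own data and compliance rules.

```python
import re

# Hypothetical masking rules: (pattern, placeholder). Order matters:
# emails are masked first so their parts are not caught by later rules.
RULES = [
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "<EMAIL>"),
    (re.compile(r"\b1\d{10}\b"), "<PHONE>"),  # 11-digit mobile numbers
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
]


def desensitize(text):
    """Replace sensitive substrings with placeholders before sending text out."""
    for pattern, placeholder in RULES:
        text = pattern.sub(placeholder, text)
    return text


print(desensitize("Mail a.b@corp.com or call 13800138000 from 10.0.0.1"))
```

Regex masking only catches what the rules describe; business secrets in free text still need the manual rewording described above.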

2.2.2 Customizing prompts to ask AI models questions more efficiently

AI itself is now very smart, but AI products are not. The official ChatGPT chat page has only one input box, which seems to follow the "simple is better" philosophy. But simpler is not always better: using ChatGPT often means typing similar prompts again and again, which is extremely inefficient.

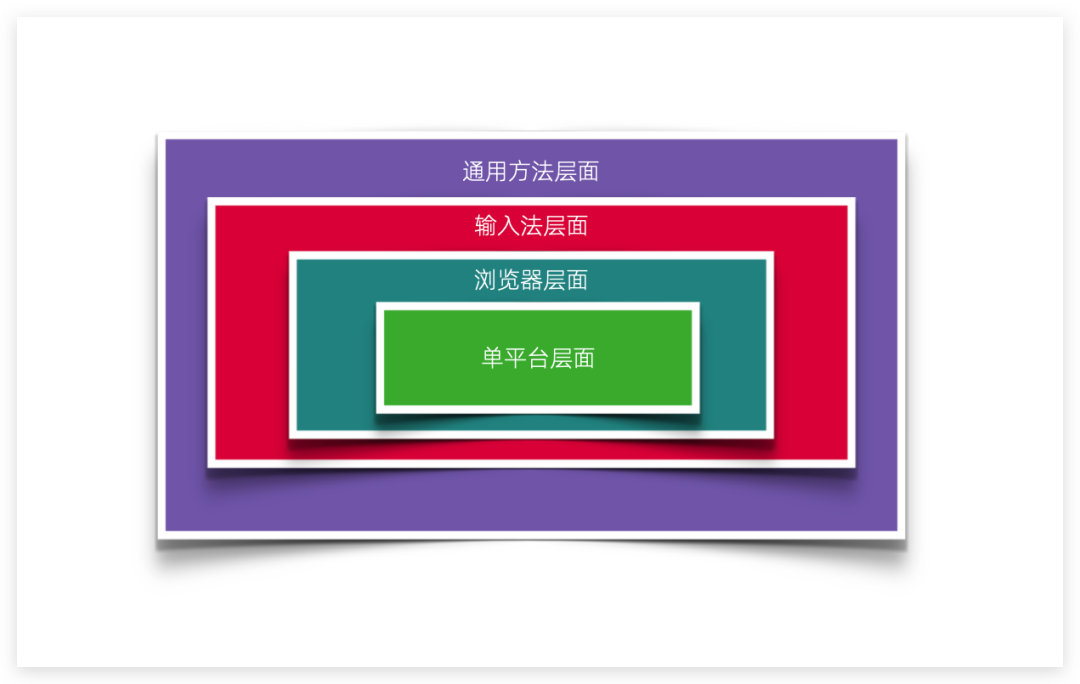

Below I briefly explain how to cut down on typing by predefining prompts, at four levels.

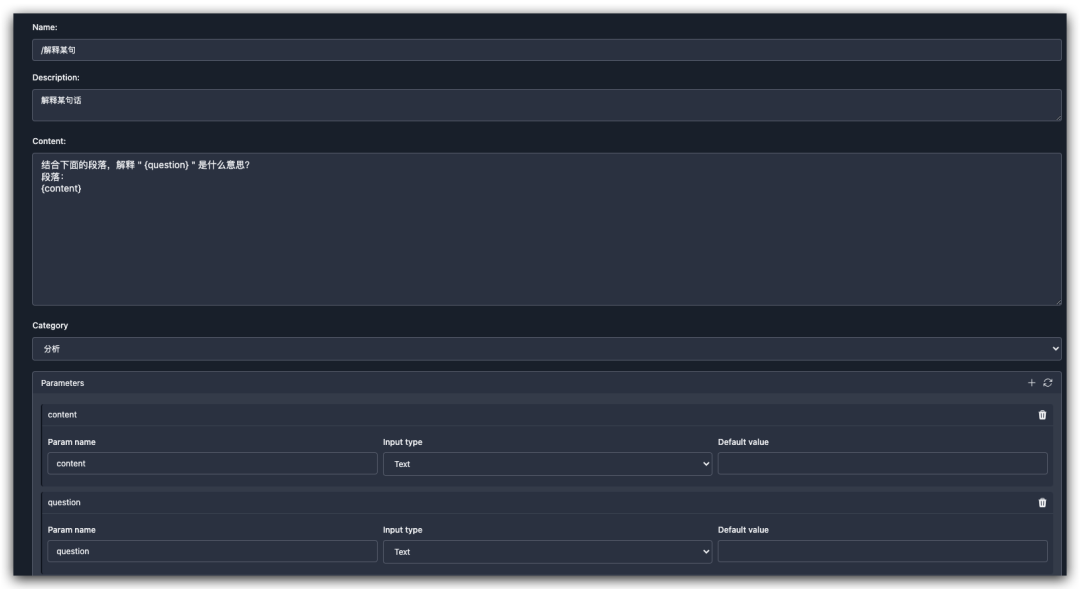

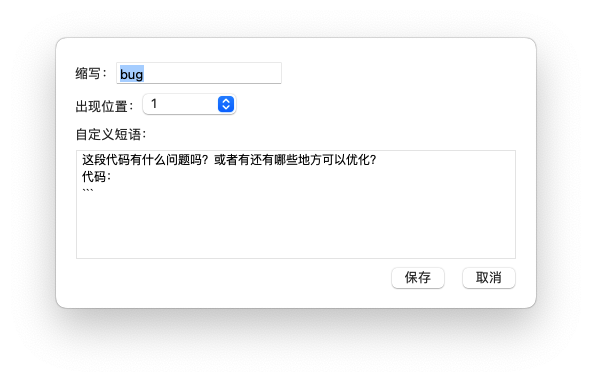

At the single-platform level, if you use the official ChatGPT website, I recommend the ChatGPT Prompt Plus plug-in. It supports custom prompts that can be called up quickly, variables inside prompts, and prompt groups. When you call up a prompt, you can select or fill in its variables, which makes asking questions very convenient. The image below shows a prompt for "explaining a sentence" saved with this plug-in. The content to be filled in is defined as variables, which you fill in after calling up the prompt.

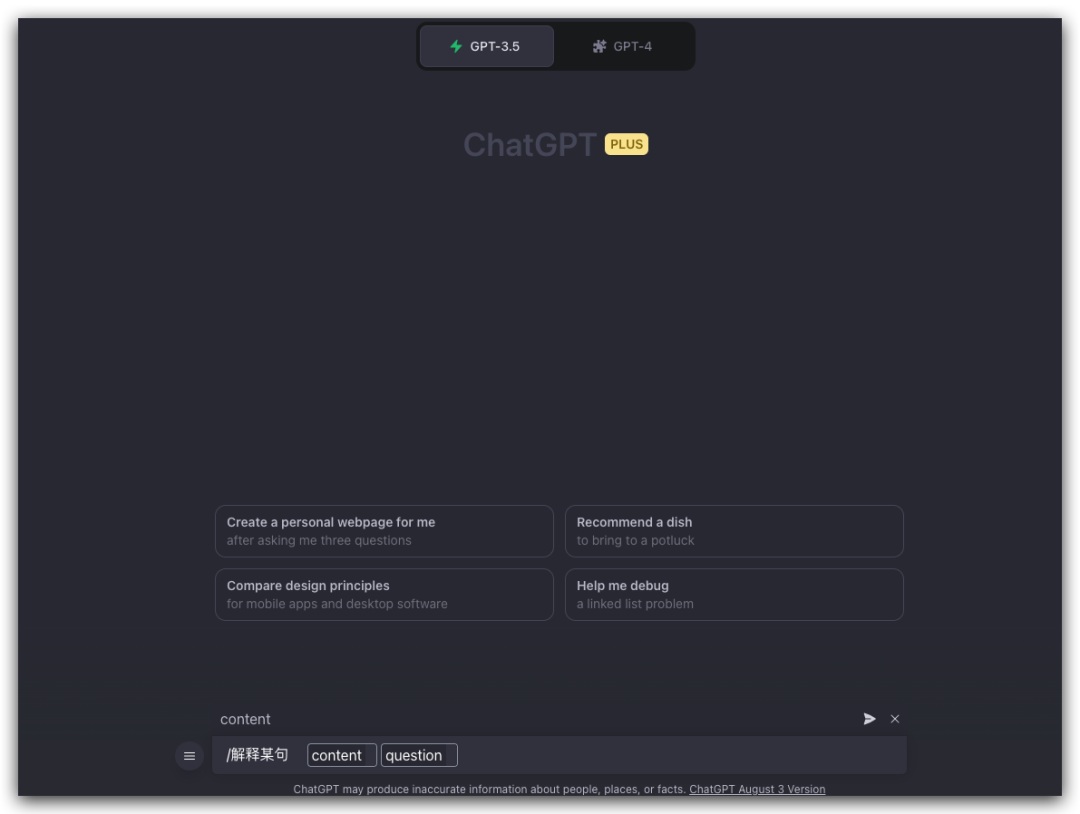

You can call it up directly with "/Explain a sentence" instead of retyping the same prompt every time. Each time you supply only what changes, such as the content and the question here, and the prompt is assembled automatically and sent to ChatGPT.
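The idea behind these saved prompts is a template with named placeholders. The sketch below reproduces it with Python's standard `string.Template`; the `$content`/`$question` syntax and the `TEMPLATES`/`expand` names are assumptions for illustration, and the plug-in's own variable syntax may differ.

```python
from string import Template

# Saved prompts keyed by the command used to call them up
TEMPLATES = {
    "/Explain a sentence": Template(
        'Please explain the following sentence: "$content"\nMy question: $question'
    ),
}


def expand(command, **variables):
    # substitute() raises KeyError if a variable is missing,
    # so a half-filled prompt is never sent
    return TEMPLATES[command].substitute(**variables)


print(expand("/Explain a sentence",
             content="Simple is better",
             question="Is it always true?"))
```

Alfred Snippets and input-method phrases apply the same idea: a fixed template plus a small variable part.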

If you use the Poe platform, you can predefine bots there. The approach is similar; explore it yourself if you are interested.

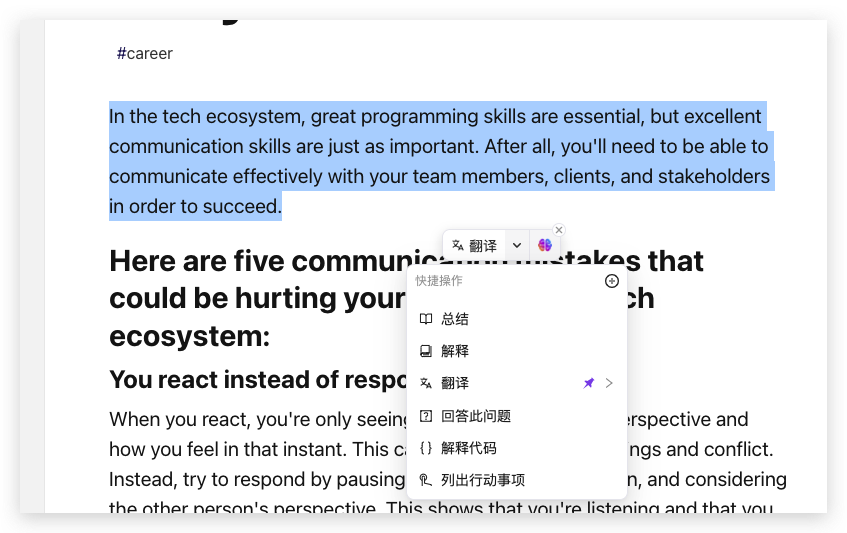

At the browser level, you can install an AI plug-in such as ChatGPT Sidebar. After selecting some content on a page, you can process it directly with a built-in or predefined prompt.

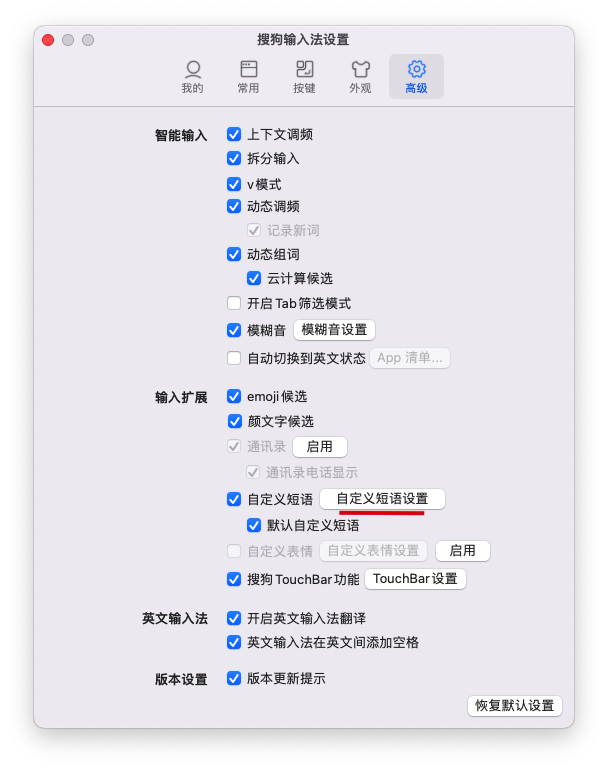

At the input method level, you can predefine prompts as custom phrases, and then fill them in automatically by typing an abbreviation.

At the general-tool level, you can use Alfred's Snippets feature (which can substitute clipboard content for placeholders in a predefined prompt) or the memo feature in uTools to predefine prompts and paste them quickly.

2.2.3 The desired answer cannot be obtained no matter how the prompt is optimized

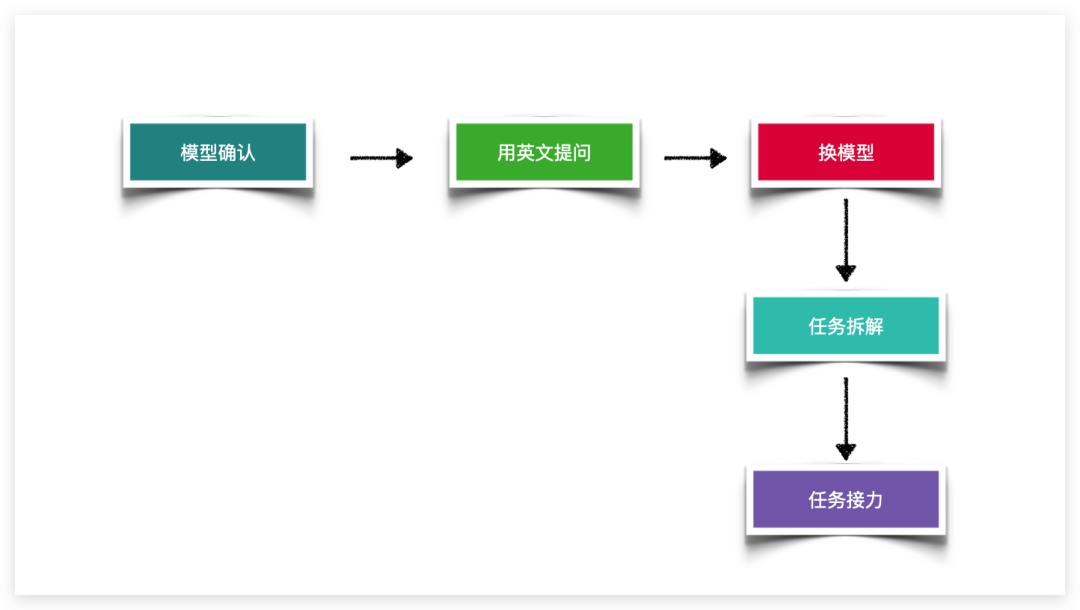

When an AI tool gives an unsatisfactory answer, first check whether your prompt truly meets the principle of being clear, specific, focused, and sufficiently detailed. If you still cannot get the answer you want after optimizing the prompt, try the following methods.

Sometimes you think you have expressed yourself clearly, but the model's understanding differs from what you meant. Try asking the model to restate your task; its restatement reveals any misunderstanding, and you can correct the prompt accordingly.

If the prompt is not very complicated but the model still does not seem to grasp your intent, consider asking in English; sometimes it works wonders. This may be because the model's training corpus contains more English than Chinese, so the model is better at English. It may also be that Chinese questions are matched first against Chinese corpus, whose quality is lower. I once had a technical problem that I asked New Bing about many times in Chinese without getting the answer I wanted; when I switched to English, I got a reliable answer on the first try. If your English is weak, you can use a "matryoshka" approach: ask ChatGPT to translate your prompt into English, and then ask ChatGPT the English version.

If the model understands the task correctly and asking in English does not help, switch to a more advanced model. In my view, different models are like students at different levels: some may be at middle school level, some at high school level, and some at college or even graduate level. Different models also excel at different things. So when your prompt is already well written but the answers are still unsatisfactory, consider switching to a more powerful model. In my practice, GPT-4 clearly answers better than GPT-3.5 on most tasks, and even more powerful models may appear in the future.

If even a more advanced model cannot give a satisfactory answer, the task is probably too complex for current large models, and you can consider decomposing it. Break a complex task down into steps the model can complete more easily, and then have the model complete each step; this often gives better results. For example, if you want the model to write a class, you can split the class into functions and have the model write each function.

If the methods above still fall short, try a task relay. A "task relay" means that after decomposing the task, the AI completes it step by step, or humans and AI complete it together. For example, when writing code, you can have the AI write code in one chat window, have it look for problems in another, and then have an AI tool optimize the code. When writing an article, you can ask the AI to draft an outline for your reference, have it write a first draft that you then revise, or write the article yourself and have the AI polish it. Complex tasks like these work better when each step is broken down to a granularity the AI can handle easily, or when simple, repetitive work goes to the AI and the complex parts it is bad at go to humans.
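The coding relay can be sketched as three calls, each a fresh conversation that sees only what it needs. `relay` and the `Ask` type are hypothetical: each stage is any function that takes a prompt and returns a reply, so a stage can be an AI model or a person.

```python
from typing import Callable

# Any function that sends a prompt to a model (or a human) and returns the reply
Ask = Callable[[str], str]


def relay(task: str, writer: Ask, reviewer: Ask, optimizer: Ask) -> str:
    """Write -> review -> optimize, each stage in its own fresh conversation."""
    draft = writer(f"Write code for the following task:\n{task}")
    issues = reviewer(f"Find problems in the following code:\n{draft}")
    return optimizer(
        "Improve the code below to fix the listed problems.\n"
        f"Code:\n{draft}\nProblems:\n{issues}"
    )
```

Because every stage has the same shape, swapping a model for a human (for example, a function that returns your own edited version) does not change the pipeline.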

2.2.4 Context loss

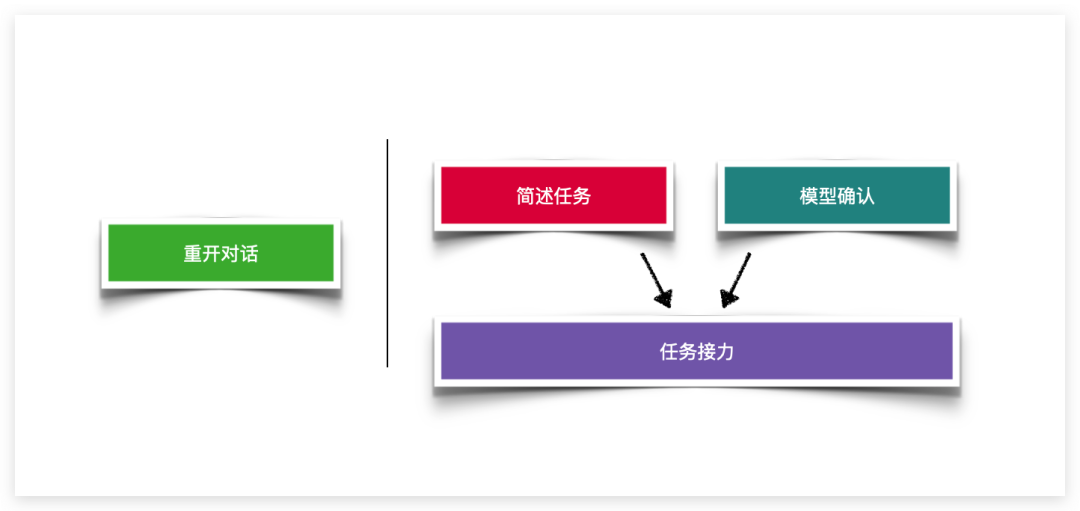

When using AI tools, you may find after many rounds of conversation that the model has forgotten the original task.

The simplest fix is to open a new chat window, restate the question, and continue with the remaining material.

If there are many steps and restarting conversations repeatedly is too troublesome, try the following methods.

As mentioned earlier, asking the model to restate the task helps check whether it really understands the task. In my view, it also helps with context loss. If your task requires many rounds of conversation, try asking the AI what the task is every few rounds; these reminders reduce the chance that it "forgets".

I often use a "task brief" approach in practice. For example, when I need the model to summarize the key points of each paragraph, I state the requirements in the first prompt. Then, from the second round on, I restate the requirement, or a brief version of it, before sending each paragraph. This way, even if the model forgets the original task, it can still complete the task from the brief. For example: "Following my initial request, please continue extracting the key points of the following paragraph and send them to me. Paragraph: XXX".
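When the paragraphs are sent from a script, the brief can be attached automatically. The sketch below builds only the user turns, as role/content dictionaries in the shape many chat APIs use (an assumption; the model's replies that would be interleaved between them are omitted). `build_turns` and `BRIEF` are hypothetical names.

```python
BRIEF = ("Following my initial request, please continue extracting "
         "the key points of the following paragraph.")


def build_turns(task, paragraphs):
    """First turn carries the full task; every later turn repeats a short brief."""
    if not paragraphs:
        return []
    turns = [{"role": "user", "content": f"{task}\n\nParagraph: {paragraphs[0]}"}]
    for p in paragraphs[1:]:
        turns.append({"role": "user", "content": f"{BRIEF} Paragraph: {p}"})
    return turns


for turn in build_turns("Summarize the key points of each paragraph I send.",
                        ["First paragraph...", "Second paragraph..."]):
    print(turn["content"])
```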

If neither restating nor task briefs solve the problem, the task may be too complex for the model. Decompose it further so that each chat window handles only one step or a few steps, with the AI doing some steps and people doing others.

2.2.5 Answer reliability

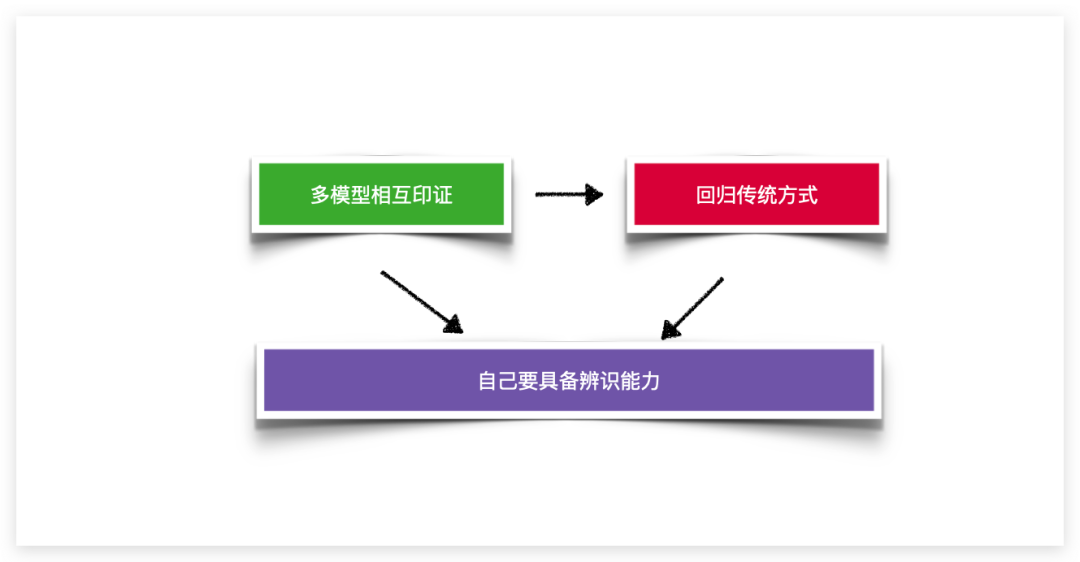

When using large models, many people occasionally get answers that are obviously wrong yet delivered with full confidence, and it is easy to be "bluffed" by the model.

For questions you are unsure about, ask several different models. When their answers corroborate each other, the chance of "nonsense" drops. For content that must be rigorous, treat the AI's answer only as a starting point and combine it with traditional research methods, such as search engines, papers, and other sources.
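For short factual answers, the cross-check can be automated as a simple agreement vote. The sketch below is a hypothetical `cross_check` helper; the model names in the example are placeholders, and the answers are assumed to have already been collected from each model.

```python
from collections import Counter


def cross_check(answers, min_agreement=2):
    """Return the most common normalized answer if enough models agree, else None."""
    normalized = [a.strip().lower() for a in answers.values()]
    if not normalized:
        return None
    best, count = Counter(normalized).most_common(1)[0]
    return best if count >= min_agreement else None


print(cross_check({"model_a": "Paris", "model_b": " paris", "model_c": "Lyon"}))
```

Exact matching only works for short answers; longer explanations still need your own judgment, which is the point of the next paragraph.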

Most important of all is your own ability to judge. Even before the AI era, if you asked someone else to prepare materials for you and lacked the ability to judge them, problems crept in easily. AI can make us more efficient, but now more than ever we need professional expertise and the ability to tell true information from false.

2.2.6 Getting the most value from paid models

Taking ChatGPT as an example, GPT-3.5 is currently free, while GPT-4 requires a Plus subscription and is limited to 50 messages every 3 hours (the limit may be raised, or even removed, in the future). On the Poe platform, ChatGPT and Claude also come in free and paid versions, and the paid versions also have usage limits.

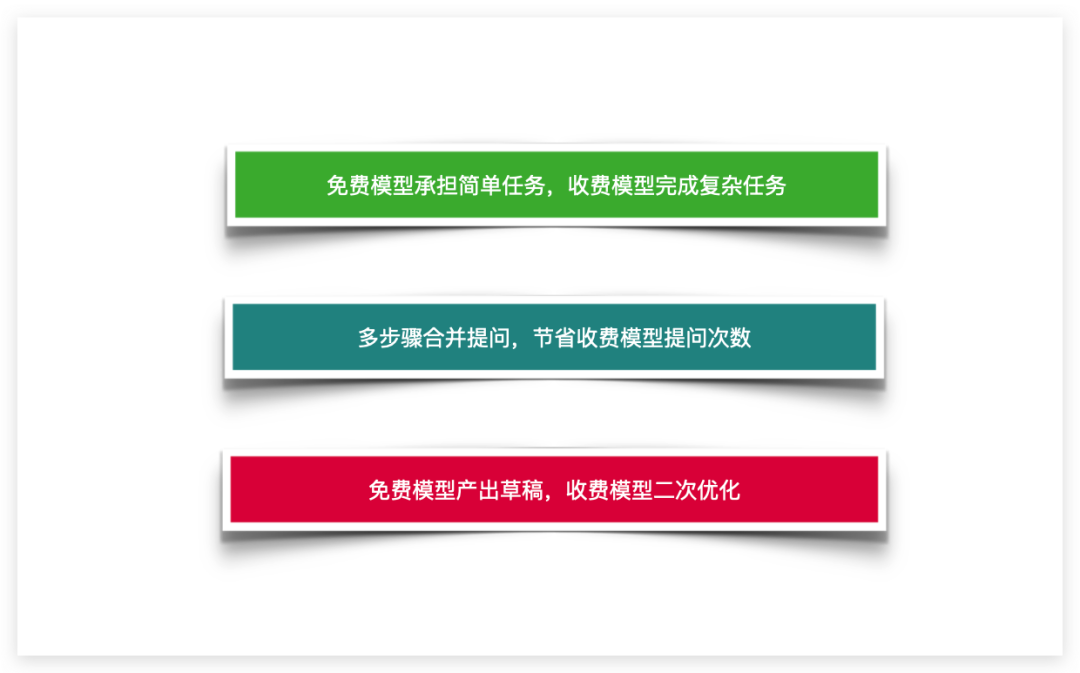

Many simple tasks are handled well by free models, so use those first and reserve paid models for complex tasks.

When using a paid model, combine several steps into one prompt to save paid-model calls.

Another good option is to have a free model produce a draft and then have a paid, more advanced model refine it.
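These three habits can be expressed as a small routing layer. Everything below is hypothetical: `FREE_MODEL` and `PAID_MODEL` are placeholder identifiers rather than real product names, and `ask(model, prompt)` stands in for whatever client you actually use.

```python
FREE_MODEL = "free-model"  # placeholder identifiers, not real model names
PAID_MODEL = "paid-model"


def choose_model(is_complex):
    """Simple tasks go to the free model; complex ones to the paid model."""
    return PAID_MODEL if is_complex else FREE_MODEL


def combine_steps(steps):
    """Merge several steps into one numbered prompt so the paid model is called once."""
    return "Complete the following steps in order:\n" + "\n".join(
        f"{i}. {s}" for i, s in enumerate(steps, start=1))


def draft_then_refine(task, ask):
    """The free model drafts; the paid model spends one call on refinement."""
    draft = ask(FREE_MODEL, task)
    return ask(PAID_MODEL, f"Improve the following draft.\nTask: {task}\nDraft:\n{draft}")
```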

2.2.7 Experience integrating large models into a business

Many companies have started developing their own large models, and many businesses have started integrating them. Here is some of my experience with integrating large models into a business; it can save you some detours.

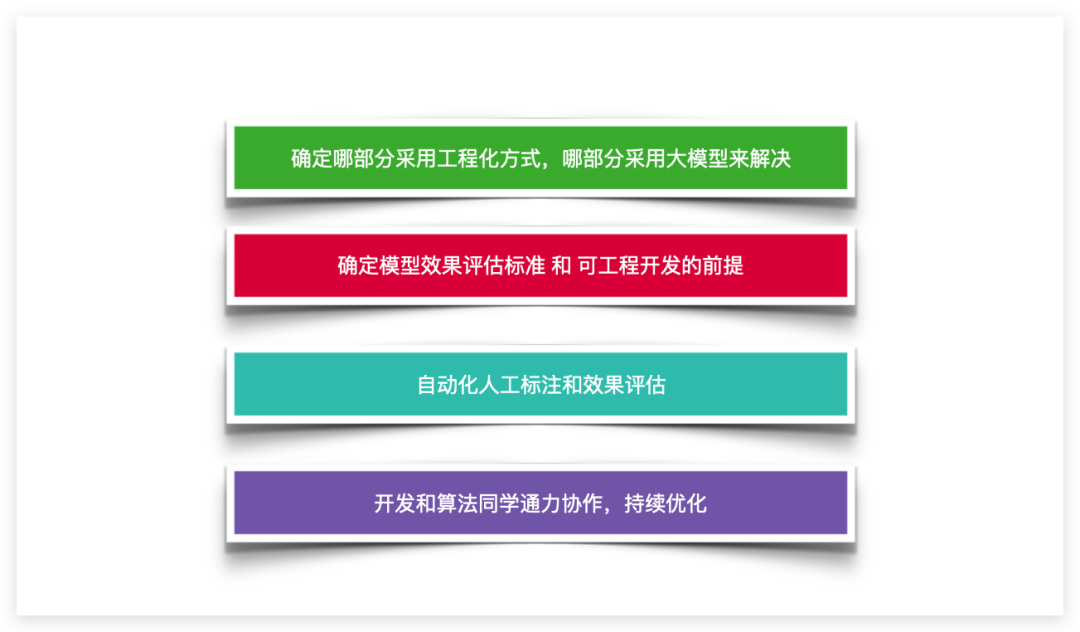

Large models are not a cure-all, and we should not "use AI for the sake of using AI". A car is convenient and fast, but it is not the best choice in every situation. In practice, we found that some tasks are handled better and more cheaply with conventional engineering. So when integrating large models, we need to weigh which tasks suit engineering solutions and which suit large models.

Before formally integrating a model, define the criteria for evaluating its effectiveness, so you can find its shortcomings and optimize accordingly. Also define the prerequisites for starting engineering development, for example, an adoption rate of 50% for generated code, or scores above 80 for generated paragraphs. If engineering development starts too early, the product may fail to meet expectations for a long time after launch, or never meet them at all, resulting in poor usability and a lot of wasted resources.
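Writing the prerequisites down as an explicit check makes the go/no-go decision hard to fudge. A minimal sketch using the example thresholds above; `ready_for_engineering` is a hypothetical name, and averaging paragraph scores (rather than requiring every paragraph to exceed 80) is an assumption you may want to change.

```python
def ready_for_engineering(adopted, generated, paragraph_scores,
                          min_adoption=0.5, min_score=80):
    """Gate engineering work on code adoption rate and average paragraph score."""
    if generated == 0 or not paragraph_scores:
        return False  # no evaluation data yet means not ready
    adoption_rate = adopted / generated
    avg_score = sum(paragraph_scores) / len(paragraph_scores)
    return adoption_rate >= min_adoption and avg_score > min_score


print(ready_for_engineering(adopted=60, generated=100, paragraph_scores=[85, 90]))
```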

Some complex tasks involve fine-tuning the model, large amounts of manual labeling, and model evaluation. To improve efficiency, write your own scripts or use AI models to help automate or semi-automate these processes.

When bringing AI models into a business, developers know the business well but may not know how to optimize models, while algorithm engineers are more specialized but may not know the business well enough. So continuously improving a large model's effectiveness requires developers and algorithm engineers to work together. Moreover, because some companies' large model teams are short of algorithm engineers and many businesses want to launch quickly, some developers have started training large models themselves. Without professional guidance, however, they may pursue unscientific optimization ideas and take many detours. So when you consider adding AI capabilities to improve business efficiency, I recommend investing more algorithm staff and letting algorithm engineers and developers solve problems together.

Summary

Perhaps prompts are just a stopgap in the early stages of large language model development. Compare it to the evolution of cars, from manual transmission to assisted driving to autonomous driving. In the future we may interact with large models in more advanced ways, such as brain waves or thought.

Although ChatGPT is impressive, large language models still have many problems to solve, and we still need to make allowances for them.

In my view, though, this is something to be thankful for: precisely because AI is imperfect, we are less likely to lose our jobs.

At the current stage of AI development, I think the most important thing is to learn prompt writing and master best practices for AI tools. That way, you can be among the first to control large models flexibly, solve problems in life and work better, and gain a competitive advantage early in the AI era. I hope the experience in this article saves you some detours.

What experience do you have with AI tools? What do you think of the current development of large language models? Feel free to share and discuss in the comments.