Understanding nn.CrossEntropyLoss() through examples:

How it applies to PICA:

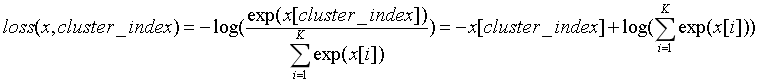

The following can be understood as the loss for one row of the K*K PUI:

Here x is one row of the K*K PUI, cluster_index is the index of the target element in that row, and the denominator is the sum of exp(x[j]) over all elements j of the row.
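Written out, the per-row loss described here can be sketched as below. This is a reconstruction from the description and from the worked examples in these notes, not a formula copied from the PICA paper; $c$ stands in for cluster_index and $K$ is the row length.

```latex
\[
\operatorname{loss}(x, c)
  = -\log\frac{\exp(x[c])}{\sum_{j=0}^{K-1}\exp(x[j])}
  = -x[c] + \log\sum_{j=0}^{K-1}\exp(x[j])
\]
```

The second form is the one used in the hand calculations: the target element enters with a minus sign, and the log of the denominator is the same for every target in a given row.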

CrossEntropyLoss(input, target)

1.

input:

import torch

import torch.nn as nn

entropy = nn.CrossEntropyLoss()

input = torch.tensor([[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562]])

target = torch.tensor([0, 0, 0])

# target = torch.arange(3)

output = entropy(input, target)

print(output)

output : tensor(1.3447)

Each entry of target is the index of the element to select from the corresponding row of input. Here every entry is 0, so the first element of each row is selected.

(1)

-x[0] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.7715 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.3447

(2)

-x[0] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.7715 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.3447

(3)

-x[0] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.7715 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.3447

loss = [(1) + (2) + (3)] / 3 = 1.3447
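The hand calculation above can be checked without PyTorch. The sketch below uses only the standard library; `row_loss` is a hypothetical helper name introduced for illustration, and the mean over rows assumes the default reduction of nn.CrossEntropyLoss.

```python
import math

def row_loss(x, target_index):
    """Hypothetical helper: -x[target] + log(sum_j exp(x[j])) for one row."""
    return -x[target_index] + math.log(sum(math.exp(v) for v in x))

row = [-0.7715, -0.6205, -0.2562]
rows = [row, row, row]   # three identical rows, as in example 1
targets = [0, 0, 0]      # every row selects element 0

per_row = [row_loss(x, t) for x, t in zip(rows, targets)]
loss = sum(per_row) / len(per_row)   # average over the rows
print(round(loss, 4))                # 1.3447
```

Because the three rows and targets are identical, the average equals each individual row loss.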

2.

input:

entropy = nn.CrossEntropyLoss()

input = torch.tensor([[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562]])

target = torch.tensor([1, 1, 1])

# target = torch.arange(3)

output = entropy(input, target)

print(output)

output : tensor(1.1937)

(1)

-x[1] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.6205 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.1937

(2)

-x[1] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.6205 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.1937

(3)

-x[1] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.6205 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.1937

loss = [(1) + (2) + (3)] / 3 = 1.1937
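The same number can also be read as the negative log of a softmax probability, which may make the formula easier to interpret. The following is a standard-library sketch for one row with target index 1; the variable names are illustrative only.

```python
import math

x = [-0.7715, -0.6205, -0.2562]

# Softmax turns the row into probabilities that sum to 1.
exps = [math.exp(v) for v in x]
total = sum(exps)
probs = [e / total for e in exps]

# Cross-entropy for target index 1 is the negative log of that probability.
loss_from_probs = -math.log(probs[1])
# The form used in the hand calculation: -x[1] + log(sum of exponentials).
loss_from_formula = -x[1] + math.log(total)

print(round(loss_from_probs, 4), round(loss_from_formula, 4))   # 1.1937 1.1937
```

Both expressions are algebraically identical, since -log(exp(x[1]) / total) = -x[1] + log(total).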

3.

input:

entropy = nn.CrossEntropyLoss()

input = torch.tensor([[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562]])

target = torch.tensor([2, 2, 2])

# target = torch.arange(3)

output = entropy(input, target)

print(output)

output : tensor(0.8294)

(1)

-x[2] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.2562 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 0.8294

(2)

-x[2] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.2562 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 0.8294

(3)

-x[2] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.2562 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 0.8294

loss = [(1) + (2) + (3)] / 3 = 0.8294
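Across examples 1 to 3 only the target index changes, and the loss is smallest when the target points at the largest element of the row. A short standard-library sketch that lists the loss for every possible target of the same row (names are illustrative):

```python
import math

x = [-0.7715, -0.6205, -0.2562]
log_sum_exp = math.log(sum(math.exp(v) for v in x))   # shared by all targets

# Loss for each possible target index in the same row.
losses = [-x[k] + log_sum_exp for k in range(len(x))]
best = min(range(len(x)), key=lambda k: losses[k])

for k, value in enumerate(losses):
    print(k, round(value, 4))
print("smallest loss at index", best)
```

The log-sum-exp term is constant for the row, so the ordering of the losses is simply the reverse of the ordering of the elements.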

4.

input:

entropy = nn.CrossEntropyLoss()

input = torch.tensor([[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562],

[-0.7715, -0.6205, -0.2562]])

target = torch.tensor([0, 1, 2])  # or: target = torch.arange(3)

output = entropy(input, target)

print(output)

output : tensor(1.1226)

(1)

-x[0] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.7715 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.3447

(2)

-x[1] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.6205 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 1.1937

(3)

-x[2] + log(exp(x[0]) + exp(x[1]) + exp(x[2])) =

0.2562 + log(exp(-0.7715) + exp(-0.6205) + exp(-0.2562)) = 0.8294

loss = [(1) + (2) + (3)] / 3 = 1.1226
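With target = [0, 1, 2], row i selects its own element i, and the three different row losses are averaged. The sketch below reproduces that in plain Python. `log_sum_exp` and `cross_entropy` are hypothetical helper names, and subtracting the row maximum before exponentiating is a common numerical-stability trick added here as an assumption; it does not change the result.

```python
import math

def log_sum_exp(x):
    """log(sum(exp(x))), computed after subtracting max(x) to avoid overflow."""
    m = max(x)
    return m + math.log(sum(math.exp(v - m) for v in x))

def cross_entropy(rows, targets):
    """Hypothetical helper: returns (mean loss, list of per-row losses)."""
    per_row = [-row[t] + log_sum_exp(row) for row, t in zip(rows, targets)]
    return sum(per_row) / len(per_row), per_row

row = [-0.7715, -0.6205, -0.2562]
rows = [row, row, row]
targets = list(range(len(rows)))   # [0, 1, 2], the same indices as torch.arange(3)

loss, per_row = cross_entropy(rows, targets)
print([round(v, 4) for v in per_row], round(loss, 4))
# [1.3447, 1.1937, 0.8294] 1.1226
```

In the K*K setting described at the top of these notes, this arange-style target corresponds to each row being scored against its own diagonal position.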