Table of contents

1. Introduction to BP neural network:

2. Introduction to the principle of genetic algorithm:

3. BP neural network optimized by genetic algorithm:

4. Case analysis:

5. Matlab code for this article:

Summary:

Based on the Matlab platform, this article combines the genetic algorithm (GA) with the BP neural network and uses GA to optimize the main parameters of the BP network. The characteristic factors that affect the output response value are used as the input neurons of the GA-BP neural network model, and the output response value is used as the output neuron for prediction testing. The program has been standardized so that users can easily substitute their own data and implement the functions they need.

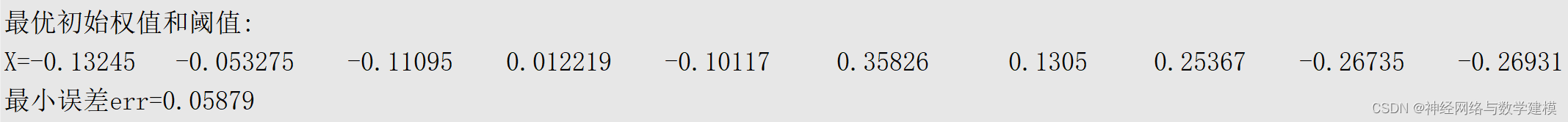

1. Introduction to BP neural network:

A BP neural network is a multi-layer feedforward neural network, generally composed of three layers of neurons (input, hidden, and output). Each layer contains multiple neurons, and the neurons within a layer are independent of one another. Once the three-layer network has been constructed, an input sample is presented to the network: the sample vector is passed from the input layer neurons to the hidden layer units, processed layer by layer, and then passed to the output layer units to obtain the actual output. This layer-by-layer transmission is the forward propagation process.

When the actual output does not match the expected output, an error arises and must be backpropagated. Backpropagation also proceeds layer by layer, modifying the connection weights of each layer along the way. The forward and backward passes are repeated until the set of training patterns is exhausted or the error reaches a minimum and the output meets expectations. By continuously modifying the weights and biases between neurons, the network output can be made to fit the training data within the allowable error range; this fitting process is the training of the neural network. The gradient descent algorithm of the BP network is a relatively fast weight adjustment method: it repeatedly propagates the error backward to adjust the weights and thresholds of the network until the expected goal is reached. Owing to these characteristics, the BP neural network has become one of the most widely used network models.

Figure 1 Basic structure of BP neural network
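The forward and backward passes described above can be illustrated with a minimal sketch. It is written in plain Python rather than Matlab and is not the program of this article. The sigmoid hidden layer, the linear output layer, the learning rate, the toy data (target `x1 + x2`), and all function names are assumptions chosen only for illustration.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def forward(x, W1, b1, W2, b2):
    """Forward propagation: input -> hidden (sigmoid) -> output (linear)."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W2, b2)]
    return h, y

def train_step(x, d, W1, b1, W2, b2, lr=0.1):
    """One backpropagation step on one sample; returns the squared error before the update."""
    h, y = forward(x, W1, b1, W2, b2)
    # Output-layer error terms for the loss E = 0.5 * sum((y - d)^2).
    delta_out = [yk - dk for yk, dk in zip(y, d)]
    # Hidden-layer error terms: propagate back through W2 and the sigmoid derivative.
    delta_hid = [
        hj * (1.0 - hj) * sum(delta_out[k] * W2[k][j] for k in range(len(W2)))
        for j, hj in enumerate(h)
    ]
    # Gradient descent updates of weights and thresholds (biases).
    for k in range(len(W2)):
        for j in range(len(h)):
            W2[k][j] -= lr * delta_out[k] * h[j]
        b2[k] -= lr * delta_out[k]
    for j in range(len(W1)):
        for i in range(len(x)):
            W1[j][i] -= lr * delta_hid[j] * x[i]
        b1[j] -= lr * delta_hid[j]
    return sum(e * e for e in delta_out)

def init_network(n_in, n_hid, n_out, rng):
    """Small random weights and zero thresholds (an arbitrary initialisation)."""
    W1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hid)]
    b1 = [0.0] * n_hid
    W2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hid)] for _ in range(n_out)]
    b2 = [0.0] * n_out
    return W1, b1, W2, b2

rng = random.Random(0)
W1, b1, W2, b2 = init_network(2, 4, 1, rng)
# Toy data, not the article's data set: the target is x1 + x2.
samples = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]),
           ([1.0, 0.0], [1.0]), ([1.0, 1.0], [2.0])]
first_error = sum(train_step(x, d, W1, b1, W2, b2) for x, d in samples)
for _ in range(500):
    last_error = sum(train_step(x, d, W1, b1, W2, b2) for x, d in samples)
print(first_error, last_error)
```

The summed squared error over the four samples should shrink as the passes are repeated, which is the "fitting" behaviour the paragraph describes. The starting weights here are random; replacing that random start is exactly what the GA is used for later in the article.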

2. Introduction to the principle of genetic algorithm:

The genetic algorithm (GA) is an algorithm designed according to Darwin's theory of evolution. The underlying idea is that organisms evolve in a favorable direction: during evolution, good genes are selected and inferior genes are eliminated. Evolving in a favorable direction corresponds to moving toward the optimal solution, and good genes correspond to individuals that satisfy the current conditions and are therefore selected. Biological evolution involves three main mechanisms: selection, crossover, and mutation. Accordingly, the genetic algorithm is built on three main operations (selection, crossover, and mutation) that implement the elimination mechanism, so that the population evolves in a favorable direction under the given conditions and the optimal individual and solution set are finally obtained. A complete algorithm also requires encoding, fitness calculation, and decoding.

The implementation and operation process of the genetic algorithm is shown in Figure 2. First, the initial population is formed through encoding, which is the basis of the genetic algorithm. The algorithm then repeatedly applies selection, crossover, and mutation, followed by selection again. Each genetic operator acts on the individuals in the population with a certain probability, so the transition from the individuals of the initial population to the optimal solution is also random. Note, however, that this randomized operation differs from traditional random search: GA performs an efficient directed search, whereas general random search is undirected at every step.

Figure 2 Basic operation process of genetic algorithm
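The loop of encoding, selection, crossover, and mutation can be sketched as follows. This is an illustrative Python sketch under stated assumptions, not the article's Matlab code. Real-coded chromosomes, roulette-wheel selection, single-point crossover, uniform random-reset mutation, elitism, the fitness transform `1 / (1 + cost)`, and the `sphere` test function are all choices made here for the example.

```python
import random

def roulette_select(population, fitness, rng):
    """Pick one individual with probability proportional to its (positive) fitness."""
    total = sum(fitness)
    pick = rng.uniform(0.0, total)
    running = 0.0
    for individual, f in zip(population, fitness):
        running += f
        if running >= pick:
            return individual
    return population[-1]

def crossover(a, b, rng):
    """Single-point crossover of two real-coded chromosomes."""
    if len(a) < 2:
        return a[:], b[:]
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:], b[:point] + a[point:]

def mutate(chrom, p_mut, low, high, rng):
    """Replace each gene by a fresh random value with probability p_mut."""
    return [rng.uniform(low, high) if rng.random() < p_mut else g for g in chrom]

def run_ga(cost, n_genes, low, high, pop_size=30, generations=60,
           p_cross=0.8, p_mut=0.05, seed=0):
    """Minimise a non-negative cost function; returns the best chromosome found."""
    rng = random.Random(seed)
    population = [[rng.uniform(low, high) for _ in range(n_genes)]
                  for _ in range(pop_size)]
    best = min(population, key=cost)
    for _ in range(generations):
        # Smaller cost -> larger fitness, so the roulette wheel favours good individuals.
        fitness = [1.0 / (1.0 + cost(ind)) for ind in population]
        children = [best[:]]  # elitism (an assumption): keep the best individual unchanged
        while len(children) < pop_size:
            p1 = roulette_select(population, fitness, rng)
            p2 = roulette_select(population, fitness, rng)
            if rng.random() < p_cross:
                c1, c2 = crossover(p1, p2, rng)
            else:
                c1, c2 = p1[:], p2[:]
            children.append(mutate(c1, p_mut, low, high, rng))
            children.append(mutate(c2, p_mut, low, high, rng))
        population = children[:pop_size]
        best = min(population + [best], key=cost)
    return best

def sphere(x):
    """Toy cost function with its minimum of 0 at the origin."""
    return sum(v * v for v in x)

best = run_ga(sphere, n_genes=3, low=-5.0, high=5.0)
print(best, sphere(best))
```

Every operator draws on random numbers, yet the fitness-proportional selection biases the search toward better individuals. That bias is what distinguishes the directed search of GA from an undirected random search.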

3. BP neural network optimized by genetic algorithm:

The specific steps for optimizing the BP neural network with the genetic algorithm are as follows:

(1) Initialize the BP neural network: determine the network structure, the learning rules, and the chromosome length of the genetic algorithm.

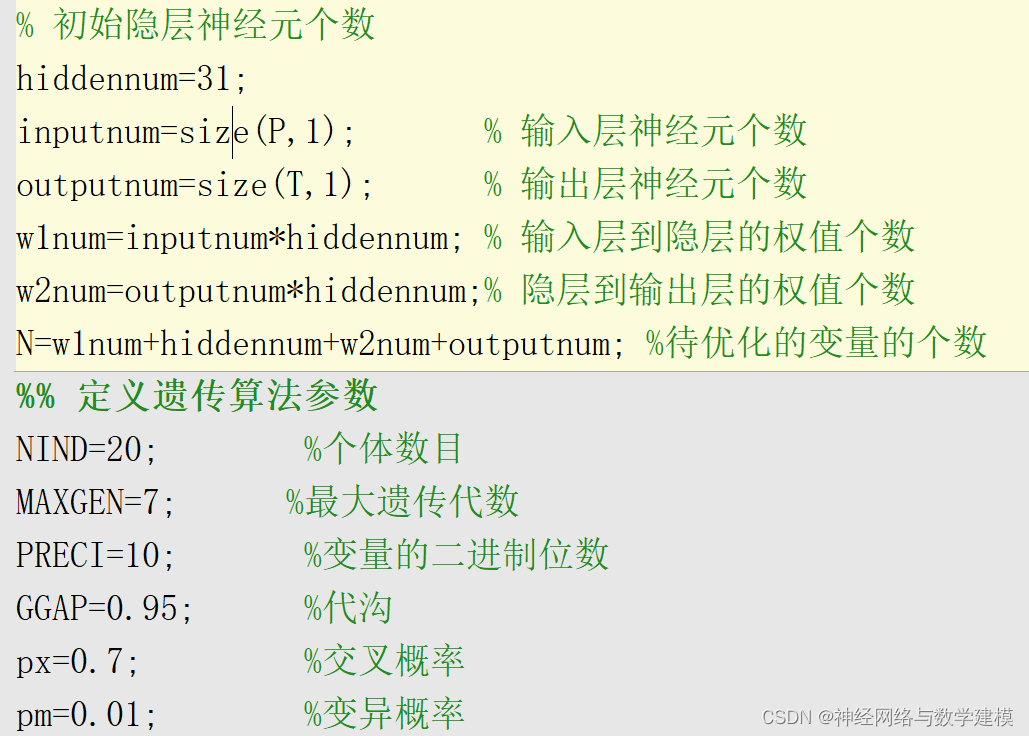

(2) Initialize the parameters of the genetic algorithm (including the number of iterations, population size, crossover probability, and mutation probability), and define the fitness function that the genetic algorithm uses for population selection.

(3) Use the roulette method to select several chromosomes that meet the requirements of the fitness function as the parents of the new population.

(4) Apply the crossover and mutation operations to the parents to generate the new generation of the population.

(5) Check whether the error has reached the required accuracy. If not, repeat steps (3) and (4) so that the chromosomes are continuously updated; once the goal is reached, take the optimal chromosome and assign it to the BP neural network as its initial weights and thresholds.
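Steps (1) and (5) rely on a mapping between a chromosome and the weights and thresholds of the network. The following Python sketch shows one possible mapping, assuming a flat real-coded chromosome that stores every weight and threshold of a three-layer network; it is not taken from the article's Matlab program. The gene ordering, the helper names, the hidden-layer size of 5, and the placeholder sample are hypothetical. The 9 inputs and 3 outputs match the case in section 4.

```python
import math

def chromosome_length(n_in, n_hid, n_out):
    """Number of genes: all weights plus all thresholds of a three-layer network."""
    return n_in * n_hid + n_hid + n_hid * n_out + n_out

def decode(chrom, n_in, n_hid, n_out):
    """Split a flat chromosome into W1, b1, W2, b2 (this ordering is an assumption)."""
    assert len(chrom) == chromosome_length(n_in, n_hid, n_out)
    pos = 0
    W1 = [chrom[pos + j * n_in: pos + (j + 1) * n_in] for j in range(n_hid)]
    pos += n_in * n_hid
    b1 = chrom[pos: pos + n_hid]
    pos += n_hid
    W2 = [chrom[pos + k * n_hid: pos + (k + 1) * n_hid] for k in range(n_out)]
    pos += n_hid * n_out
    b2 = chrom[pos: pos + n_out]
    return W1, b1, W2, b2

def predict(x, W1, b1, W2, b2):
    """Forward pass with a sigmoid hidden layer and a linear output layer."""
    h = [1.0 / (1.0 + math.exp(-(sum(w * xi for w, xi in zip(row, x)) + b)))
         for row, b in zip(W1, b1)]
    return [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W2, b2)]

def sse_fitness(chrom, samples, n_in, n_hid, n_out):
    """Sum of squared errors of the decoded network over all samples (smaller is better)."""
    W1, b1, W2, b2 = decode(chrom, n_in, n_hid, n_out)
    return sum(
        (yk - dk) ** 2
        for x, d in samples
        for yk, dk in zip(predict(x, W1, b1, W2, b2), d)
    )

# 9 inputs and 3 outputs as in the case study; 5 hidden neurons is a made-up value.
n_in, n_hid, n_out = 9, 5, 3
n_genes = chromosome_length(n_in, n_hid, n_out)
zero_chrom = [0.0] * n_genes
toy_samples = [([0.1] * n_in, [1.0, 2.0, 3.0])]  # placeholder sample, not the article's data
toy_error = sse_fitness(zero_chrom, toy_samples, n_in, n_hid, n_out)
print(n_genes, toy_error)
```

With such a mapping, the GA only ever manipulates flat lists of real numbers. The network appears only inside the fitness evaluation, and again at the end, when the best chromosome is decoded into the starting weights and thresholds for BP training.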

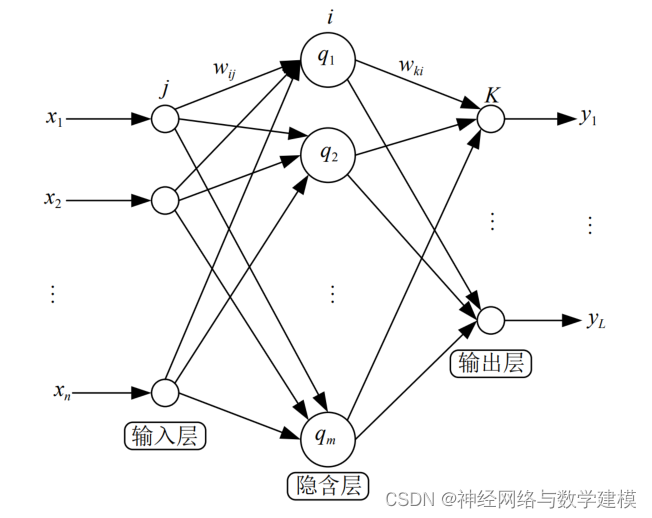

Among the above steps, the key issues are the encoding of the genetic algorithm and the choice of an appropriate fitness function. Real-number encoding is used in the genetic algorithm. The main purpose of the GA optimization is to find the best chromosome, so that the sum of squared errors of the BP neural network reaches its minimum value. The sum of squared errors of the BP network can therefore be chosen as the fitness function of the genetic algorithm. The formula of the fitness function is as follows:
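Written out under assumed notation (the symbols are introduced here for illustration and may differ from the original figure), the sum of squared errors over $N$ training samples and $m$ output neurons, with $d_{kj}$ the expected output and $y_{kj}$ the actual network output, can be expressed as:

$$
F = \sum_{k=1}^{N} \sum_{j=1}^{m} \left( d_{kj} - y_{kj} \right)^{2}
$$

In this form a smaller $F$ indicates a better chromosome, so the GA searches for the chromosome that minimizes $F$.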

4. Case analysis:

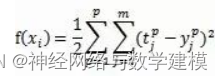

First, import the training data. The training input data contains 15 samples, each sample has 9 eigenvalues (features), and each training sample has 3 outputs. The specific data are as follows:

Set the initial parameters of genetic algorithm and BP neural network:

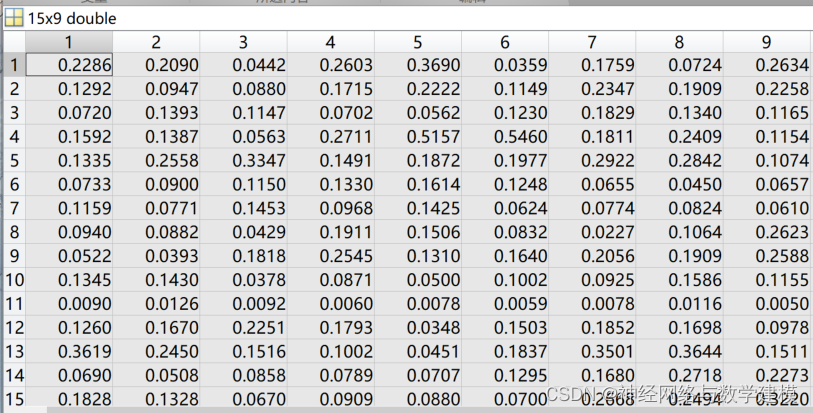

Construct the GA-BP structure and optimize the parameters of the BP network. After the maximum number of cycles is reached, output the final optimized variables, then use the optimized BP neural network to predict the test-set data and evaluate the prediction accuracy. The evolution process is as follows:

The specific prediction results are as follows: