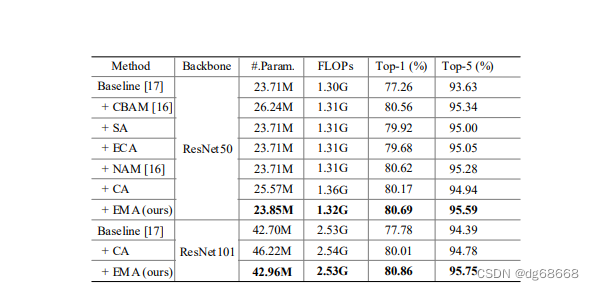

Channel and spatial attention mechanisms have proven remarkably effective at producing more discriminative feature representations across a variety of computer vision tasks. However, modeling cross-channel relationships through channel dimensionality reduction may introduce side effects when extracting deep visual representations. In this paper, a new efficient multi-scale attention (EMA) module is proposed. To preserve the information on each channel while reducing computational overhead, we reshape part of the channels into the batch dimension and group the channel dimension into multiple sub-features, so that spatial semantic features are well distributed within each feature group. Specifically, in addition to encoding global information to recalibrate the channel-wise weights in each parallel branch, the output features of the two parallel branches are further aggregated through cross-dimension interaction to capture pixel-level pairwise relationships. We conduct extensive ablation studies and experiments on image classification and object detection tasks with popular benchmarks (e.g. CIFAR-100, ImageNet-1k, MS COCO and VisDrone2019) to evaluate its performance. Code URL: https://github.com/YOLOonMe/EMAattention-module
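The "reshape part of the channels into the batch dimension" step can be illustrated with a small tensor experiment. This is only a sketch of the grouping idea, not the module itself: the helper name `group_channels_into_batch` is hypothetical, the default of 8 groups mirrors the `factor=8` default in the code further down, and the divisibility check is an added assumption.

```python
import torch


def group_channels_into_batch(x: torch.Tensor, groups: int = 8) -> torch.Tensor:
    """Fold channel groups into the batch axis: (B, C, H, W) -> (B*G, C//G, H, W).

    Hypothetical helper for illustration only; it is not part of the EMA code.
    """
    b, c, h, w = x.shape
    # Assumption: C must divide evenly by the number of groups for the reshape to work.
    assert c // groups > 0 and c % groups == 0, "channels must be divisible by groups"
    return x.reshape(b * groups, c // groups, h, w)


x = torch.randn(2, 64, 16, 16)            # batch of 2, 64 channels
grouped = group_channels_into_batch(x)    # 8 groups of 8 channels each
print(grouped.shape)                      # torch.Size([16, 8, 16, 16])
```

Each row of the enlarged batch holds one group of consecutive channels from one sample, so per-group operations can run as ordinary batched operations, and reshaping back to `(B, C, H, W)` restores the original tensor.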

This article uses YOLOv5 version 7.0.

1. Add the following code to the models/common.py file

import torch
from torch import nn

class EMA(nn.Module):
    def __init__(self, channels, factor=8):
        super(EMA, self).__init__()
        self.groups = factor
        assert channels // self.groups > 0
        self.softmax = nn