1. Knowledge about Ingress

1.1 Introduction to Ingress

A Service plays two roles. Inside the cluster, it tracks Pod changes, keeps the Pod addresses in its Endpoints object up to date, and provides service discovery for Pods whose IP addresses change. Toward the outside of the cluster, it acts like a load balancer through which Pods can be reached from both inside and outside the cluster.

In Kubernetes, Pod IP addresses and Service ClusterIPs can only be used within the cluster network and are invisible to applications outside the cluster. To let external applications access services in the cluster, Kubernetes currently provides the following solutions:

● NodePort: exposes the Service on the node network. Behind NodePort is kube-proxy, which bridges the Service network, the Pod network, and the node network.

This is fine for a test environment, but once dozens or hundreds of services run in the cluster, NodePort port management becomes a burden: each port can serve only one Service, and by default ports must fall in the range 30000-32767.
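To make the port-management problem concrete, here is a minimal Python sketch of the arithmetic behind the default range. The helper `allocate_node_port` is hypothetical and only illustrates the one-port-per-Service constraint; it is not how Kubernetes itself picks ports.

```python
from typing import Set

# Default NodePort range quoted in the text above
NODE_PORT_MIN = 30000
NODE_PORT_MAX = 32767

# Total number of Services that can hold a NodePort at the same time
capacity = NODE_PORT_MAX - NODE_PORT_MIN + 1


def allocate_node_port(in_use: Set[int]) -> int:
    """Return the lowest free port in the default range (hypothetical helper)."""
    for port in range(NODE_PORT_MIN, NODE_PORT_MAX + 1):
        if port not in in_use:
            return port
    raise RuntimeError("no free NodePort left in the default range")


# Two Services already hold ports, so a third one gets the next free port
next_port = allocate_node_port({30000, 30001})
```

Every Service consumes one port on every node, so the operator has to keep track of which port belongs to which Service across the whole cluster.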

● LoadBalancer: setting the Service type to LoadBalancer maps the Service to a load balancer address provided by the cloud service provider. This only applies when the Service runs on a public cloud platform. It is limited by what the platform offers, and deploying a load balancer there usually costs extra.

After the Service is submitted, Kubernetes calls the CloudProvider to create a load balancing service for you on the public cloud and configures the IP addresses of the proxied Pods as its backends.

● externalIPs: a Service can be assigned external IPs. If those external IPs are routed to one or more nodes in the cluster, the Service is exposed on them, and traffic entering the cluster through an external IP is routed to the Endpoints of the Service.

● Ingress: with only one or a small number of public IPs and load balancers, multiple HTTP services can be exposed to the external network at the same time. It works as a layer-7 reverse proxy.

Ingress can be loosely understood as a service for Services: it is a set of rules that forward user requests to one or more Services based on domain names and URL paths.
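The idea of "a set of rules keyed by domain name and URL path" can be sketched in a few lines of Python. The rule table and the Service names `api-svc`, `web-svc`, and `abc-svc` are made up for illustration, and a real ingress-controller does considerably more than this.

```python
from typing import List, Optional, Tuple

# Hypothetical rule table: (host, path prefix, backend Service name)
RULES: List[Tuple[str, str, str]] = [
    ("www.test.com", "/api", "api-svc"),
    ("www.test.com", "/", "web-svc"),
    ("www.abc.com", "/", "abc-svc"),
]


def route(host: str, path: str) -> Optional[str]:
    """Return the backend for the matching host with the longest matching path prefix."""
    best: Optional[Tuple[str, str]] = None
    for rule_host, prefix, backend in RULES:
        if rule_host != host:
            continue
        # Match whole path elements only: /api matches /api and /api/x, not /apifoo
        if path != prefix and not path.startswith(prefix.rstrip("/") + "/"):
            continue
        if best is None or len(prefix) > len(best[0]):
            best = (prefix, backend)
    return best[1] if best else None
```

A request for an unknown host returns `None` here; in a real deployment such traffic would typically go to a default backend.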

1.2 Composition of Ingress

● Ingress:

Ingress is an API object configured through a YAML file. It defines the rules for how requests are forwarded to Services, and can be thought of as a configuration template.

Ingress exposes Services inside the cluster over HTTP or HTTPS and gives them externally reachable URLs, load balancing, SSL/TLS capabilities, and domain name-based reverse proxying. Ingress depends on an ingress-controller to implement these functions.

● ingress-controller:

The ingress-controller is the program that actually implements reverse proxying and load balancing. It parses the rules defined by Ingress objects and forwards requests according to them.

The ingress-controller is not a built-in component of k8s. In fact, "ingress-controller" is just a general term, and users can choose among different implementations. The ingress-controllers maintained by the k8s project include the GCE controller for Google Cloud and ingress-nginx. Many others are maintained by third parties; see the official documentation for details. Whatever the implementation, the mechanism is similar, and only the specific configuration differs.

Generally, an ingress-controller runs as a pod that contains a daemon and a reverse proxy. The daemon continuously watches for changes in the cluster, generates configuration from the Ingress objects, and applies it to the reverse proxy. For example, ingress-nginx dynamically generates the nginx configuration, dynamically updates the upstreams, and reloads nginx when a new configuration must be applied. For convenience, the following examples all use ingress-nginx, which is officially maintained by k8s.

1.3 Working principle of Ingress-Nginx

(1) The ingress-controller interacts with the Kubernetes API server to dynamically detect changes to Ingress rules in the cluster.

(2) It then reads the rules, which specify which domain name maps to which Service, and generates a piece of nginx configuration from them.

(3) It writes this configuration into the nginx-ingress-controller pod. That pod runs an Nginx service, and the controller writes the generated configuration to the /etc/nginx/nginx.conf file.

(4) Finally, it reloads Nginx so that the configuration takes effect. This is how per-domain configuration and dynamic updates are achieved.
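Step (2) above, turning a rule into a piece of nginx configuration, can be sketched roughly as follows. This is a simplified illustration in Python and not the real ingress-nginx template, which is far more elaborate; the function name `render_server_block` and the generated directives are assumptions chosen for readability.

```python
def render_server_block(host: str, service: str, port: int) -> str:
    """Render a minimal nginx server block for one host -> Service rule (illustrative only)."""
    lines = [
        f"## start server {host}",
        "server {",
        f"    server_name {host};",
        "    listen 80;",
        "    location / {",
        f"        proxy_pass http://{service}:{port};",
        "    }",
        "}",
        f"## end server {host}",
    ]
    return "\n".join(lines)


# One rule: requests for www.test.com are forwarded to the Service nginx-app-svc on port 80
conf = render_server_block("www.test.com", "nginx-app-svc", 80)
print(conf)
```

In the controller, a block like this would be regenerated whenever the watched Ingress objects change, followed by the reload described in step (4).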

1.4 New Generation Ingress-controller ( Traefik )

Traefik is a modern HTTP reverse proxy and load balancer created to make deploying microservices easier. It supports multiple backends (Docker, Swarm, Kubernetes, Marathon, Mesos, Consul, Etcd, Zookeeper, BoltDB, Rest API, file…) and applies its configuration from them automatically and dynamically.

Simple comparison of Ingress-nginx and Ingress-Traefik

ingress-nginx:

It uses nginx as the front-end load balancer. The ingress controller continuously interacts with the Kubernetes API to pick up changes to back-end Services, pods, and so on in real time, then dynamically updates the nginx configuration and reloads it, which is how service discovery is achieved.

ingress-traefik:

Traefik was designed from the start to interact with the Kubernetes API in real time, detect changes to back-end Services, pods, and so on, and update and reload its configuration automatically. Relatively speaking, Traefik is faster and more convenient to operate and supports more features, making reverse proxying and load balancing more direct. (It is written in Go and natively supports K8S and other cloud-native platforms, with better compatibility, but its concurrency capability is about 60% of that of ingress-nginx.)

1.5 How Ingress is exposed

Method 1: Service in Deployment+LoadBalancer mode

This method is the best fit when the ingress is deployed in a public cloud. Use a Deployment to deploy the ingress-controller, then create a Service of type LoadBalancer associated with this group of pods. Most public clouds automatically create a load balancer for a LoadBalancer Service and usually bind a public address to it. Once the domain name resolves to this address, the cluster's services are exposed externally.

eg: Take Alibaba Cloud as an example

Method 2: DaemonSet+HostNetwork+nodeSelector

Use a DaemonSet combined with a nodeSelector to deploy the ingress-controller to specific nodes, then use hostNetwork to connect the pod directly to the network of the host node, so that the service is reachable on ports 80/443 of the host. The node running the ingress-controller then resembles an edge node in a traditional architecture, such as the nginx server at the entrance of a data center. This method has the shortest request path and performs better than NodePort mode. The disadvantage is that each node can run only one ingress-controller pod, because the pod uses the host's network and ports directly. It is well suited to high-concurrency production environments.

Method 3: Service in Deployment+NodePort mode

This method also deploys the ingress-controller as a Deployment and creates a corresponding Service, but of type NodePort. The ingress is then exposed on a specific port of the cluster nodes' IPs. Since the port exposed by NodePort is randomly assigned, a load balancer is usually placed in front to forward requests. This method is generally used when the IP addresses of the host machines are relatively fixed.

Although exposing the ingress through NodePort is simple and convenient, NodePort adds an extra layer of NAT, which can affect performance when the request volume is large.

2. Deploy DaemonSet+HostNetwork to expose Ingress

2.1 Deploy nginx-Ingress-controller

(1) Download the official nginx-ingress YAML manifest

# The mandatory.yaml file creates many resources, including the Namespace, ConfigMap, Role, ServiceAccount, and everything else needed to deploy the ingress-controller.

Official download address:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.25.0/deploy/static/mandatory.yaml

If the official address cannot be reached, you can use the Gitee mirror:

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.25.0/deploy/static/mandatory.yaml

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/mandatory.yaml

2.2 The specific deployment process of DaemonSet+HostNetwork

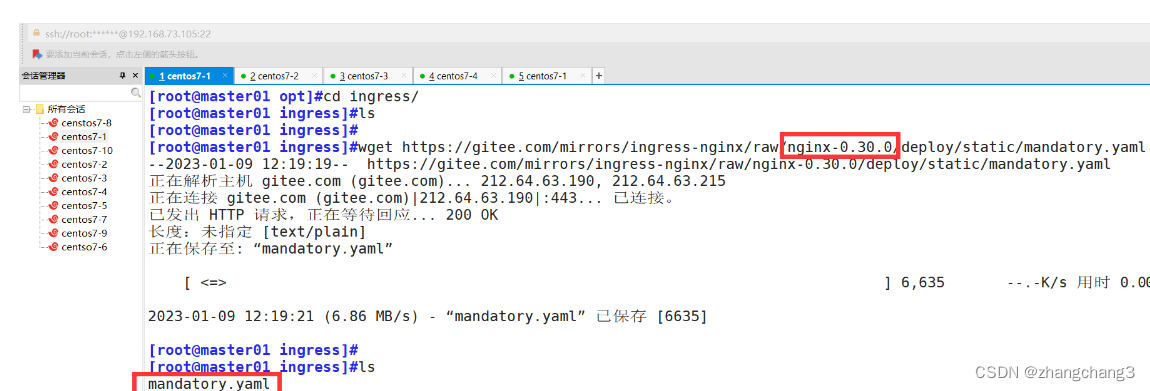

Step 1: Download and install the ingress-controller pod and related resources

mkdir /opt/ingress

cd /opt/ingress

# For convenience, the nginx-0.30.0 version is used directly here. For nginx-0.25.0, adjust the URL as shown above

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/mandatory.yaml

Step 2: Add a label to the node02 node

This step is needed because a DaemonSet deploys one pod on every node, and here I only want a single ingress-controller.

# Make nginx-ingress-controller run on node02

kubectl label node node02 ingress=true

kubectl get nodes --show-labels

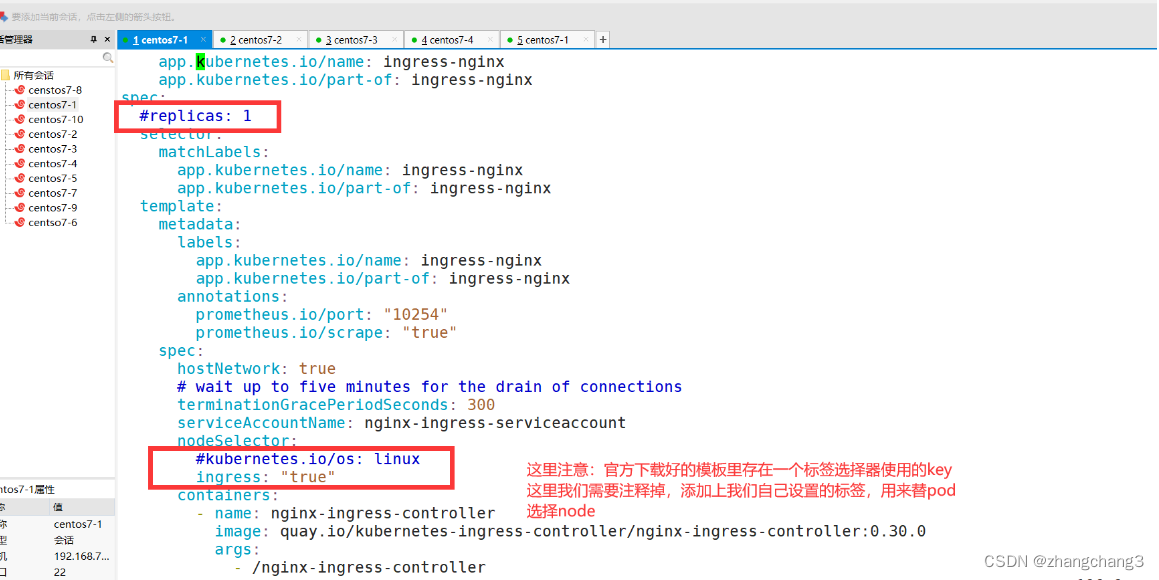

# Change the Deployment to a DaemonSet, specify the node to run on, and enable hostNetwork. The label selector pins the pod to node02

vim mandatory.yaml

...

apiVersion: apps/v1
# Modify kind
# kind: Deployment
kind: DaemonSet
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/part-of: ingress-nginx
spec:
  # Delete replicas
  # replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/part-of: ingress-nginx
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
      annotations:
        prometheus.io/port: "10254"
        prometheus.io/scrape: "true"
    spec:
      # Use the host network
      hostNetwork: true
      # Select the node to run on
      nodeSelector:
        ingress: "true"
      serviceAccountName: nginx-ingress-serviceaccount

…

Step 3: Upload the nginx-ingress-controller image archive and load it

# Upload the nginx-ingress-controller image archive ingree.contro.tar.gz to the /opt/ingress directory on all nodes, then decompress it and load the image

# Upload the image archive on the master node

cd /opt/ingress

tar zxvf ingree.contro.tar.gz

# Transfer the image package to the other nodes via scp

scp ingree.contro.tar root@192.168.73.106:/root

scp ingree.contro.tar [email protected]:/root

# Load the image package on all nodes

docker load -i ingree.contro.tar

Step 4: Start nginx-ingress-controller and check the health of the pod

kubectl apply -f mandatory.yaml

// nginx-ingress-controller is now running on node02

kubectl get pod -n ingress-nginx -o wide

kubectl get cm,daemonset -n ingress-nginx -o wide

// Check on node02

netstat -lntp | grep nginx

Since hostNetwork is configured, nginx now listens on ports 80/443/8181 directly on the node host. Port 8181 is the default backend that nginx-controller configures by default (when no Ingress rule matches a request, traffic is directed to this default backend).

As long as the node host has a public IP, you can point a domain name directly at it to expose the service to the external network. For a highly available nginx, you can deploy it on multiple nodes and put LVS+keepalived in front of them for load balancing.

Step 5: Create ingress rules

(1) Create the application Pod and Service resources

vim service-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-app-svc
spec:
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  selector:
    app: nginx

kubectl apply -f service-nginx.yaml

(2) Create an Ingress resource

// Create the Ingress

# Method 1: (the extensions/v1beta1 Ingress API is no longer served as of Kubernetes 1.22)

vim ingress-app.yaml

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: nginx-app-ingress
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        backend:
          serviceName: nginx-app-svc
          servicePort: 80

#Method 2:

vim ingress-app.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-app-ingress
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-app-svc
            port:
              number: 80

kubectl apply -f ingress-app.yaml

Test access:

# On a client, map the node IP of node02 to the domain name

vim /etc/hosts

192.168.73.107 www.test.com

curl www.test.com

# Enter the pod of the ingress controller

kubectl exec -it nginx-ingress-controller-mvgn9 -n ingress-nginx -- /bin/bash

less /etc/nginx/nginx.conf

// The reverse proxy configuration for this domain appears between the markers "start server www.test.com" and "end server www.test.com"

3. Service in Deployment+NodePort mode

3.1 Deploy the Service in Deployment+NodePort mode

Step 1: Download related ingress and service-nodeport templates

mkdir /opt/ingress/test

cd /opt/ingress/test

# Official download addresses:

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/mandatory.yaml

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

# Gitee mirror addresses:

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/mandatory.yaml

wget https://gitee.com/mirrors/ingress-nginx/raw/nginx-0.30.0/deploy/static/provider/baremetal/service-nodeport.yaml

# Upload the image package ingress-controller-0.30.0.tar to the /opt/ingress-nodeport directory on all nodes and load the image

docker load -i ingress-controller-0.30.0.tar

Step 2: Start the official template directly and use it

kubectl apply -f mandatory.yaml

kubectl apply -f service-nodeport.yaml

# If Pod scheduling fails, kubectl describe pod on the resource shows:

Warning FailedScheduling 18s (x2 over 18s) default-scheduler 0/2 nodes are available: 2 node(s) didn't match node selector

Solutions:

1. Add the corresponding label to the node that the pod should be scheduled on

# Use the label required by the nodeSelector in the YAML file above

kubectl label nodes node_name kubernetes.io/os=linux

2. Delete the nodeSelector from the YAML file. If there is no requirement on the node, simply remove the node selector
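The scheduling failure above comes down to a simple rule: a node is eligible only if it carries every label listed in the pod's nodeSelector. A minimal Python sketch of that check follows; the function name and the sample label set are illustrative assumptions, not scheduler code.

```python
from typing import Dict


def node_matches(node_labels: Dict[str, str], node_selector: Dict[str, str]) -> bool:
    """A node is eligible only if it has every key/value pair in the selector."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


# Hypothetical labels of a node that lacks the OS label
node_labels = {"kubernetes.io/hostname": "node02"}
selector = {"kubernetes.io/os": "linux"}

before = node_matches(node_labels, selector)   # label missing, so the pod cannot be scheduled

# Solution 1: add the label (what the kubectl label command does)
node_labels["kubernetes.io/os"] = "linux"
after = node_matches(node_labels, selector)

# Solution 2: remove the selector, so every node qualifies
without_selector = node_matches({"kubernetes.io/hostname": "node02"}, {})
```

Both solutions make the check pass; the first keeps the placement constraint, the second drops it.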

3.2 Operation demonstration of Ingress Http proxy access

# Create the Deployment, Service, and Ingress YAML resources

vim ingress-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-test
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

-------------------------------------------------------------------------------------------

# host: the hostname can be an exact match or a wildcard match, but a wildcard covers only a single DNS label (e.g. *.foo.com does not match baz.bar.foo.com).

# pathType supports three path types:

● ImplementationSpecific: for this path type, matching depends on the IngressClass. Implementations may treat it as a separate pathType or handle it the same way as Prefix or Exact.

● Exact: matches the URL path exactly and is case-sensitive.

● Prefix: matches based on a URL path prefix split by /. Matching is case-sensitive and done element by element. There is no match if the last element of the rule path is only a substring of the corresponding element in the request path (e.g. /foo/bar matches /foo/bar/baz, but not /foo/barbaz).

For details, see: https://kubernetes.io/zh-cn/docs/concepts/services-networking/ingress/#the-ingress-resource

-------------------------------------------------------------------------------------------

kubectl apply -f ingress-nginx.yaml
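The host and pathType matching rules in the note above can be sketched in Python as follows. This is an illustrative approximation of the documented semantics, written under the assumption that wildcards only appear as a leading `*.`; the function names are made up, and ImplementationSpecific is left out because its behavior depends on the controller.

```python
def host_matches(rule_host: str, request_host: str) -> bool:
    """Exact match, or a leading '*.' wildcard that covers exactly one DNS label."""
    if not rule_host.startswith("*."):
        return rule_host == request_host
    suffix = rule_host[1:]  # e.g. ".foo.com"
    if not request_host.endswith(suffix):
        return False
    label = request_host[: -len(suffix)]
    # The wildcard must stand for one non-empty label without further dots
    return label != "" and "." not in label


def path_matches(rule_path: str, request_path: str, path_type: str) -> bool:
    """Case-sensitive Exact or element-wise Prefix matching."""
    if path_type == "Exact":
        return rule_path == request_path
    if path_type == "Prefix":
        rule_parts = [p for p in rule_path.split("/") if p]
        request_parts = [p for p in request_path.split("/") if p]
        return request_parts[: len(rule_parts)] == rule_parts
    raise ValueError(f"unsupported pathType in this sketch: {path_type}")
```

Splitting on `/` before comparing is what keeps `/foo/bar` from matching `/foo/barbaz`, which a plain string prefix check would get wrong.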

3.3 Ingress HTTP proxy access virtual host

nginx and Apache support three kinds of virtual hosts: IP-based, domain name-based, and port-based. ingress-nginx can serve virtual hosts in a similar way. The following uses the most common kind, the domain name-based virtual host, as an example.

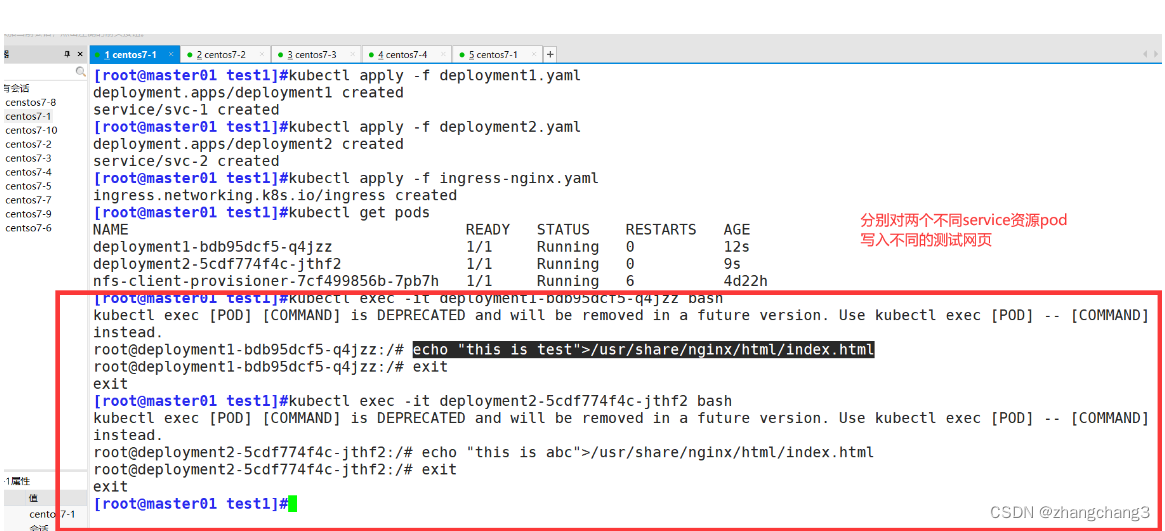

(1) Create the virtual host 1 resources

vim deployment1.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment1
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx1
  template:
    metadata:
      labels:
        name: nginx1
    spec:
      containers:
      - name: nginx1
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-1
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx1

kubectl apply -f deployment1.yaml

(2) Create a virtual host 2 resource

vim deployment2.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deployment2
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx2
  template:
    metadata:
      labels:
        name: nginx2
    spec:
      containers:
      - name: nginx2
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: svc-2
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx2

kubectl apply -f deployment2.yaml

(3) Create ingress resource

vim ingress-nginx.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress1
spec:
  rules:
  - host: www.test.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-1
            port:
              number: 80
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress2
spec:
  rules:
  - host: www.abc.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: svc-2
            port:
              number: 80

kubectl apply -f ingress-nginx.yaml

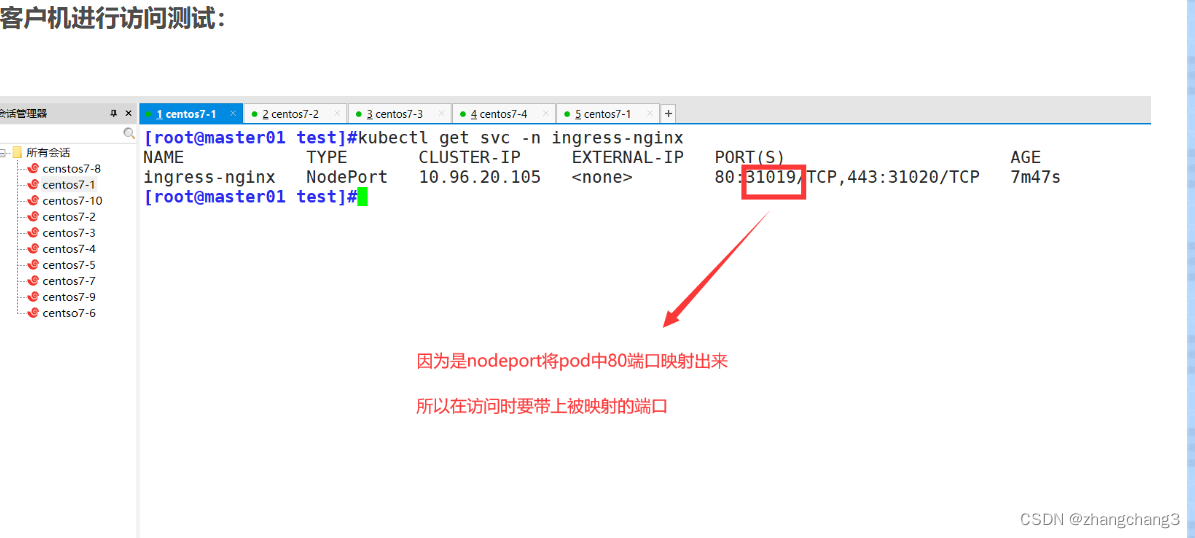

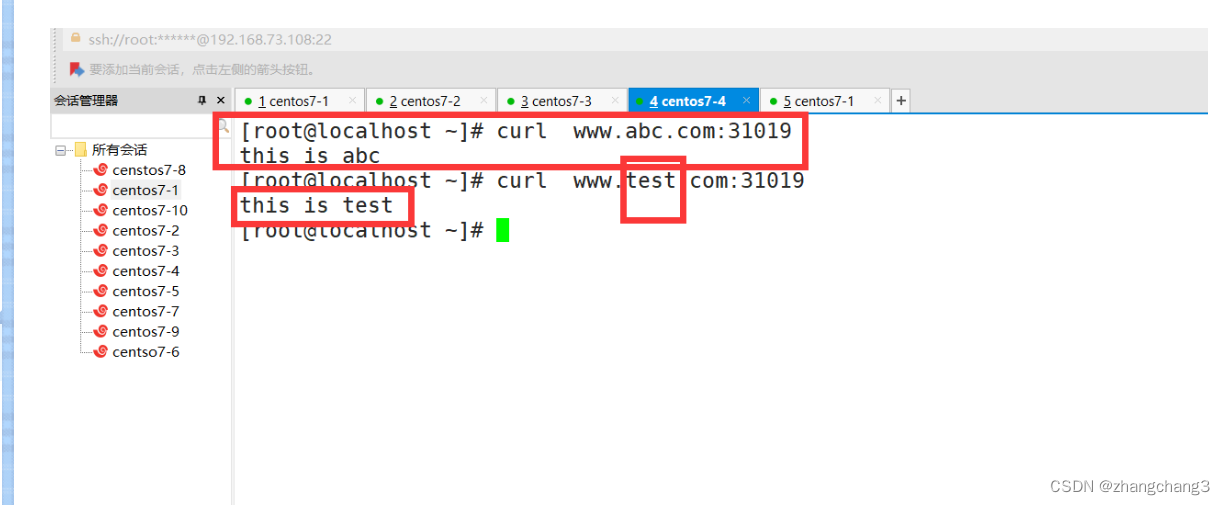

Test access from a client:

vim /etc/hosts

# The IP of any node can be used here

192.168.50.25 www.test.com www.abc.com

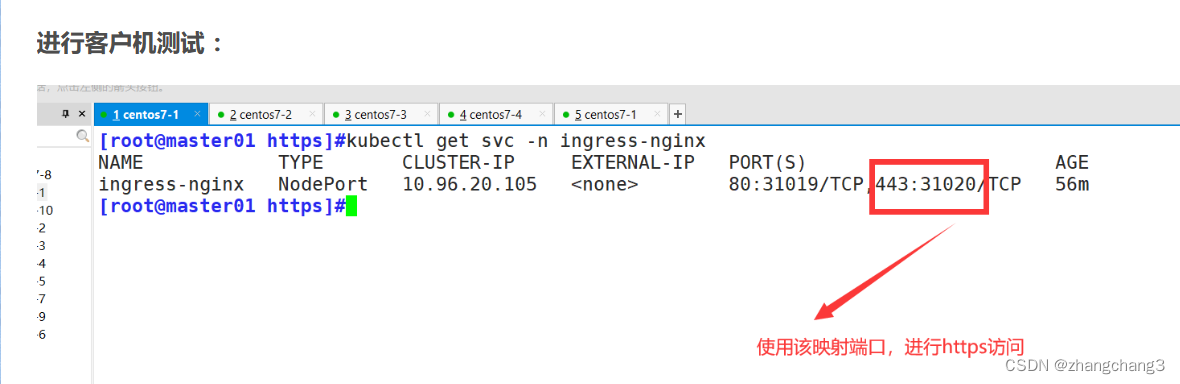

3.4 Ingress HTTPS proxy access

(1) Create an SSL certificate and store it in a Secret resource

# Install openssl

yum install -y openssl

# Create the SSL certificate

openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 -keyout tls.key -out tls.crt -subj "/CN=nginxsvc/O=nginxsvc"

# Create a Secret resource to store it

kubectl create secret tls tls-secret --key=tls.key --cert=tls.crt
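For readers wondering what the Secret created above contains, the following Python sketch builds the equivalent object by hand: a Secret of type kubernetes.io/tls whose data holds the base64-encoded certificate and key under the keys tls.crt and tls.key. The helper name `build_tls_secret` and the placeholder PEM bytes are assumptions for illustration; in practice the kubectl command does this for you.

```python
import base64
import json


def build_tls_secret(name: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """Build a manifest equivalent to what 'kubectl create secret tls' produces (sketch)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "kubernetes.io/tls",
        "metadata": {"name": name},
        "data": {
            # Secret data values are always base64-encoded
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


# Placeholder bytes stand in for the real contents of tls.crt and tls.key
secret = build_tls_secret("tls-secret", b"<certificate PEM>", b"<private key PEM>")
print(json.dumps(secret, indent=2))
```

The Ingress refers to this object only by name through spec.tls[].secretName, so the Secret must live in the same namespace as the Ingress.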

(2) Create deployment, Service, Ingress Yaml resources

vim ingress-https.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 2
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-https
spec:
  tls:
  - hosts:
    - www.asd.com
    secretName: tls-secret
  rules:
  - host: www.asd.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

kubectl apply -f ingress-https.yaml

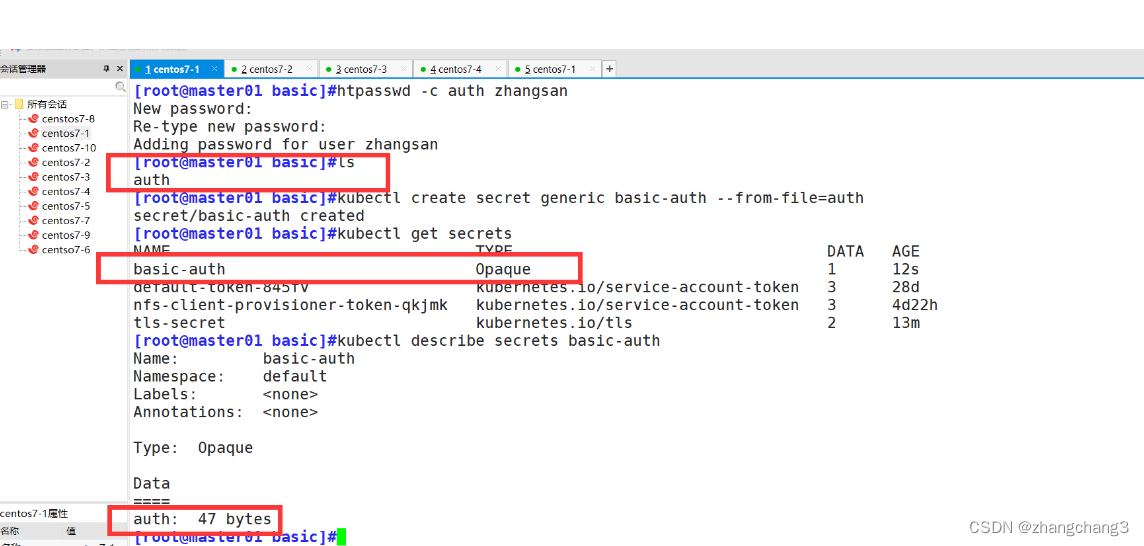

3.5 Configuring nginx BasicAuth authentication with Ingress

(1) Generate a user password authentication file and create a Secret resource to store it

yum -y install httpd-tools

htpasswd -c auth zhangsan # The authentication file must be named auth

kubectl create secret generic basic-auth --from-file=auth

(2) Create an Ingress resource

vim ingress-auth.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-auth
  annotations:
    # Set the authentication type to basic
    nginx.ingress.kubernetes.io/auth-type: basic
    # Set the name of the Secret resource to basic-auth
    nginx.ingress.kubernetes.io/auth-secret: basic-auth
    # Set the message shown in the authentication prompt
    nginx.ingress.kubernetes.io/auth-realm: 'Authentication Required - zhangsan'
spec:
  rules:
  - host: www.asd.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

(3) Create pod and service resources

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx

Do a client access test:
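During the access test, the browser or HTTP client sends the credentials in an Authorization header, which nginx then checks against the auth file stored in the Secret. A minimal Python sketch of how a client builds that header follows; the password `s3cret` is a made-up placeholder, and the function name is only illustrative.

```python
import base64
from typing import Dict


def basic_auth_header(user: str, password: str) -> Dict[str, str]:
    """Build the HTTP Basic Authorization header: base64 of 'user:password'."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


# zhangsan is the user created with htpasswd above; the password is a placeholder
headers = basic_auth_header("zhangsan", "s3cret")
print(headers)
```

Because base64 is an encoding and not encryption, Basic authentication is usually combined with HTTPS so the credentials are not sent in the clear.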

3.6 Set nginx rewrite in Ingress

# metadata.annotations configuration notes

nginx.ingress.kubernetes.io/rewrite-target: <string> # Target URI where the traffic must be redirected

nginx.ingress.kubernetes.io/ssl-redirect: <boolean> # Indicates if the location section is only accessible over SSL (defaults to true when the Ingress contains a certificate)

nginx.ingress.kubernetes.io/force-ssl-redirect: <boolean> # Forces the redirection to HTTPS even if the Ingress is not TLS enabled

nginx.ingress.kubernetes.io/app-root: <string> # Defines the application root that the controller must redirect to if it is in the '/' context

nginx.ingress.kubernetes.io/use-regex: <boolean> # Indicates whether the paths defined on the Ingress use regular expressions
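When use-regex is combined with rewrite-target, capture groups from the path can be referenced in the target as $1, $2, and so on. The Python sketch below imitates that substitution under the assumption of a path such as /something(/|$)(.*) with the target /$2; these example values are not taken from this article's manifests, and the function is only an approximation of what nginx does.

```python
import re


def rewrite(path_regex: str, target: str, request_path: str) -> str:
    """Replace $N placeholders in the target with capture groups from the matched path."""
    match = re.match(path_regex, request_path)
    if match is None:
        return request_path  # no rule matched, so the path is left alone

    def substitute(placeholder: "re.Match[str]") -> str:
        # An unmatched optional group yields None, which becomes an empty string
        return match.group(int(placeholder.group(1))) or ""

    return re.sub(r"\$(\d)", substitute, target)


# Hypothetical rule: strip the /something prefix before passing the request on
rewritten = rewrite(r"/something(/|$)(.*)", "/$2", "/something/new")
```

With this hypothetical rule, everything after /something/ is captured by the second group and becomes the new request path.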

Demonstration:

We create an HTTP pod, a Service, and an Ingress (www.asd.com), and then write the rewrite Ingress.

(1) Create the resources that serve as the rewrite target

vim ingress-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-app
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx
  template:
    metadata:
      labels:
        name: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-svc
spec:
  ports:
  - port: 80
    targetPort: 80
    protocol: TCP
  selector:
    name: nginx
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-test
spec:
  rules:
  - host: www.asd.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-svc
            port:
              number: 80

kubectl apply -f ingress-nginx.yaml

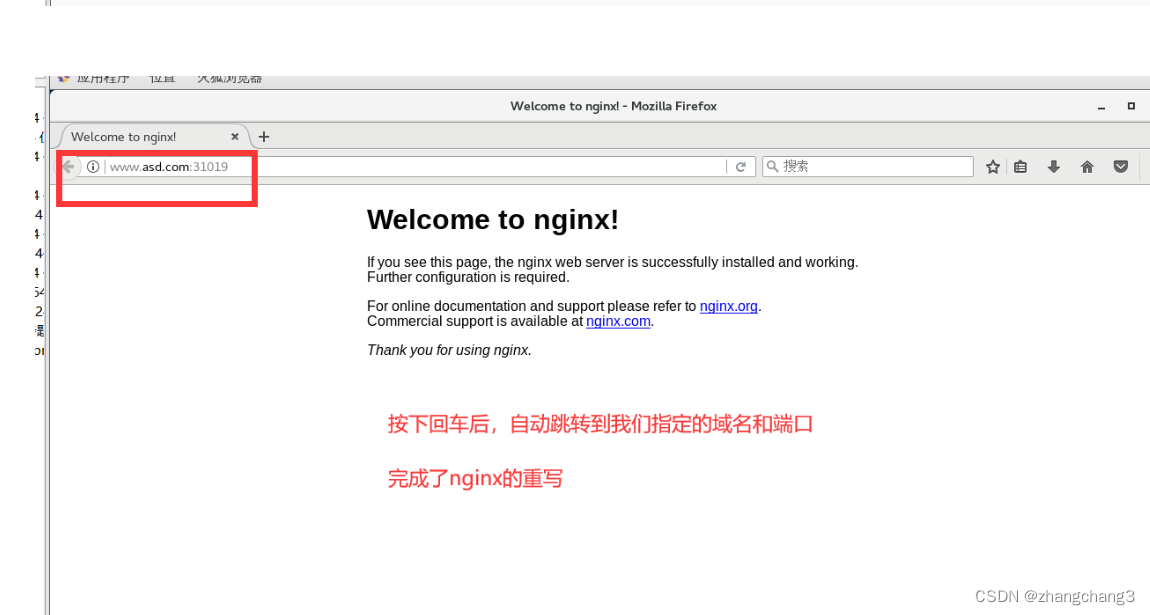

2) Rewrite the edit of the ingress resource

vim write-ingress.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: nginx-rewrite

annotations:

nginx.ingress.kubernetes.io/rewrite-target: http://www.asd.com:31019

spec:

rules:

- host: www.write.com http: path s: - path: / pathType: Prefix backend: #Since

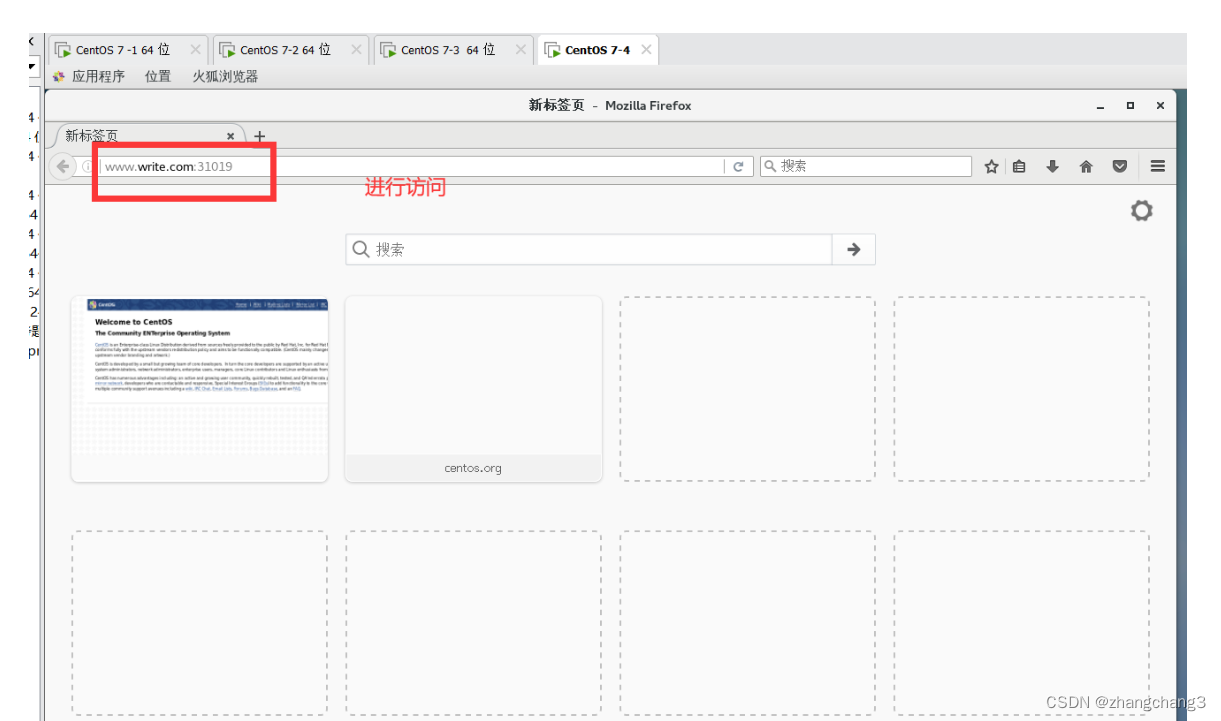

www.rewrite.com is only used for jumping and does not require the existence of a real site, the name of the svc resource can be freely defined service: name: nginx-svc port: number: 80 kubectl apply -f write-ingress.yaml

Test access from a client:

# Add the mapping on the client

echo "192.168.73.107 www.write.com" >> /etc/hosts

Summary

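Using Ingress:

Deploying the ingress-controller with DaemonSet + host network mode:

Client (firewall, front-end load balancer) -> port 80 or 443 on the node (ingress-controller; in host network mode the Pod shares the node host's network namespace) -> Service of the application Pod -> application Pod

Deploying with Deployment + Service (NodePort):

Client (firewall, front-end load balancer) -> Service of the ingress-controller (NodeIP:NodePort) -> ingress-controller (running as a Pod) -> Service of the application Pod -> application Pod

Ingress configuration: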

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: resource name
spec:
  rules:
  - host: domain name (exact or wildcard match; for example, *.kgc.com matches www.kgc.com or mail.kgc.com, but not ky22.www.kgc.com)
    http:
      paths:
      - path: URL path after the domain name (for example, / for the site root, or /test)
        pathType: Prefix|Exact (Exact matches the URL path exactly; Prefix matches by prefix on complete path elements only, so /test/abc matches /test/abc/123 but not /test/abc123)
        backend:
          service:
            name: name of the Service
            port:
              number: port of the Service

Domain name-based proxy forwarding:

spec:
  rules:
  - host: domain name 1
    http:
      ....
  - host: domain name 2
    http:
      ....

URL path-based proxy forwarding:

spec:
  rules:
  - host:
    http:
      paths:
      - path: URL path 1
        ....
      - path: URL path 2
        ....

HTTPS proxy forwarding:

First issue the TLS certificate and private key files

Create a Secret resource of type tls and store the certificate and private key in it

Create an Ingress resource that references the tls Secret

spec:
  tls:
  - hosts:
    - domain name served over HTTPS
    secretName: name of the tls Secret
  rules:
  ....

basic-auth access authentication:

Create a Secret resource of type Opaque and store the contents of the basic authentication file in it

Create the Ingress resource

metadata:
  annotations:
    nginx.ingress.kubernetes.io/auth-type: basic
    nginx.ingress.kubernetes.io/auth-secret: name of the Secret that stores the basic authentication file
    nginx.ingress.kubernetes.io/auth-realm: 'prompt message'

rewrite:

metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: target domain name or URL path to redirect to

http|https://<domain name>:<ingress-controller svc port>