Table of contents

1.1 Pre-training in the image field

1.2 The idea of pre-training

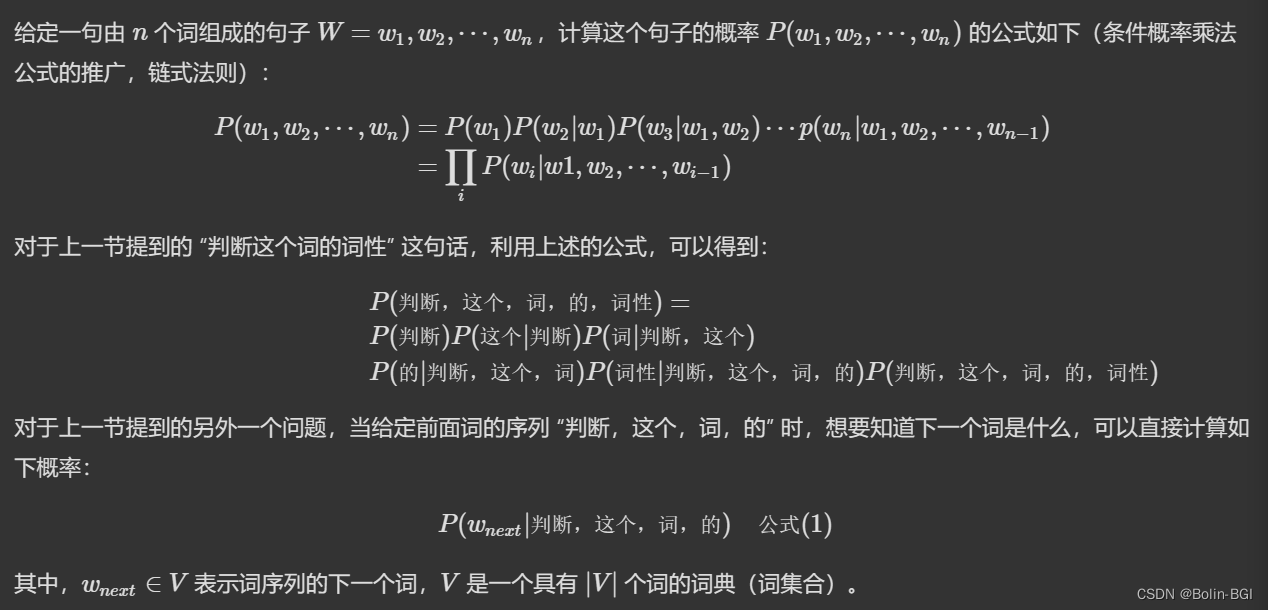

2.1 Statistical Language Model

2.2 Neural Network Language Model

5. The BUG that the traditional neural network model cannot obtain timing information

Sebastian Ruder, a computer scientist at DeepMind, has given the milestone progress of natural language processing since the 21st century from the perspective of neural network technology, as shown in the following table:

| years | year 2013 | Year 2014 | 2015 | 2016 | 2017 |

|---|---|---|---|---|---|

| technology | word2vec | GloVe | LSTM/Attention | Self-Attention | Transformer |

| years | 2018 | 2019 | 2020 |

|---|---|---|---|

| technology | GPT/ELMo/BERT/GNN | XLNet/BoBERTa/GPT-2/ERNIE/T5 | GPT-3/ELECTRA/ALBERT |

1. Pre-training

1.1 Pre-training in the image field

Before introducing the pre-training in the image field, we first introduce the convolutional neural network (CNN). CNN is generally used for image classification tasks, and CNN is composed of multiple hierarchical structures. The image features learned by different layers are also different. The shallower The more general the features learned by the layer (horizontally and vertically), the stronger the correlation between the features learned by the deeper layer and specific tasks (face-face outline, car-car outline), as shown in the following figure :

Suppose, we have a task: there are 10 pictures of cats, dogs, and tigers each, and a deep neural network is designed to classify the pictures of the three of them. For the above tasks, it is basically impossible to design a deep neural network by hand, because a weak point of deep learning is that the demand for data volume is particularly large during the training phase . We only have 30, which is obviously not enough.

Although our data volume is very small, can we use the large number of classified and labeled pictures that exist on the Internet . For example, there are 14 million pictures in ImageNet, and these pictures have been classified and labeled.

The above-mentioned idea of using existing pictures on the Internet is the idea of pre-training. The specific method is:

- Through the ImageNet dataset we train a model A

- Since the above-mentioned CNN's shallow learned features are particularly versatile , we can make some improvements to model A to get model B (two methods)

- Freezing: The shallow layer parameters use the parameters of model A, the high-level parameters are randomly initialized, the shallow layer parameters remain unchanged , and then use 30 pictures to train the parameters

- Fine-tuning: The shallow parameters use the parameters of model A, the high-level parameters are randomly initialized, and then the parameters are trained with 30 pictures, but here the shallow parameters will continue to change with the training of the task

Through the above explanation, make a summary of image pre-training (refer to the above picture): For a task A with a small amount of data, first build a CNN model A through an existing large amount of data, due to the superficial learning of CNN The feature versatility is particularly strong, so when building a CNN model B, the shallow parameters of model B use the shallow parameters of model A, and the high-level parameters of model B are randomly initialized, and then use the data training of task A by freezing or fine-tuning Model B, model B is the model corresponding to task A.

1.2 The idea of pre-training

With the introduction of pre-training in the image field, we give the idea of pre-training here:

The parameters of model A corresponding to task A are no longer randomly initialized, but model B is obtained through pre-training of task B, and then model A is initialized with the parameters of model B, and model A is trained with the data of task A . NOTE: The parameters of model B are initialized randomly.

2. Language model

The twenty-three-year-old Youde is quoted here

The following will introduce two branches of language model, statistical language model and neural network language model .

2.1 Statistical Language Model

The basic idea of statistical language model is to calculate conditional probability .

2.2 Neural Network Language Model

The neural network language model introduces the neural network architecture to estimate the distribution of words, and measures the similarity between words by the distance of word vectors . Therefore, for words that do not appear, they can also be estimated by similar words, thereby avoiding the problem of data sparsity .

3. Word vector

When describing the neural network language model, I mentioned Onehot encoding and word vectors to see what it is.

3.1 Onehot encoding

Representing words with vectors is a core technology for introducing deep neural network language models into the field of natural language processing.

In natural language processing tasks, the training set is mostly a word or a word, and it is very important to convert them into numerical data suitable for computer processing.

In the early days, the method that people thought of was to use Onehot encoding, as shown in the following figure:

For the explanation of the above figure, suppose there is a dictionary V containing 4 times, "Rome" is located in the first position of the dictionary, "Paris" is located in the second position of the dictionary, using the one-hot representation method, for "Rome" For the vector, except the first position is 1, the rest of the positions are 0; for the vector of "Paris", except the second position is 1, the rest of the positions are 0.

For vectors represented by one-hot representation, if the cosine similarity is used to calculate the similarity between vectors, it can be clearly found that the similarity result of any two vectors is 0 , that is, any two are not related, that is to say, the one-hot representation cannot Solve the similarity problem between words.

3.2 Word Embedding

Since the one-hot representation cannot solve the similarity problem between words, this representation was quickly replaced by a word vector representation, that is, a word vector C(wi) that appears in the neural network language model, this C(wi ) In fact, it is the Word Embedding value corresponding to the word, that is, the word vector .

For example, there are now four one-hot encodings of words w1, w2, w3, and w4:

w1=[1,0,0,0]

w2=[0,1,0,0]

w3=[0,0,1,0]

w4=[0,0,0,1]

There is a V×m matrix Q, this matrix Q contains V=4 lines, V represents the size of the dictionary, and the content of each line represents the Word Embedding value of the corresponding word. It’s just that the content of Q is also a network parameter, which needs to be learned. At the beginning of the training, the matrix Q is initialized with random values. After the network is trained, the content of the matrix Q is correctly assigned, and each row represents the Word embedding value corresponding to a word.

Inner product with w1, w2, w3, w4 respectively:

w1*Q=c1

w2*Q=c2

w3*Q=c3

w4*Q=c4

also equivalent to

w = [w1,w2,w3,w4] c = [c1,c2,c3,c4]

W*Q = C

softmax (U[tanh(WC+B1)]+B2)== [0.1,0.1,0.2,0.1, 0.5 ]

If the four words are "I", "Love", "You", "中", then the probability that the next word is "国" is 0.5.

Q is a random matrix learnable, a parameter, and a by-product of the neural network language model. It is equivalent to even if the one-hot encoding matrix is very large and has a high dimension, the dimension size can be controlled by the inner product with Q. Through a lot of learning, c is trained more and more accurately, and can almost represent the original word w, and then become a "word vector".

Now if you give any word, such as "apple": the one-hot encoding of apple is w1 = [1,0,0,0, ... ,0,0]

w1 * Q = c1 c1 is the word vector for judging the word;

Similar to the random matrix Q in the figure below, this matrix Q contains V rows, and V represents the dictionary size. It’s just that the content of Q is also a network parameter, which needs to be learned. At the beginning of the training, the matrix Q is initialized with random values. After the network is trained, the content of the matrix Q is correctly assigned , and each row represents the Word embedding value corresponding to a word .

But one-hot encoding has a lot of defects; for example, if one-hot encoding is used for 3,500 words in high school English, the RAM will not be enough during the calculation process, and the COS similarity between each word is 0, making further learning impossible.

At the same time, this word vector solves the similarity problem between words. The calculation process is as follows:

Through the calculation of the word vector above, it can be found that the word vector of the fourth word is expressed as [10 12 19].

If the cosine similarity is used again to calculate the similarity between two words, the result is no longer 0, which can describe the similarity between two words to a certain extent.

The picture below is an example on the Internet. After a word is expressed as Word Embedding, it is easy to find other words with similar semantics.

4. Word2Vec model

Simple understanding, Word2Vec is a neural network language model, its main task is to generate word vector Q;

Word2Vec has two training methods:

- The first is called CBOW, the core idea is to remove a word from a sentence , and use the context and context of the word to predict the word that has been removed;

- The second is called Skip-gram, which is just the opposite of CBOW. Enter a word and ask the network to predict its context words.

Example: As shown in the figure below, a question answering model of NLP: Given a question Question-X, given an answer-Y, judge whether sentence Y is the correct answer to question X.

Each word in the sentence is input in the form of Onehot, and then multiplied by the learned Word Embedding matrix Q, and the Word Embedding corresponding to the word is directly taken out.

The Word Embedding matrix Q is actually the network parameter matrix mapped from the network Onehot layer to the embedding layer.

Using Word Embedding is equivalent to initializing the network from the Onehot layer to the embedding layer with the pre-trained parameter matrix Q. This is actually the same as the low-level pre-training process in the image field. The difference is that Word Embedding can only initialize the first-layer network parameters, and the higher-level parameters are powerless .

Downstream NLP tasks are also similar to images when using Word Embedding. There are two ways:

- One is Frozen, which means that the network parameters of the Word Embedding layer are fixed;

- The other is Fine-Tuning, that is, the Word Embedding layer parameters need to be updated with the training process when using a new training set for training.

The pre-trained language model summarizes a sentence, (we first use one-hot encoding, and then use the Q matrix pre-trained by Word2Vc to directly get the word vector, and then proceed to the next task)

- 1. Freezing: the Q matrix can not be changed

- 2. Fine-tuning: change the Q matrix as the task changes

5. The BUG that the traditional neural network model cannot obtain timing information

Traditional neural networks cannot obtain timing information, but timing information is very important in natural language processing tasks.

When explaining Word Embedding, careful readers must have discovered that these word representation methods are static in nature, and each word has a uniquely determined word vector, which cannot be changed according to different sentences , and cannot handle natural language processing tasks. polysemy problem .

Example:

- "I ate an apple", the part of speech and meaning of "apple" depends on the information of the previous word, if there is no such words as "I ate an apple", "apple" can also be translated as the bitten one created by Jobs A bite of apple.

- As shown in the figure below, for example, the polysemous word Bank has two common meanings, but Word Embedding cannot distinguish between these two meanings when encoding the word bank.

Although the words appearing in the context of bank in the two sentences containing bank are different, when the language model is used for training, no matter what context the sentence passes through Word2Vec, the same word bank is predicted, and the same word occupies The parameter space of the same row will cause two different context information to be encoded into the same Word Embedding space, which will cause Word Embedding to be unable to distinguish different semantics of polysemous words.

So what to do? Will I write again tomorrow? Thanks for the explanation of the personal space of the programmer who went fishing _哔哩哔哩_bilibili!

Next issue: From RNN to LSTM to ELMo to solve this problem.