Gang scheduling isn't worth it yet

- Coscheduling (coordinated scheduling):

Coscheduling is the principle, in concurrent systems, of scheduling related processes to run on different processors at the same time (in parallel). There are various concrete implementations of this idea. If some threads of a process are not scheduled when the process needs to communicate, the running threads block while waiting to interact with the descheduled ones. In the worst case this allows at most one interaction per time slice, which reduces throughput and causes high latency.

In coscheduling, the threads of a related set that are not scheduled together with the rest (and can therefore cause the others to block) are called "fragments".

- Gang scheduling is a strict variant of coscheduling that allows no fragments: either all threads of a gang run at the same time, or none of them do.
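To make the distinction concrete, here is a minimal sketch of a single placement decision. It assumes a job of `gang_size` threads and a known number of free cores. The function names `cosched_place` and `gang_place` are hypothetical and do not correspond to any real scheduler API.

```python
def cosched_place(gang_size, free_cores):
    """Coscheduling: start as many threads as fit; the rest are fragments."""
    placed = min(gang_size, free_cores)
    return placed, gang_size - placed  # (threads running now, fragments)


def gang_place(gang_size, free_cores):
    """Gang scheduling: all threads start together or none do."""
    placed = gang_size if gang_size <= free_cores else 0
    return placed, 0  # a gang never leaves fragments behind


# A 4-thread job with only 3 free cores:
print(cosched_place(4, 3))  # (3, 1): three threads run, one is a fragment
print(gang_place(4, 3))     # (0, 0): the whole gang waits for a later slot
```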

1 Introduction

General-purpose operating systems currently gain little from gang scheduling.

This article focuses on the SPMD (single program, multiple data) model, in which the data is partitioned among multiple threads that all run the same program. SPMD is widely used in OpenMP, numerical libraries, and MapReduce.

2 Background and related work

Gang scheduling can reduce synchronization overhead in two ways:

- All threads of a gang are scheduled simultaneously, so they reach synchronization points at roughly the same time.

- Threads can busy-wait at synchronization points instead of blocking. This avoids context-switch overhead and reduces waiting time.
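The busy-waiting side of this argument can be sketched with a sense-reversing spin barrier. This is an illustrative Python sketch, not the barrier used in the paper. The class `SpinBarrier` and the helper `demo` are hypothetical. Waiting threads spin on a shared flag rather than asking the OS to deschedule them, and this only pays off when all of them are actually running at the same time.

```python
import threading


class SpinBarrier:
    """Sense-reversing barrier whose waiters busy-wait instead of blocking."""

    def __init__(self, parties):
        self.parties = parties
        self.remaining = parties
        self.sense = False
        self.lock = threading.Lock()  # guards only the arrival counter

    def wait(self, local_sense):
        """Arrive at the barrier and return the caller's new local sense."""
        local_sense = not local_sense
        with self.lock:
            self.remaining -= 1
            last = self.remaining == 0
            if last:
                # Last arrival resets the counter, then releases all spinners.
                self.remaining = self.parties
                self.sense = local_sense
        while self.sense != local_sense:
            pass  # busy-wait: never ask the OS to deschedule this thread
        return local_sense


def demo(n_threads=4, rounds=10):
    """Run a BARRIER-like loop; return (total arrivals, rounds that broke ordering)."""
    barrier = SpinBarrier(n_threads)
    arrivals = [0]
    arrivals_lock = threading.Lock()
    errors = []

    def worker():
        sense = False
        for r in range(rounds):
            with arrivals_lock:
                arrivals[0] += 1  # stands in for the "work" of round r
            sense = barrier.wait(sense)
            # Past the barrier, every thread must have finished round r.
            if arrivals[0] < (r + 1) * n_threads:
                errors.append(r)

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return arrivals[0], errors


print(demo())  # (40, [])
```

Under CPython's global interpreter lock, the spinning threads do not truly run in parallel. The sketch therefore shows the synchronization protocol, not its performance.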

Gang scheduling also has some disadvantages:

When gang sizes do not match the number of processors, the leftover processors cannot be filled (fragmentation), so processors are underutilized.

In practice, the benefits of gang scheduling are small, and they appear only when a series of conditions are met.
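Fragmentation can be illustrated with a first-fit packing of gangs into time slots, in the spirit of Ousterhout's scheduling matrix, where rows are time slices and columns are processors. First-fit packing and the name `pack_gangs` are assumptions made for this sketch.

```python
def pack_gangs(gang_sizes, cores):
    """First-fit packing of gangs into time slots of `cores` processors each.

    A gang must fit entirely within one slot (no fragments).
    Returns the slots (each a list of gang sizes) and the overall utilization.
    """
    slots = []
    for size in gang_sizes:
        if size > cores:
            raise ValueError("gang larger than the machine")
        for slot in slots:
            if sum(slot) + size <= cores:
                slot.append(size)
                break
        else:
            slots.append([size])  # no existing slot fits: open a new one
    utilization = sum(gang_sizes) / (len(slots) * cores)
    return slots, utilization


# Two 3-thread gangs on a 4-core machine: one core idles in every slot.
print(*pack_gangs([3, 3], cores=4))  # [[3], [3]] 0.75
```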

3 Conditions for useful gang scheduling

For gang scheduling to outperform other scheduling policies, the following conditions must be met:

- fine-grained synchronization,

- good load balancing of work,

- latency-sensitive workloads,

- multiple important parallel workloads,

- a need to adapt under changing conditions.

Some assumptions are required beyond these conditions:

- Multiple tasks must compete for the processors. Otherwise the scheduler has no decision to make.

- The threads must synchronize with each other. Otherwise no thread ever waits, and it makes no difference whether they are scheduled at the same time.

- The overhead of blocking is greater than the overhead of spin waiting.
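The last assumption can be made concrete with a simple cost model for one waiting thread. The model, the function `wait_cost`, and its parameters are hypothetical. The `spin_then_block` policy is the classic competitive strategy: spin for about as long as blocking would cost, then block.

```python
def wait_cost(wait_time, block_cost, policy, spin_limit=None):
    """CPU time lost by one waiting thread under a toy cost model.

    wait_time:  time until the thread it waits for arrives
    block_cost: cost of blocking and later being woken (context switches)
    """
    if policy == "spin":
        return wait_time  # burn the CPU for the whole wait
    if policy == "block":
        return block_cost  # always pay the context-switch cost
    if policy == "spin_then_block":
        limit = block_cost if spin_limit is None else spin_limit
        if wait_time <= limit:
            return wait_time  # partner arrived while we were still spinning
        return limit + block_cost  # gave up spinning, then blocked
    raise ValueError(f"unknown policy: {policy}")


for w in (2, 50):
    print(w, [wait_cost(w, 10, p) for p in ("spin", "block", "spin_then_block")])
# 2 [2, 10, 2]
# 50 [50, 10, 20]
```

Spinning wins when waits are short relative to the blocking cost, which is what gang scheduling tries to guarantee. With `spin_limit` equal to the blocking cost, the hybrid never pays more than twice the cheaper of the two pure policies.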

3.1 Experimental environment

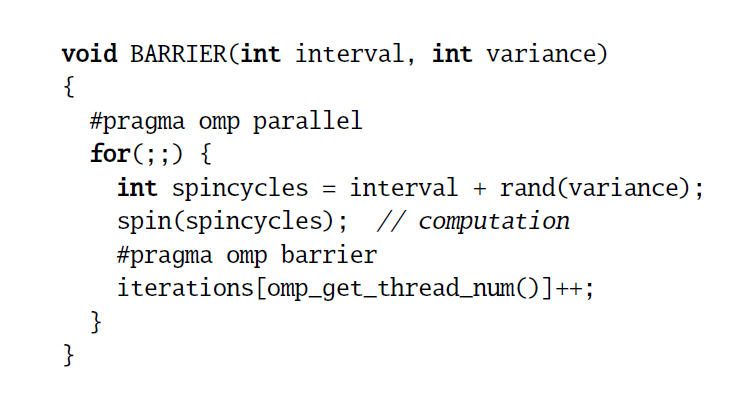

Workload

Each application runs a synchronizing loop with a fixed interval between synchronizations and a bounded maximum variance in per-thread work. In every experiment, the gang size matches the number of available cores.
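Here is a minimal model of one such loop. It assumes that each thread's work per iteration is the interval plus a uniformly random extra amount of up to the maximum variance (the exact distribution is not stated here), and that every thread has its own core. `barrier_loop_time` is a hypothetical helper, and the time units are arbitrary.

```python
import random


def barrier_loop_time(n_threads, iterations, interval, max_variance, seed=0):
    """Simulated runtime of a BARRIER-style loop on dedicated cores.

    Each iteration, every thread computes for `interval` plus a random extra
    in [0, max_variance], then waits at a barrier; the iteration ends when
    the slowest thread arrives.
    """
    rng = random.Random(seed)  # seeded for reproducible runs
    total = 0.0
    for _ in range(iterations):
        work = [interval + rng.uniform(0, max_variance) for _ in range(n_threads)]
        total += max(work)  # everyone waits for the slowest thread
    return total


print(barrier_loop_time(4, 10, interval=1.0, max_variance=0.0))  # 10.0
```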

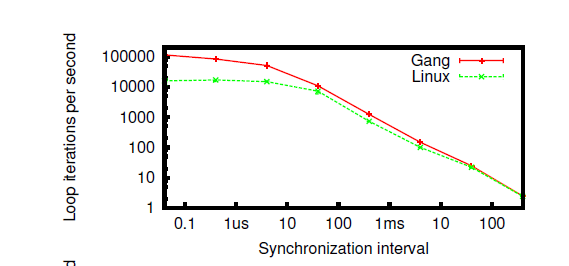

3.2 Fine-grained synchronization

We first run two BARRIER applications concurrently, because the condition that multiple tasks compete for the processors must be met.

One BARRIER application is run in a series of experiments with varying synchronization intervals. The other is a competing background application with its interval set to 0.

At a synchronization interval of 40 µs, the advantage of gang scheduling is already reduced. By the time the interval reaches 50 ms, there is almost no advantage left.

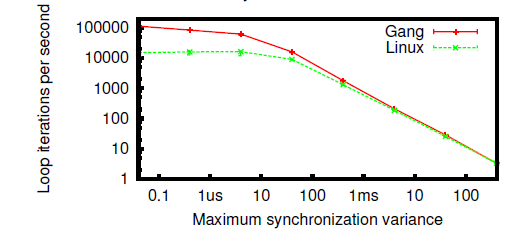

3.3 Good load-balancing of work

Work should be well balanced among the threads. If threads busy-wait for too long, they would do better to block and free the processor for other processes.

If the work among a group of threads is unbalanced, some threads wait too long, which reduces efficiency.
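The cost of imbalance within one barrier phase can be quantified with a short sketch. `phase_stats` is a hypothetical helper, and it assumes that every early thread spins until the slowest one arrives.

```python
def phase_stats(work):
    """Wall time, total spin-wait time, and efficiency of one barrier phase."""
    wall = max(work)                        # the phase ends with the slowest thread
    spin = sum(wall - w for w in work)      # CPU time burned by early arrivals
    efficiency = sum(work) / (wall * len(work))
    return wall, spin, efficiency


print(phase_stats([10, 10, 10, 10]))  # (10, 0, 1.0)
print(phase_stats([10, 10, 10, 40]))  # (40, 90, 0.4375)
```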

3.4 Latency-sensitive workload

If a workload cares only about throughput and not at all about response or completion time, gang scheduling performs worse than simple policies such as FIFO, which incur no thread-switching overhead at all.

Two experiments were performed, each running two BARRIER programs for a total of 10 seconds:

- Batch mode: the programs run one after the other, each for 5 s.

- Concurrent mode: both programs run at the same time.

Batch mode achieves higher throughput, 1.5% more than gang scheduling.

Time slicing trades this extra scheduling overhead for interactivity and responsiveness.
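The trade-off can be put into numbers with a toy round-robin model. It assumes a fixed context-switch cost per slice and `n_runnable` equally important jobs. `timeslice_tradeoff` is hypothetical. Batch mode corresponds to an unbounded slice, which gives zero switching overhead but the longest wait.

```python
def timeslice_tradeoff(time_slice, switch_cost, n_runnable):
    """Toy round-robin model: (fraction of time lost to switching, worst-case wait)."""
    overhead = switch_cost / (time_slice + switch_cost)
    # A job that just lost the CPU waits for every other job's slice and switch.
    worst_wait = (n_runnable - 1) * (time_slice + switch_cost)
    return overhead, worst_wait


for slice_us in (1_000, 10_000):
    print(slice_us, timeslice_tradeoff(slice_us, switch_cost=10, n_runnable=2))
```

Longer slices shrink the overhead fraction but stretch the worst-case wait, which is why throughput-only workloads favor batch execution.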

3.5 Multiple important parallel workloads

A necessary condition for gang scheduling to outperform priority-based scheduling is that several parallel programs matter. If only one program is important, giving it priority is enough to ensure that all of its threads run in parallel.

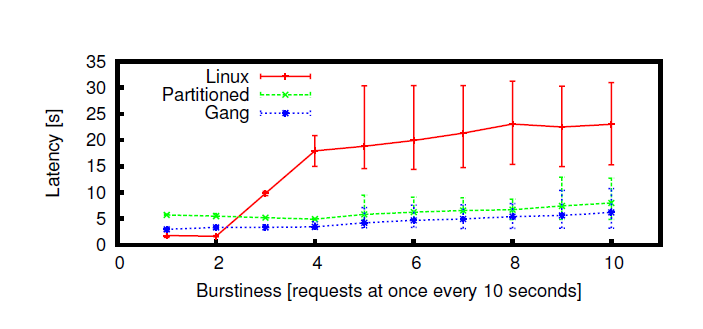

3.6 Bursty, adaptive workloads

Space-partitioned scheduling assumes that demand is static over long periods, so that a fixed set of hardware can be allocated to each application.

In fact, if the parallelism of the workload matches the cores in the system, space partitioning can outperform gang scheduling because it avoids context-switching overhead.

However, if many workloads are bursty, cores cannot be allocated to processes in advance, and space partitioning requires frequent re-partitioning.

Yet it is difficult to detect unused resources and reallocate them.

We therefore believe that bursty, adaptive workloads are a necessary condition for gang scheduling to pay off.
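The cost of a static partition under bursty demand can be sketched as follows. It assumes per-application core allocations and demands measured over one interval. `partition_waste` is a hypothetical helper.

```python
def partition_waste(allocation, demand):
    """Idle cores and unmet demand under a static space partition.

    allocation: cores statically assigned to each application
    demand:     cores each application could use in this interval
    Idle cores in one partition cannot serve another application
    until the system re-partitions.
    """
    pairs = list(zip(allocation, demand))
    idle = sum(max(a - d, 0) for a, d in pairs)
    unmet = sum(max(d - a, 0) for a, d in pairs)
    return idle, unmet


# App 0 bursts to 8 cores while app 1 is quiet; each was given 4.
print(partition_waste([4, 4], [8, 0]))  # (4, 4)
```

Idle cores in one partition and unmet demand in another coexist until the partition is redrawn. This is the reallocation problem that makes static partitioning a poor fit for bursty workloads.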

4 Future application workloads

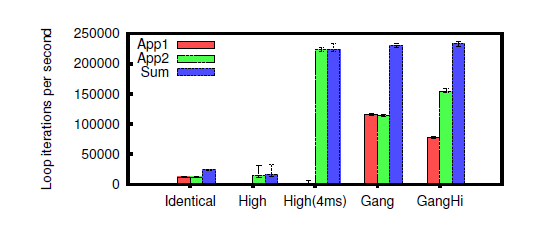

Gang scheduling is compared with the default Linux scheduler on a hypothetical interactive workload that satisfies the above conditions.

The workload is an interactive network monitoring application that employs data stream clustering to analyze incoming TCP streams. It looks for packets clustering around a specific attribute, which suggests an attack.