1. Graph structure

A graph network is a neural network defined on graph-structured data: ① each node in the graph consists of one neuron or a group of neurons; ② connections between nodes can be directed or undirected; ③ each node can receive information from its neighboring nodes as well as from itself.

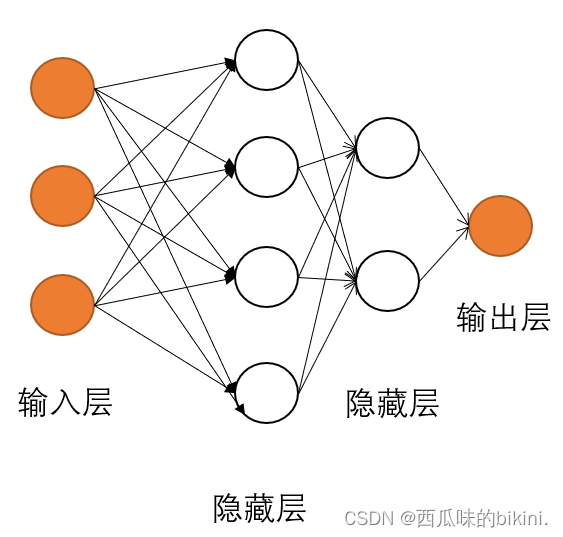
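The three properties above can be illustrated with one round of information exchange on a small graph. The sketch below makes two illustrative choices that the text does not prescribe: each node sums the states of the nodes that send it information, and it combines that sum with its own state through a `tanh` nonlinearity. The graph, the weights `self_weight` and `neighbor_weight`, and the helper names are hypothetical.

```python
import math

# Hypothetical toy graph as directed edges (source -> targets).
# An undirected connection is modeled as two directed edges.
edges = {
    "a": ["b", "c"],
    "b": ["a"],
    "c": ["a", "b"],
}

# Each node holds a small state vector (its "group of neurons").
state = {
    "a": [1.0, 0.0],
    "b": [0.0, 1.0],
    "c": [0.5, 0.5],
}

def incoming(node, edges):
    """Return the nodes that send information to `node`."""
    return [src for src, targets in edges.items() if node in targets]

def message_passing_step(state, edges, self_weight=0.5, neighbor_weight=0.5):
    """One synchronous update: each node mixes its own state with the
    elementwise sum of its in-neighbors' states, then applies tanh."""
    new_state = {}
    for node, h in state.items():
        agg = [0.0] * len(h)
        for src in incoming(node, edges):
            agg = [a + x for a, x in zip(agg, state[src])]
        new_state[node] = [
            math.tanh(self_weight * s + neighbor_weight * m)
            for s, m in zip(h, agg)
        ]
    return new_state

new_state = message_passing_step(state, edges)
for node, h in new_state.items():
    print(node, [round(x, 3) for x in h])
```

Repeating `message_passing_step` lets information travel further than one hop, since each round pulls in what the neighbors gathered in the previous round.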

2. Feedforward neural network

In a feedforward neural network, neurons are divided into groups according to the order in which they receive information. Each group can be regarded as a neural layer: the neurons in each layer receive the outputs of the previous layer and send their own outputs to the next layer. Information in the entire network propagates in one direction, with no backward flow of information. Examples include fully connected feedforward neural networks and convolutional neural networks. A feedforward network can be regarded as a function that realizes a complex mapping from the input space to the output space by composing simple nonlinear functions many times.

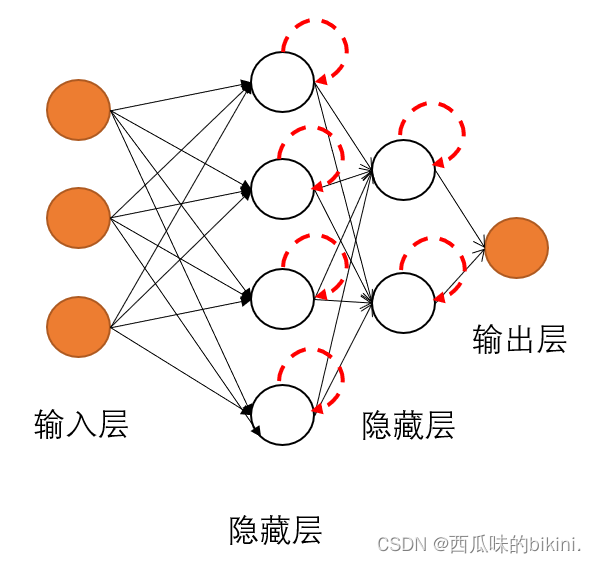
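A minimal sketch of a fully connected feedforward network in plain Python shows the one-directional flow: each layer reads only the previous layer's output, and the whole network is a composition of simple nonlinear functions. The layer sizes, the ReLU hidden activation, the linear output layer, and the random initialization are illustrative assumptions, not details from the text.

```python
import random

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: output neuron j computes
    activation(sum_i weights[j][i] * inputs[i] + biases[j])."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def init_layer(n_in, n_out, rng):
    """Random weights in [-1, 1] and zero biases (illustrative choice)."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def feedforward(x, layers):
    """Propagate x through the layers in order; information only moves
    forward, from each layer to the next."""
    h = x
    for weights, biases, activation in layers:
        h = dense_layer(h, weights, biases, activation)
    return h

rng = random.Random(0)
sizes = [3, 4, 2]  # hypothetical: 3 inputs, one hidden layer of 4, 2 outputs
layers = []
for i, (n_in, n_out) in enumerate(zip(sizes[:-1], sizes[1:])):
    w, b = init_layer(n_in, n_out, rng)
    act = relu if i < len(sizes) - 2 else identity  # linear output layer
    layers.append((w, b, act))

y = feedforward([1.0, -0.5, 2.0], layers)
print(y)
```

Because `feedforward` is just repeated application of `dense_layer`, the network output is literally `f_L(...f_2(f_1(x)))`, which is the "composition of simple nonlinear functions" described above.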

3. Feedback neural network

Neurons in a feedback neural network can receive signals not only from other neurons but also from their own feedback. Compared with a feedforward neural network, neurons in a feedback network have memory and take different states at different times. Information in a feedback network can propagate in one direction or in both directions; examples include recurrent neural networks, Hopfield networks, and Boltzmann machines. To further enhance the memory capacity of such networks, external memory units with read-write mechanisms can be used to store some intermediate states of the network; these are called memory-augmented networks, for example neural Turing machines.
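The memory effect of self-feedback can be seen with a single recurrent neuron. In this minimal sketch, assuming a simple update `h_t = tanh(w_self * h_{t-1} + w_in * x_t + bias)` with hypothetical weight values, the neuron's state at each step depends on both the current input and its own previous state. Two sequences that end with identical inputs therefore leave the neuron in different states.

```python
import math

def rnn_step(h_prev, x, w_self, w_in, bias):
    """One time step of a single recurrent neuron: the new state depends on
    the current input x and on the neuron's own previous state h_prev."""
    return math.tanh(w_self * h_prev + w_in * x + bias)

def run_rnn(inputs, w_self=0.9, w_in=1.0, bias=0.0, h0=0.0):
    """Feed a sequence through the neuron and return the state after each step."""
    states = []
    h = h0
    for x in inputs:
        h = rnn_step(h, x, w_self, w_in, bias)
        states.append(h)
    return states

# Both sequences end with the same inputs (0.0, 0.0), but the first input
# differs, and the feedback term carries that difference forward in time.
seq_a = [1.0, 0.0, 0.0]
seq_b = [-1.0, 0.0, 0.0]
print(run_rnn(seq_a))
print(run_rnn(seq_b))
```

Setting `w_self=0.0` removes the feedback connection; the neuron then behaves like a feedforward unit, and its state after a zero input is always zero regardless of history.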