Evolutionary computation (EC) is a family of metaheuristic algorithms inspired by natural evolution and swarm intelligence behaviors.

EC has developed rapidly because it is effective and efficient at solving optimization problems. EC algorithms fall into two main branches:

1. Evolutionary Algorithm (EA)

Differential evolution (DE)

Genetic Algorithm

2. Swarm intelligence

Particle swarm optimization (PSO)

Ant colony optimization

An EC algorithm generates a large amount of data during evolution, and this data explicitly or implicitly reveals the evolutionary behavior of individuals. For example, in DE, the difference vectors that led to successful updates reveal each individual's successful behavior. In PSO, successful velocities can steer particles toward the global optimum.
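To make the DE example concrete, the sketch below runs one generation of the classic DE/rand/1/bin scheme and keeps the scaled difference vectors F * (x_r2 - x_r3) whose trial vectors beat their parents. It is a minimal illustration assuming minimization; the names `de_generation` and `sphere` and the settings F = 0.5, CR = 0.9 are hypothetical choices, not taken from the paper.

```python
import random


def sphere(x):
    """Toy objective to minimize: sum of squares."""
    return sum(v * v for v in x)


def de_generation(pop, f, F=0.5, CR=0.9, rng=random):
    """Run one DE/rand/1/bin generation (minimization).

    Returns the next population and the scaled difference vectors
    F * (x_r2 - x_r3) whose trial vectors were strictly better than their parents.
    """
    n, dim = len(pop), len(pop[0])
    next_pop, successful_diffs = [], []
    for i, target in enumerate(pop):
        # Three distinct donors, all different from the target (needs n >= 4).
        r1, r2, r3 = rng.sample([j for j in range(n) if j != i], 3)
        diff = [F * (a - b) for a, b in zip(pop[r2], pop[r3])]
        mutant = [base + d for base, d in zip(pop[r1], diff)]
        # Binomial crossover; index j_rand always takes the mutant gene.
        j_rand = rng.randrange(dim)
        trial = [m if (j == j_rand or rng.random() < CR) else t
                 for j, (m, t) in enumerate(zip(mutant, target))]
        # Greedy selection; a strictly better trial counts as a success.
        if f(trial) < f(target):
            next_pop.append(trial)
            successful_diffs.append(diff)
        else:
            next_pop.append(target)
    return next_pop, successful_diffs


rng = random.Random(42)
pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(10)]
best_before = min(sphere(x) for x in pop)
pop, diffs = de_generation(pop, sphere, rng=rng)
print(len(diffs), "successful difference vectors")
```

Because selection only ever replaces a parent with a better trial, the best fitness in the population can never get worse from one generation to the next.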

Here, data means any information that reveals the evolutionary behavior of the population during evolution, such as individuals' positions, movement directions, and fitness values.

A successful experience pairs a position with the direction of a successful evolution from that position. For example, suppose an individual at position P1 with fitness F1 moves to position P2 with fitness F2. If F2 is better than F1, i.e., the individual evolves successfully, then P1 paired with the successful direction D = P2 - P1 is recorded as a successful experience (P1, D).
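The definition of a successful experience translates directly into code. This minimal sketch assumes minimization (so "F2 better than F1" means F2 < F1) and a plain Python list as the experience store; `record_experience` is a hypothetical helper name.

```python
def record_experience(p1, f1, p2, f2, experiences):
    """Record (P1, D) with D = P2 - P1 if the move from P1 to P2 succeeded.

    Assumes minimization: the move succeeds when f2 < f1.
    Returns True if an experience was appended.
    """
    if f2 < f1:
        direction = [b - a for a, b in zip(p1, p2)]
        experiences.append((list(p1), direction))
        return True
    return False


Q = []
record_experience([1.0, 2.0], 5.0, [0.5, 1.0], 1.25, Q)   # improved: recorded
record_experience([0.5, 1.0], 1.25, [3.0, 3.0], 18.0, Q)  # worse: ignored
print(Q)  # → [([1.0, 2.0], [-0.5, -1.0])]
```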

Knowledge is defined as a rule for deriving the direction of successful evolution from successful experiences. Successful experiences are therefore a special kind of data generated during evolutionary computation, which can be mined for knowledge that then guides the evolution of EC algorithms.

The proposed knowledge learning (KL) framework works in two steps:

1. Learning from experiences to obtain knowledge: the KL framework maintains a knowledge library model (KLM) based on a feed-forward neural network (FNN) to store knowledge. During evolution, the KLM collects the successful experiences of all individuals and learns from them to obtain general knowledge about the relationship between an individual's position and its successful direction.

2. Utilizing knowledge to guide evolution: individuals query the KLM for guidance, and the KLM gives each individual a suitable evolutionary direction based on the learned knowledge and the individual's current position.

The features and contributions of the proposed KL framework are summarized as follows:

1) The paper proposes a novel KL framework that mines the successful experiences generated during evolution to obtain knowledge, and uses that knowledge to guide each individual according to its position. First, because the knowledge is mined from a large number of successful experiences, it is more general and more effective at guiding evolution. Second, the framework provides each individual with an effective evolutionary direction, since that direction is computed from both the knowledge and the individual's current state.

2) The KL framework is general and can easily be embedded into many EC algorithms. To show clearly how, we combine it with two representative EC algorithms, DE and PSO, yielding KL-based DE (KLDE) and KL-based PSO (KLPSO). According to the experimental results, both are more effective and efficient than their classical versions.

3) To further evaluate the KL framework, we combine it with several state-of-the-art EC algorithms, including competition-winning ones, and show that the KL-based versions outperform the originals. Experimental results on benchmark functions and real-world optimization problems show that the KL framework significantly improves the performance of these EC algorithms.

Knowledge Learning-Based Evolutionary Computation (KLEC)

A. Architecture of Knowledge Learning (KL)

B. Acquiring knowledge by learning from experience

1. Knowledge library model (KLM)

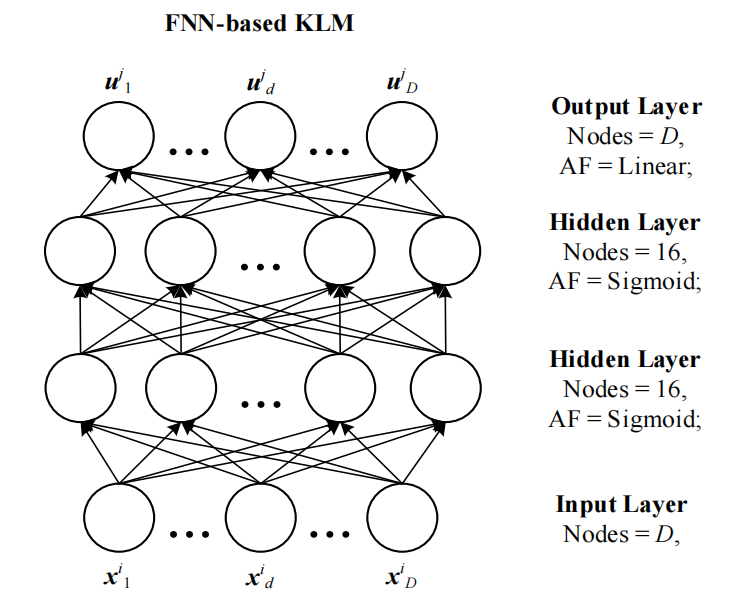

The KLM should be able to store a large amount of knowledge and retrieve it quickly. The KLM used in this article is an FNN trained on data pairs of the form (an individual's position, the successful direction corresponding to that position).
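A minimal sketch of such an FNN, written in plain Python so it runs without extra packages. It maps a D-dimensional position to a D-dimensional direction; the single tanh hidden layer, its width of 16, and the Gaussian weight initialization are assumptions for illustration, not the architecture reported in the paper.

```python
import math
import random


class KLM:
    """Hypothetical FNN knowledge library model: position (dim D) -> direction (dim D)."""

    def __init__(self, dim, hidden=16, seed=0):
        rng = random.Random(seed)
        # Small random weights scaled by fan-in; zero biases.
        self.w1 = [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)]
                   for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0, 1 / math.sqrt(hidden)) for _ in range(hidden)]
                   for _ in range(dim)]
        self.b2 = [0.0] * dim

    def forward(self, x):
        """Return (hidden activations, predicted direction) for position x."""
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b
             for row, b in zip(self.w2, self.b2)]
        return h, y

    def predict(self, x):
        """Return only the predicted direction for position x."""
        return self.forward(x)[1]


klm = KLM(dim=3)
print(klm.predict([0.1, -0.2, 0.3]))  # an untrained 3-D direction
```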

2. Learning from experience to update the KLM

Before the KLM can be updated, successful experiences must first be collected. To do so, the KL framework keeps a list Q of successful experiences. In each generation, whenever an individual's fitness improves, the resulting successful experience is added to Q. The KLM does not learn an experience immediately; it waits until all successful experiences of the current generation have been collected. In other words, Q is a temporary buffer of experiences waiting to be learned by the KLM.
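The collect-then-learn order can be sketched as one generation loop. This is a hypothetical outline assuming minimization: `propose` stands in for whatever operator the host EC algorithm uses to generate a candidate, and `learn` stands in for the KLM update; neither name comes from the paper.

```python
def run_generation(population, fitness, propose, learn):
    """One generation of the collect-then-learn scheme (minimization).

    Returns the next population and the number of successful experiences.
    """
    Q = []  # experience list, local to this generation
    next_population = []
    for x in population:
        candidate = propose(x)
        if fitness(candidate) < fitness(x):
            # Success: buffer (P1, D) with D = P2 - P1, but do NOT train yet.
            Q.append((x, [c - xi for c, xi in zip(candidate, x)]))
            next_population.append(candidate)
        else:
            next_population.append(x)
    # Every experience of this generation is now in Q; learn them as one batch.
    if Q:
        learn(Q)
    return next_population, len(Q)


def sphere(x):
    return sum(v * v for v in x)


def halve(x):
    """Hypothetical proposal operator: move halfway toward the origin."""
    return [0.5 * v for v in x]


batches = []
pop, n_success = run_generation([[2.0], [0.0]], sphere, halve, batches.append)
print(pop, n_success, batches)  # → [[1.0], [0.0]] 1 [[([2.0], [-1.0])]]
```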

Next, the KLM learns from the experiences in Q to gain knowledge about the relationship between positions and successful directions. Specifically, the position of each experience in Q is fed to the KLM as input, and the corresponding direction is used as the expected output to train the FNN-based KLM, with backpropagation adjusting its weights. After the KLM has learned from them, all experiences in Q are discarded (i.e., Q is cleared at the end of every generation) to make room for the next generation's experiences. Note that the KLM is not re-initialized in each generation; it continues training from the previous generation's weights on the newly collected experiences. The KLM therefore accumulates knowledge from all the successful experiences generated over the whole run. If Q is empty, i.e., no individual evolves successfully, the KLM is not updated in that generation.
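A self-contained sketch of this update: a small FNN (one tanh hidden layer, an assumed architecture) trained by stochastic gradient descent with backpropagation on the squared error between its output and the recorded direction. The learning rate, epoch count, and the names `KLM`, `train_step`, and `update_klm` are hypothetical. The weights persist across calls, Q is cleared after learning, and an empty Q leaves the model untouched, matching the rules described above.

```python
import math
import random


class KLM:
    """Hypothetical FNN-based KLM (one tanh hidden layer) trained by backpropagation."""

    def __init__(self, dim, hidden=16, lr=0.05, seed=0):
        rng = random.Random(seed)
        self.lr = lr
        self.w1 = [[rng.gauss(0, 1 / math.sqrt(dim)) for _ in range(dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [[rng.gauss(0, 1 / math.sqrt(hidden)) for _ in range(hidden)] for _ in range(dim)]
        self.b2 = [0.0] * dim

    def forward(self, x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x, target):
        """One SGD step on 0.5 * ||y - target||^2; returns the loss before the step."""
        h, y = self.forward(x)
        dy = [yk - tk for yk, tk in zip(y, target)]  # dL/dy
        # Backpropagate to the hidden layer before w2 is modified (tanh' = 1 - h^2).
        dh = [(1 - h[j] ** 2) * sum(dy[k] * self.w2[k][j] for k in range(len(dy)))
              for j in range(len(h))]
        for k, g in enumerate(dy):
            for j in range(len(h)):
                self.w2[k][j] -= self.lr * g * h[j]
            self.b2[k] -= self.lr * g
        for j, g in enumerate(dh):
            for i in range(len(x)):
                self.w1[j][i] -= self.lr * g * x[i]
            self.b1[j] -= self.lr * g
        return 0.5 * sum(d * d for d in dy)


def update_klm(klm, Q, epochs=1):
    """Train on this generation's experiences (position, direction), then clear Q.

    Weights carry over from earlier calls (no re-initialization).
    Returns the mean loss of the last epoch, or None if Q was empty.
    """
    if not Q:
        return None  # no successful experience: KLM is not updated
    loss = None
    for _ in range(epochs):
        loss = sum(klm.train_step(p, d) for p, d in Q) / len(Q)
    Q.clear()
    return loss


klm = KLM(dim=2, seed=1)
data = [([0.5, -0.5], [0.1, 0.2]), ([-0.3, 0.8], [-0.2, 0.05])]
first_loss = update_klm(klm, list(data))
for _ in range(200):
    last_loss = update_klm(klm, list(data))  # same experiences, for demonstration only
print(first_loss, last_loss)
```

Calling `update_klm` once per generation, with a freshly collected Q each time, lets the model keep accumulating knowledge over the whole run.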

C. Using knowledge to guide evolution

Using knowledge to guide evolution aims to give each individual an appropriate evolutionary direction based on its current position and the learned knowledge. Since the KLM has learned the mapping from position to direction from historical successful experiences, we only need to input an individual's current position into the KLM, and the KLM's output is the queried evolutionary direction for that individual. In this way, the KLM provides each individual with an appropriate direction according to its current location. For clarity, Figure 4 of the paper illustrates the relationship between successful experiences and knowledge in KLEC, as well as KLEC's knowledge learning and utilization process.
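Querying the KLM is just a forward pass per individual. In this sketch, `predict` is any callable that maps a position to a direction (for example, a trained KLM's forward pass), and the individual is moved to x + d, which mirrors the definition D = P2 - P1. Both the helper name and this move rule are assumptions; how KLDE or KLPSO blends the queried direction into their own operators is not described in this section.

```python
def knowledge_guided_positions(population, predict):
    """Query a KLM-like predictor for every individual and apply its direction.

    Assumption: the new position is x + d, mirroring D = P2 - P1.
    """
    guided = []
    for x in population:
        d = predict(x)  # queried evolutionary direction for this individual
        guided.append([xi + di for xi, di in zip(x, d)])
    return guided


def toy_predict(x):
    """Stand-in for a trained KLM: a hand-written rule pointing halfway to the origin."""
    return [-0.5 * v for v in x]


print(knowledge_guided_positions([[2.0, -4.0], [1.0, 1.0]], toy_predict))
# → [[1.0, -2.0], [0.5, 0.5]]
```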

D. KLDE (Knowledge Learning-Based Differential Evolution)

The paper uses DE as an example to show how the KL framework is combined with an EC algorithm:

A note from the reader:

I feel the whole paper lacks substance: it is not clear to me how the knowledge is actually used, and no code is provided...